How to Optimize Your GPU for Ethereum Mining (Updated)

Tuning your card for the best performance and efficiency is important

Ethereum GPU mining remains profitable, at least until it shifts to proof of stake some time this year (we hope). But there's more to it than just firing up the software and letting it run in the background, especially if you've managed to procure one of the best graphics cards or best mining GPUs. Most of the graphics cards in our GPU benchmarks hierarchy can earn money right now by mining, depending on how much you pay for power. However, you'll want to tune your graphics card with the optimal settings, and the brand and card model can have a big impact on overall performance and efficiency.

First, let's note that we're not trying to actively encourage anyone to start a mining farm with GPUs. If you want to know how to mine Ethereum, we cover that elsewhere, but the "how" is quite different from the "why." In fact, based on past personal experience that some of us have running consumer graphics cards 24/7, it is absolutely possible to burn out the fans, VRMs, or other elements on your card. Note also that we do periodically 'refresh' this article, but the original text was from early 2021. Mining at this stage is far less profitable.

At the same time, we know there's a lot of interest in the topic, and we wanted to shed some light on the actual power consumption — measured using our Powenetics equipment — that the various GPUs use, as well as the real-world hashrates we achieved. If you've pulled up data using a mining profitability calculator, our figures indicate there's a lot of variation between power and hash rates, depending on your settings and even your particular card. Don't be surprised if you don't reach the level of performance others are showing.

We'll start with the latest generation of AMD and Nvidia GPUs, but we also have results for most previous generation GPUs. Nvidia and its partners now have LHR (Lite Hash Rate) Ampere cards that perform about half as fast as the non-LHR cards, though the newer NBminer releases can get that into the 70% range (with the appropriate drivers — older is better, typically). Nvidia has managed to undo some of those gains with updated drivers, however, so newer cards like the RTX 3050 might not be all that great. This is all likely setting the stage for Nvidia's next GPUs, Ada Lovelace, which we expect to see in the latter part of 2022.

Nvidia Ampere and AMD RDNA2 Mining Performance

There are a few things you should know before getting started. First, Ethereum GPU mining requires more than 4GB of VRAM, so if you're still hanging on to an RX 570 4GB, it won't work — and neither will the new Radeon RX 6500 XT. Second, there are a lot of different software packages for mining, but we're taking the easy route and using NiceHash Miner. It includes support for the most popular mining solutions, and it will even benchmark your card to determine which one works best. Even though some algorithms may perform better than others, we're going to focus exclusively on Ethereum hashing for now.

We've used our standard GPU testbed for these tests, running a single GPU. This isn't necessarily an optimal miner PC configuration, but it will suffice and is a closer representation of what most of our readers are probably using. You don't need a high-end CPU, motherboard, or memory for mining purposes, and many larger installations will use Pentium CPUs and B360 chipset motherboards with more PCIe slots.

The most important factors for a mining PC are power and cooling, as they both directly impact overall profitability. If you can keep your GPU and other components cool, they'll last longer and not break down as often. Meanwhile, power can be very expensive for larger mining setups, and poor efficiency PSUs (power supply units) will generate more heat and use more electricity.

We've run these benchmarks using NiceHash Miner, looking at actual realtime hash rates rather than the results of its built-in benchmark. We tested each graphics card in stock mode, and then we also attempted to tune performance to improve overall efficiency — and ideally keep temperatures and fan speeds at reasonable levels. We let the mining run for at least 15 minutes before checking performance, power, etc., as often things will slow down once the graphics card starts to heat up.

It's also important to note that we're reporting raw graphics card power for the entire card, but we don't account for the power consumption of the rest of the PC or power supply inefficiencies. Using an 80 Plus Platinum PSU, we should be running at around 92% efficiency, and wall outlet power consumption is typically about 50-80W higher than what we show in the charts. About 40W of power goes to the CPU, motherboard, and other components, while the remainder depends on how much power the GPU uses, including PSU inefficiencies.
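As a rough sanity check on those numbers, here's a minimal sketch of how wall power could be estimated from our card-only measurements. It treats the figures above (about 40W for the rest of the system and roughly 92% PSU efficiency) as flat constants, which is a simplification since real PSU efficiency varies with load. The function name and defaults are ours, purely for illustration.

```python
def estimate_wall_power(gpu_watts, rest_of_system_watts=40.0, psu_efficiency=0.92):
    """Estimate power drawn at the wall outlet for a single-GPU mining PC.

    Assumes a constant PSU efficiency and a fixed draw for the CPU,
    motherboard, and other components (both taken from the text above).
    """
    dc_load = gpu_watts + rest_of_system_watts  # power the PSU must deliver
    return dc_load / psu_efficiency             # PSU losses push wall draw higher


# Card-only power figures from our tuned results (RTX 3060 Ti FE and RTX 3090 FE).
for gpu_watts in (116, 279):
    wall = estimate_wall_power(gpu_watts)
    print(f"{gpu_watts}W card -> about {wall:.0f}W at the wall "
          f"(+{wall - gpu_watts:.0f}W)")
```

With those two cards, the estimate lands at roughly 54W and 68W above the charted figures, which sits inside the 50-80W range mentioned above.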

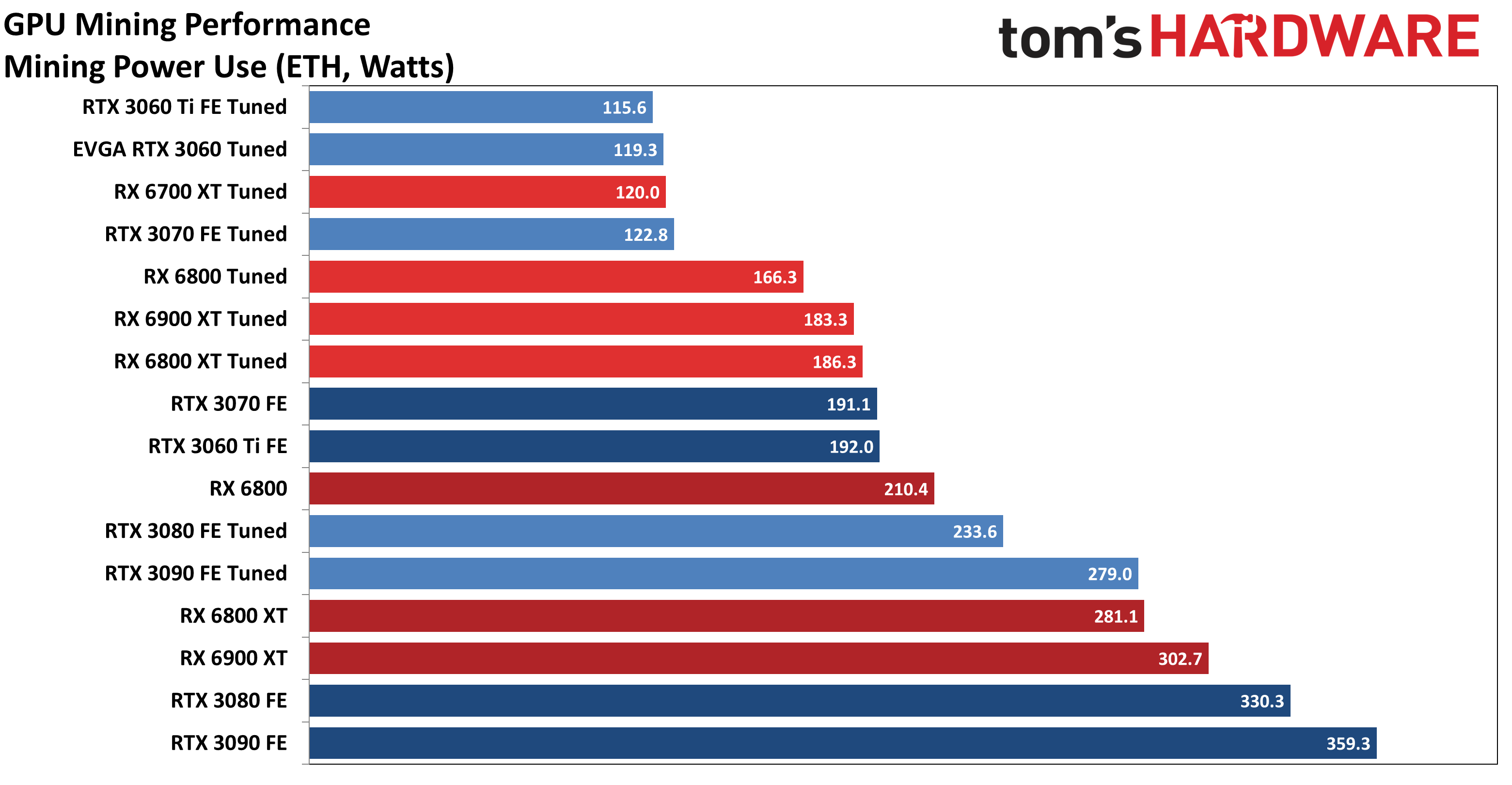

There's a lot to discuss with these charts. Specifically, what do we mean by "tuned" performance? The answer: It varies, often massively, by GPU. (After the initial article, we've updated and added more GPUs, but we've skipped the "stock" testing and only included our tuned results.)

Let's talk about the big picture quickly before we get into the details. The fastest GPUs for Ethereum mining right now are the RTX 3080 and RTX 3090, by quite a large margin. Our baseline RTX 3080 FE measurement got 85MH/s, and the baseline 3090 FE got 105MH/s. Additional tuning improved the 3080 performance to 93MH/s, while the 3090 FE limited us (on memory temps) to around 106MH/s. It's critical to note that both the 3080 and 3090 Founders Edition cards run very hot on the GDDR6X, which limits performance. Modding those with better thermal pads, or buying a third party card, can boost performance and lower memory temperatures.

Meanwhile, the RTX 3060 Ti and 3070 cards started at close to 52MH/s — even though the 3070 is theoretically faster. That's because Ethereum hashing depends quite heavily on memory bandwidth. Overclocking the VRAM on those GPUs got performance up to around 60MH/s. AMD's RX 6800, 6800 XT, and 6900 XT cards started at close to 60MH/s, and with tuning, we achieved 65MH/s — there wasn't much difference between the three AMD GPUs, mostly because they're all using the same 16GB of 16Gbps GDDR6 memory.

Finally, the newer RTX 3060 and RX 6700 XT both have 12GB of GDDR6, with a 192-bit memory bus width, effectively cutting bandwidth by 25% relative to the 256-bit cards. This in turn limits performance to around 47–48 MH/s after tuning. Without the memory overclock, performance drops to around 40MH/s.
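To see where that 25% figure comes from, here's a small sketch of the standard peak bandwidth calculation (bus width times per-pin data rate, divided by eight bits per byte). We're assuming the same 16Gbps per-pin rate on both buses to isolate the effect of bus width; individual cards may ship with different memory speeds, so treat the numbers as illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


wide = memory_bandwidth_gbs(256, 16.0)    # 256-bit bus at 16Gbps
narrow = memory_bandwidth_gbs(192, 16.0)  # 192-bit bus, same per-pin rate (assumed)
reduction = (1 - narrow / wide) * 100

print(f"256-bit: {wide:.0f} GB/s, 192-bit: {narrow:.0f} GB/s, {reduction:.0f}% less")
```

Since Ethereum hashing leans so heavily on memory bandwidth, a quarter less bandwidth translates fairly directly into a lower hash rate.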

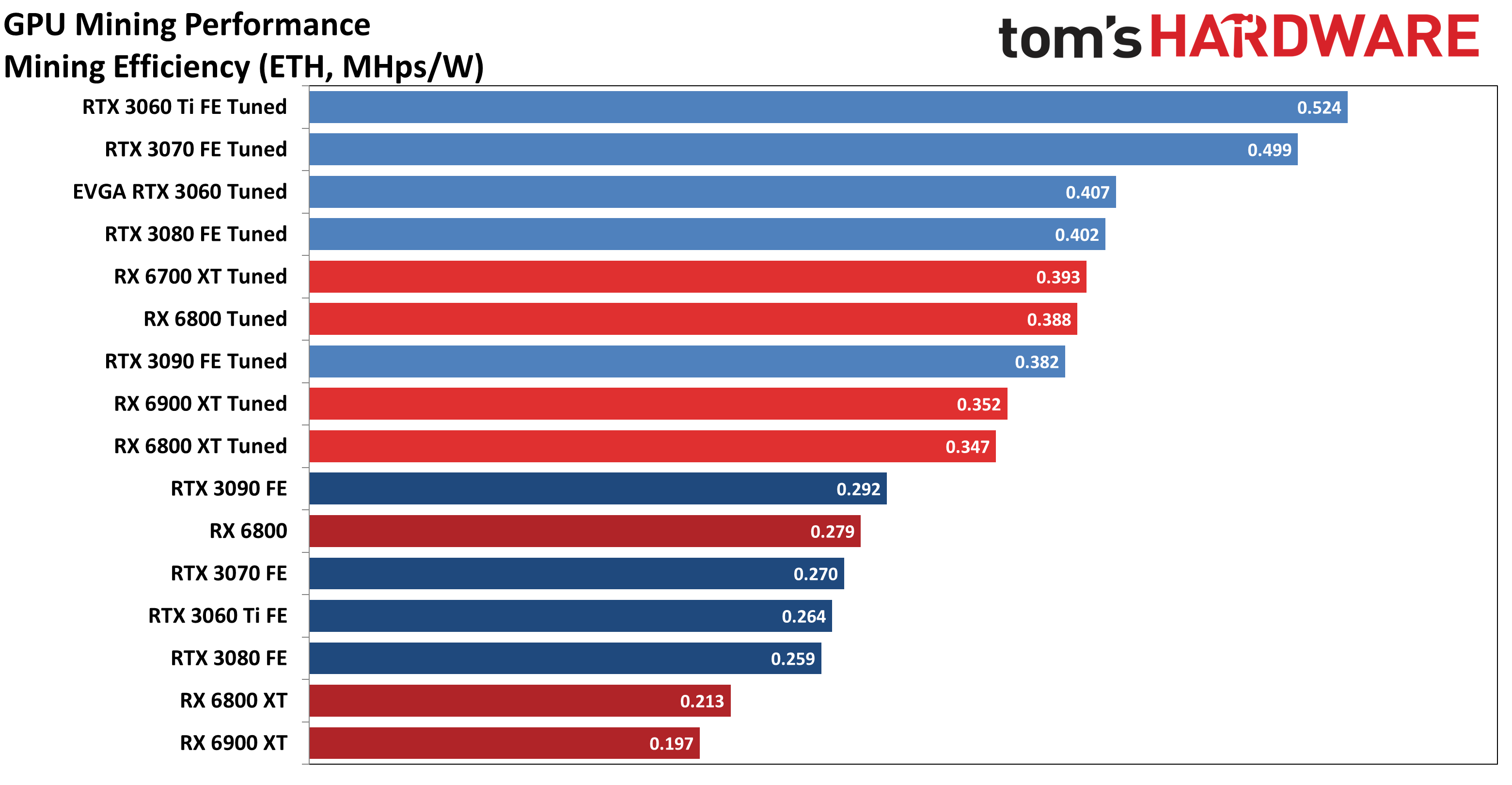

You can check out the power chart, but the overall efficiency chart is more important than raw power use. Here, the lower power RTX 3060 Ti and 3070 shoot to the top, and then there's a moderate step down to the RTX 3060, 3080, RX 6700 XT, RX 6800, and so on. Most of the cards are pretty close in terms of overall efficiency for Ethereum mining, though the additional GPU cores on the 6800 XT and 6900 XT ended up dropping efficiency a bit — more tuning might improve the results, particularly if you're willing to sacrifice a bit of performance to reduce the power use.

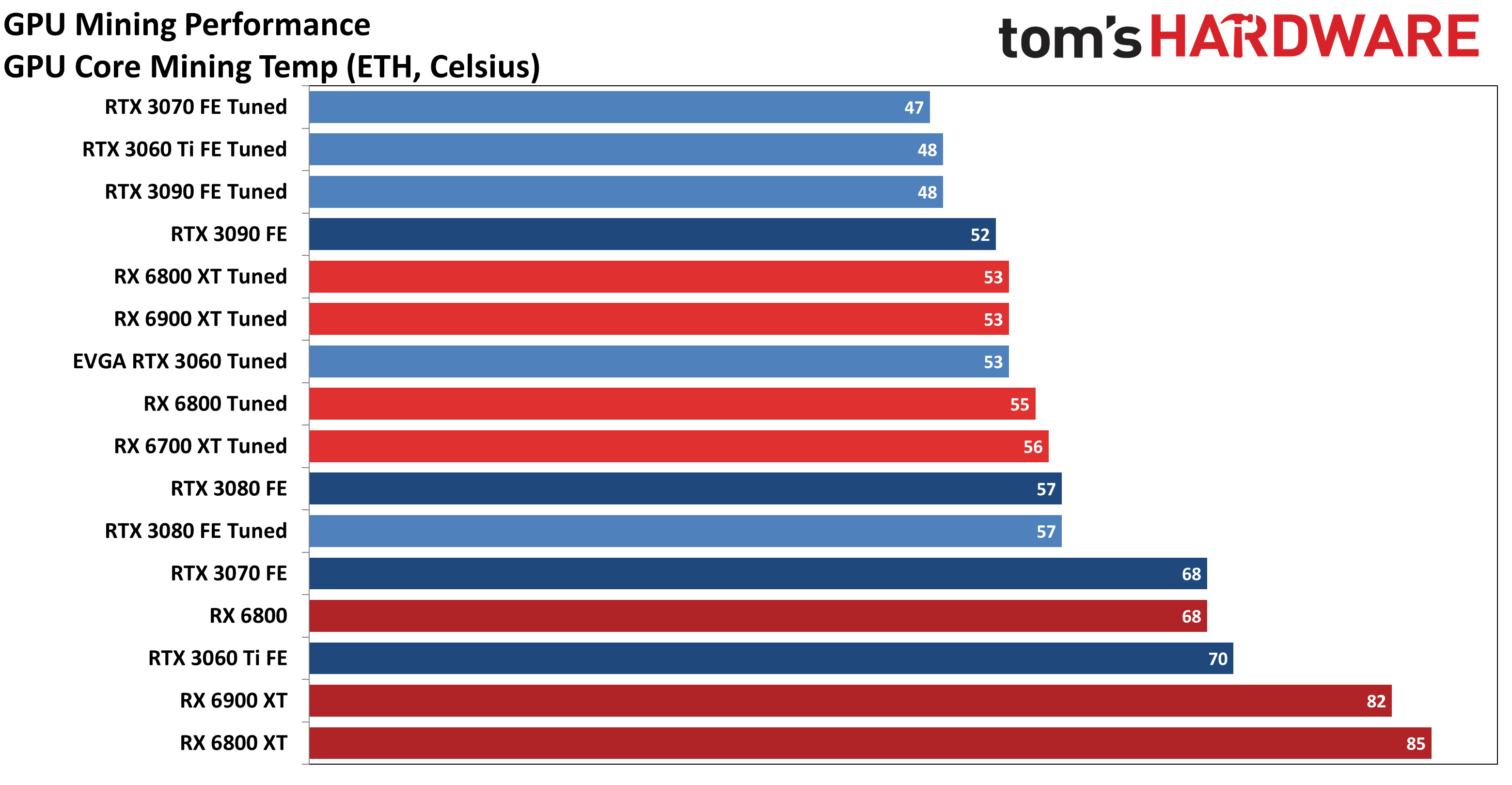

Finally, we have temperatures. These are GPU core temperatures, but they're actually not the critical factor on many of the cards. AMD's cards ran hot at stock settings, but all of the cards benefit greatly from tuning. More importantly, while we couldn't get GDDR6 temperatures on the 3060 Ti and 3070, we did get VRAM temps on the 3080 and 3090 as well as the AMD cards. At stock, the 3080 and 3090 Founders Edition cards both hit 108-110C on the GDDR6X, at which point the GPU fans would kick up to 100% (or nearly so). The cards settled in at 106C, with GPU clocks fluctuating quite a bit. AMD's RX 6000 cards also peaked at around 96C on their GDDR6 at stock, but tuning dropped VRAM temps all the way down to around 68-70C. This brings us to the main area of interest.

How to Tune Your Graphics Card's Ethereum Mining Performance

Let's start by noting that every card model is different — and even cards of the same model may vary in performance characteristics. For the 3080 and 3090 cards with GDDR6X memory, keeping that cool is critical. We've seen examples of cards (specifically, the EVGA RTX 3090 FTW3) that can run at up to 125MH/s, while the memory sits at around 85C. That's because EVGA appears to have put a lot of effort into cooling the memory. Without altering the cards, the Nvidia 3080/3090 Founders Editions let the memory get very hot while mining, which can dramatically hinder performance and/or reduce the card's lifespan. Let's take each card in turn.

GeForce RTX 3090 Founders Edition: While technically the fastest card for mining that we tested, we really don't like the idea of running this one 24/7 without hardware modifications or serious tuning. At stock, the fans end up running at 100% to try to keep the GDDR6X below 110C, and that's not good.

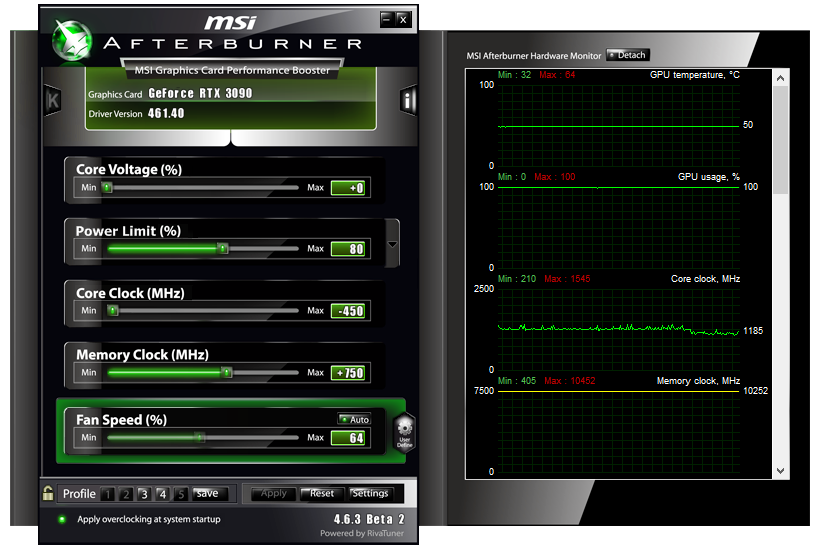

For our purposes, we tuned the card by dropping the GPU core to the maximum allowed -502MHz, setting the memory clock to +250MHz, and putting the power limit at 77%. That gave us a GDDR6X temperature of 104C, which is still higher than we'd like, and performance remained at around 106MH/s. Power use also dropped to 279W, which is quite good considering the hash rate.

Alternatively, you can go for broke and max out fan speed, set the power to 80%, drop the GPU clocks by 250-500MHz, and boost the VRAM clocks by 750-1000MHz. If you don't mod the card to improve GDDR6X cooling, you'll typically end up at 106-110C (depending on your card, case, cooling, and other factors), and the maxed out fan speed is not going to be good for fan longevity. Consider yourself warned.

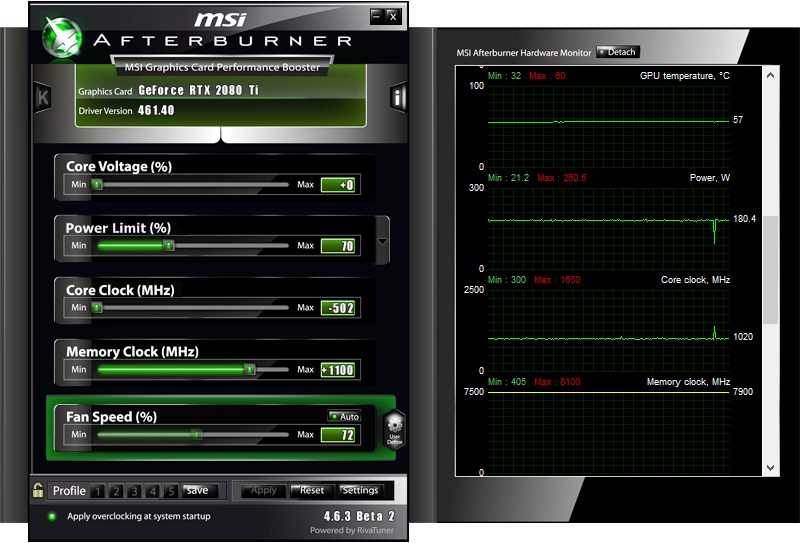

GeForce RTX 3080 Founders Edition: Tuning this card was very similar to the 3090 FE. It doesn't like stock settings, as the GDDR6X gets very toasty. We again dropped the GPU core by the maximum allowed (-502MHz), set the memory clock to +750MHz, and put the power limit at 70%. That resulted in the same GDDR6X temperature of 104C as the 3090 FE, and performance was only slightly slower at 93-94MH/s.

Again, maxing out fan speeds and memory clocks while dropping the GPU core clocks and power limit are key to improving overall hash rates. Modding the card and replacing the VRAM thermal pads with thicker/better pads is possible and will help cooling and performance. We'd prefer using an RTX 3080 with better GDDR6X cooling, however. Which brings us to a card that we've since removed from the charts.

The Colorful RTX 3080 Vulcan is an example of a 3080 model with better VRAM cooling than Nvidia's reference card, so the memory didn't get quite as hot. However, we still found we achieved the best result by dropping the power limit to 80-90% and then setting the GPU core clocks to the minimum possible value in MSI Afterburner (-502MHz). Then we overclocked the memory by 750MHz base clock, which gave a final speed of 20Gbps (the Ampere cards run at 0.5Gbps below their rated memory speed when mining). That yielded similar hash rates of 93MH/s, while fan speed, GPU temperature, and power consumption all dropped. Most importantly, at (relatively) the same performance as the 3080 FE, GDDR6X temperatures stabilized at 100C. It's not ideal, but at these temperatures a 4C difference can be significant.

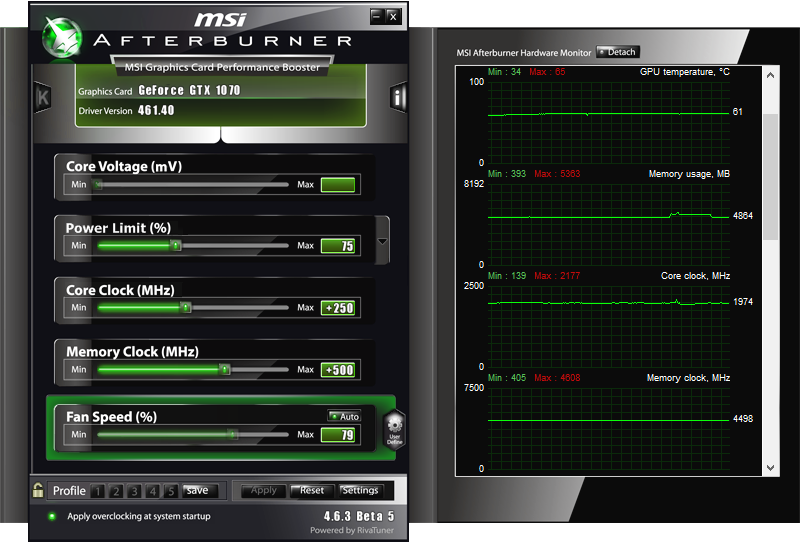

The RTX 3070 and RTX 3060 Ti have the same 8GB of 14Gbps GDDR6, and as we'll see with the AMD GPUs, that appears to be the limiting factor. Our initial results were poor, as these were the first cards we tested, but we've revisited the settings after looking at the RX 6000 series.

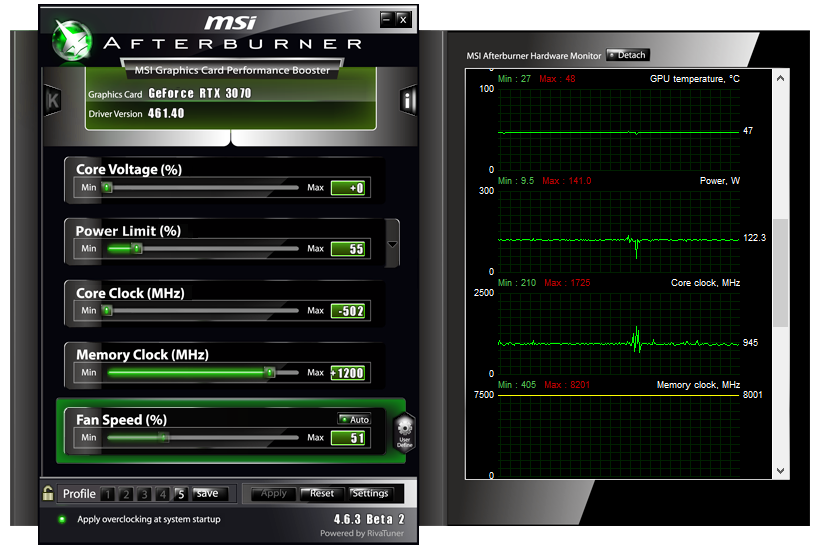

GeForce RTX 3070 Founders Edition: The main thing for improving performance on the 3070 was to boost the GDDR6 clock. We were able to add 1200MHz, giving a 16.4Gbps effective speed in theory, but the memory actually ran at 16Gbps (vs. 13.6Gbps at stock settings). Along with the boost to memory clocks, we dropped the GPU clock down to the maximum -502MHz in Afterburner and set the power limit at 55%. That resulted in actual GPU clocks of 960MHz on average. You'd think that wouldn't be sufficient, but boosting the GPU clocks up to 1.9GHz resulted in the same performance while substantially increasing the amount of power used. At the 55% power limit, meanwhile, the 3070 is second only to its little sibling in overall efficiency.

GeForce RTX 3060 Ti Founders Edition: As with the 3070, we bumped the memory speed up as the main change to improve performance. We went from stock to +1200MHz (compared to +750, which is about as far as we could go for gaming overclocks), giving a maximum speed of 16.4Gbps, with a 400MHz negative offset for GPU compute workloads. Other settings were similar: -502MHz GPU clock, 55% power limit, and 50% fan speed. Performance was very close to the 3070 while using less power, making this the overall winner in efficiency.
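The memory offsets above can be turned into effective speeds with some simple arithmetic. This sketch assumes each 1MHz of offset in MSI Afterburner adds 2Mbps per pin, a ratio we're inferring from the figures in this article (+1200MHz moving 14Gbps memory to 16.4Gbps) rather than quoting from official documentation. The function name and the default compute penalty are illustrative only.

```python
def effective_memory_gbps(rated_gbps, offset_mhz, compute_penalty_gbps=0.4):
    """Return (theoretical, mining) per-pin speeds in Gbps after a memory offset.

    Assumes each 1 MHz of offset adds 2 Mbps per pin. That ratio is inferred
    from the numbers in this article, not from vendor documentation.
    """
    theoretical = rated_gbps + offset_mhz * 2 / 1000
    mining = theoretical - compute_penalty_gbps  # cards run memory slower in compute
    return theoretical, mining


# RTX 3070 / 3060 Ti: 14Gbps GDDR6 with a +1200MHz offset.
theory, mining = effective_memory_gbps(14.0, 1200)
print(f"GDDR6:  {theory:.1f}Gbps in theory, {mining:.1f}Gbps while mining")

# RTX 3080: 19Gbps GDDR6X with +750MHz and the 0.5Gbps penalty noted earlier.
theory, mining = effective_memory_gbps(19.0, 750, compute_penalty_gbps=0.5)
print(f"GDDR6X: {theory:.1f}Gbps in theory, {mining:.1f}Gbps while mining")
```

Both results line up with what we saw in testing: 16Gbps on the GA104 cards and 20Gbps on the 3080.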

Asus RTX 3060 Ti TUF Gaming OC: Again, we removed this from the updated charts, but unlike the 3080 and 3090, third party cards weren't markedly different in hashing performance with the 3060 Ti and 3070 GPUs. Our tuned settings ended up with higher clocks (due to the factory overclock) and more power use than the 3060 Ti Founders Edition, but basically the same hashing performance. Optimal efficiency can vary a bit, but for the GA104-based cards, GDDR6 speed is the limiting factor on performance.

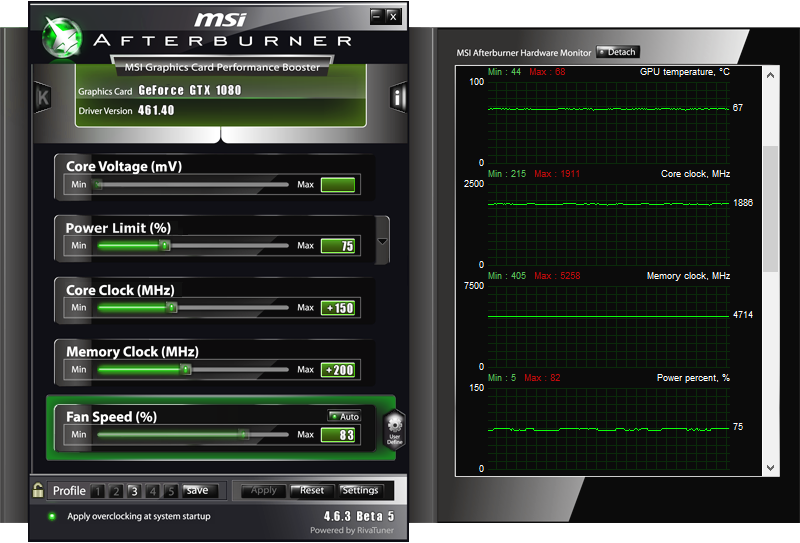

EVGA RTX 3060 12GB: There's no official reference card for the new GA106-based RTX 3060, so we're using the EVGA card that we used in our RTX 3060 12GB review. We set the power limit to 75%, increased the GDDR6 clocks by 1250MHz, and ended up with a relatively high fan speed of around 80% with the default fan curve. (The cooling on this card isn't nearly as robust as many of the other GPUs.) We didn't record 'stock' performance, but it was around 41MH/s.

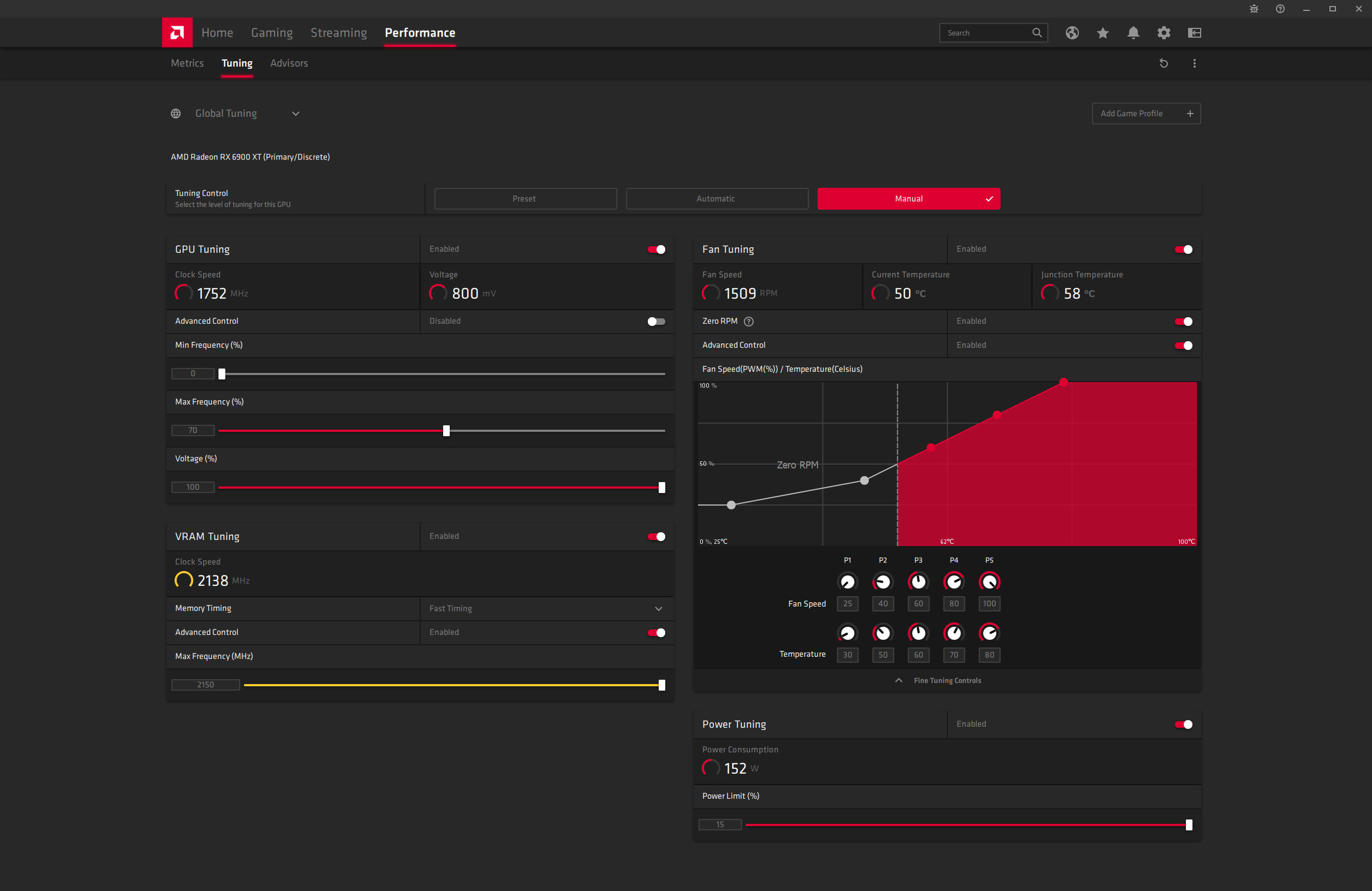

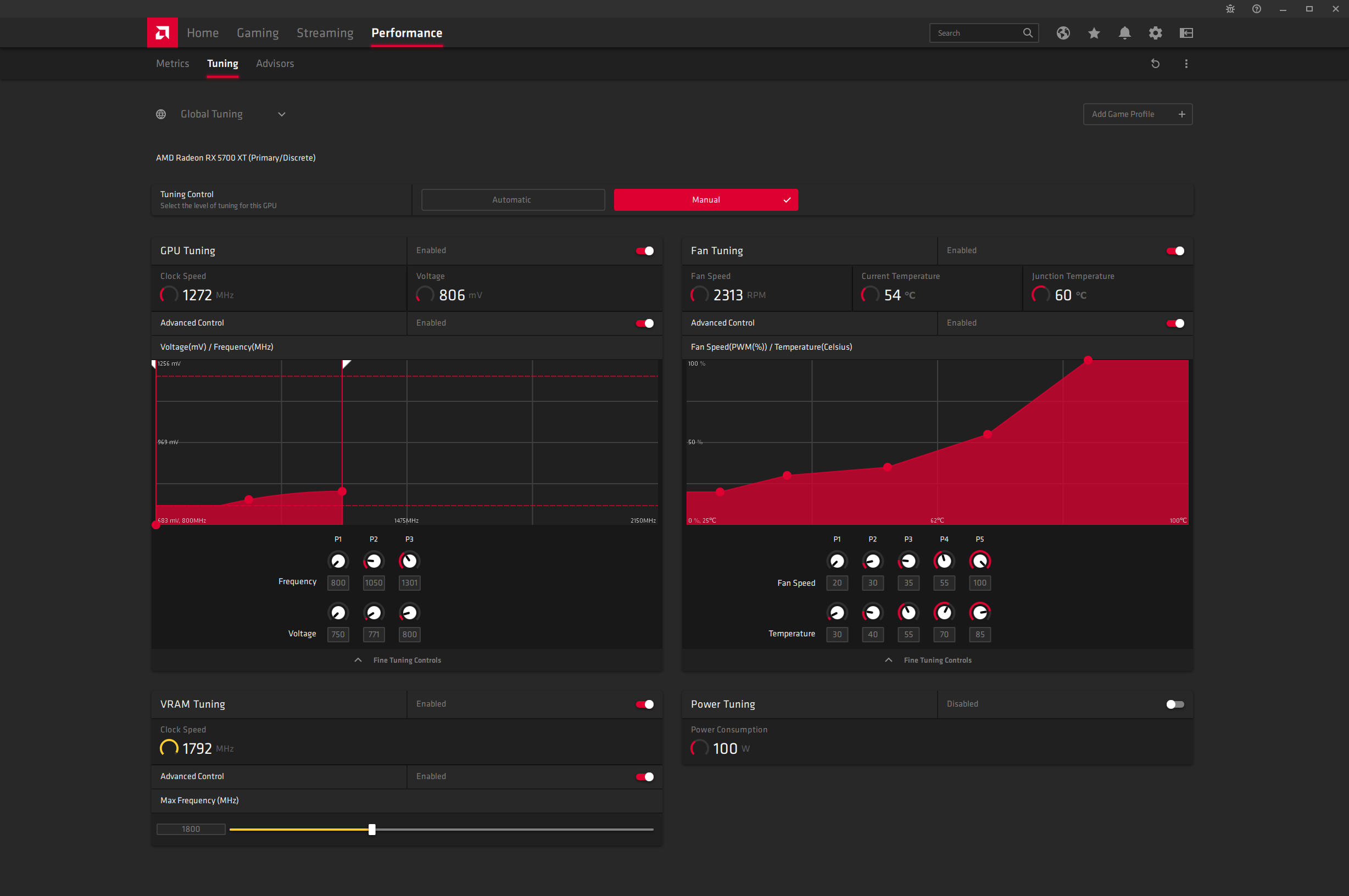

Radeon RX 6900 XT (Reference): Tuning all three of AMD's reference RX 6000 cards ended up very similar. The GPU clocks can go very high at stock, but the memory bandwidth appears to be the main bottleneck. Running with GPU clocks of 2.2-2.5GHz just wastes power and generates heat without improving performance. We cranked the power limit to the maximum 115% just to ensure the VRAM wasn't being held back, then set the memory at +150MHz (the maximum allowed in Radeon Settings), enabled fast RAM timings, and dropped the maximum GPU clock down to 70%. That gave us final clocks of 1747MHz compared to 2289MHz at stock and about 8% higher hash rates overall. More importantly, power consumption took a massive dive, and efficiency improved to one of the better results in our testing. But this actually isn't AMD's best overall showing.

Radeon RX 6800 XT (Reference): Same approach as above, but due to differences in the core configuration and … honestly, we're not sure what the deal is, but we ended up with an optimal maximum GPU frequency setting of only 50% this time, which gave us clocks of 1206MHz instead of 2434MHz — and performance still went up, matching the RX 6900 XT and RX 6800. At the same time, power requirements dropped substantially, from 281W to 186W. Whatever is going on behind the scenes, it appears different AMD Navi 21 GPUs run optimally at different "maximum frequency" settings. Like Nvidia, AMD's GPUs are largely limited in performance by their memory speed, and without tools to overclock beyond 17.2Gbps, there's not much to be done.

Radeon RX 6800 (Reference): With only 60 CUs (compared to 72 on the 6800 XT and 80 on the 6900 XT), you might expect the 6800 vanilla card to end up slower. However, the memory proves the deciding factor once again. We set the GPU power limit at the same 115%, which does make a difference, oddly — average power dropped about 15W if we set it to 100%, even though the card was running well below the official 250W TGP. We also maxed out the memory slider at +150MHz (17.2Gbps effective), and this time achieved optimal performance with the GPU set to 75% on maximum clocks. That resulted in a 1747MHz clock compared to 2289MHz at stock, but fan speed was higher this time. That's because we set the fan to run at 40% at 50C, 60% at 60C, 80% at 70C, and 100% at 80C — and it ended up at 50% speed, which is perhaps more than is required, but we feel it's better safe than sorry if you're looking at 24/7 mining.
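For reference, the fan curve we just described can be sketched as a simple lookup. We're assuming the driver interpolates linearly between the four points and holds the end values outside that range; that's our guess at the behavior for illustration, not something we've confirmed in AMD's software.

```python
# (temperature in C, fan speed in %) points from the curve described above.
FAN_CURVE = [(50, 40), (60, 60), (70, 80), (80, 100)]


def fan_speed_percent(temp_c, curve=FAN_CURVE):
    """Piecewise-linear fan speed (%) for a given GPU temperature in Celsius.

    Linear interpolation and clamping at both ends are assumptions.
    """
    if temp_c <= curve[0][0]:
        return float(curve[0][1])
    if temp_c >= curve[-1][0]:
        return float(curve[-1][1])
    for (t0, s0), (t1, s1) in zip(curve, curve[1:]):
        if t0 <= temp_c <= t1:
            return s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0)


for temp in (45, 55, 65, 85):
    print(f"{temp}C -> {fan_speed_percent(temp):.0f}% fan speed")
```

Under that assumption, the 50% fan speed we observed would correspond to a GPU temperature of about 55C.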

Radeon RX 6700 XT (Reference): AMD cuts down to just 40 CUs on the Navi 22 chip, but clocks are quite a bit higher. The memory also gets cut to a 192-bit bus, a 25% reduction in bandwidth that translates directly into hashing performance. We set the maximum GPU frequency to 50% and ramped the VRAM clocks up by 150MHz, which resulted in actual clocks of around 1300MHz while mining. Boosting the clocks back to 2.0GHz didn't improve hash rates, so it's best left alone. With these settings, we got 47MH/s, up from about 40MH/s at stock, with power draw of 120W. Temperatures were quite good, with fan speed of around 50%.

Radeon RX 6600 XT (ASRock): Navi 23 trims the GPU down to 32 CUs, but the memory interface is now just a 128-bit bus. After tuning, the RX 6600 XT basically gets about 2/3 the hashrate of the RX 6700 XT. We again set the maximum GPU frequency to 50% and ramped the VRAM clocks up by 150MHz, which resulted in actual clocks of around 1300MHz while mining. We got 32MH/s, up from about 28MH/s at stock, with power draw of 75W. The efficiency looks good, but the raw hashrate is definitely lacking: it's only marginally faster than an RX 470 8GB from five years back.

Real-World Profitability and Performance

After the testing we've completed, one thing we wanted to do was look at real-world profitability from mining. There's a reason people do this, and results can vary quite a bit depending on your specific hardware. Our results definitely don't match up with what we've seen reported on some GPUs at places like NiceHash or WhatToMine. We've used the optimal tuned settings, as well as power draw figures. However, note that the power draw we're reporting doesn't include PSU inefficiencies or power for the rest of the PC. We're mostly looking at reference models as well, which often aren't the best option, but here's how our data compares to what NiceHash reports.


| GPU | TH Hashrate (MH/s) | NH Hashrate (MH/s) | % Difference | TH Power (W) | NH Power (W) | % Difference |
|---|---|---|---|---|---|---|
| RTX 3090 FE | 106.5 | 120.0 | -11.3% | 279 | 285 | -2.1% |
| RTX 3080 FE | 93.9 | 96.0 | -2.2% | 234 | 220 | 6.4% |
| RTX 3070 FE | 61.3 | 60.6 | 1.2% | 123 | 120 | 2.5% |
| RTX 3060 Ti FE | 60.6 | 60.5 | 0.2% | 116 | 115 | 0.9% |
| RTX 3060 12GB | 48.6 | 49.0 | -0.8% | 119 | 80 | 48.8% |
| RX 6900 XT | 64.6 | 64.0 | 0.9% | 183 | 220 | -16.8% |
| RX 6800 XT | 64.5 | 64.4 | 0.2% | 186 | 190 | -2.1% |
| RX 6800 | 64.6 | 63.4 | 1.9% | 166 | 175 | -5.1% |
| RX 6700 XT | 47.1 | 47.0 | 0.2% | 120 | 170 | -29.4% |

There are some interesting results. The RTX 3090 Founders Edition is definitely not the best sample of mining performance, and NiceHash's number (perhaps with maxed out fans) is about 13% higher than what we got, but it also used 2% more power. Or if you prefer, our numbers were 11% slower while using 2% less power. On the 3080, the FE ended up just 2% slower while using 6% more power.

NiceHash specifically recommends running your fans at 90-100% on the 3080 and 3090, which can definitely boost performance. Our stance is that this is a Very Bad Idea (tm). Not only will the fans make a lot of noise, but they're also destined to fail sooner rather than later. If you're okay replacing the card's fans in the future, or if you want to mod the card with better cooling pads in the first place, you can definitely achieve the NiceHash performance figures. Power use (as measured using Powenetics) would of course increase.

We were relatively close on the 3060 Ti performance, and our earlier power data showed much better results than NiceHash, but now those figures have been updated and are slightly lower than our measured power. The RTX 3060 meanwhile ended up with similar performance, but our power results were significantly higher — perhaps our EVGA sample just wasn't a good starting point.

Shifting over to AMD, everything was very close on performance — within 2% across all four cards. This time, however, our power testing showed anywhere from a few percent to as much as 29% lower power requirements than what NiceHash reports.
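If you want to run the same comparison against your own card, here's a minimal sketch of the two calculations involved: the percent difference between our results and NiceHash's, and efficiency in MH/s per watt. The data is a subset of the table, and the helper names are ours for illustration.

```python
# name: (our hashrate MH/s, NiceHash hashrate MH/s, our power W, NiceHash power W)
RESULTS = {
    "RTX 3090 FE": (106.5, 120.0, 279, 285),
    "RTX 3060 Ti FE": (60.6, 60.5, 116, 115),
    "RX 6800": (64.6, 63.4, 166, 175),
    "RX 6700 XT": (47.1, 47.0, 120, 170),
}


def percent_difference(ours, reference):
    """How far our figure is above (+) or below (-) the reference, in percent."""
    return (ours / reference - 1) * 100


def efficiency(hashrate_mhs, watts):
    """Mining efficiency in MH/s per watt of card power."""
    return hashrate_mhs / watts


for name, (th_hash, nh_hash, th_power, nh_power) in RESULTS.items():
    print(f"{name}: hashrate {percent_difference(th_hash, nh_hash):+.1f}%, "
          f"power {percent_difference(th_power, nh_power):+.1f}%, "
          f"{efficiency(th_hash, th_power):.3f} MH/s per W")
```

Of these four cards, the RTX 3060 Ti FE comes out on top at a little over 0.52MH/s per watt using our measured power.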

The current thinking for a lot of miners is that Nvidia's RTX 30-series cards are superior to AMD, but that's really only true if you look at pure hashrates on the 3080 and 3090. Factor in power efficiency and things are much closer. Besides, it's not like you can buy any of these GPUs right now — unless you're willing to fork out a lot of money or have some good industry contacts for building your mining farm.

Mining with Previous Generation Hardware

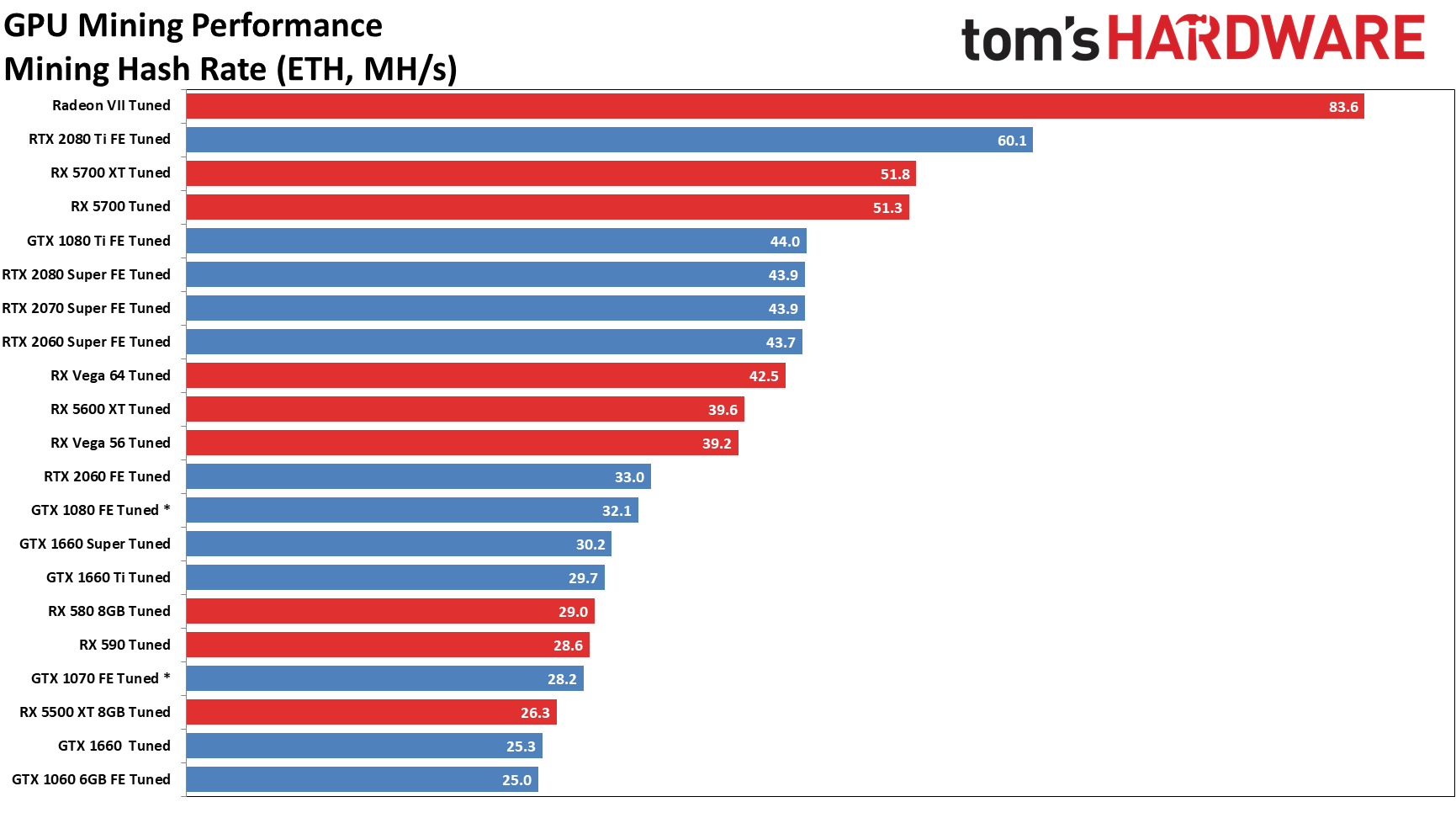

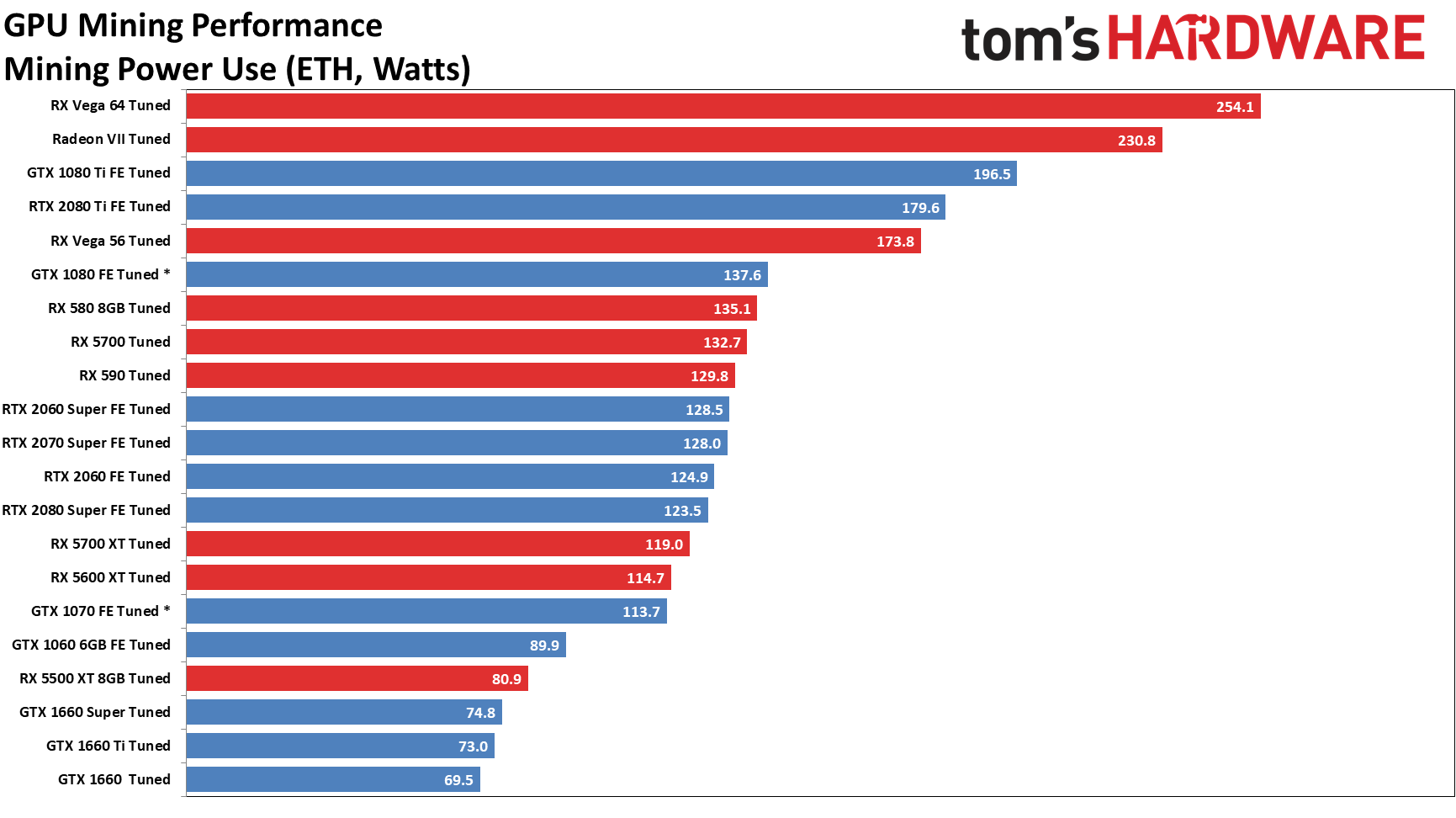

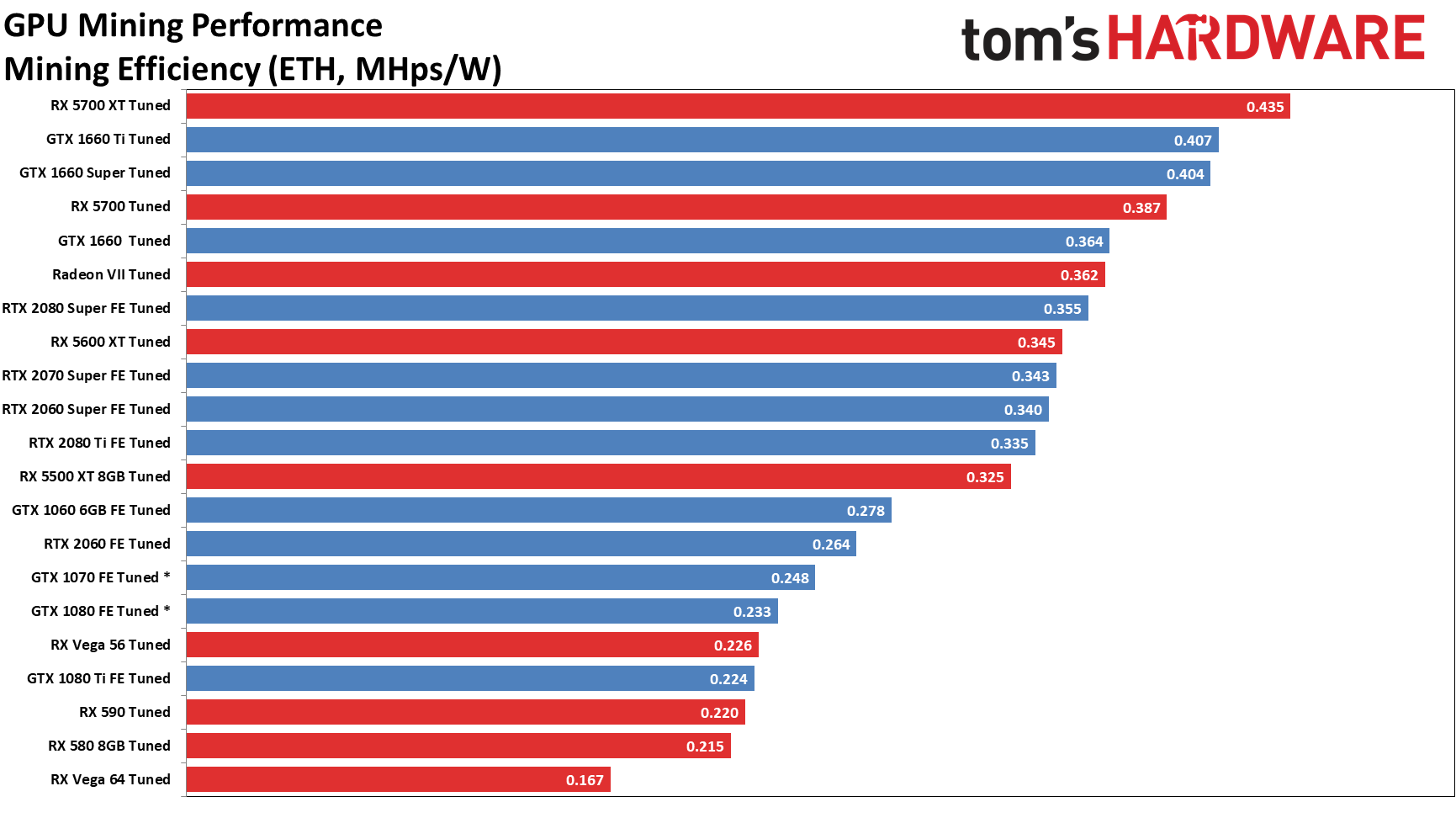

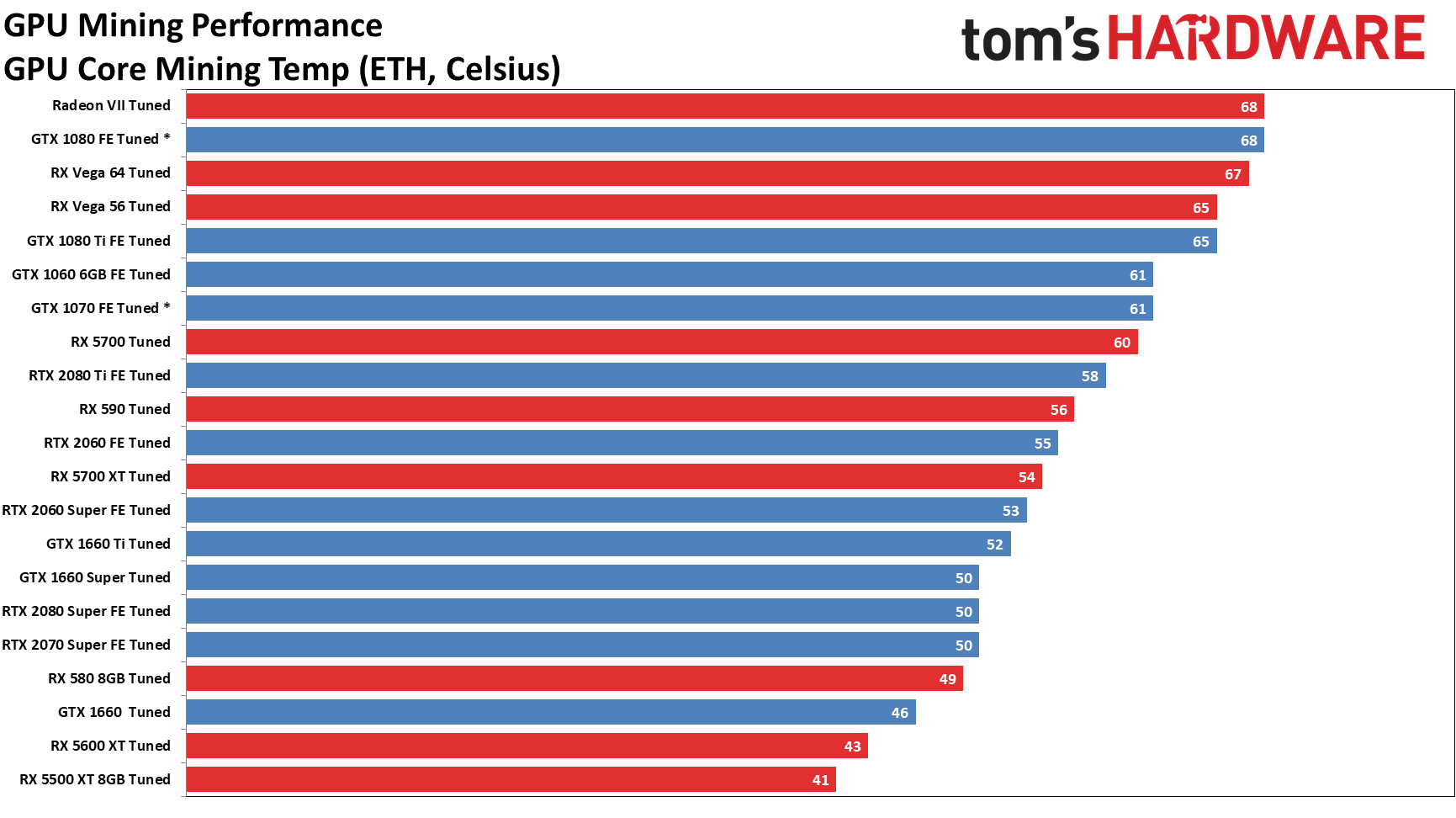

Nvidia Ampere and AMD RDNA2, aka Big Navi, GPUs are getting most of the headlines today, but what about previous generation hardware? The principles we've outlined above generally apply to the older GPUs as well. We've included Nvidia's RTX 20-series, GTX 16-series, and GTX 10-series cards, along with AMD's RX 5000, Vega, and 500 series parts.

We're going to skip all the baseline performance metrics this time, and just jump straight to optimized performance. Note that outside of the RX 580 and 590, and the three GTX 1660 variants, all of our tests were done using the reference models from AMD and Nvidia, which tend to be more of a baseline or worst-case scenario for most GPUs. We'll list our optimized settings below, but here are the results.

* - Our GTX 1070 and GTX 1080 are original Founders Edition cards from 2016 and appear to perform far worse than other 1070/1080 cards. Our Vega cards are also reference models and were far more finicky than other GPUs. YMMV!

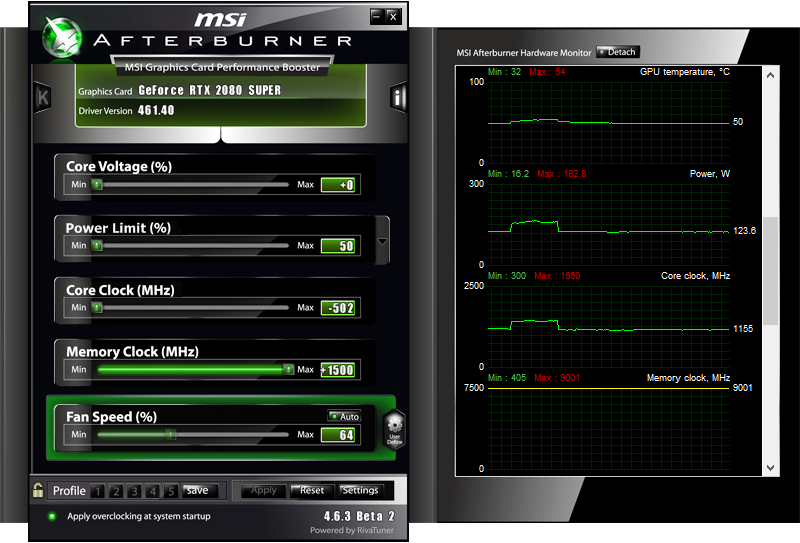

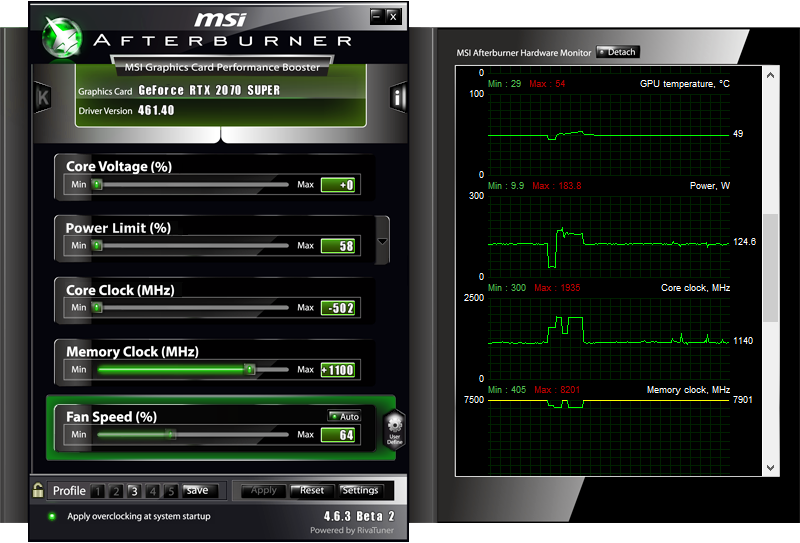

For Nvidia's Turing GPUs, performance again correlates pretty much directly with memory bandwidth, though with a few interesting exceptions. Notice that the 2060 Super, 2070 Super, and 2080 Super all end up with nearly identical performance? That's not an error. The odd bit is that the 2080 Super requires substantially higher memory clocks to get there.

The 2060 Super and 2070 Super both had to run with a +1100MHz offset in MSI Afterburner, giving an effective speed of 15.8Gbps (with a 400Mbps negative offset for compute). The 2080 Super meanwhile has a base GDDR6 speed of 15.5Gbps and we were able to max out the memory overclock at +1500MHz, yielding a final speed of 18Gbps for compute. Except we still ended up at the same 44MH/s hash rate. Most likely the memory timings on the GDDR6 in the 2080 Super are more relaxed (looser), so even though bandwidth is higher, bandwidth plus latency ends up balancing out. The 2080 Ti meanwhile hits the same ~60MH/s as the 3060 Ti and 3070, thanks to its 352-bit memory interface.

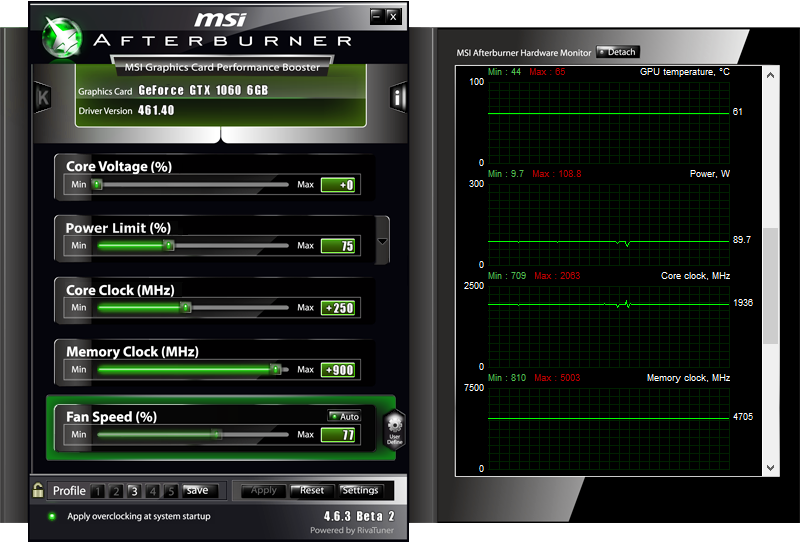

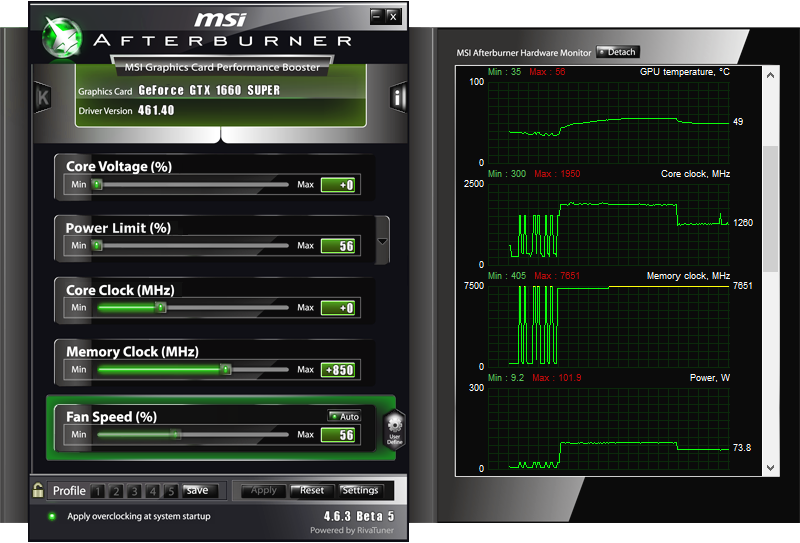

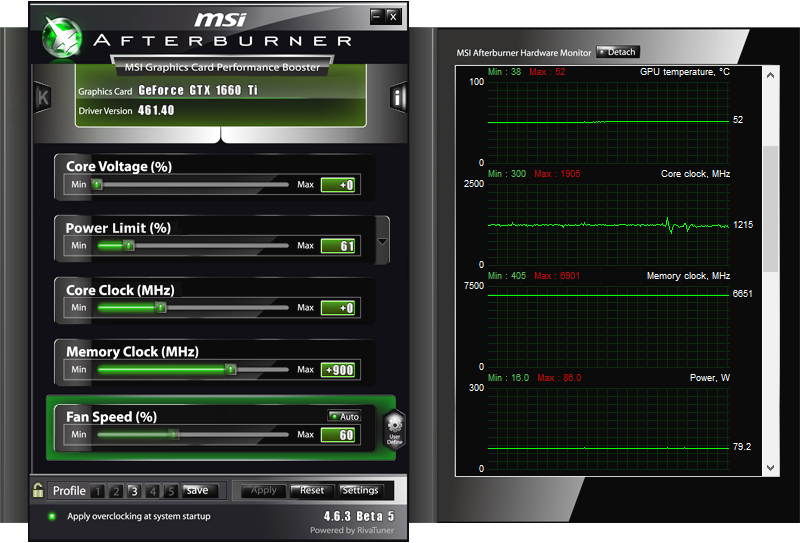

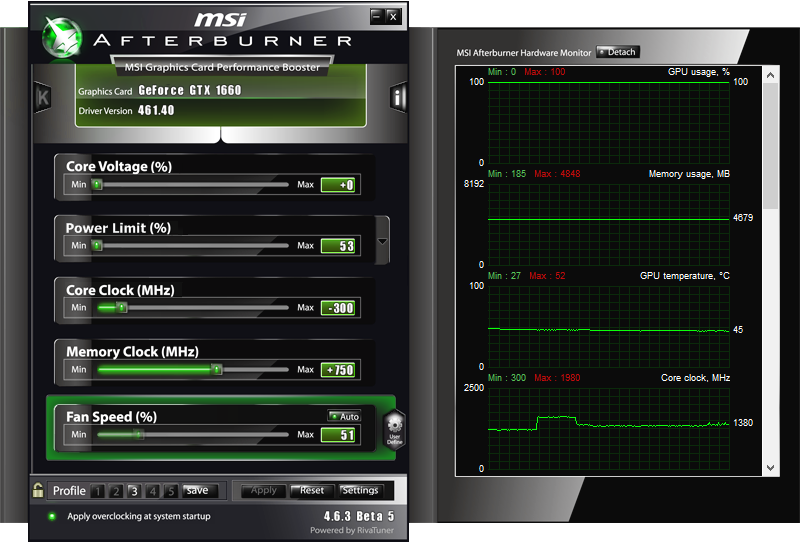

The GTX 16-series GPUs meanwhile offer a decent blend of performance and power. The 1660 Ti and 1660 Super are basically the same speed, though we had better luck with memory overclocking on the Super. The vanilla 1660 with GDDR5 memory doesn't clock quite as high on the VRAM and thus performs worse on Ethereum mining. Regardless of which GPU you're looking at, though, all of the GTX 1660 models benefit greatly from dropping the GPU clocks. That reduces power use and temperatures and boosts overall efficiency.

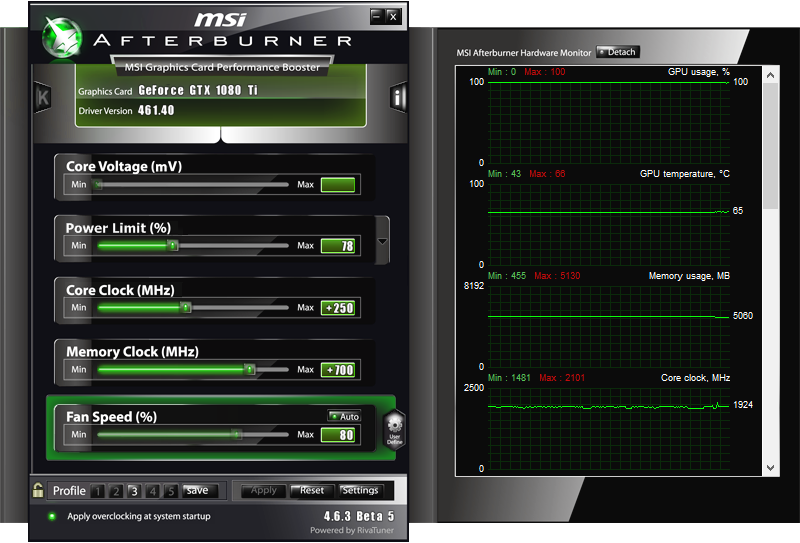

Stepping back one generation further to Pascal (GTX 10-series), the approach changes a bit. Maximum memory clocks are still critical, but core clocks start to matter more—the architecture isn't tuned for compute as much as Turing and Ampere. We got our best results by overclocking the GPU core and memory speed, but then setting a power limit. Except, being nearly four years old, two of our GPUs (the GTX 1070 and GTX 1080) really weren't happy with memory overclocking. Anything more than a 200MHz bump on the 1080 caused a hard PC crash, and while the 1070 managed +500MHz, our hashing results were still a bit lower than expected.

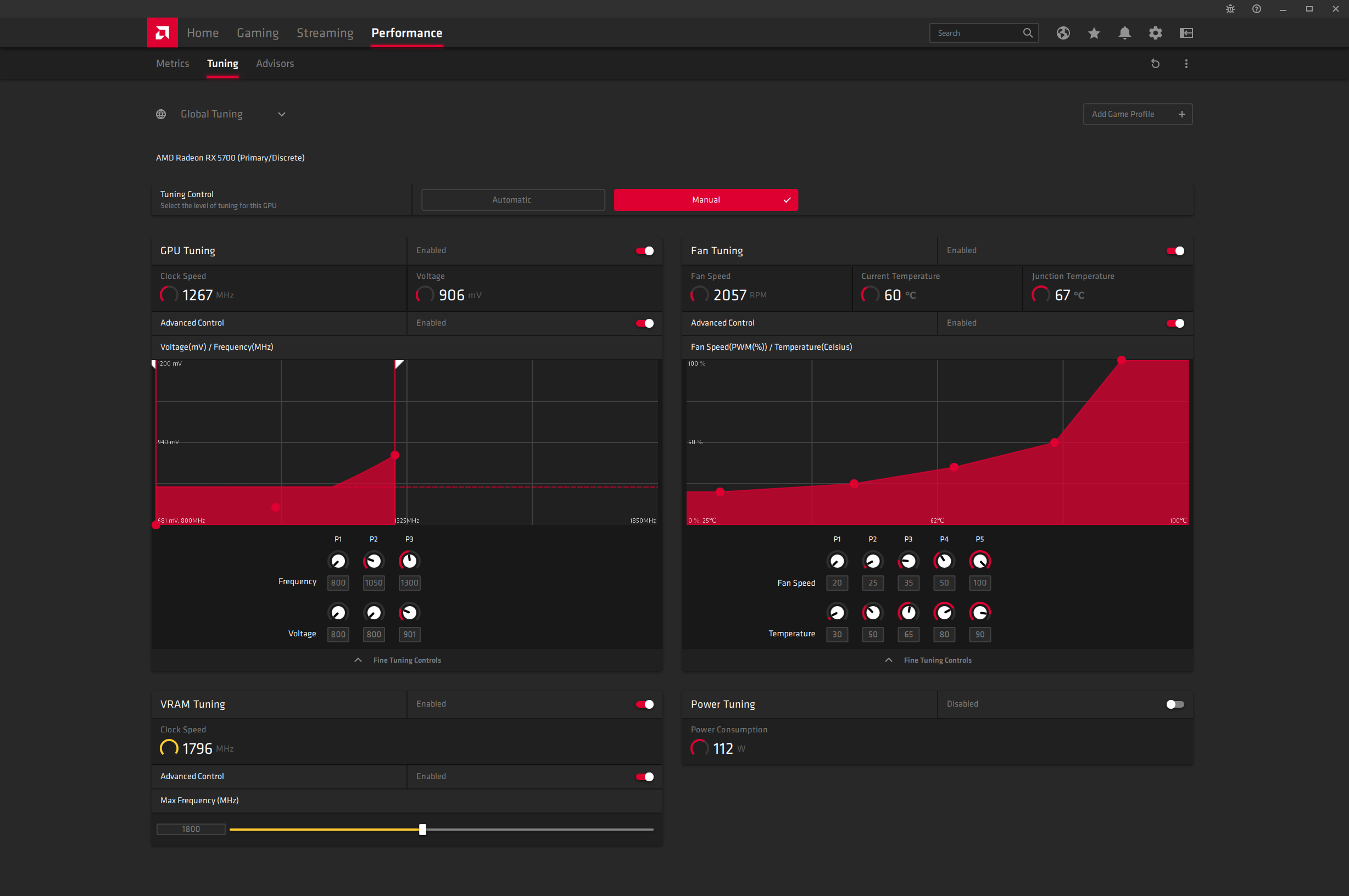

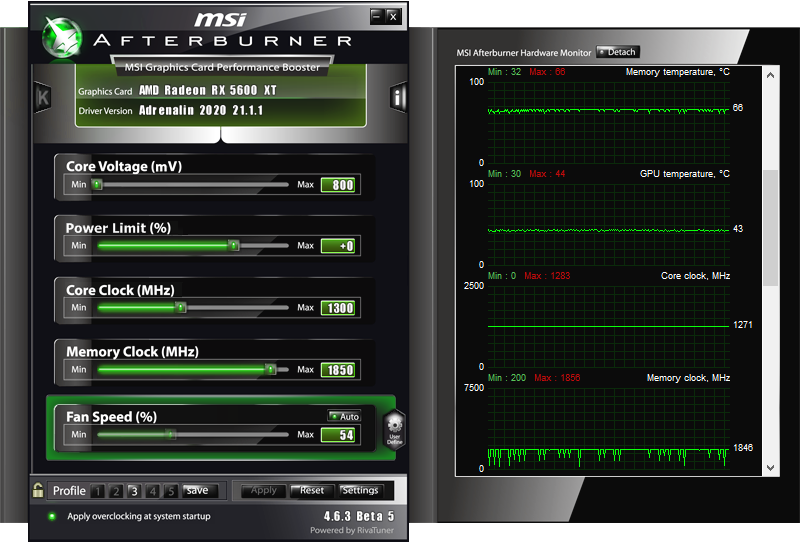

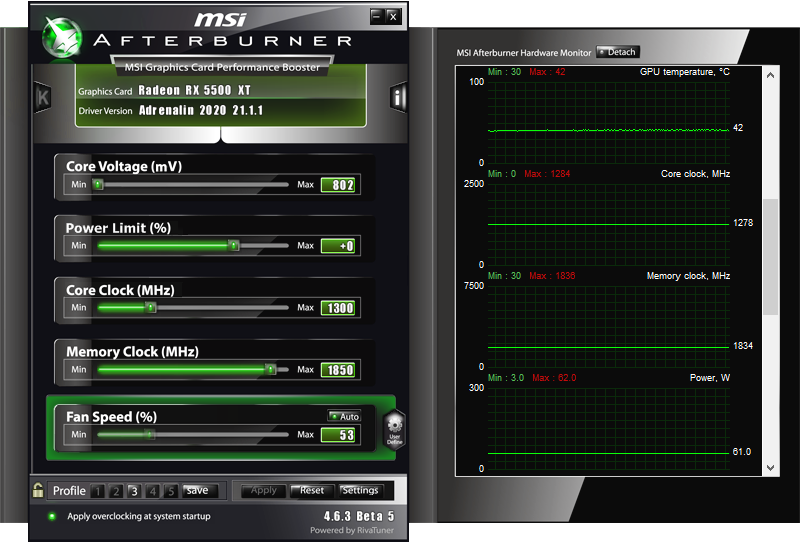

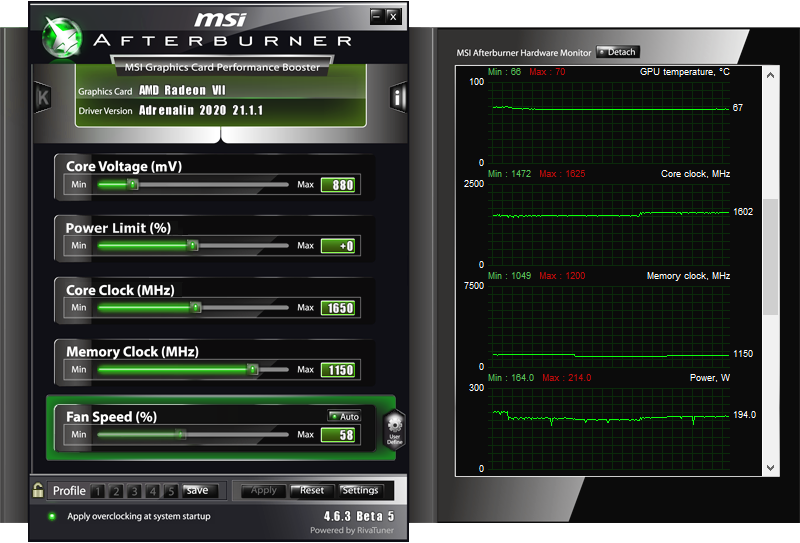

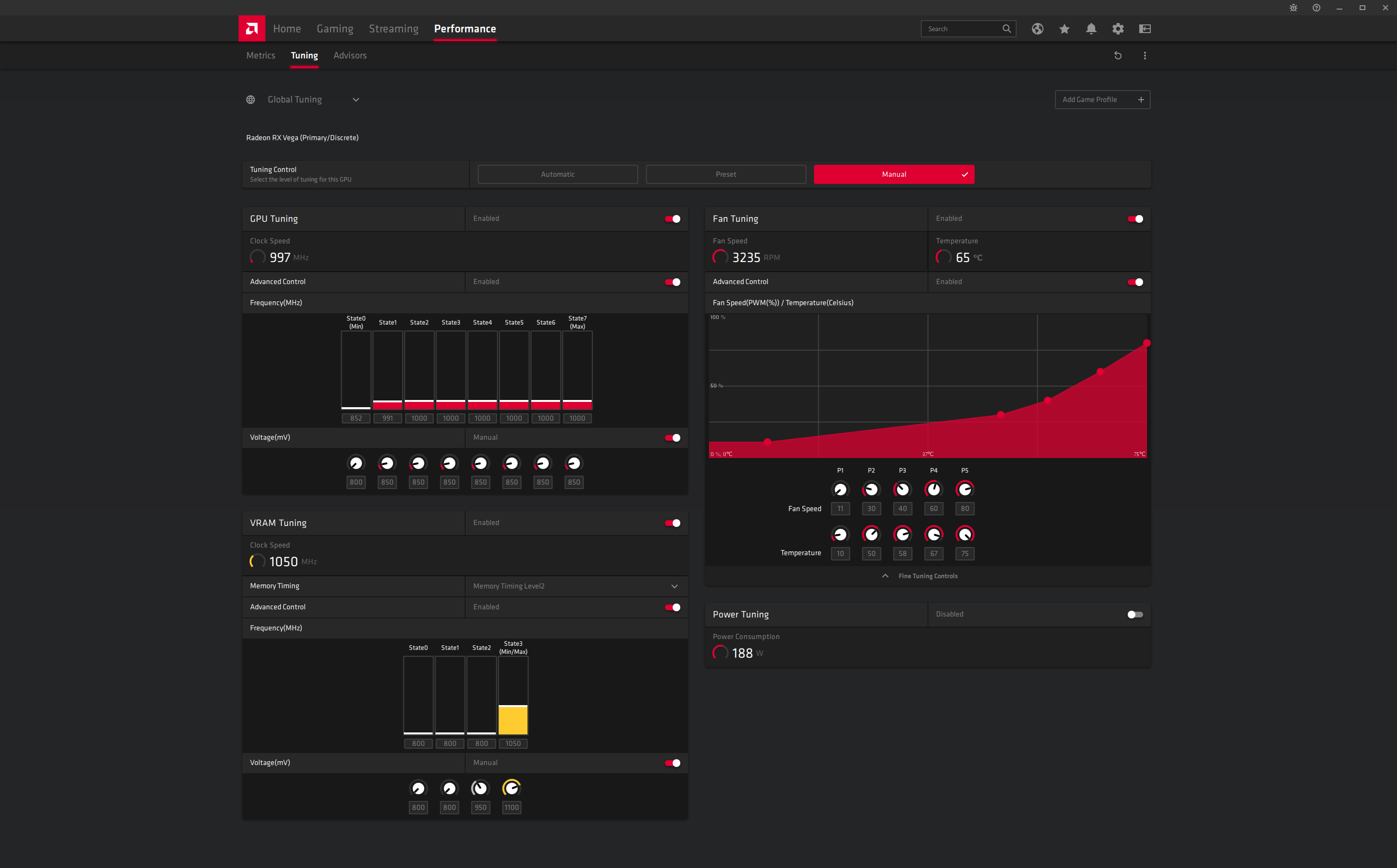

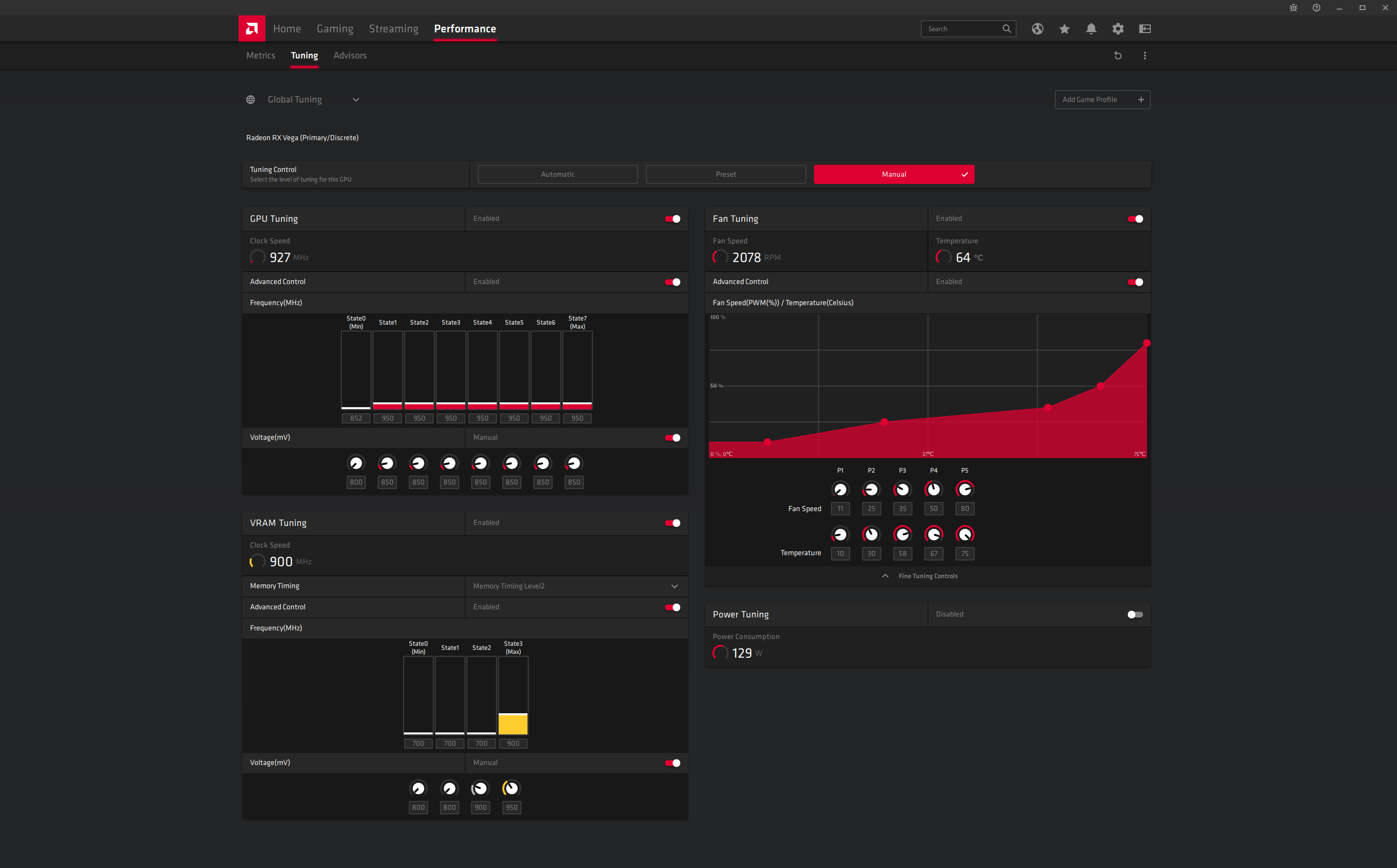

Tweaking AMD's previous generation GPUs is a bit different, in that rather than limiting the power, the best approach is to tune the voltage and clock speed. Not surprisingly, the older the GPUs get, the lower the hash rates and efficiency become. Let's start with the previous generation and move back from there.

The RX 5000 series GPUs (aka RDNA, aka Navi 1x) all continue to perform quite well in Ethereum mining. RX 5700 XT and RX 5700 are nearly as fast as the latest RDNA2 chips, the main difference being in memory bandwidth. Most cards won't go much beyond around 1800MHz on GDDR6 clocks, which is equivalent to 14.4Gbps, though some might be able to do 14.8Gbps. The RX 5600 XT is the same GPU but with a 192-bit memory interface, which cuts performance down by about 25% again, right in step with memory bandwidth. Overall, the 5700 and 5700 XT end up as the most efficient previous generation GPUs for mining.

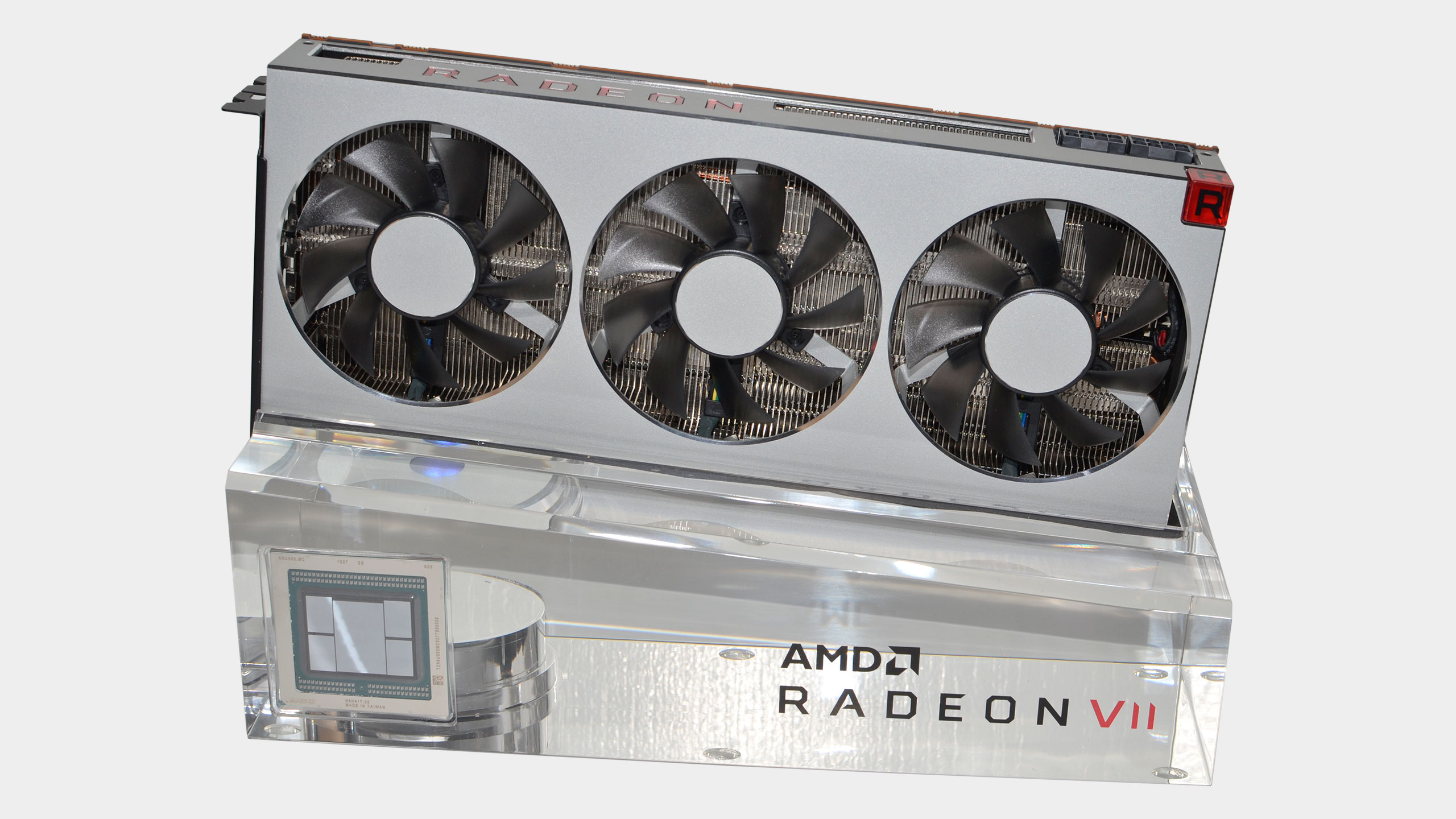

Move back to the Vega architecture and the large memory bandwidth that comes from HBM2 comes into play. The Radeon VII with its monster 4096-bit memory interface and a modest overclock for good measure ends up with 1178 GBps of bandwidth—only the RTX 3090 comes close. But Vega was also a power-hungry architecture, and it benefits from turning down the GPU clocks. We ended up at a 1650MHz setting (stock is 1800MHz) on the Radeon VII, and we set the core voltage to 880mV. That gave mining clocks of 1620MHz.

Vega 64 and Vega 56 used similar settings, but half the memory capacity and bus width limit performance quite a bit relative to the Radeon VII. Also, our results on the reference blower cards are probably far less than ideal, and just about any custom Vega card would be a better choice than these blowers. We experienced a lot of crashing on the two Vega cards while trying to tune performance.

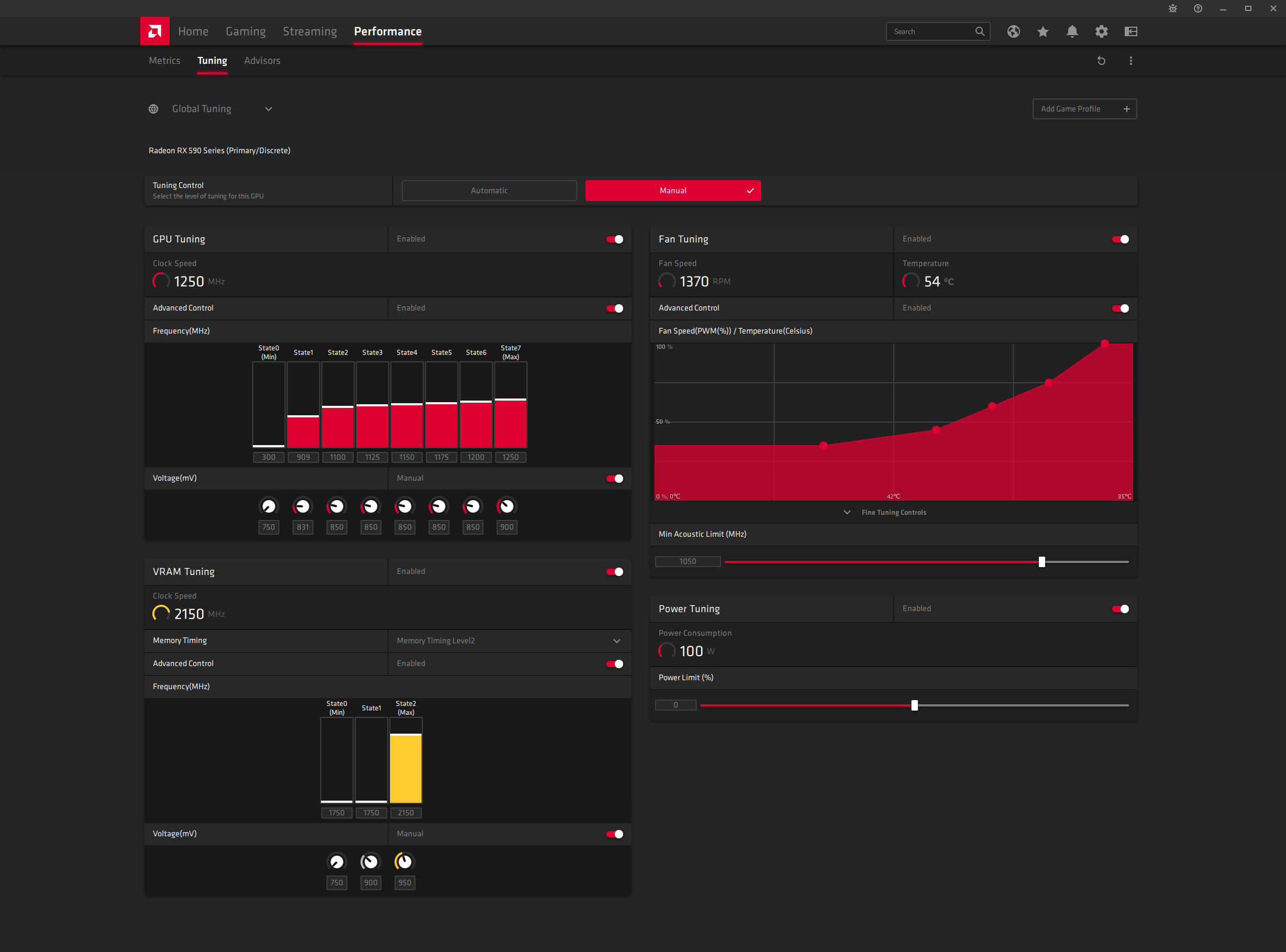

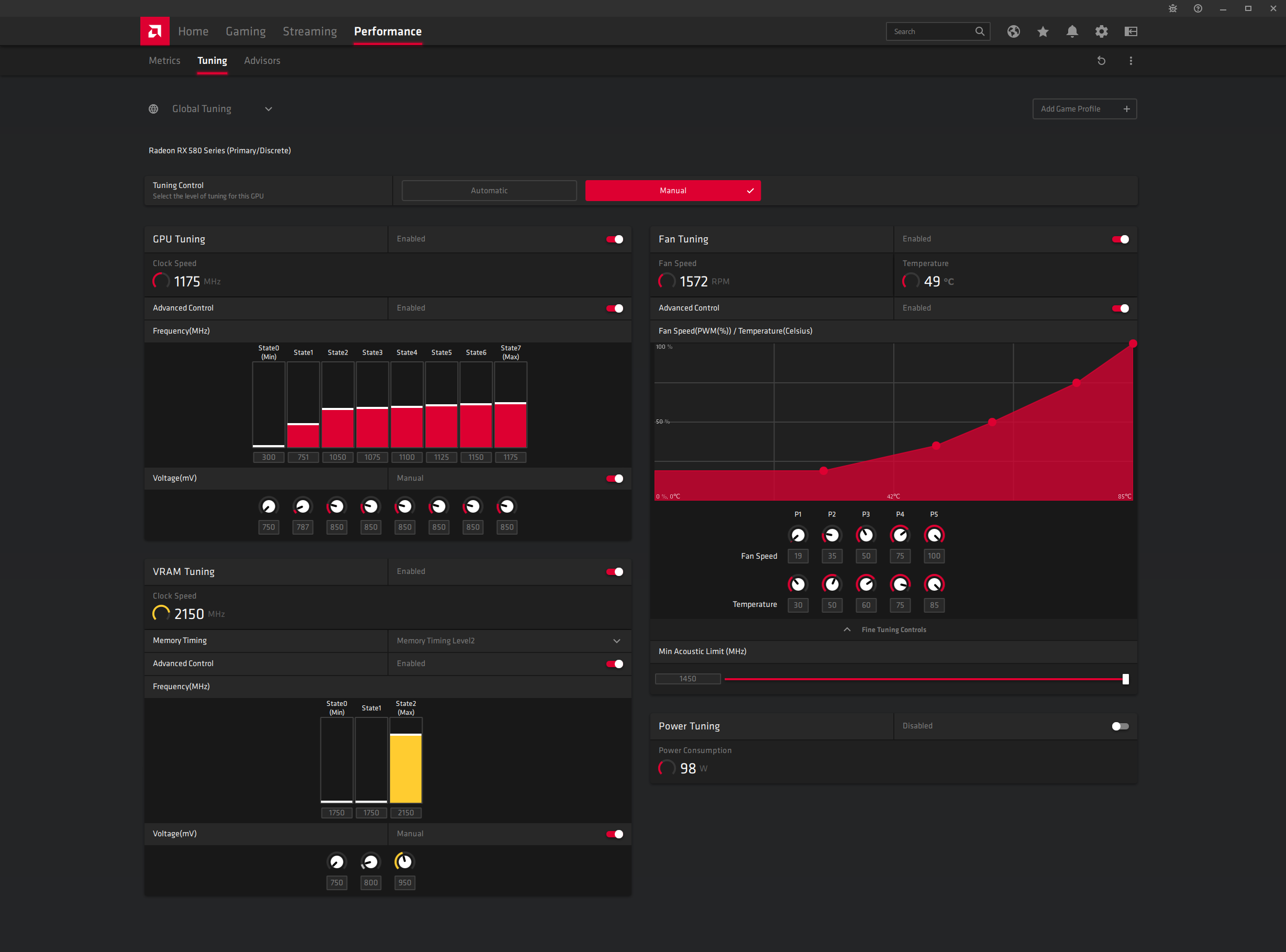

And then there's Polaris. Much like the Pascal GPUs, our tuning efforts took more time and effort. Many places recommend VBIOS flashing, but that's not something we want to do (as we're just testing mining performance, not building a 24/7 mining farm). As such, our maximum hash rates ended up about 10% lower than what some places show. Besides setting a low voltage of 800-900mV, depending on the card, we set the memory timings to level2 in Radeon Settings, and that gave the best results with reasonable power use. YMMV.

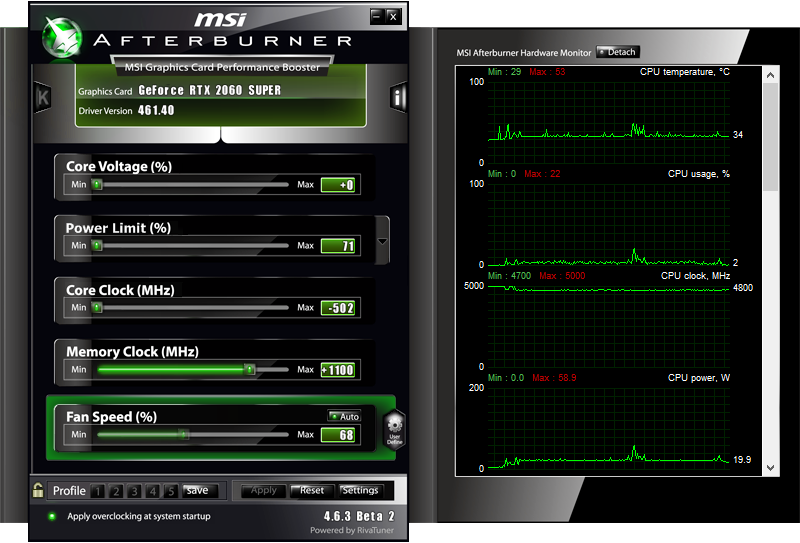

Here's a gallery of all the 'tuned' settings we used for the legacy cards. Use at your own risk, and know that some cards prefer different miner software (or simply fail to work with certain miners).

Is it possible to improve over our results? Absolutely. This is just a baseline set of performance figures and data, using our specific samples. Again, non-reference cards often perform a bit better, and if you want to research VBIOS flashing and hardware modding it's possible to hit higher hash rates. But out of the box, these are numbers that just about any card using one of these GPUs should be able to match.

Should You Start Mining?

This brings us to the final point we want to make. Suppose you already have a graphics card and want to mine using your GPU's spare cycles. In that case, it might be worth considering, particularly if you live in an area where power isn't super expensive. However, where previously you could theoretically net over $10 per day on a 3080 or 3090 card, current profitability has dropped significantly, and less than $4 per day for a 3090 is typical.

At the same time, we strongly recommend against 'redlining' your card for maximum hashrate at all costs. The fans on consumer cards aren't designed to spin at 100%, 24/7 without failing. They will burn out if you run them that way. We also have serious concerns with any component temperature that's consistently at or above 100C (or really, even 90C). But if you do some tuning to get fan speeds down to the 40-50% range, with temperatures below 70C, you can probably mine with a card for quite a while without having it go belly up. Will it be long enough to recover the cost of the card? That's the big unknown.

Here's the thing to remember: Cryptocurrencies are extremely volatile. Ethereum's price has fluctuated over 30% just in the past month, and often swings by 5% (or more) in one day. This means that, as fast as the price shot up, it could plummet just as quickly. At one point, it might have been possible to break even on the cost of a new GPU in a few months. These days, it would take more than a year at current rates, assuming nothing changes. It could go up, but the opposite is more likely. Just ask the GameStop 'investors' how that worked out if you think the sky's the limit.
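To put rough numbers on that break-even claim, here's a minimal sketch. The $4 per day revenue comes from the 3090 estimate above, and the 347W wall draw is our 279W card figure plus about 40W for the rest of the PC at 92% PSU efficiency. The $0.10 per kWh electricity price and the $1,500 card cost are assumptions for illustration, so plug in your own values.

```python
def daily_power_cost(wall_watts, price_per_kwh):
    """Electricity cost in dollars for running 24 hours at a constant draw."""
    return wall_watts / 1000 * 24 * price_per_kwh


def break_even_days(card_cost, daily_revenue, wall_watts, price_per_kwh):
    """Days needed to recover the card cost, or None if mining loses money.

    Assumes revenue, power draw, and power price all stay constant,
    which is very unlikely given how volatile cryptocurrency prices are.
    """
    net = daily_revenue - daily_power_cost(wall_watts, price_per_kwh)
    if net <= 0:
        return None
    return card_cost / net


days = break_even_days(
    card_cost=1500.0,     # assumed purchase price in dollars
    daily_revenue=4.0,    # upper end of the current 3090 estimate
    wall_watts=347.0,     # (279W + 40W) / 0.92
    price_per_kwh=0.10,   # assumed electricity price
)
print(f"Break-even after about {days:.0f} days")
```

Even with those fairly generous inputs, the result is well over a year, and any drop in price or rise in power cost stretches it further.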

Again, if you already have a GPU, putting it into service isn't a terrible idea — it's your hardware, do with it what you please. Paying extreme prices for mid-range hardware to try and build your own personal mining mecca, on the other hand, is a big risk. You might do fine, you might do great, or you might end up with a lot of extra PC hardware and debt. Plus, what about all the gamers that would love to buy a new GPU right now and they can't? Somebody, please think of the gamers!

Anyway, if you're looking for additional information, here is our list of the best mining GPUs, and we've checked profitability and daily returns of each GPU. Just remember to account for power costs and cash out enough coins to cover that, and then hopefully you won't get caught holding the bag.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Phaaze88: Uhh... :cautious:

You know this is going to trigger some folks, right?

Kudos for the information though.

btmedic04: should change the name of the article to: How to disenfranchise and lose your target audience

JarredWaltonGPU (replying to Phaaze88, who said: Uhh... :cautious: You know this is going to trigger some folks, right? Kudos for the information though.)

Yup. But we cover all sorts of hardware news, and after quite a bit of discussion we felt it was better to at least provide some clear testing results -- power in particular being something we can do better than the software power reporting. I've also tried to provide real numbers on hash rates, power, and temperatures, in the hope of discouraging some people from getting caught up in the hype. The likelihood of someone seeing this article on TH and not already having intent to mine is pretty slim.

Hopefully, if nothing else, we can help some people recognize that 24/7 mining isn't a panacea. I know multiple people that tried to build mining rigs back in 2017 to "strike it rich." At best, they came out slightly ahead after all the power and hardware costs. Sure, if they had saved everything and then sold at today's highs, they could have made a lot of money. But waiting three years to recoup thousands of dollars (all the while wondering if the prices will actually go up) ... there are better ways of investing IMO. And at least we're not sponsored by NiceHash. :unsure:

tomspown: Oh well Toms Hardware has hit a topic thats not realy welcome in the pc gaming community, they should also include sites for the miners where they can get the last few available Gpus as well, we will just sit on the side line and watch Tommy offer advice to miners.

Mythy: While its true that mining is making new GPU purchases difficult I would argue that this article is valid and should be appreciated. I myself make a little over $600 a month in PROFIT from just running my gaming rig and my kids/wifes on NiceHash. I mean look.. These cards literally pay for them self in time so we gamers might as well make the purchase a little bit easier lol As for availability if anyone is having issues buying a 3000 series I would suggest hitting up the Microcenter discord servers. They have the inventory delivery's posted, times, numbers ect. Not that hard to get one if you know where to look. I grabbed my 3090 in 2 days.

digitalgriffin: I'm very anti mining:

It causes artificial inflation on component prices

It's bad for the environment in general. The mining in the world consumes energy for mining more than several of the largest states combined.

The biggest users of Crypto are buying stuff they shouldn't, or using it to blackmail people. It funds terrorist states like Iran and N.Korea.

demonoman: Why tomshardware promote scams??? Nicehash still hold 18% of my funds stolen in 2017, and they don't want return it! They are scammers and perhaps this is paid article. Tomshardware you lost my trust...

Keep away from nicehash, it is a scam!