Intel Launches Xeon E7-8800, 4800 v4 Broadwell-EX Family

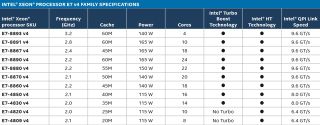

Intel announced its newest Xeon E7-8800/4800 v4 Broadwell-EX series of processors. The Broadwell-EX E7 v4 series adds a third QPI link to increase scalability compared to the E5-2600 v4 Xeons we recently reviewed, and increases the LLC (Last Level Cache) to 60 MB and the core count to 24 (up from the 18-core maximum of the previous-generation E7 v3 Haswell-EX family). The E7 v4 series is socket-compatible with the previous-generation E7 v3 series Brickland platform after a BIOS update.

The E7 v4 series features the same 14nm Broadwell microarchitecture found on the Broadwell-EP E5 v4 series. Intel is focusing on offering more features for each generation of Xeons as it wrestles with the expiration of Moore's Law. The E7 v4 series offers the expanded feature set that we found with the E5 v4 Broadwell-EP series, such as Posted Interrupts, Page Modification Logging, Cache Allocation Technology and Memory Bandwidth Monitoring, among many others. The E7 v4 series, like the E5 v4, offers 70 RAS (Reliability, Availability and Serviceability) features and up to 70 percent more encryption performance.

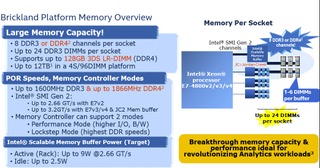

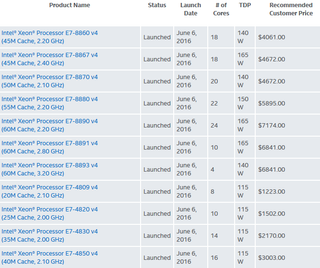

The Broadwell-EX family provides up to 60 MB of Last Level Cache, as opposed to an upper limit of 45 MB for the E5 v4 series, and also brings the notable addition of support for 3DS LRDIMMs and DDR4 Write CRC (an enhanced error control scheme). The E7 v4 series reaches a maximum 165W TDP, but also offers 150, 140 and 115W TDP flavors.

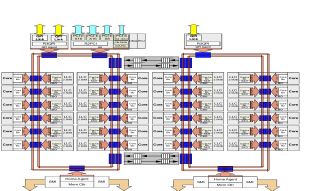

The E7 v4 only offers up to 32 PCIe 3.0 lanes in comparison to the 40 lanes provided by the E5 v4 series, but E7 processors tend to be deployed into quad-socket (or more) implementations. PCIe lanes scale accordingly with the addition of more processors, thus offering an increase in the number of PCIe lanes available to the system. For instance, a dual-socket E5 v4 system provides 80 PCIe 3.0 lanes, but a quad-socket E7 v4 provides 128 PCIe 3.0 lanes.
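The lane arithmetic above can be sketched in a few lines of Python. The names `LANES_PER_SOCKET` and `total_pcie_lanes` are illustrative only; the per-socket lane counts are the figures quoted in this article, and the sketch assumes the motherboard exposes every lane of every socket.

```python
# Per-socket PCIe 3.0 lane counts as quoted in the article.
LANES_PER_SOCKET = {"E5 v4": 40, "E7 v4": 32}

def total_pcie_lanes(family: str, sockets: int) -> int:
    """Total lanes, assuming the board exposes all lanes of every socket."""
    if sockets < 1:
        raise ValueError("sockets must be at least 1")
    return LANES_PER_SOCKET[family] * sockets

print(total_pcie_lanes("E5 v4", 2))  # 80
print(total_pcie_lanes("E7 v4", 4))  # 128
```

In practice the usable lane count depends on how a given server vendor routes the lanes, so treat the result as an upper bound.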

Some Broadwell-EP E5 v4 SKUs scale up to four sockets, but the E7 v4 Broadwell-EX series supports up to eight sockets in a native configuration. The E7 v4 series also expands up to 32 sockets with third-party node controllers (available from select server vendors).

The E7 v4 series also supports up to 24 TB of memory in an eight-socket configuration (using 128 GB 3DS LRDIMMs), which is double the amount supported by the E7 v3 series. This dense configuration is reached by deploying 24 DIMMs per socket (three on each of the eight available memory channels).

The Brickland platform links the on-die memory controllers to four Intel Scalable Memory Buffers (codenamed Jordan Creek) through separate Intel SMI Gen 2 channels. Each buffer fans out to two DDR channels, which increases the number of memory channels per socket to eight. A single socket supports up to 3 TB of memory with three of the 128 GB 3DS LRDIMMs on each channel.
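As a rough check of the capacity figures, the following Python sketch multiplies out the topology described above. The constant and function names are hypothetical, and the two-channels-per-buffer value is an assumption derived from the article's "four buffers, eight channels" description rather than a figure stated by Intel here.

```python
# Figures quoted in the article; the per-buffer channel count is derived.
BUFFERS_PER_SOCKET = 4        # Jordan Creek buffers, one per SMI Gen 2 channel
DDR_CHANNELS_PER_BUFFER = 2   # assumption: 8 channels / 4 buffers
DIMMS_PER_CHANNEL = 3
DIMM_CAPACITY_GB = 128        # 128 GB 3DS LRDIMM

def dimms_per_socket() -> int:
    """Number of DIMM slots behind one socket."""
    return BUFFERS_PER_SOCKET * DDR_CHANNELS_PER_BUFFER * DIMMS_PER_CHANNEL

def capacity_tb(sockets: int) -> float:
    """Total capacity in TB (1 TB = 1024 GB) with every slot populated."""
    return sockets * dimms_per_socket() * DIMM_CAPACITY_GB / 1024

print(dimms_per_socket())  # 24
print(capacity_tb(1))      # 3.0
print(capacity_tb(8))      # 24.0
```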

This continued expansion of addressable memory will be important for large-scale analytics applications in the enterprise. In-memory databases (which store the working data set in memory) are becoming widespread as data centers look to wring the utmost performance from their compute resources without the hindrance of limited storage performance.

Users can deploy 3D XPoint in NVDIMMs to use it as byte-addressable memory (much like a slower tier of memory). According to Intel, its forthcoming 3D XPoint memory offers up to 10x the density of DRAM, which could be another motivation for Intel to double the amount of memory supported on the platform.

The E7 v4 block diagram indicates that the Broadwell-EX architecture employs the same modular design as the E5 v4 HCC die, but brings the addition of the third QPI link to the ring on the right. The additional QPI link creates a mesh for data traffic. This reduces the number of "hops" required in quad-socket configurations by allowing all four CPUs to communicate directly with one another. The additional QPI link also reduces the number of jumps between each CPU in larger multi-socket configurations.
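The hop reduction in a quad-socket system can be illustrated with a small graph model in Python. This is only a sketch: the `ring` and `fully_connected` helpers are hypothetical, and the ring layout for processors with two QPI links is an assumption made for illustration, not a topology taken from Intel's documentation.

```python
from collections import deque

def ring(n):
    """Each socket links to its two neighbours (two QPI links per socket)."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}

def fully_connected(n):
    """Each socket links directly to every other socket (n - 1 links each)."""
    return {i: set(range(n)) - {i} for i in range(n)}

def max_hops(topology):
    """Worst-case number of links crossed between any two sockets (BFS)."""
    worst = 0
    for start in topology:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for peer in topology[node]:
                if peer not in dist:
                    dist[peer] = dist[node] + 1
                    queue.append(peer)
        worst = max(worst, max(dist.values()))
    return worst

print(max_hops(ring(4)))             # 2
print(max_hops(fully_connected(4)))  # 1
```

With three links per socket, four sockets can each reach the other three directly, so no request has to pass through an intermediate CPU.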

The high-end E5 v4 HCC die actually supported up to 24 cores, but Intel disabled one core on each side of the ring, which led to the 22-core limitation. Each core also features a 2.5 MB cache slice, so the two disabled cores on the E5 v4 reduced the maximum amount of LLC to 55 MB.

The E7-8890 v4 has all 24 cores active, and as a result, it offers up to 60 MB of LLC due to the cache associated with the extra two cores.
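The cache figures follow directly from the per-core slice size. A minimal Python sketch, using the 2.5 MB slice quoted above (the names `SLICE_MB` and `llc_mb` are illustrative):

```python
SLICE_MB = 2.5  # LLC slice attached to each active core, per the article

def llc_mb(active_cores: int) -> float:
    """Maximum LLC in MB when every active core contributes its slice."""
    return active_cores * SLICE_MB

print(llc_mb(22))  # 55.0 (E5 v4 HCC die with two cores disabled)
print(llc_mb(24))  # 60.0 (E7-8890 v4 with all cores active)
```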

The E7 v4 Broadwell-EX series scales from 4 cores/8 threads (the E7-8893 v4) up to 24 cores/48 threads, and clock frequencies span from 3.2 GHz down to 2.0 GHz. All of the E7 v4 models support Hyper-Threading, but the E7-4820 v4 and E7-4809 v4 do not support Turbo Boost Technology.

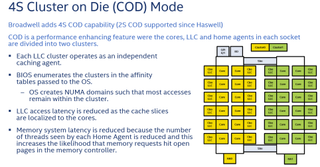

Intel extended Cluster On Die (COD) mode to four-socket environments with the E7 v4 series, up from the dual-socket limitation with the Haswell-EX series. COD splits the cores, LLC and home agents of each ring into a distinct cluster, which then operates as its own NUMA domain so that cache accesses stay local to the same ring/cluster. This reduces LLC access latency, which improves performance.

Intel positions the Broadwell-EX E7 v4 series for scale-up compute-intensive workloads, such as real-time analytics, in-memory databases, online transaction processing (OLTP) workloads, supply chain management (SCM) and enterprise resource planning (ERP), among others.

Intel claims that the E7-8890 v4 provides up to 1.4x more performance with half the power consumption of an IBM Power8 platform, along with 10x the performance per dollar. Intel also touts that its new architecture supports 3 TB of memory per socket in comparison to 2 TB per socket for the IBM Power8 competitor, but it is notable that IBM has its Power9 architecture waiting in the wings.

Intel indicates that the E7 v4 series has set 27 new benchmark world records and offers up to 1.3x average performance with several key industry-standard workloads (SPECjbb, SPECint, SPECvirt, TPC-E). The company also claims up to 35 percent more VM density in comparison to the E7 v3 series, as measured with the SPECvirt_sc2013 benchmark.

Many of the users that will migrate to the new platform follow a multi-year update cadence due to maintenance contracts, so Intel included comparisons to the E7 v2 Ivy Bridge-EX series. Intel claims that the E7 v4 series provides up to twice the VM density, 4.6x faster ad-hoc queries, and 2.9x the performance with STAC-M3 theoretical profit and loss workloads in comparison to the E7 v2 series.

Intel indicated that the new E7 v4 series carries the same MSRPs as the corresponding E7 v3 SKU stack and is available worldwide today.

Paul Alcorn is a Contributing Editor for Tom's Hardware, covering Storage. Follow him on Twitter and Google+.

EDIT 6/6/2016 9:00 CST - Added Brickland Platform Memory Overview graphic.

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

TechyInAZ:

It's pretty cool to see what's happening on the server market. That is a lot of cores. :)

jimmysmitty:

Quoting TechyInAZ: "It's pretty cool to see what's happening on the server market. That is a lot of cores. :)"

The real crazy thing is that servers can and will benefit from every aspect of this unlike consumer desktops. Servers are just better at utilizing cores and performance enhancements than consumer desktops are.

derekullo:

Quoting jimmysmitty: "Servers are just better at utilizing cores and performance enhancements than consumer desktops are."

Handbrake can use all 16 of my i7 threads just as well as a xeon could

bit_user:

Quoting the article: "For instance, a dual-socket E5 v4 system provides 80 PCIe 3.0 lanes"

Did you mean 64, or did I misunderstand how it works?

Nuckles_56:

Quoting bit_user: "Did you mean 64, or did I misunderstand how it works?"

It is 64 lanes for the E7 v4 CPUs which are being announced, whilst the E5 CPUs which are already on the market will support 80 PCIe 3.0 lanes. This is for dual socket configurations.

Aspiring techie:

I was drooling until I saw the $7000+ price tag. Who would have thought a chip about the size of a square inch could be that much?

bit_user:

Quoting Nuckles_56: "It is 64 lanes for the E7 v4 CPUs which are being announced, whilst the E5 CPUs which are already on the market will support 80 PCIe 3.0 lanes. This is for dual socket configurations"

That's certainly what I thought.

I'm guessing they repurposed the pins for 8 PCIe lanes for the extra QPI channel. Even if you don't get all 3 QPI channels per slot, it's still pretty impressive that existing boards can support these with only a BIOS upgrade.

The article also states that these CPUs have 8 memory channels, but the specs on ark.intel.com indicate only 4.

bit_user:

Quoting Aspiring techie: "I was drooling until I saw the $7000+ price tag. Who would have thought a chip about the size of a square inch could be that much?"

At $6841.00, I wonder if the E7-8893 v4 is their most expensive quad-core, ever...

PaulAlcorn:

Quoting bit_user: "That's certainly what I thought. I'm guessing they repurposed the pins for 8 PCIe lanes for the extra QPI channel. Even if you don't get all 3 QPI channels per slot, it's still pretty impressive that existing boards can support these with only a BIOS upgrade. The article also states that these CPUs have 8 memory channels, but the specs on ark.intel.com indicate only 4."

Yes, only 32 PCIe lanes on the E7 v4.

The move up to eight memory channels comes via the Brickland platform, which allows up to eight DDR3 or DDR4 channels per socket. I added a graphic in the article (and a bit of text) to help clear it up. The platform links the on-die memory controllers to memory buffers, codenamed Jordan Creek, through four of the Intel SMI Gen 2 channels, thus inflating the memory channel count. Very exciting tech, it helps Intel compete with the impressive per-socket RAM capacity on the Power8 architecture.

Haravikk:

Quoting jimmysmitty: "Servers are just better at utilizing cores and performance enhancements than consumer desktops are."

Quoting derekullo: "Handbrake can use all 16 of my i7 threads just as well as a xeon could"

I suspect they meant in terms of total workload; even with Handbrake you're probably not encoding 100% of the time and most consumer desktops aren't used for anything near as demanding as that. Traditionally if you require a machine for encoding and similar tasks you buy a workstation, but there's certainly less need for it these days.

For example my main working machine used to be a 2008 Mac Pro with 2x quad core 3.2ghz xeon CPUs and a Nvidia 8800 GT. I've since replaced it with a tiny system using an i7-4790T (quad core, eight threads, 45W with HD4600) which actually outperforms the Mac Pro at most tasks, and in areas where the Mac Pro does still have an edge it's only a small one. But that's the nature of the game these days; processors are getting so efficient you can do a lot with a system made from consumer grade components. That said, I'd hesitate to call an i7 desktop a "consumer" desktop, more like "prosumer" as you don't spend the money for an i7 unless you think you're going to need that kind of performance; even in gaming systems it's usually a waste of money, so things like video encoding are more suitable tasks for it, but they're not really regular consumer tasks.

But ehm… yeah, for the kinds of systems these xeon processors are for you're looking at close to maximum utilisation at all times, probably using some kind of virtualisation to run as many jobs as possible on as many systems as are required to meet demand. That usually means pushing the minimum number of servers to their limits so you can let others sleep until demand increases.

Anyway, as much as I'd love a 24 core (48 thread) CPU I think the price is a little steep for me ;)

I wonder, is the decrease in PCIe lanes a result of the increased memory lanes?