The 23 Greatest Graphics Cards Of All Time

Do you remember the first card that introduced you to 3D gaming? How about the best accelerator you ever owned? Take a trip with us down memory lane as we recount the 23 most powerful graphics boards of their respective eras.

Meet The Most Legendary Graphics Cards

Can you remember the graphics engines we consider worthy of commemoration as the title holders of their time? We thought it would be fun to walk down memory lane and recount the most powerful GPUs. We'll start back in 1996 with a single pixel pipeline and make our way to the present day with modern monsters capable of handling over 3000 pixel shader operations per clock!

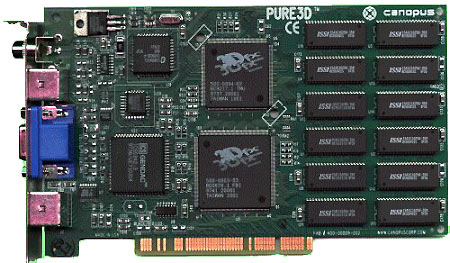

March 1996: 3dfx Voodoo1

The first accomplished accelerator was 3dfx's original Voodoo. With a 50 MHz core/memory clock and 4 MB of RAM, this 3D-only product needed to be used in tandem with a 2D graphics card, as it was incapable of running Windows on its own. Despite that disadvantage, it delivered 3D frame rates superior to every other product on the market, easily beating the S3 Virge, ATI Rage II, and Rendition Verite 1000 chipsets.

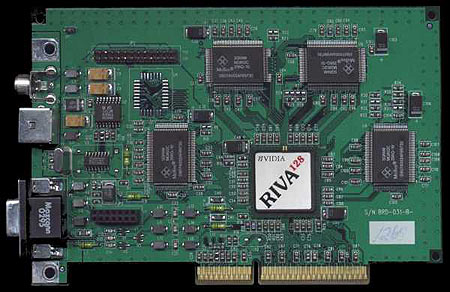

Late 1997: Nvidia Riva 128

After more than a year of undisputed supremacy, 3dfx was finally usurped by an upstart company called Nvidia and its new Riva 128 3D accelerator. With a 100 MHz core/memory clock and 4 MB of SGRAM, the Riva 128 was probably the first real competition for the Voodoo chipset. While this graphics engine couldn't run 3dfx's popular and proprietary Glide API, it performed well with Microsoft's DirectX 5 application interface.

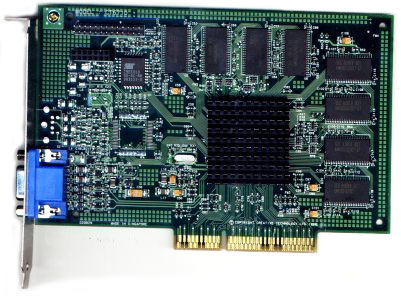

February 1998: 3dfx Voodoo2

With a 90 MHz core and up to 12 MB of EDO RAM at 100 MHz, the Voodoo2 chipset regained the championship title for 3dfx with about three times the polygon calculation performance of the original Voodoo. This was not only the fastest product available, but it also brought new features to the table like edge anti-aliasing and Scan-Line Interleave (SLI), a technology that allowed two cards to be used in tandem for the ultimate performance.

Mid 1998: Nvidia Riva TNT

The TNT arrived in 1998 with dual pixel pipelines and a 90 MHz core clock. While two Voodoo2 cards in SLI still enabled the best graphics performance, when it came to a single card, Nvidia's Riva TNT with 16 MB of RAM was often the fastest solution. While it's true that 3dfx still enjoyed tremendous popularity and strong support for its proprietary Glide API, DirectX continued to gain traction among developers. ATI's Rage 128 provided good competition for the Riva TNT, but showed up late to the party in December 1998. At this time, ATI wasn't considered a serious contender for enthusiasts because it concentrated on partnerships with system integrators instead of the retail space.

May 1999: Nvidia TNT 2 Ultra

Making its way to retail in May 1999, Nvidia's TNT2 removed any doubts as to whether Nvidia was capable of keeping pressure on 3dfx. With two pixel pipelines, a 150 MHz core clock, and 32 MB of VRAM, the TNT2 exceeded expectations in DirectX and OpenGL applications, APIs that kept growing in importance compared to Glide. The Voodoo3 arrived soon after with strong performance but significant limitations: 16-bit color, no AGP texturing support, and a lack of OpenGL support.

August 1999: Nvidia GeForce 256

The original GeForce 256 with SDR RAM was powerful, but the DDR version of this card is what cemented Nvidia as the gorilla in the market and put the nail in 3dfx's coffin. Featuring hardware-accelerated transform and lighting, this was the first graphics chipset dubbed a Graphics Processing Unit, or GPU. With its 150 MHz core clock and 32 MB of DDR memory at 300 MT/s, the GeForce easily beat out competition from the ATI Rage Fury MAXX and Voodoo3 3500.

April 2000: Nvidia GeForce 2 GTS

With four pixel pipelines capable of rendering two texels per pass, a 200 MHz core clock, and 32 MB of DDR memory running at 333 MT/s, the GeForce 2 GTS dominated the competition. ATI introduced its Radeon 256 a few months later, but the GeForce 2 consistently beat it in 16-bit color mode, the standard of its day. It's notable that the Radeon sometimes showed an advantage in 32-bit color mode, but this was rarely used at the time due to performance implications, and the GeForce was widely regarded as the faster card. Nevertheless, it was the first time ATI stepped up to the plate as the primary competition for Nvidia. 3dfx's Voodoo5 5500, lacking hardware-accelerated T&L, was left in the dust. The company filed for bankruptcy soon after and Nvidia purchased its assets.
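For readers who want to put numbers on those specs, here is a rough sketch of how theoretical fill rate follows from pipeline count and core clock. It assumes each pipeline outputs one pixel per clock, which is a simplification on our part and not something real-world benchmarks would match; the function name `theoretical_fill_rates` is made up for this illustration.

```python
def theoretical_fill_rates(pipelines, texels_per_pipeline, core_mhz):
    """Return (megapixels/s, megatexels/s) as a peak, on-paper estimate.

    Assumption: every pipeline emits one pixel per clock cycle and
    applies `texels_per_pipeline` texels to it in the same pass.
    """
    megapixels = pipelines * core_mhz
    megatexels = megapixels * texels_per_pipeline
    return megapixels, megatexels


# GeForce 2 GTS figures quoted above: four pipelines, two texels each, 200 MHz.
gts_pixels, gts_texels = theoretical_fill_rates(4, 2, 200)
print(f"GeForce 2 GTS: {gts_pixels} Mpixels/s, {gts_texels} Mtexels/s")
```

With the figures quoted above, the sketch works out to 800 Mpixels/s and 1600 Mtexels/s. Treat these as upper bounds; memory bandwidth usually kept cards of this era well below their theoretical peaks.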

February 2001: Nvidia GeForce 3

The GeForce 3's introduction marked the beginning of the DirectX 8 generation and the use of programmable vertex and pixel shaders. The original GeForce 3 was equipped with 64 MB of 460 MHz DDR RAM and four pixel shaders running at 200 MHz. About six months after its release, ATI countered with the Radeon 8500. Despite a more advanced architecture (including features like hardware tessellation that wouldn't become popular until DirectX 11), ATI's card never claimed the performance crown from Nvidia, which stayed on top via aggressive driver development and product updates (like the GeForce 3 Ti 500).

February 2002: Nvidia GeForce 4 Ti 4600

Nvidia improved DirectX 8 functionality and performance with its GeForce 4. Anti-aliasing also received attention. The GeForce 4 Ti 4600 was the line's flagship, sporting a 300 MHz core and 128 MB of RAM at 650 MHz. It's interesting to note that the GeForce 4 Ti 4800 was simply a GeForce 4 Ti 4600 with AGP 8x support. The GeForce 4 line enjoyed a six-month reign of unchallenged supremacy until the arrival of ATI's R300 GPU, though Matrox's Parhelia provided an interesting distraction at the time.

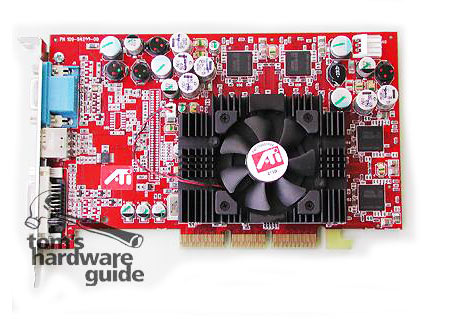

August 2002: ATI Radeon 9700 Pro

Nvidia's iron grip on the performance crown was broken by ATI's R300, the first DirectX 9 GPU. With eight pixel pipelines in a 325 MHz GPU and 128 MB of 620 MT/s DDR memory, the Radeon 9700 Pro was a real beast. Not only did the card beat the GeForce 4 Ti 4800, but its Radeon 9800 Pro successor surpassed Nvidia's GeForce FX 5800 Ultra (and its notoriously loud cooler) a year later. Nvidia fixed a lot of the 5800's problems with the GeForce FX 5900 Ultra, but ATI continued defending its title with the Radeon 9800 XT, essentially an overclocked Radeon 9800 Pro. From then on, ATI was established as a serious contender in the high-end 3D graphics market, and an epic rivalry was born.

JackMomma: Never thought I'd be proud of my ol' 295GTX as I am now... Anyway, it's time to let her cool off again.

jimmysmitty: One thing, you part with the 3870 X2 states it had a combined 160 Pixel Shaders but a single 3870 had 320 Stream processors, same as the HD2900, and a 3870 X2 had 640 Stream processing units.

If anything, the 3870 X2 had 128 SP units that acted as 5 SP units giving it 640.

Other than that, nice article. I remember my 9700 Pro. Too bad the memory died out on it. But it was ok. I got a 9800XT to replace it.

buzznut: Its amazing how many of these I've owned. Voodoo II, Voodoo III, GeForce 4ti, Riva TNT, Radeon 9800, TNT 2 Ultra.. Course now that I'm married I can't spend money on things like the latest video card. :(

utengineer: Still running GTX 280's in SLI. I don't get DX11, but I still play every game out there with no problem. 3 years and still going strong. Great investment.

cangelini (in reply to jimmysmitty): Absolutely right. Don must have owned a "special" 3870 X2. I've updated the story to reflect the X2s everyone else owned ;)

toxxel: Still have my MSI Geforce 4 TI 4200 128mb DDR card, no real use for it now a days so it just lays in a box with the rest of my outdated stuff.