P67, X58, And NF200: The Best Platform For CrossFire And SLI

Intel’s flagship X58 chipset supports three-way SLI and triple-channel RAM, while its fastest gaming processor is stuck with a far less-capable P67 platform. With multiple GPU installations hanging in the balance, which solution should you choose?

Force Versus Finesse

Tom's Hardware's Three-Part, 3-Way Graphics Scaling Series

Part 1, The Cards: Triple-GPU Scaling: AMD CrossFire Vs. Nvidia SLI

Part 2, The Slots: GeForce And Radeon On Intel's P67: PCIe Scaling Explored

Part 3, The Chipsets: P67, X58, And NF200: The Best Platform For CrossFire And SLI

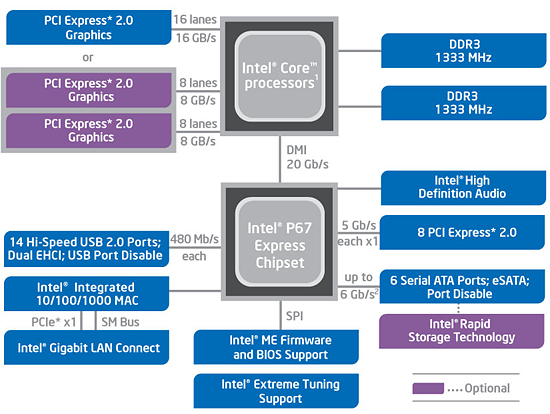

The advantages and shortcomings of Intel’s mainstream platforms are well-known to anyone who follows technology. A total of sixteen PCIe 2.0 lanes originating from the CPU reduce latency (good) and total available bandwidth (bad) compared to Intel’s high-end X58 chipset.
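To put the bandwidth trade-off in numbers, here is a rough sketch of peak PCIe 2.0 link bandwidth by lane count. It assumes the standard PCIe 2.0 signaling rate of 5 GT/s per lane with 8b/10b encoding. The function name `link_bandwidth_gb_s` is only illustrative, and the figures are theoretical peaks, not measured throughput.

```python
# PCIe 2.0: 5 GT/s per lane, 8b/10b encoding (8 payload bits per 10 bits sent).
TRANSFERS_MT_S = 5000                            # mega-transfers per second, per lane
PAYLOAD_MBIT_S = TRANSFERS_MT_S * 8 // 10        # 4000 Mbit/s of payload per lane
PAYLOAD_MB_S = PAYLOAD_MBIT_S // 8               # 500 MB/s per lane, per direction


def link_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical peak bandwidth in GB/s, one direction, for a PCIe 2.0 link."""
    return lanes * PAYLOAD_MB_S / 1000


for lanes in (16, 8, 4):
    print(f"x{lanes}: {link_bandwidth_gb_s(lanes):.1f} GB/s per direction")
```

Splitting the CPU's sixteen lanes across two cards therefore halves the theoretical peak available to each card, from 8 GB/s to 4 GB/s per direction.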

Fortunately, an unlocked multiplier on the K-series processors makes overclocking a piece of cake. On the other hand, the CPU supports only two graphics cards in SLI configurations. That's actually a limitation imposed by Nvidia. Technically, P67 enables the processor's 16 lanes and trio of PCIe controllers.

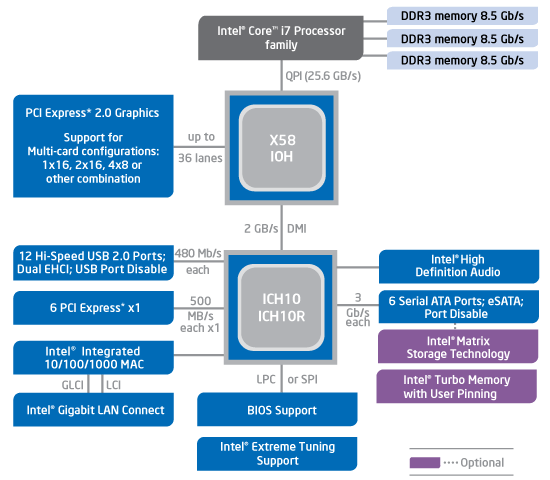

Artificial roadblocks aside, those limitations allow Intel’s high-end X58 chipset to remain a top choice for extreme enthusiasts, given 36 PCIe 2.0 lanes supporting up to four graphics cards in x8 arrangements with four lanes to spare. Too bad unlocked multipliers for that platform are limited to very expensive Extreme Edition CPUs. Even so, overclocking via the base clock gives less expensive processors access to faster interface speeds. And of course, there's the benefit of a triple-channel memory controller, providing up to 50% more bandwidth than any of Intel’s mainstream solutions (even if the advantage is largely academic).
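The lane and memory-channel arithmetic above can be checked with a short sketch. The helper names `spare_lanes` and `channel_gain` are hypothetical, and the 50% figure assumes identical per-channel bandwidth on both platforms.

```python
def spare_lanes(total_lanes: int, cards: int, lanes_per_card: int) -> int:
    """Lanes left over after giving each graphics card a fixed-width link."""
    needed = cards * lanes_per_card
    if needed > total_lanes:
        raise ValueError(f"{needed} lanes needed, only {total_lanes} available")
    return total_lanes - needed


def channel_gain(channels: int, baseline_channels: int) -> float:
    """Fractional peak-bandwidth gain, assuming equal per-channel bandwidth."""
    return (channels - baseline_channels) / baseline_channels


# X58: 36 lanes, four cards at x8 each.
print(spare_lanes(36, 4, 8))
# Sandy Bridge CPU: 16 lanes, two cards at x8 each.
print(spare_lanes(16, 2, 8))
# Triple-channel versus dual-channel memory.
print(f"{channel_gain(3, 2):.0%}")
```

Asking for a third x8 card on the 16-lane budget raises `ValueError`, which is the kind of shortfall a lane-multiplying bridge such as the NF200 is meant to address.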

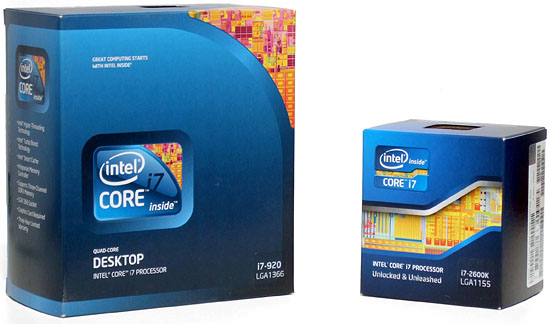

Part one of this three-part series answered questions about multiple-GPU scaling, while part two addressed PCIe bandwidth needs for a single card. Tying it all together, today we’re going to determine whether a 32-lane (or greater) PCIe controller is really a requirement for dual- and triple-GPU arrays, whether triple-channel memory and twice the base clock can help a 4 GHz Core i7 CPU based on the Bloomfield design (an overclocked Core i7-920) overcome the architectural advancements of a 4 GHz Core i7 processor based on Sandy Bridge (an overclocked Core i7-2600K), and how much of a difference Nvidia’s lane-multiplying NF200 PCIe bridge makes when 16 or 32 lanes aren’t enough.

Any comparison between slightly different platforms is sure to lead proponents of one side to scream bias against the other. These parts were carefully chosen to make this a fair fight, though.

For example, fans of the LGA 1155 interface will point out that by having triple-channel memory, X58 Express also has 50% greater memory capacity, so long as the modules are identical. But we'd argue that triple-channel (and the extra memory) is simply a missing feature from the closer-to-mainstream platform. Anyone standing up for their LGA 1366-based board will point out that the Core i7-990X is age-appropriate for this comparison, yet we’ve found that ultra-expensive six-core CPUs offer no performance advantage in games. While a different Bloomfield model might have allowed a closer price match, we would have still picked 20 x 200 MHz (Bloomfield) vs 40 x 100 MHz (Sandy Bridge) clock settings to squeeze the greatest performance from both processors at the resulting 4 GHz comparison frequency.
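As a quick check on the clock settings, core frequency is simply the multiplier times the base clock. The sketch below uses the two settings quoted above; the function name `core_clock_mhz` is only illustrative.

```python
def core_clock_mhz(multiplier: int, base_clock_mhz: int) -> int:
    """Core frequency in MHz: CPU multiplier times base clock."""
    return multiplier * base_clock_mhz


# Settings quoted in the article for the two test processors.
settings = {
    "Bloomfield (Core i7-920)": (20, 200),
    "Sandy Bridge (Core i7-2600K)": (40, 100),
}

for name, (multiplier, base_clock) in settings.items():
    mhz = core_clock_mhz(multiplier, base_clock)
    print(f"{name}: {multiplier} x {base_clock} MHz = {mhz / 1000:.1f} GHz")
```

Both settings land on the same 4 GHz core clock, but the Bloomfield configuration gets there with twice the base clock.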

jsowoc: Very nice, thorough analysis. It is articles like these that keep me reading Tom's Hardware.

aznguy0028: Great job on this article, Tom's. Here's another article comparing X58 and LGA 1155, using the 990X and 2500K/2600K:

http://www.xbitlabs.com/articles/cpu/display/core-i7-2600k-990x.html

Same conclusions: Sandy Bridge blows LGA 1366 out of the water when it comes to gaming. Good read, too :)

jprahman: LGA1366 is officially dead, at least for gaming. Ever since Sandy Bridge was released (honestly, ever since LGA1156), the only justification for people buying LGA1366 systems was the notion that somehow LGA1155 (or LGA1156) would "bottleneck" SLI or Crossfire, which this clearly shows to be false. The only point of LGA1366 now is workstation builds that need 6 cores, or $3000+ bragging-rights builds with quad-SLI/quad-Crossfire. Even then, anyone in the market for such systems knows that LGA2011 is still going to come out later this year and will want to wait for that instead of buying into LGA1366.

amk09: There will be an astronomical number of people who will be butthurt after seeing the conclusion.

gracefully: Part 4 should be a triple-monitor, triple-GPU scaling test. Let's see what happens when the bottleneck is returned to the GPU. Then we can raise the clocks of the processors to lessen the chance of CPU bottlenecking.

BarackMcBush: With the biggest difference being 10% and the average only being 2%, I hardly see "LGA1366 is officially dead, at least for gaming." This is a great article, but LGA1366 is still a very fast platform.

BarackMcBush: P67 definitely wins on price/performance. You can get a 2600K, a nice motherboard, and RAM for the same price as just the i7-990X CPU!

rolli59: Great article, but I come to a different conclusion. If your plan is to build a rig with 1 or 2 GPUs, then there is no reason to spend extra money on an NF200-equipped board; the performance difference between 2 cards at x16/x16 and x8/x8 is next to nothing. A 3-GPU setup is where the NF200 comes in and is worth the expense.

andrewcutter: Thank you for the review. However, I do think that you made a decision without taking all situations into account. It is clear that for one monitor what you said is true. However, will you be doing the same tests on a multi-monitor setup of, say, three 1200 monitors, with settings cranked high to put these dual cards under severe stress, to see if the same holds true? I feel this is a major part that you haven't looked at. If you are going to do this, then I take back my word and will wait eagerly for that article. In case you are not planning to, please consider doing it.