Intel 10th Gen Comet Lake Processor as Fast as AMD Ryzen 7 3800X? Early Benchmarks Point to Twice the Power Draw

There are already leaked benchmarks for Intel's upcoming Core i7-10700K and Core i7-10700F Comet Lake desktop CPUs, so it's only fair that the unreleased Core i7-10700 and Core i7-10700KF Comet Lake chips get some of the spotlight too.

The Core 10000-series (codename Comet Lake) isn't out yet, so these specifications should be taken with a grain of salt. While based on the Comet Lake microarchitecture, these new chips are still fabricated on Intel's 14nm process node.


The i7 models are believed to come with eight cores, 16 threads and 16MB of L3 cache. If the rumors are true, Intel will likely market Comet Lake at three different tiers: Enthusiast (125W), Mainstream (65W) and Low Power (35W). As usual, we expect different variants of the same SKUs.

The i7-10700KF is rumored to feature a 3.8 GHz base clock and 5.1 GHz boost clock. However, findings posted this week by well-known hardware leaker @TUM_APISAK showed the octa-core chip allegedly boosting as high as 5.3 GHz. If true, this would probably be due to Intel's Thermal Velocity Boost (TVB) feature, which basically acts as a turbo on top of the turbo. The boost clock comes as a surprise, as an earlier leak of an alleged Intel PowerPoint slide for the F-series suggested that the Core i7 wouldn't benefit from TVB.
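TVB's "turbo on top of the turbo" behavior can be pictured as a temperature-gated extra boost bin. The sketch below is hypothetical: the 0.2 GHz bonus is only inferred from the gap between the rumored 5.1 GHz boost and the reported 5.3 GHz reading, and the 70°C threshold is an assumed placeholder, not a confirmed Comet Lake value. The `tvb_supported` flag stands in for the earlier slide's suggestion that the Core i7 wouldn't get TVB.

```python
# Hypothetical model of a temperature-gated boost bin stacked on max turbo.
# Both constants are assumptions for illustration, not Intel specifications.
TVB_BONUS_GHZ = 0.2      # assumed: inferred from the 5.1 -> 5.3 GHz leak
TVB_TEMP_LIMIT_C = 70.0  # assumed threshold


def peak_clock_ghz(max_turbo_ghz, package_temp_c, tvb_supported=True):
    """Return the opportunistic peak clock under the assumed TVB rule."""
    if tvb_supported and package_temp_c < TVB_TEMP_LIMIT_C:
        # Cool enough: the extra bin applies on top of the normal max turbo.
        return round(max_turbo_ghz + TVB_BONUS_GHZ, 2)
    # Too hot, or TVB not offered on this SKU: regular max turbo only.
    return max_turbo_ghz


print(peak_clock_ghz(5.1, 55.0))                       # cool chip
print(peak_clock_ghz(5.1, 85.0))                       # hot chip
print(peak_clock_ghz(5.1, 55.0, tvb_supported=False))  # no TVB on this SKU
```

Under this model, a 5.3 GHz reading is only possible when the chip both supports TVB and runs cool, which is why the leak conflicts with the earlier slide.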

Intel 10th Gen Comet Lake Desktop CPU Specs*

| Model | Part Number | Cores / Threads | Base / Boost Clock (GHz) | L3 Cache (MB) | TDP (W) |
|---|---|---|---|---|---|
| Intel Core i7-10700KF | BX8070110700KF | 8 / 16 | 3.8 / 5.1 | 16 | 125 |
| Intel Core i7-10700K | BX8070110700K | 8 / 16 | 3.8 / 5.1 | 16 | 125 |
| Intel Core i7-10700 | BX8070110700 | 8 / 16 | 2.9 / 4.7 | 16 | 65 |
| Intel Core i7-10700F | BX8070110700F | 8 / 16 | 2.9 / 4.7 | 16 | 65 |
| Intel Core i7-10700T | BX8070110700T | 8 / 16 | 2.0 / 4.4 | 16 | 35 |

*Specifications are unconfirmed.

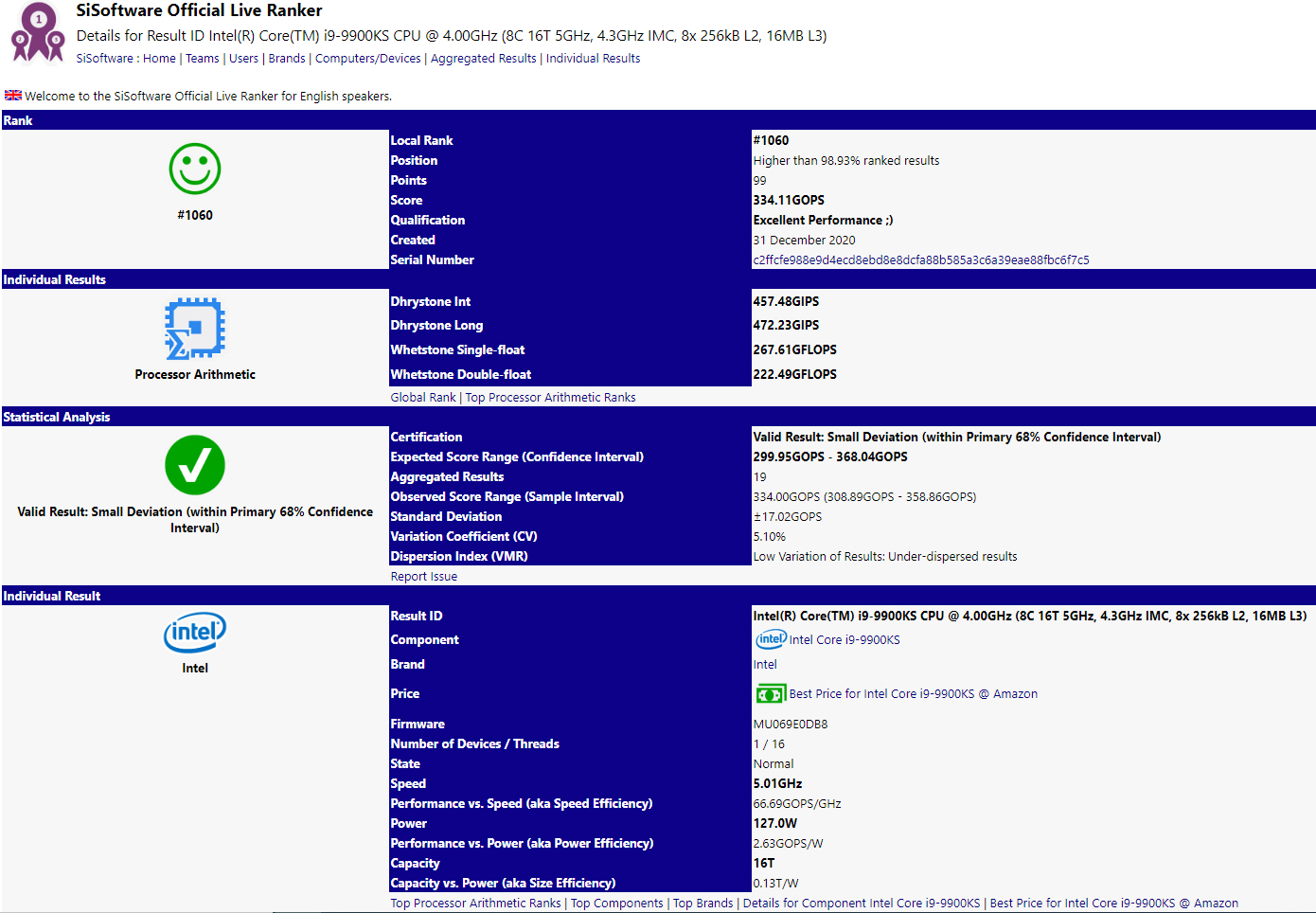

The i7-10700KF is expected to be an i7-10700K without the integrated graphics. In terms of TDP (thermal design power), the two chips are probably rated for 125W, which is slightly below the current-gen Core i9-9900KS's 127W TDP.

The Ryzen 7 3800X has a 105W TDP and complies with that rating, according to testing in our Ryzen 7 3800X review. However, Intel's TDP criteria are a bit different. Intel might market the i7-10700KF with a 125W TDP even if it only adheres to that when running at base clock speeds, under the long-duration power limit (PL1). In reality, the chip's peak power consumption would probably be far greater when operating at the boost clock speed, which is governed by the short-duration power limit (PL2).
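The PL1/PL2 distinction can be modeled roughly as follows. This is a simplified sketch, assuming Intel-style behavior in which the chip may draw up to PL2 while an exponentially weighted moving average of package power (with a time constant often called tau) stays below PL1, and is clamped to PL1 once the average catches up. The 125W PL1 matches the rumored TDP; the 250W PL2, the 56-second tau and the function name `simulate_package_power` are hypothetical choices for illustration, not confirmed Comet Lake specs.

```python
import math


def simulate_package_power(demand_w, pl1_w, pl2_w, tau_s, dt_s=1.0):
    """Return the package power (W) drawn at each time step.

    demand_w: power the workload would draw each step if unrestricted.
    Assumed model: draw up to PL2 while the moving average of package
    power is below PL1; once the average reaches PL1, clamp to PL1.
    """
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # per-step moving-average weight
    avg_w = 0.0  # running average, starting from an idle chip
    drawn = []
    for want_w in demand_w:
        limit_w = pl2_w if avg_w < pl1_w else pl1_w
        power_w = min(want_w, limit_w)
        avg_w += alpha * (power_w - avg_w)  # update the moving average
        drawn.append(power_w)
    return drawn


# Hypothetical numbers: 125 W PL1 (rumored TDP), 250 W PL2, 56 s tau, and a
# two-minute all-core load that would draw 250 W if left unrestricted.
trace = simulate_package_power([250.0] * 120, pl1_w=125.0, pl2_w=250.0, tau_s=56.0)
boost_seconds = sum(1 for p in trace if p > 125.0)
print(f"Ran above PL1 for {boost_seconds} s, then settled at {trace[-1]:.0f} W")
```

With these assumed values the chip runs at 250W for about 39 seconds (tau × ln 2, because PL2 is exactly twice PL1) before dropping to 125W. Setting PL1 equal to PL2 in this model, which is roughly what removing power limits amounts to, keeps the chip at 250W indefinitely.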


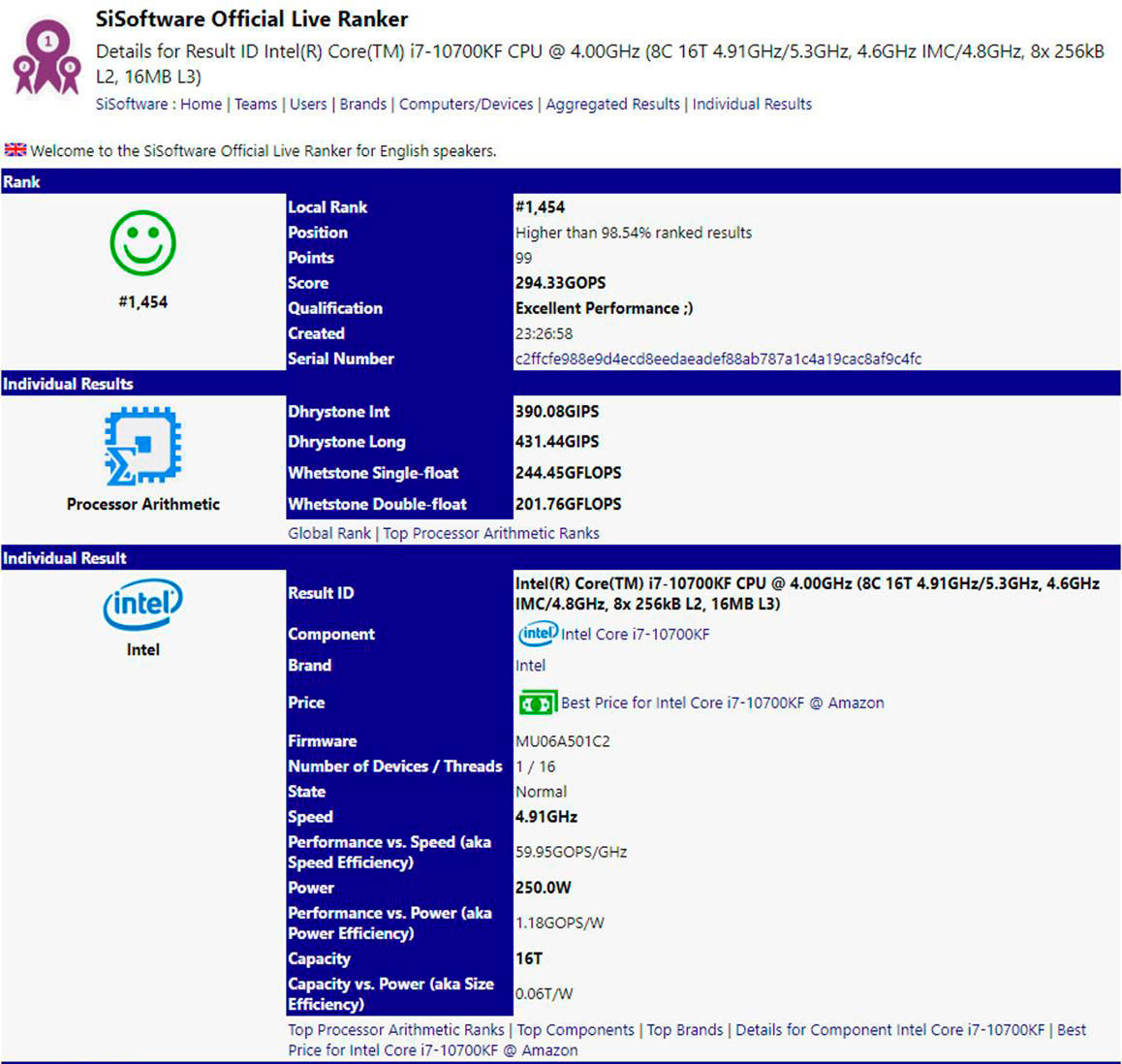

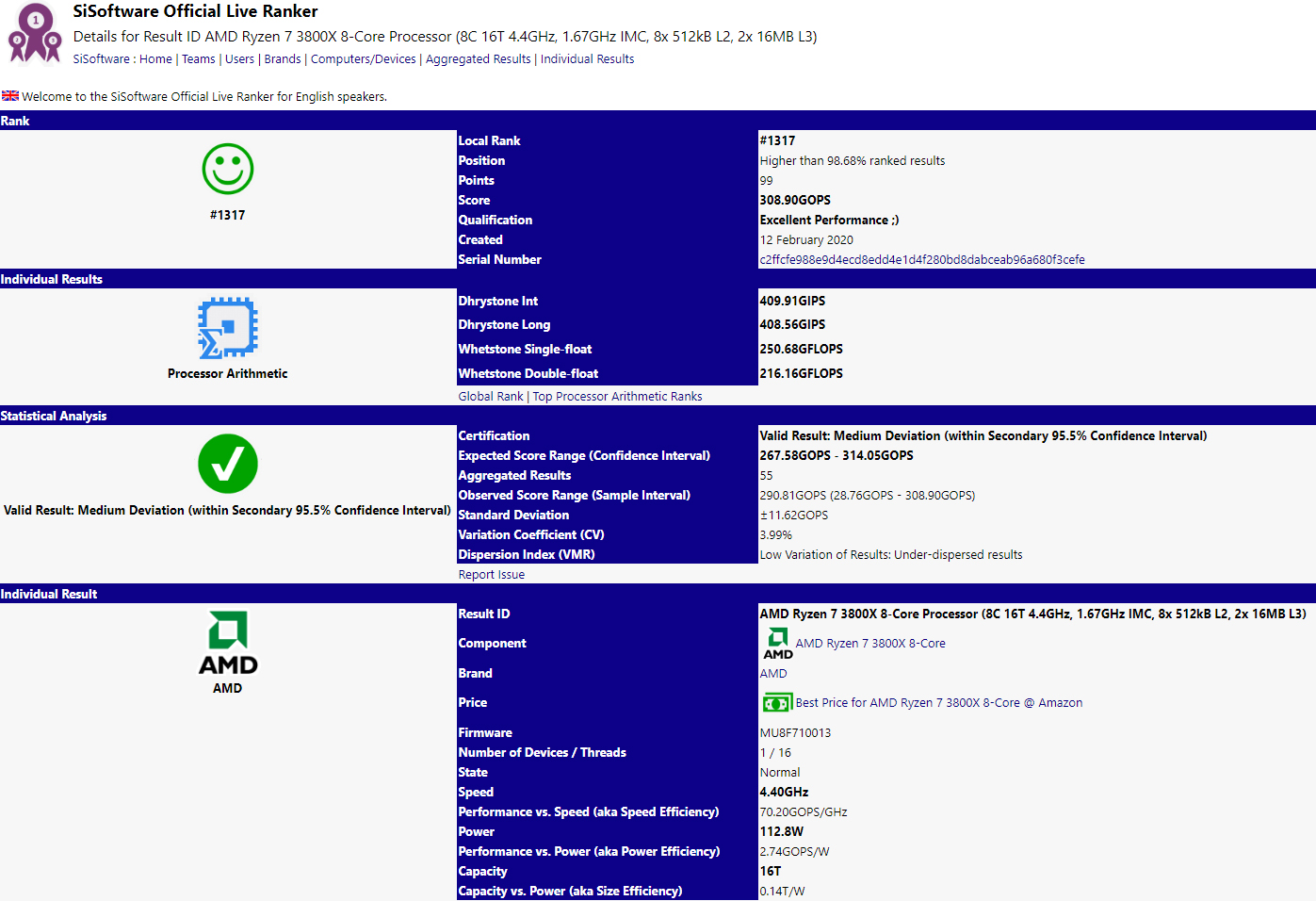

If the posted benchmarks are anything to go by, the i7-10700KF's performance will be on the same level as the Ryzen 7 3800X. Intel's chip reportedly put up a score of 294.33 GOPS, while the Ryzen 7 3800X scores up to 308.90 GOPS. However, the i7-10700KF allegedly draws considerably more power.

The Ryzen 7 3800X delivers an efficiency of 2.74 GOPS/W, while the i7-10700KF seemingly offers a disappointing 1.18 GOPS/W. That puts the i7-10700KF's peak power consumption at around 250W in this specific benchmark, a workload that draws considerably more power than most standard applications. So although the i7-10700KF's performance would be on par with the Ryzen 7 3800X's, it would pull more than twice the power of its competitor.
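The power figures aren't reported directly; they follow from dividing each chip's score by its efficiency (GOPS ÷ GOPS/W = W). The quick check below uses only the leaked numbers quoted above; the dictionary and the helper name `implied_power_w` are illustrative, and the resulting wattages are back-of-the-envelope estimates rather than measurements.

```python
# Leaked benchmark scores and efficiency figures quoted in the article.
LEAKED = {
    "Core i7-10700KF": {"score_gops": 294.33, "eff_gops_per_w": 1.18},
    "Ryzen 7 3800X": {"score_gops": 308.90, "eff_gops_per_w": 2.74},
}


def implied_power_w(score_gops, eff_gops_per_w):
    """Estimate power draw in watts: GOPS divided by GOPS per watt."""
    return score_gops / eff_gops_per_w


for name, figures in LEAKED.items():
    print(f"{name}: ~{implied_power_w(**figures):.0f} W")

# How many times more power the Intel chip draws for similar performance.
ratio = implied_power_w(**LEAKED["Core i7-10700KF"]) / implied_power_w(**LEAKED["Ryzen 7 3800X"])
print(f"Power ratio: {ratio:.2f}x")
```

This works out to roughly 249W for the i7-10700KF and 113W for the Ryzen 7 3800X, or about 2.2 times the power for a slightly lower score.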

Meanwhile, the i7-10700 and the i7-10700F should be the 65W variants of the i7-10700K and i7-10700KF, respectively. @TUM_APISAK recently discovered the i7-10700 listed with a 2.9 GHz base clock and 4.7 GHz boost clock.

The i7-10700T should be the only octa-core Comet Lake chip to compete in the 35W category. Last year's big Comet Lake leak claims that the i7-10700T rolls with a 2 GHz base clock and 4.4 GHz boost clock.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

jgraham11

Has anyone looked at Tom's Hardware's little minute-and-a-half video on choosing the right CPU?

1. "As long as it's current gen, the brand difference is a wash"

a) Tom's doesn't recognize the problem with all the Intel CPU bugs, many of which are not patched; some fixes have been tried and failed.

Intel recommends you disable Hyper-Threading if you care about security.

b) Tom's doesn't recognize the "double the power for the same work" thing.

c) Tom's doesn't recognize that AMD CPUs are 95% of what Intel CPUs are for gaming while offering more than double the performance for everything else at almost every price point.

2. "Clock speed is more important than core count"

a) Coincidentally, that statement favours Intel CPUs.

b) When a CPU does more per clock cycle, that's not the case.

c) AMD CPU cores are better: better IPC, better L1 cache, etc.

d) Intel's ring bus latency is the only thing keeping AMD behind in gaming, not clock speed.

The last 3 actually seem legitimate...

Tom's: why don't you just come right out with it: "We support Intel"

Just buy it!

derekullo

Obvious trolling but I'll play along.

1a. Tom's has numerous articles on Intel CPU bugs:

https://www.tomshardware.com/news/intel-reveals-taa-vulnerabilities-in-cascade-lake-chips-and-a-new-jcc-bug

https://www.tomshardware.com/features/intel-amd-most-secure-processors

AMD wins 5 out of 6 of the safety tests.

1b. This article and numerous other Tom's articles mention that Intel's 14nm is not as power efficient as AMD's 7nm.

"The Ryzen 7 3800X has a 105W TDP and complies with the rating, according to our testing from our Ryzen 7 3800X review. However, Intel's TDP criteria is a bit different. Intel might market the i7-10700KF with a 125W TDP even if it only adheres to that when running at base clock speeds. In reality, the chip's peak power consumption would probably be far greater when operating at the boost clock speed. "

1c. Tom's benchmarks show exactly what you are saying for most games, with Far Cry 5 and a few others being Intel-dominated.

2a. For the most part, games favor clock speed, but it all depends on how a game is coded. As above, Far Cry 5 does appear to favor clock speed.

Having said that, most modern games are coded for at least 4 threads, so you need 4 threads at a bare minimum for decent performance.

2b. Architecture does matter, but at the end of the day, real-world benchmarks are how we judge how successful an architecture is.

2c. Can't argue/explain opinion.

2d. Intel does need a solution for this.

"Intel is starting to reach the core-count barrier beyond which the ringbus has to be junked in favor of Mesh Interconnect tiles, or it will suffer the detrimental effects of ringbus latencies. "

https://www.techpowerup.com/review/intel-core-i9-9900k/3.html

I don't have a bias for Intel or AMD.

I choose the one that has the best performance per dollar for the games and other software I use.

alextheblue

PCWarrior said: a higher TDP allows for higher sustained turbo frequencies when you strictly adhere to Intel’s spec (i.e. with power limits enforced). That is not something that the vast majority of reviews (including Tom’s Hardware own reviews) on the 9900K/9900KS did however. So, the power consumption figures for the 9900K/9900KS that we have are with power limits removed (hence and the reported violation of the TDP for the 9900K/9900KS).

Sorry, but Intel's "spec" is completely worthless. They should print it on toilet paper. Why? Intel doesn't actually bother enforcing it at a chip level. They tell the board manufacturers "OK this is what the spec is, now do whatever you want". So you can take a STOCK board, slap in the latest Intel i7 Core+++ KSFTW 10777, and change NO SETTINGS... and it will run roughshod over these supposed Intel specs, again WITHOUT overclocking or touching ANYTHING. Meanwhile AMD chips behave as expected regardless of board... until the user intervenes and changes something.

Intel absolutely could override the board settings and stay within spec, at a chip level, until the user enables auto or manual OC. They could absolutely force board manufacturers to default to those settings. They do not... as a result, blaming reviewers who "don't adhere to Intel's spec" is completely ridiculous. They would literally have to change FROM stock settings TO Intel "Spec" by detuning. Their results would no longer reflect what end-users experience.

Anyway, not all loads hammer these processors the same. You conveniently ignored the fact that they were supposing a peak power of 250W. That requires a high-end board, cooling, and a taxing load with AVX like you'd get with Prime95. With that being said, they are likely to pick up 10-20W over the previous gen flagships even in more typical heavy workloads.

mdd1963

250W?

I'd be highly skeptical of that, short of it actually drawing that much at perhaps 5.2-5.3 GHz on all cores... then, it might very well be accurate.

usiname

PCWarrior said: (4) Since the 10700K has (slightly) improved silicon compared to the 9900K, it should be expected to have better power efficiency (closer to a highly binned 9900K i.e. a 9900KS). It should also have better thermals as the die it is based on is that of the 10-core 10900K which is larger than that of the 9900K and therefore heat is transferred away through a larger area. And better thermals help with power consumption as lower temps means lower resistive transistor power losses.

9600K - 149 mm²

9700K - 174 mm²

Try again.

Olle P

alextheblue said: ... Intel's "spec" is completely worthless. ... Intel doesn't actually bother enforcing it at a chip level. They tell the board manufacturers "OK this is what the spec is, now do whatever you want". So you can take a STOCK board, slap in the latest Intel i7 Core+++ KSFTW 10777, ... and it will run roughshod over these supposed Intel specs, ...

I couldn't agree more, but with one caveat: it does depend on what motherboard is used.

It would be nice to see some benchmarks of the higher-end Intel CPUs on B360 motherboards.

mitch074

mdd1963 said: 250W?

I'd be highly skeptical of that, short of it actually drawing that much at perhaps 5.2-5.3 GHz on all cores... then, it might very well be accurate.

I wouldn't be surprised if it actually peaked at 250W with default motherboard settings, as all mobo makers push Intel's Turbo Boost far past Intel's specs. A 5.2-5.3 GHz all-core overclock would probably pull more than that, since a 9900K OC'd to 5.0 GHz already pulls 250W, and both chips use the same process.

germanium

I have the Core i9-9900K, and it can draw as much as 250 watts but usually crashes at that point, even with water cooling. I have disabled Hyper-Threading and am running a 5.0GHz all-core boost. At these settings it can still draw up to 180 watts, but it can run this speed all day long without downclocking due to heat or power draw. This is due to my settings in the BIOS.

Most programs do not max out power at all, though I have seen Windows antivirus pull lots of power due to using AVX code; with Hyper-Threading disabled, it can still run at the full 5.0GHz. With Hyper-Threading turned on, I have to reduce the all-core speed to 4.9GHz for AVX code work in Windows antivirus. These are not stock settings.

TDP only applies to stock non-turbo speeds on these chips. On the older 4-core non-K chips, TDP did indeed cover all-core max turbo, but not on these 8-core chips, not even at the stock max all-core turbo frequency. Most programs without Hyper-Threading max out at less than 150 watts with my settings.

joeblowsmynose

mdd1963 said: 250W?

I'd be highly skeptical of that, short of it actually drawing that much at perhaps 5.2-5.3 GHz on all cores... then, it might very well be accurate.

Agreed, the 9900K can pull 250W under certain loads (Tom's review of the 9900K)... so when we take basically the identical processor and crank the clocks up higher, the consumption should go down, for sure.

Intel actually has two TDPs for their processors: the one they advertise is the TDP for base frequency (PL1), and the TDP for boost frequency on the 9900K (or maybe it was the KS?) was, I think, 250W (PL2, "Peak"). I actually saw an Intel document with these numbers on it, and I thought... "so Intel actually does have accurate TDP figures at each PL state... but they only advertise and share one of them." If they actually shared both, everyone would be impressed with the accuracy of their TDPs, because when you see both, the PL1 TDP that they advertise actually makes sense.

Anandtech noted this and had a similar sentiment back in 2018: https://www.anandtech.com/show/13544/why-intel-processors-draw-more-power-than-expected-tdp-turbo

I think most people buying Intel CPUs these days though have no intention of actually running any meaningful full load on them. Gaming generally doesn't peg CPUs much at all. So maybe it doesn't matter.

If a person is doing any serious multi-threaded heavy workloads, I think they would have moved to Ryzen by now, or will soon... or will wait another year for Intel to get their node size down...