First in-depth look at Elon Musk's 100,000 GPU AI cluster — xAI Colossus reveals its secrets

Now, witness the firepower of this fully armed and operational AI supercluster

Elon Musk's new expensive project, the xAI Colossus AI supercomputer, has been detailed for the first time. YouTuber ServeTheHome was granted access to the Supermicro servers within the 100,000 GPU beast, showing off several facets of the supercomputer. Musk's xAI Colossus supercluster has been online for almost two months, after a 122-day assembly.

What's Inside a 100,000 GPU Cluster

Patrick from ServeTheHome takes a camera around several parts of the server, providing a birds-eye view of its operations. The finer details of the supercomputer, like its power draw and pump sizes, could not be revealed under a non-disclosure agreement, and xAI blurred and censored parts of the video before its release. The most important things, like the Supermicro GPU servers, were left mostly intact in the footage above.

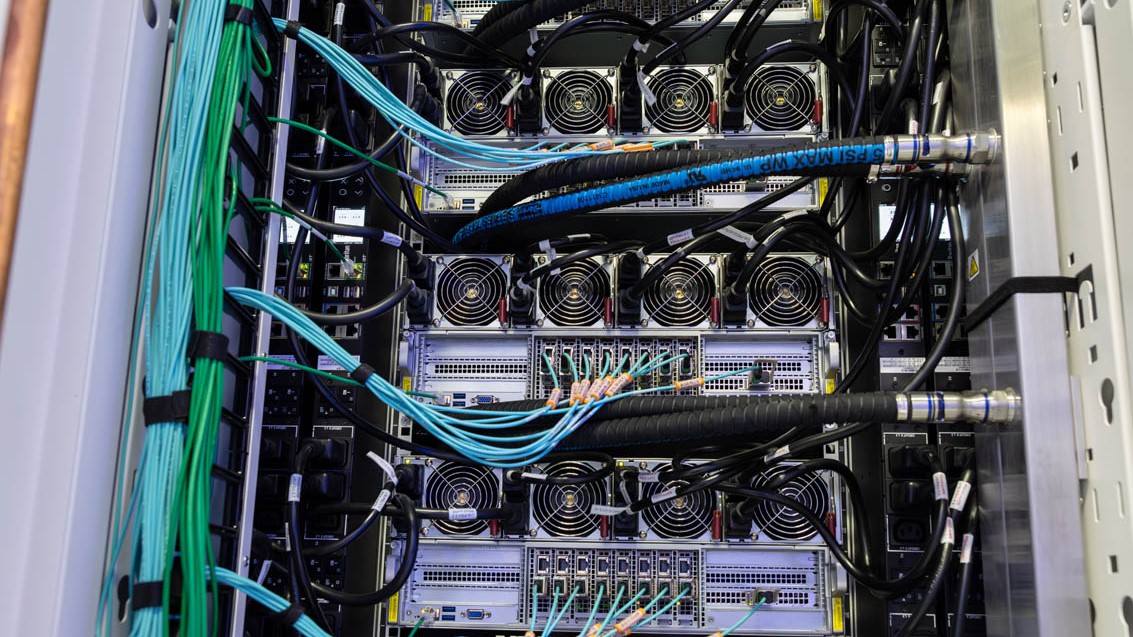

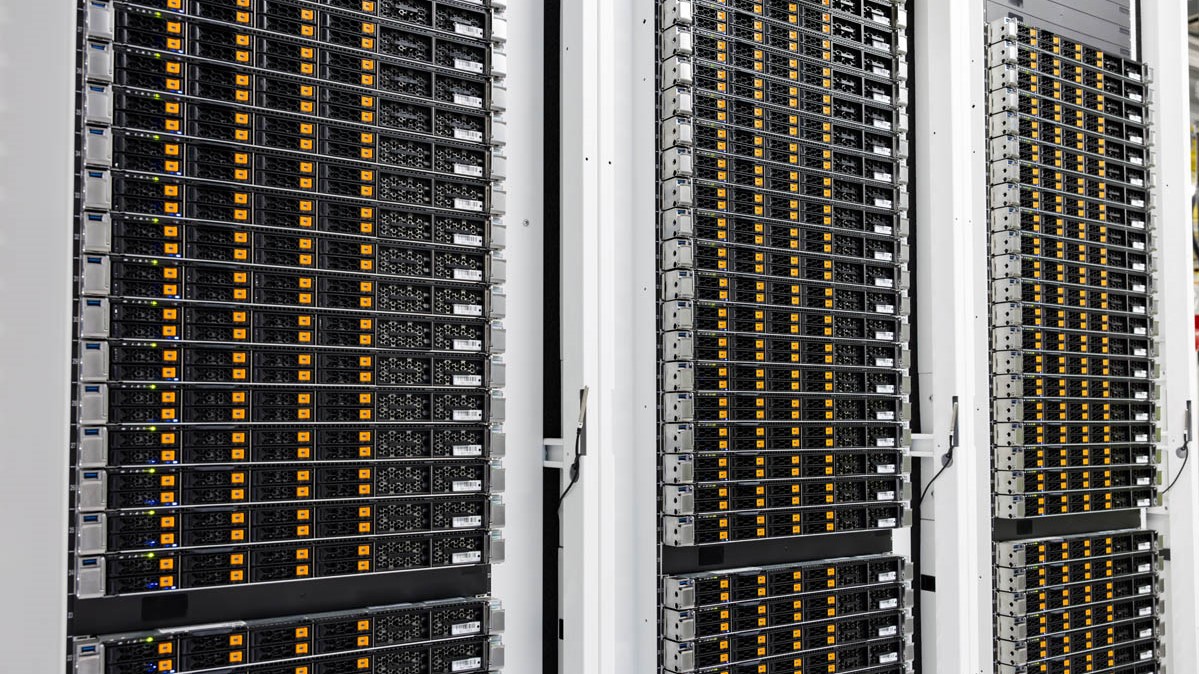

The GPU servers are Nvidia HGX H100s, a server solution containing eight H100 GPUs each. The HGX H100 platform is packaged inside Supermicro's 4U Universal GPU Liquid Cooled system, providing easy hot-swappable liquid cooling to each GPU. These servers are loaded inside racks which hold eight servers each, making 64 GPUs per rack. 1U manifolds are sandwiched between each HGX H100, providing the liquid cooling the servers need. At the bottom of each rack is another Supermicro 4U unit, this time with a redundant pump system and rack monitoring system.

These racks are paired in groups of eight, making 512 GPUs per array. Each server has four redundant power supplies, with the rear of the GPU racks revealing 3-phase power supplies, Ethernet switches, and a rack-sized manifold providing all of the liquid cooling. There are over 1,500 GPU racks within the Colossus cluster, or close to 200 arrays of racks. According to Nvidia CEO Jensen Huang, the GPUs for these 200 arrays were fully installed in only three weeks.

Because of the high-bandwidth requirements of an AI supercluster constantly training models, xAI went beyond overkill for its networking interconnectivity. Each graphics card has a dedicated NIC (network interface controller) at 400GbE, with an extra 400Gb NIC per server. This means that each HGX H100 server has 3.6 Terabit per second ethernet. And yes, the entire cluster runs on Ethernet, rather than InfiniBand or other exotic connections which are standard in the supercomputing space.

Of course, a supercomputer based on training AI models like the Grok 3 chatbot needs more than just GPUs to function. Details on the storage and CPU computer servers in Colossus are more restricted. From what we can see in Patrick's video and blog post, these servers are also mostly in Supermicro chassis. Waves of NVMe-forward 1U servers with some kind of x86 platform CPU inside hold either storage and CPU compute, also with rear-entry liquid cooling.

Outside, some heavily bundled banks of Tesla Megapack batteries are seen. The start-and-stop nature of the array with its milliseconds of latency between banks was too much for the power grid or Musk's diesel generators to handle, so some amount of Tesla Megapacks (holding up to 3.9 MWh each) are used as an energy buffer between the power grid and the supercomputer.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Colossus's Use, and Musk's Supercomputer Stable

The xAI Colossus supercomputer is currently, according to Nvidia, the largest AI supercomputer in the world. While many of the world's leading supercomputers are research bays usable by many contractors or academics for studying weather patterns, disease, or other difficult compute tasks, Colossus is solely responsible for training X's (formerly Twitter) various AI models. Primarily Grok 3, Elon's "anti-woke" chatbot only available to X Premium subscribers. ServeTheHome was also told that Colossus is training AI models "of the future"; models whose uses and abilities are supposedly beyond the powers of today's flagship AI.

Colossus's first phase of construction is complete and the cluster is fully online, but it's not all done. The Memphis supercomputer will soon be upgraded to double its GPU capacity, with 50,000 more H100 GPUs and 50,000 next-gen H200 GPUs. This will also more than double its power consumption, which is already too much for Musk's 14 diesel generators added to the site in July to handle. It also falls below Musk's promise of 300,000 H200s inside Colossus, though that may become phase 3 of upgrades.

The 50,000 GPU Cortex supercomputer in the "Giga Texas" Tesla plant is also under a Musk company. Cortex is devoted to training Tesla's self-driving AI tech through camera feed and image detection alone, as well as Tesla's autonomous robots and other AI projects. Tesla will also soon see the construction of the Dojo supercomputer in Buffalo, New York, a $500 million project coming soon. With industry speculators like Baidu CEO Robin Le predicting that 99% of AI companies will crumble when the bubble pops, it remains to be seen if Musk's record-breaking AI spending will backfire or pay off.

Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.

-

JRStern Wow, about as impressive as 100,000 plugs on a telephone operator switchboard from 1911.Reply -

EzzyB Elon's "anti-woke" chatbotReply

OK, we have this, and China's chatbot which has not been released because it's answers aren't yet "properly socialist."

You know these things aren't all that smart to start with. Do we really want to make them more stupid? -

williamcll Reply

These two are polar opposites on the political spectrum. Any untamed AI would just eat every troll post on the internet and begin spewing insane statements, look at what Bing Chat was like when it was released (or Microsoft's previous chatbot which became incredibly racist).EzzyB said:Elon's "anti-woke" chatbot

OK, we have this, and China's chatbot which has not been released because it's answers aren't yet "properly socialist."

You know these things aren't all that smart to start with. Do we really want to make them more stupid? -

gburke Reply

but can it play Crysis?Admin said:YouTuber ServeTheHome was granted an inside look at Supermicro's side of the xAI Colossus supercluster. The 100,000 GPU server is operational thanks to simple-to-deploy systems and thousands of dollars of networking.

First in-depth look at Elon Musk's 100,000 GPU AI cluster — xAI Colossus reveals its secrets : Read more -

oofdragon Aí is just a marketing scheme, this is just a machine spitting out whatever nonsense it was fedReply -

EzzyB Reply

That would seem to be be idea behind them wouldn't it? Control how they respond by how they are trained. So you have your Fox AI, your MSNBC AI, your Supreme Communist AI. Useless...williamcll said:These two are polar opposites on the political spectrum. Any untamed AI would just eat every troll post on the internet and begin spewing insane statements, look at what Bing Chat was like when it was released (or Microsoft's previous chatbot which became incredibly racist).