Everything Zen: AMD Presents New Microarchitecture At HotChips

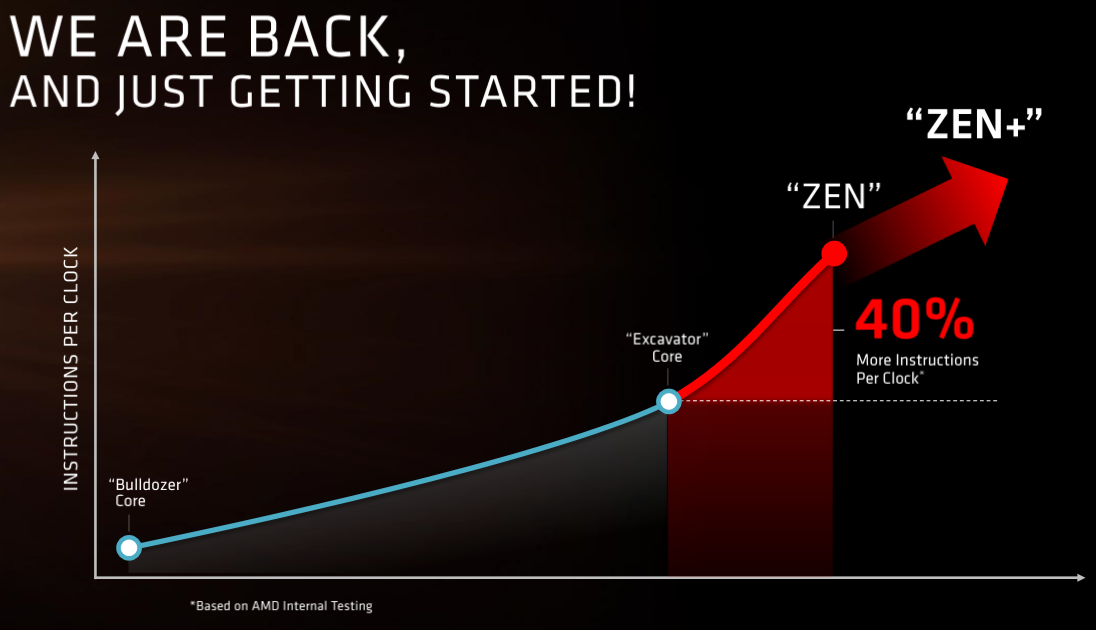

AMD timed its recent unveiling of the Zen microarchitecture and Bristol Ridge CPUs, held at an offsite location during IDF, perfectly to steal a bit of Intel's thunder at Intel's own show. AMD pulled the veil back on its newest chips, but it painted its achievements only in broad strokes, though it did throw in a Blender rendering benchmark that appeared to put Zen's IPC (instructions per clock) on par with Intel's beefy Broadwell-E Core i7-6900K. Either way, the picture was enough for investors and enthusiasts alike to catch AMD fever for the first time in years.

The rise of Zen brings the promise of a competitive AMD, but the true test lies in the virtues of silicon, and not the promises of a company. Frankly, on the CPU side of things, AMD has left many enthusiasts disappointed for quite some time, so there is justified skepticism on many fronts.

AMD dove further into the details of its core microarchitecture at the aptly named HotChips conference, which is a yearly industry event that attracts the best and brightest of the processor world. AMD disclosed more of the core engine details, but it is still holding some basic AM4 and SoC particulars close to the chest, such as memory channel configuration and PCIe support. If it’s any consolation, we had a meeting with AMD Fellow and lead Zen architect Michael Clark, and he informed us that the company would divulge more AM4 details soon. This makes sense, because OEM systems with Bristol Ridge, and thus AM4 motherboards, should hit the channel soon.

Sowing The Seeds Of Reduced Power Consumption

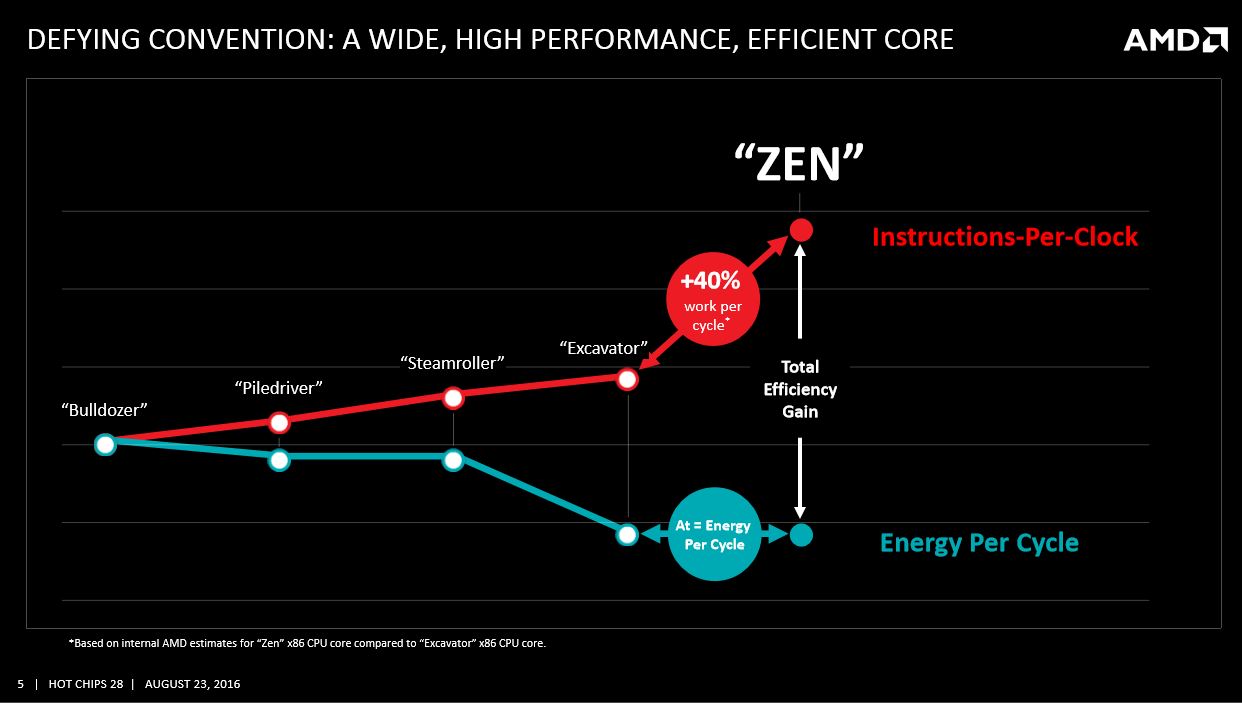

During his presentation, Clark's illuminating ruminations on the design process added color to the fundamental concepts behind the Zen architecture. Clark has serious chops; he's worked on every in-house x86 processor AMD has designed, and as the lead Zen architect, he had the pleasure of naming the chip. He chose "Zen" in homage to the delicate balance the company had to strike among a multitude of factors to provide a 40% increase in IPC while keeping power in check.

Clark indicated that moving away from AMD's stratified CPU microarchitecture playbook was a challenge. That playbook splits the lineup into separate high-power and low-power designs, whereas Zen is a single scalable architecture that can address the full computing spectrum. The company not only had to start with a clean sheet to achieve Feng Shui, but it also had to rethink its entire design process.

Designing a microarchitecture that can scale from low-powered fanless notebooks to the fastest supercomputers is fraught with challenges, but achieving a 40% boost in IPC at the same time is even more daunting. Defining a strict power consumption envelope only compounds the problem, so AMD shook up its design process and viewed every architectural decision, from its infancy, through the prism of power consumption.

In the past, AMD instituted power refinements later in the design process, but unfortunately, the optimizations occurred after it had already laid the bulk of the architectural foundation. It's always easier to fix the wiring before you've hung the sheetrock, and AMD found a similar problem with its previous design flow. Clark stated that his goal was to "place power on equal footing with frequency and performance," which allowed his team to address power challenges at the early stages of the design process, instead of just trying to tweak a design with existing (and fundamental) inefficiencies.


The power-first thinking isn't entirely new; the industry has been pounding the power drums for a decade. In fact, Intel had just presented a run-through of its power optimizations on its aging Skylake architecture (Speed Shift and the like) on the same stage. Intel has set the power efficiency bar very high, so the onus is on AMD to meet or exceed those expectations. For now, AMD has treated us to vague descriptions of a radical performance increase within the same power envelope as Excavator, but it has not released any hard data (such as TDP) to back up its claims. One could rationally assume that Intel's new process-architecture-optimization cadence gives it plenty of time to tweak its existing Skylake architecture into a more power-efficient Kaby Lake design, so the clock is ticking for AMD.

Of course, a 40% IPC increase in one generation is much more impressive than Intel's comparatively unimpressive steady trickle of improvements over several generations. However, the fact that a 40% increase only brings Zen on par with, or possibly slightly above, the Core i7-6900K (sprinkle grains of salt, liberally) serves to illuminate just how far behind AMD is.

Everyone loves the underdog, but only if they win. Let's take a closer look at the steps AMD has taken to regain its footing.

The Basics

The first stop on the road to reduced power consumption consisted of discarding the 28nm process that AMD employed with the Excavator/Steamroller microarchitectures and adopting GlobalFoundries’ 14nm FinFET process. The road to 14nm FinFET is well traveled; the company began its FinFET journey with the Polaris GPUs.

The 14nm FinFET process provides more performance within a similar power envelope, and the performance boost is actually the key to reducing overall power consumption. Accelerated performance speeds the “race to idle.” A higher IPC allows the CPU to satisfy workloads faster and thus shutter portions of the chip sooner. AMD uses a multi-level clock gating strategy that reduces the power consumption of subsections of the core when they aren’t busy. There is always a bit of a performance tradeoff associated with clock gating, and it can generate bugs as well, so striking the right balance is critical. Clark noted that his team worked hard to ensure that clock gating doesn’t affect the speed paths or critical areas of the chip.
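The race-to-idle argument is ultimately energy arithmetic: energy is power multiplied by time, so a core that draws more power while busy can still consume less energy if it finishes sooner and spends the rest of the interval clock gated. The Python sketch below illustrates that trade-off; the wattages and run times are hypothetical placeholders, not AMD or Intel figures.

```python
def energy_joules(active_watts, active_seconds, idle_watts, window_seconds):
    """Energy used over a fixed time window: busy for active_seconds, idle after."""
    if active_seconds > window_seconds:
        raise ValueError("the work does not fit inside the window")
    idle_seconds = window_seconds - active_seconds
    return active_watts * active_seconds + idle_watts * idle_seconds


# Hypothetical figures for illustration only; these are not AMD or Intel data.
WINDOW_S = 1.0   # the same amount of work must finish within one second
IDLE_W = 0.5     # power drawn once idle units are clock gated

slower = energy_joules(active_watts=10.0, active_seconds=0.8,
                       idle_watts=IDLE_W, window_seconds=WINDOW_S)
faster = energy_joules(active_watts=12.0, active_seconds=0.5,
                       idle_watts=IDLE_W, window_seconds=WINDOW_S)
print(f"slower core: {slower:.2f} J, faster core: {faster:.2f} J")
# slower core: 8.10 J, faster core: 6.25 J
```

The faster core burns more power while active, but the shorter active period plus a longer stretch at idle power leaves it ahead on total energy.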

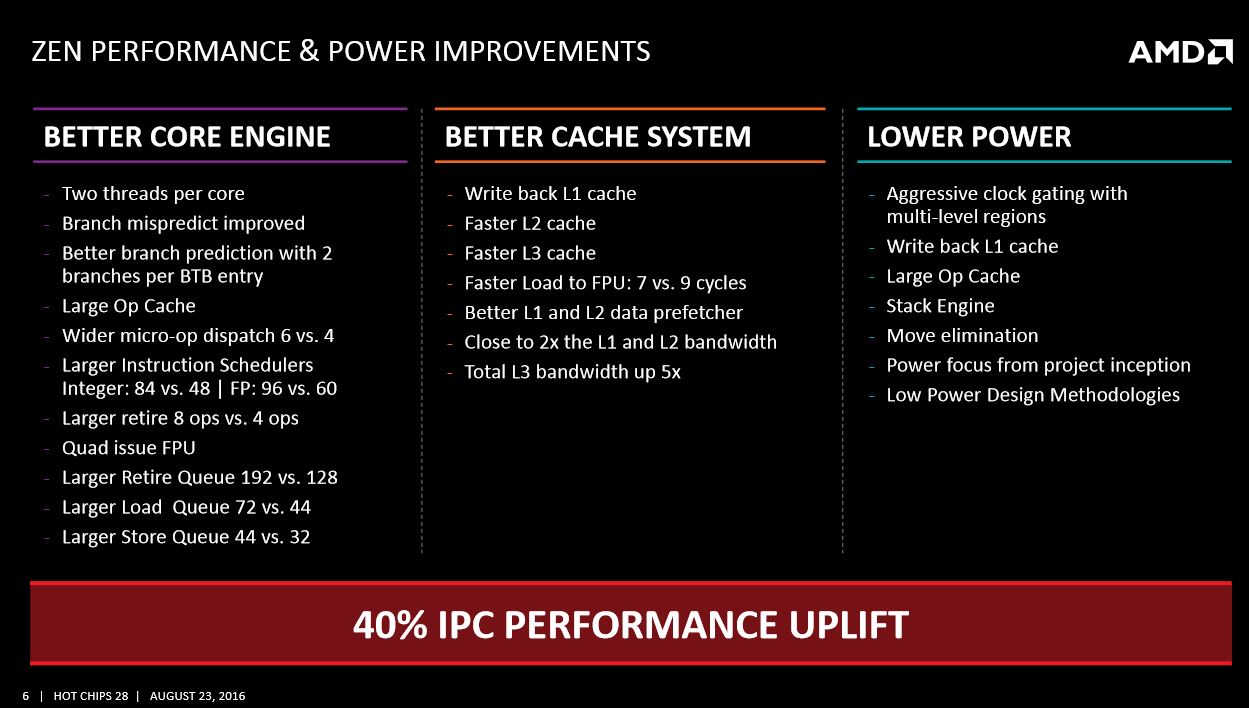

At a high level, the company focused on designing a better core engine and cache system in tandem with the aforementioned power optimizations. AMD boosted the core engine by adding a second thread to each core (Simultaneous Multi-Threading), and it also added a micro-op cache to boost ILP (Instruction Level Parallelism). AMD claims the architecture provides 75% more scheduling capacity and a 50% wider issue width. Improved branch prediction also steps in to improve performance. The company overhauled the cache architecture, and it claims to have doubled the L1 and L2 bandwidth. Wider pipes also fuel a 5X improvement in L3 cache bandwidth.

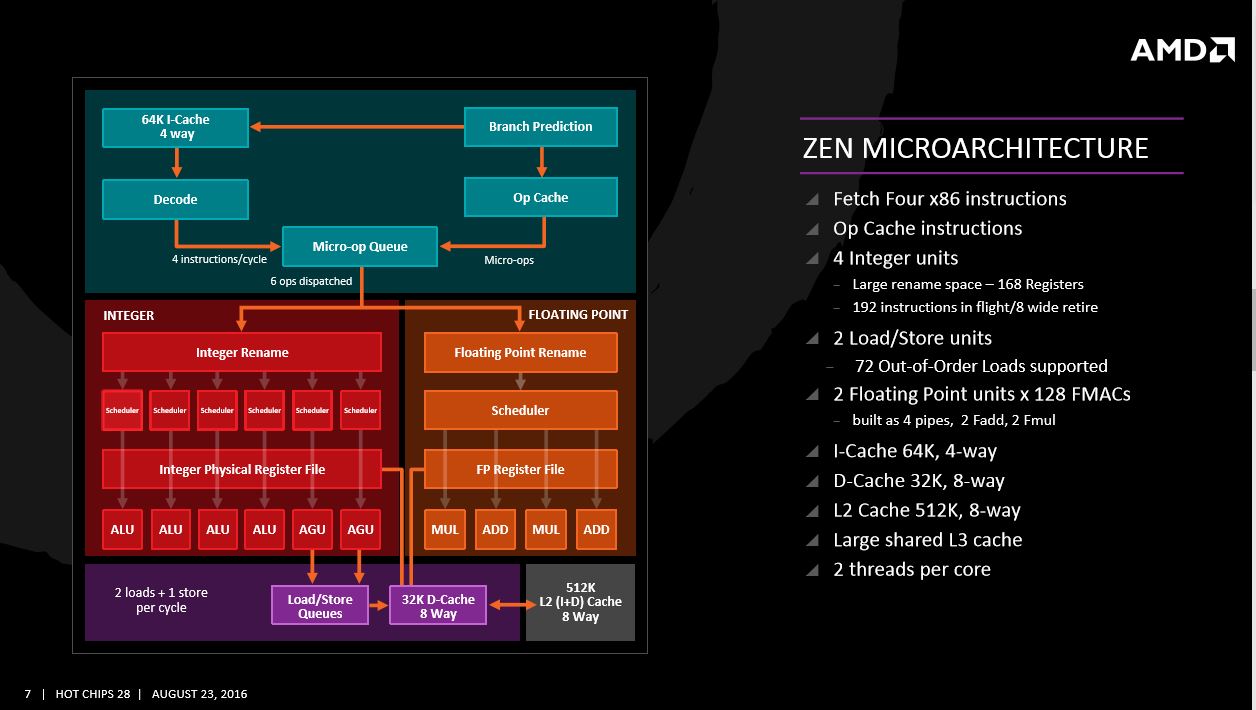

The high-level block diagram illustrates the flow through the core. It all begins with branch prediction, which steers instruction fetch from the 64K 4-way I-Cache. Fetched instructions flow into decode, which then issues four instructions per cycle to the micro-op queue. Micro-ops are also stored in the op cache, which, in turn, serves frequently encountered ops directly to the queue. Bypassing the decoders this way boosts performance and saves power by skipping pipeline stages. As expected, Clark declined to comment on the specific length of the pipeline but noted that the op cache scheme allows the company to shorten it.
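To make the op cache's role concrete, here is a minimal Python sketch of a micro-op cache keyed by fetch address: a hit returns previously decoded micro-ops and skips the decoders, while a miss decodes and fills the cache. The capacity, the LRU replacement policy and the `toy_decoder` helper are hypothetical; AMD has not disclosed how Zen's op cache is organized beyond what is described here.

```python
from collections import OrderedDict


class OpCache:
    """Toy micro-op cache: fetch address -> tuple of already-decoded micro-ops.

    Capacity and LRU replacement are assumptions for illustration only.
    """

    def __init__(self, capacity, decoder):
        self.capacity = capacity
        self.decoder = decoder      # hypothetical: address -> list of micro-ops
        self.lines = OrderedDict()
        self.hits = 0
        self.decodes = 0

    def fetch(self, address):
        if address in self.lines:
            self.lines.move_to_end(address)   # mark as most recently used
            self.hits += 1
            return self.lines[address]        # hit: the decoders are skipped
        self.decodes += 1                     # miss: slower, power-hungry path
        ops = tuple(self.decoder(address))
        self.lines[address] = ops
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used
        return ops


def toy_decoder(address):
    # Hypothetical stand-in for the x86 instruction decoders.
    return [f"uop@{address:#x}"]


cache = OpCache(capacity=4, decoder=toy_decoder)
loop_body = [0x100, 0x104, 0x108]
for _ in range(10):            # a hot loop revisits the same addresses
    for pc in loop_body:
        cache.fetch(pc)
print(cache.decodes, cache.hits)  # 3 27
```

In a hot loop only the first pass pays the decode cost, which is the intuition behind both the power savings and the shorter effective pipeline.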

The op cache is a big advance, but the architects also devised a scheme to increase its density and magnify the performance gains. Zen employs a branch fusion technique inside the op cache so that a pair of instructions occupies a single op slot. The cache can fuse two instructions (a compare and a branch) into one micro-op that is scheduled once and executed once, and it stores the fused micro-op until it is expanded during dispatch.
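The fusion step can be sketched as a pass over a decoded instruction stream that merges an adjacent compare and conditional branch into one entry. The mnemonic sets below are illustrative assumptions, not a list of the instruction pairs Zen actually fuses.

```python
# Illustrative mnemonic sets (assumed); Zen's real fusion rules were not disclosed.
COMPARES = {"cmp", "test"}
BRANCHES = {"je", "jne", "jl", "jge", "jb", "jae"}


def fuse_compare_branch(ops):
    """Merge each compare immediately followed by a conditional branch into one op.

    ops is a list of (mnemonic, operands_tuple) pairs.
    """
    fused = []
    i = 0
    while i < len(ops):
        op = ops[i]
        nxt = ops[i + 1] if i + 1 < len(ops) else None
        if nxt is not None and op[0] in COMPARES and nxt[0] in BRANCHES:
            # One fused entry: it takes a single op slot and is scheduled once.
            fused.append((f"{op[0]}+{nxt[0]}", op[1] + nxt[1]))
            i += 2
        else:
            fused.append(op)
            i += 1
    return fused


loop = [("add", ("rax", "1")), ("cmp", ("rax", "rcx")), ("jl", ("loop_top",))]
print(fuse_compare_branch(loop))
# [('add', ('rax', '1')), ('cmp+jl', ('rax', 'rcx', 'loop_top'))]
```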

The micro-op queue feeds 6 ops per cycle into the integer and floating point (FP) queues, an improvement over the 4-op dispatch of previous architectures. The integer and FP sections have their own separate execution pipes, rename, schedulers and register files, so they essentially serve as separate co-processors. The flow continues into the 32K 8-way D-Cache (Data Cache), which is backed by a 512K 8-way L2 cache.
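The capacities and associativities quoted for the core's caches determine how many sets each one has: sets = capacity / (ways × line size). The short Python calculation below assumes 64-byte cache lines, the conventional x86 line size, which this article does not state.

```python
LINE_BYTES = 64  # assumed cache-line size (not stated in the article)
KB = 1024


def cache_sets(size_bytes, ways, line_bytes=LINE_BYTES):
    """Number of sets = capacity / (associativity * line size)."""
    return size_bytes // (ways * line_bytes)


caches = {
    "L1I 64K 4-way": cache_sets(64 * KB, 4),    # 256 sets
    "L1D 32K 8-way": cache_sets(32 * KB, 8),    # 64 sets
    "L2 512K 8-way": cache_sets(512 * KB, 8),   # 1024 sets
}
for name, sets in caches.items():
    print(f"{name}: {sets} sets")
```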

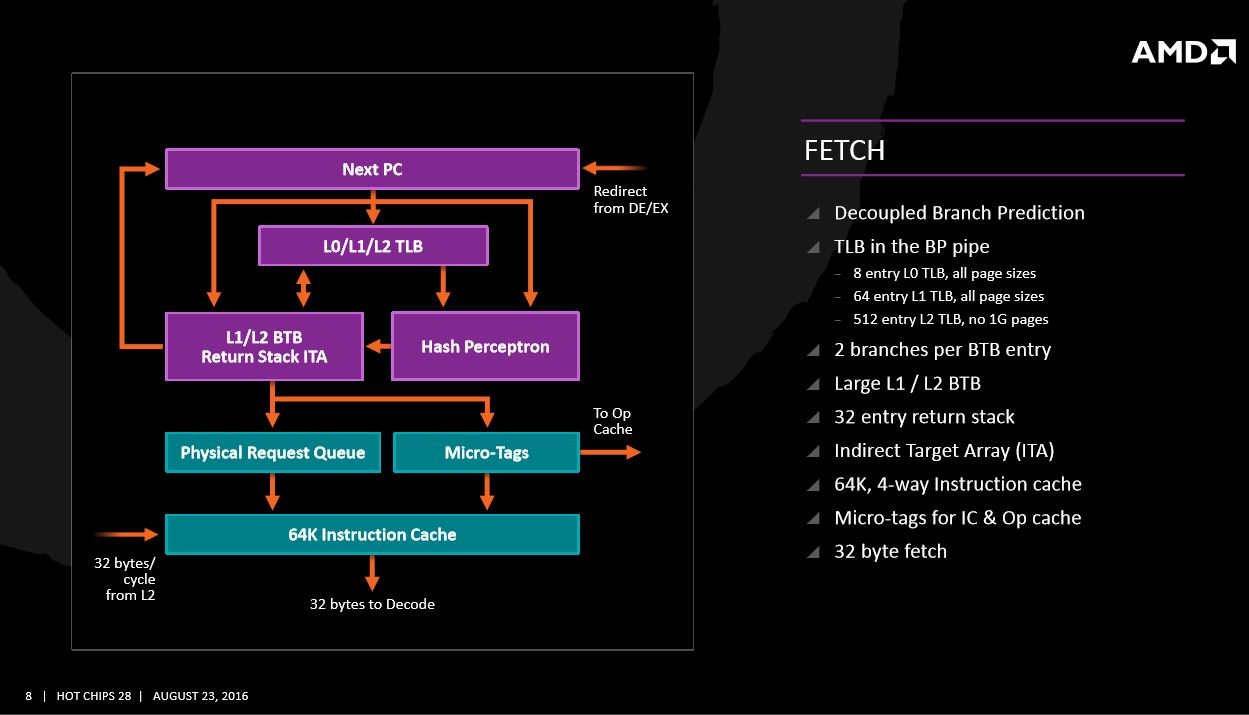

Fetch

The fetch pipe employs decoupled branch prediction. The company moved the TLB above the other units within the hierarchy to optimize prefetches from the instruction cache. AMD stores two branches per BTB (Branch Target Buffer) entry and employs an ITA (Indirect Target Array) for indirect branches. The 64K instruction cache propels 32 bytes per cycle to decode.
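A decoupled predictor runs ahead of the instruction cache and produces the next fetch address from its own structures. The Python sketch below models a BTB whose entries cover one fetch block and hold up to two branches, plus a separate table standing in for the indirect target array. The block size, the replacement rule and the assumption that every recorded branch is taken are hypothetical simplifications, not Zen's actual design.

```python
class BranchTargetBuffer:
    """Toy BTB: one entry per fetch block, up to two branches per entry.

    Block size, "keep the two most recent branches" replacement and the
    always-taken assumption are illustrative, not Zen's real parameters.
    """

    def __init__(self, block_bytes=64, branches_per_entry=2):
        self.block_bytes = block_bytes
        self.branches_per_entry = branches_per_entry
        self.entries = {}           # block address -> [(offset, target or None)]
        self.indirect_targets = {}  # toy indirect target array: branch PC -> target

    def _block(self, pc):
        return pc - pc % self.block_bytes

    def record(self, pc, target, indirect=False):
        """Install a taken branch; indirect targets live in the separate array."""
        block, offset = self._block(pc), pc % self.block_bytes
        if indirect:
            self.indirect_targets[pc] = target
            target = None           # the BTB only notes that the target is indirect
        branches = [b for b in self.entries.get(block, []) if b[0] != offset]
        branches.append((offset, target))
        self.entries[block] = branches[-self.branches_per_entry:]

    def predict_next_fetch(self, pc):
        """Next fetch address if a known branch lies at or after pc in its block."""
        block, offset = self._block(pc), pc % self.block_bytes
        for branch_offset, target in sorted(self.entries.get(block, []),
                                            key=lambda b: b[0]):
            if branch_offset >= offset:
                if target is None:
                    return self.indirect_targets.get(block + branch_offset)
                return target
        return None  # no predicted branch: fetch continues sequentially


btb = BranchTargetBuffer()
btb.record(0x1010, 0x2000)                  # direct branch in block 0x1000
btb.record(0x1030, 0x3000, indirect=True)   # indirect branch in the same block
print(hex(btb.predict_next_fetch(0x1000)))  # 0x2000
print(hex(btb.predict_next_fetch(0x1020)))  # 0x3000
```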

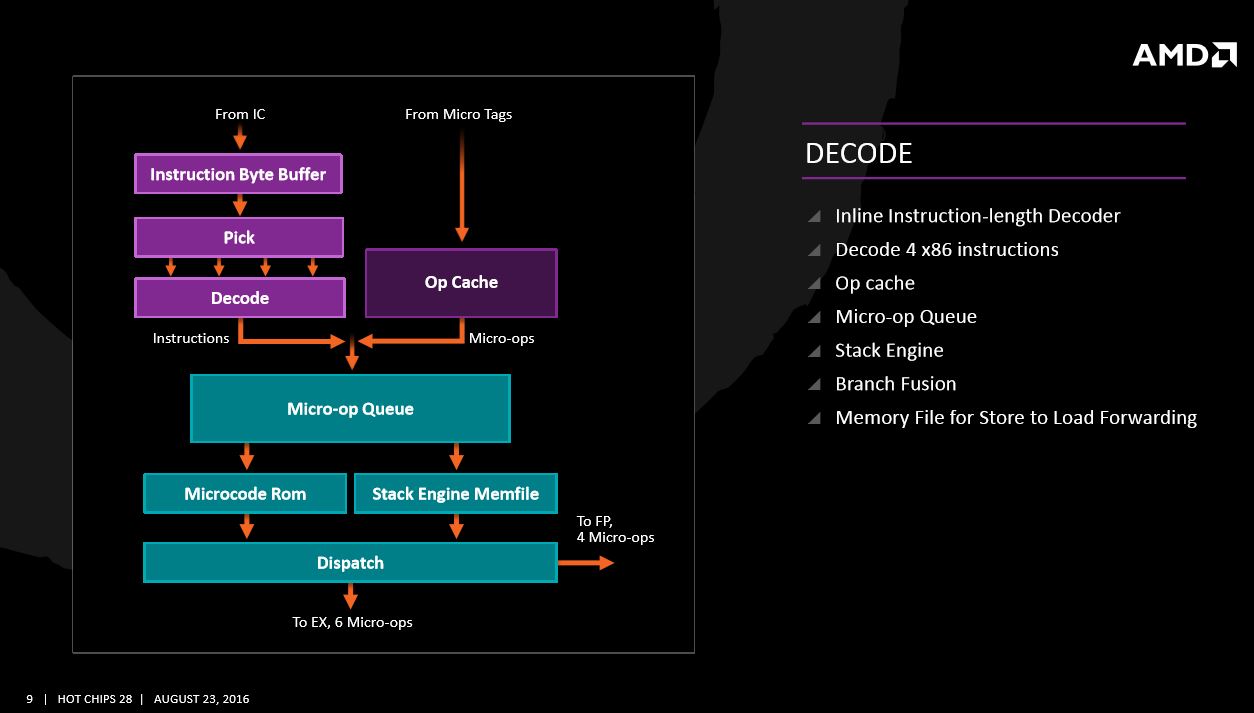

Decode

The decode unit highlights some of the key advantages of the op cache. On the left, data flows in through the instruction byte buffer, through the pick stage, and out to the four-wide instruction decoder. In contrast, the op cache simply serves the micro-ops directly to the micro-op queue, which avoids that three-step process. It also employs the branch fusion technique (covered above). Bypassing the comparatively power-hungry decode unit shortens the pipeline, which reduces redirect latency by “several cycles” and saves power.

The stack engine helps eliminate false dependencies, and the memory file provides store-to-load forwarding at dispatch. Dispatch serves 6 micro-ops per cycle to the integer/execute engine.
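A stack engine is a long-established technique for removing the implicit RSP update that every PUSH and POP performs, which would otherwise chain consecutive stack operations together through RSP. The Python sketch below is a generic model, not AMD's implementation: it tracks the running RSP offset in the front end, rewrites pushes and pops as plain stores and loads at fixed offsets, and inserts a single sync op when an instruction reads RSP explicitly. The 8-byte slots and the text-based RSP check are simplifying assumptions.

```python
def stack_engine(ops):
    """Generic stack-engine model (an assumption, not AMD's design).

    ops is a list of (mnemonic, operands) string pairs. push/pop become
    stores/loads addressed relative to the RSP value seen when the tracked
    offset was last zero, so they no longer depend on each other through RSP.
    An explicit "rsp" operand forces one "sync" op that applies the offset.
    """
    delta = 0
    out = []
    rsp_updates_removed = 0
    for mnemonic, operands in ops:
        if mnemonic == "push":
            delta -= 8                          # push: decrement, then store
            out.append(("store", f"{operands} -> [rsp{delta:+d}]"))
            rsp_updates_removed += 1
        elif mnemonic == "pop":
            out.append(("load", f"{operands} <- [rsp{delta:+d}]"))
            delta += 8                          # pop: load, then increment
            rsp_updates_removed += 1
        else:
            if "rsp" in operands and delta != 0:
                out.append(("sync", f"rsp = rsp{delta:+d}"))
                delta = 0
            out.append((mnemonic, operands))
    return out, rsp_updates_removed


seq = [("push", "rbx"), ("push", "rbp"), ("pop", "rbp"), ("pop", "rbx"),
       ("mov", "rax, rsp")]
uops, removed = stack_engine(seq)
for uop in uops:
    print(uop)
print("RSP updates removed:", removed)  # RSP updates removed: 4
```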

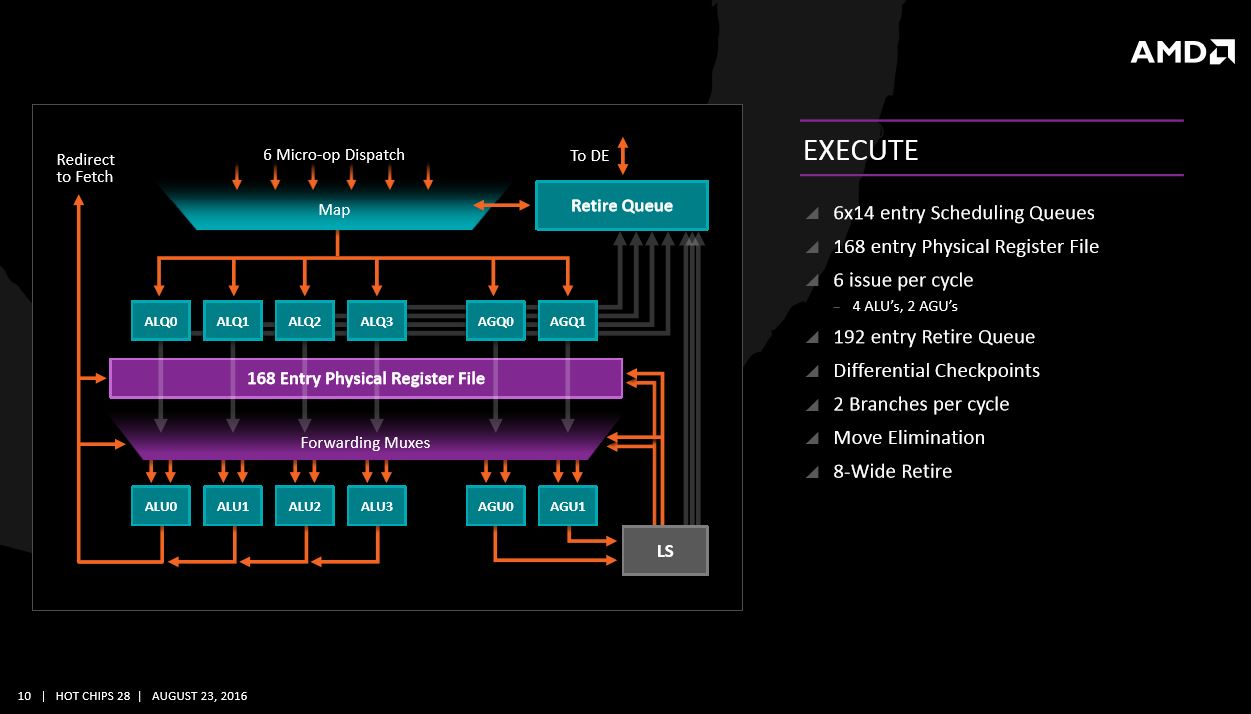

Execute

The integer unit houses a total of six schedulers. Each of the four integer ALUs has its own scheduler, and the two AGUs (Address Generation Units) have their own independent schedulers. The integer schedulers are all 14 entries deep, and the AGUs feed the load/store queues, which support two loads and one store per cycle.
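Giving every execution unit its own small scheduler means each one can pick, every cycle, the oldest of its waiting ops whose operands are ready, even if an older op is still stalled. The Python sketch below models that behavior; the 14-entry depth comes from the article (and is applied to the AGU schedulers too, as an assumption), while the oldest-ready pick policy is a common textbook choice rather than a disclosed Zen detail.

```python
SCHEDULER_DEPTH = 14  # per the article; assumed to apply to AGU schedulers too


class Scheduler:
    """Toy per-unit scheduler that issues the oldest ready op each cycle."""

    def __init__(self, name, depth=SCHEDULER_DEPTH):
        self.name = name
        self.depth = depth
        self.waiting = []  # (age, op_name, cycle its operands become ready)

    def can_accept(self):
        return len(self.waiting) < self.depth

    def insert(self, age, op_name, ready_cycle):
        if not self.can_accept():
            raise RuntimeError(f"{self.name} is full; dispatch would stall")
        self.waiting.append((age, op_name, ready_cycle))

    def pick(self, cycle):
        """Remove and return the oldest op whose operands are ready, else None."""
        ready = [op for op in self.waiting if op[2] <= cycle]
        if not ready:
            return None
        oldest = min(ready)  # tuples compare by age first; ages are unique
        self.waiting.remove(oldest)
        return oldest[1]


# Four ALU schedulers plus two AGU schedulers: six in total.
schedulers = ([Scheduler(f"ALU{i}") for i in range(4)]
              + [Scheduler(f"AGU{i}") for i in range(2)])

alu0 = schedulers[0]
alu0.insert(age=0, op_name="imul", ready_cycle=3)  # still waiting on a load
alu0.insert(age=1, op_name="add", ready_cycle=0)   # operands already available
print(alu0.pick(0))  # add: the younger op issues out of order
print(alu0.pick(3))  # imul
```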

The execute engine has a 192-entry retire queue. The architecture executes two branches per cycle in both SMT and single-threaded mode. Interestingly, the architecture dispatches six ops per cycle but can retire eight at a time, which helps the core catch up after pipeline stalls.
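The benefit of retiring faster than the core dispatches is easiest to see in a toy simulation. The Python model below assumes op 0 is a long-latency miss that completes at cycle 20 while every other op completes one cycle after dispatch, and it retires strictly in program order. Only the 6-wide dispatch, 8-wide retire and 192-entry figures come from the article; everything else is a hypothetical simplification.

```python
from collections import deque


def simulate(cycles, dispatch_width=6, retire_width=8, rob_size=192,
             miss_latency=20):
    """Return retire-queue occupancy at the end of each cycle (toy model).

    Assumption: op 0 completes at cycle miss_latency; every other op
    completes one cycle after it is dispatched.
    """
    rob = deque()        # (op_id, completion_cycle), oldest on the left
    next_id = 0
    occupancy = []
    for cycle in range(cycles):
        # Retire up to retire_width completed ops, strictly in program order.
        retired = 0
        while rob and retired < retire_width and rob[0][1] <= cycle:
            rob.popleft()
            retired += 1
        # Dispatch up to dispatch_width new ops while there is room.
        for _ in range(dispatch_width):
            if len(rob) >= rob_size:
                break    # queue full: the front end stalls
            done = miss_latency if next_id == 0 else cycle + 1
            rob.append((next_id, done))
            next_id += 1
        occupancy.append(len(rob))
    return occupancy


print(simulate(30)[18:24])                  # [114, 120, 118, 116, 114, 112]
print(simulate(30, retire_width=6)[18:24])  # [114, 120, 120, 120, 120, 120]
```

With 6-wide dispatch and 8-wide retire, the backlog that built up behind the miss shrinks by two ops per cycle once the miss resolves; with retire width equal to dispatch width, occupancy stays flat at 120 and the queue never catches up.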

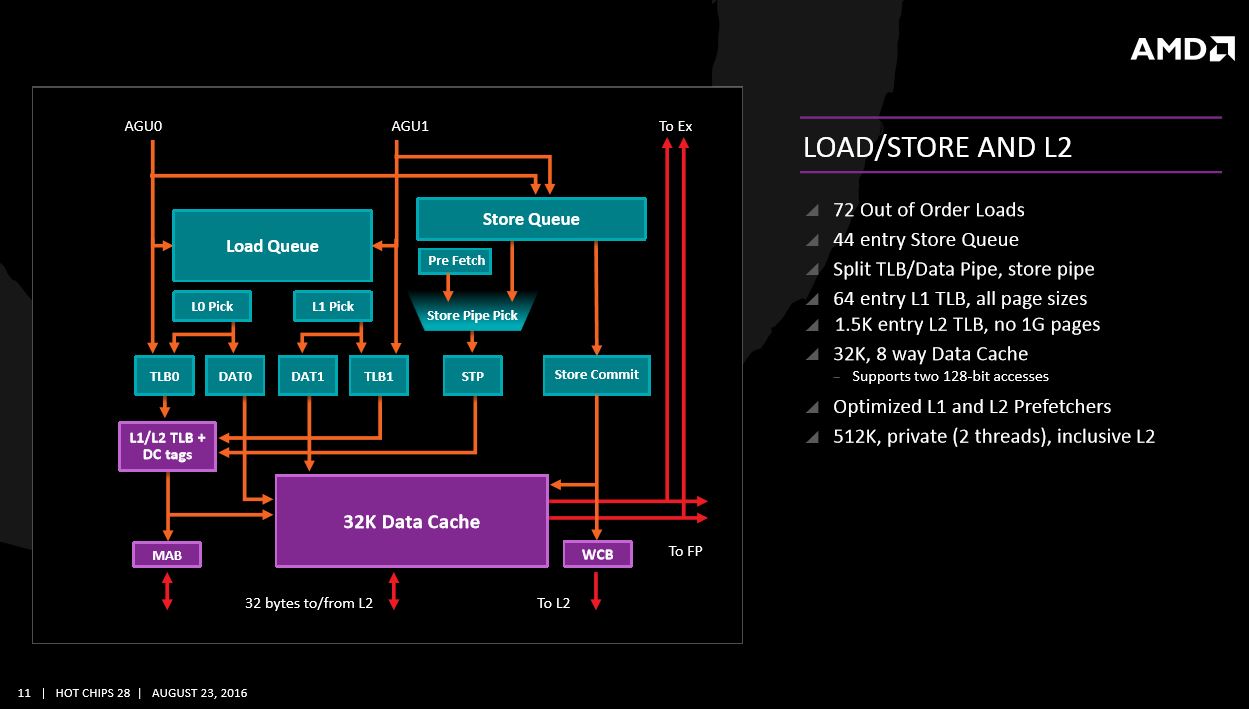

Load/Store And L2

The flow moves from the execute unit into the load/store and L2 area through the split TLB data pipe (fed by the two AGUs). The design can track 72 out-of-order loads and, notably, features optimized L1 and L2 prefetchers.
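AMD did not describe how Zen's L1 and L2 prefetchers work, so the Python sketch below uses a classic per-PC stride prefetcher purely to illustrate the general idea: once a load instruction has touched addresses with the same stride a few times in a row, the prefetcher requests the next lines ahead of demand. The degree and confidence threshold are hypothetical parameters.

```python
class StridePrefetcher:
    """Textbook per-PC stride prefetcher (illustrative; not Zen's algorithm)."""

    def __init__(self, degree=2, confidence_threshold=2):
        self.table = {}                  # load PC -> (last_address, stride, confidence)
        self.degree = degree             # how many strides ahead to prefetch
        self.threshold = confidence_threshold

    def access(self, pc, address):
        """Observe one load and return the addresses to prefetch (maybe none)."""
        last, stride, conf = self.table.get(pc, (None, 0, 0))
        if last is not None:
            new_stride = address - last
            if new_stride == stride and stride != 0:
                conf = min(conf + 1, self.threshold)   # stride confirmed again
            else:
                stride, conf = new_stride, 0           # new pattern: start over
        self.table[pc] = (address, stride, conf)
        if conf >= self.threshold:
            return [address + stride * k for k in range(1, self.degree + 1)]
        return []


pf = StridePrefetcher()
for addr in (0x1000, 0x1040, 0x1080, 0x10C0):
    # The fourth access has seen the 64-byte stride enough times to prefetch.
    print(hex(addr), [hex(a) for a in pf.access(pc=0x400, address=addr)])
```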

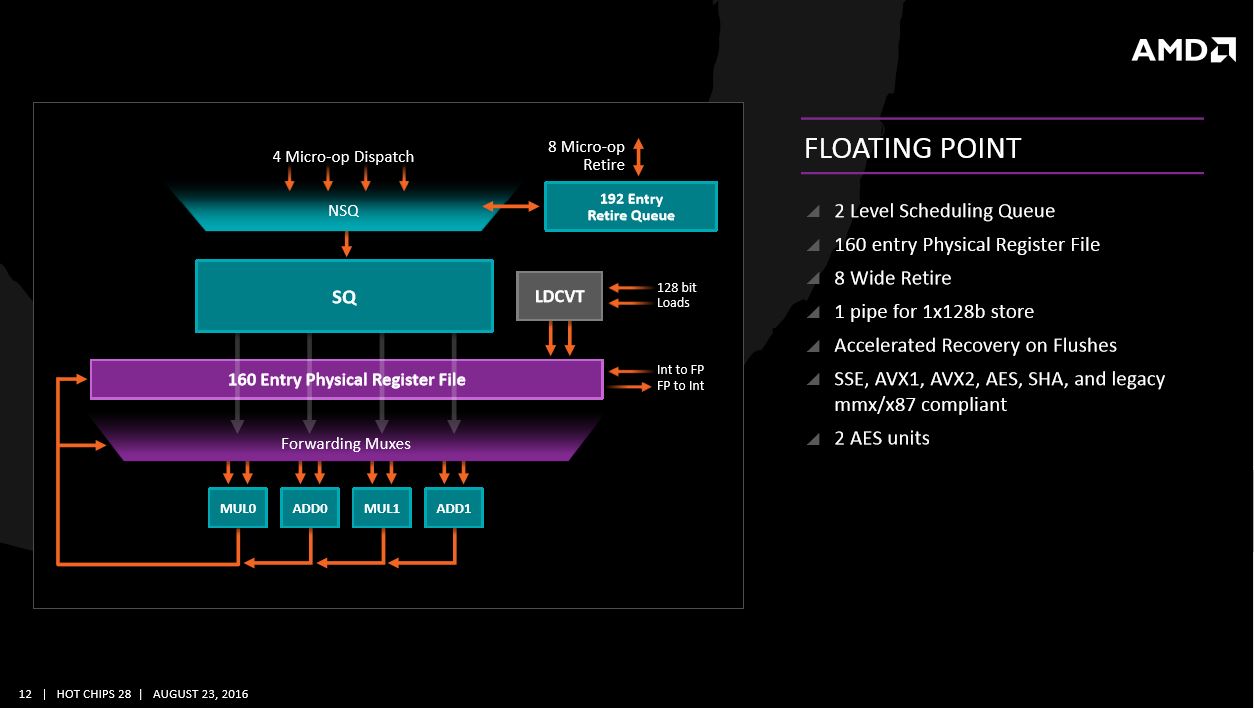

Floating Point Unit

The floating point unit actually has a two-level scheduler. The NSQ (non-scheduling queue) enables dispatching instructions to the integer unit through the 192-entry retire queue. Ops can’t schedule out of the non-scheduling queue, but the load/store queue can start working even though the floating point operations aren't ready. AMD indicated that the technique ensures the data is ready to schedule for the floating point side without using the instruction window, which boosts IPC.
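One way to picture the two-level arrangement is a queue in which ops waiting on load data sit in the NSQ, where they cannot be picked for execution, and only move into the SQ once their data has arrived. The Python sketch below follows that description; the queue sizes, in-order promotion and single issue per cycle are hypothetical simplifications rather than disclosed Zen parameters.

```python
from collections import deque


class TwoLevelFPScheduler:
    """Toy NSQ (non-scheduling queue) feeding an SQ (scheduling queue).

    Queue sizes, in-order promotion and one issue per cycle are assumptions.
    """

    def __init__(self, nsq_size=8, sq_size=4):
        self.nsq = deque()   # (op, cycle its load data becomes ready)
        self.sq = []         # ops eligible to issue
        self.nsq_size = nsq_size
        self.sq_size = sq_size

    def dispatch(self, op, data_ready_cycle):
        """Accept an op into the NSQ; False means dispatch must stall."""
        if len(self.nsq) >= self.nsq_size:
            return False
        self.nsq.append((op, data_ready_cycle))
        return True

    def tick(self, cycle):
        """Promote ops whose data is ready, then issue the oldest SQ op (or None)."""
        while self.nsq and self.nsq[0][1] <= cycle and len(self.sq) < self.sq_size:
            self.sq.append(self.nsq.popleft()[0])
        return self.sq.pop(0) if self.sq else None


fpu = TwoLevelFPScheduler()
fpu.dispatch("vaddps", data_ready_cycle=3)   # waiting on a load
fpu.dispatch("vmulps", data_ready_cycle=3)
print([fpu.tick(c) for c in range(5)])  # [None, None, None, 'vaddps', 'vmulps']
```

While the two ops wait for their load data, they occupy NSQ entries rather than SQ entries, so the scheduling queue is spent only on ops that can actually issue.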

The normal SQ (Scheduling Queue) schedules ops into two MUL and two ADD units. The floating point unit features a 160-entry physical register file. Interestingly, the eight-wide retire option resurfaces in this unit. Also note the two encryption-boosting AES units.

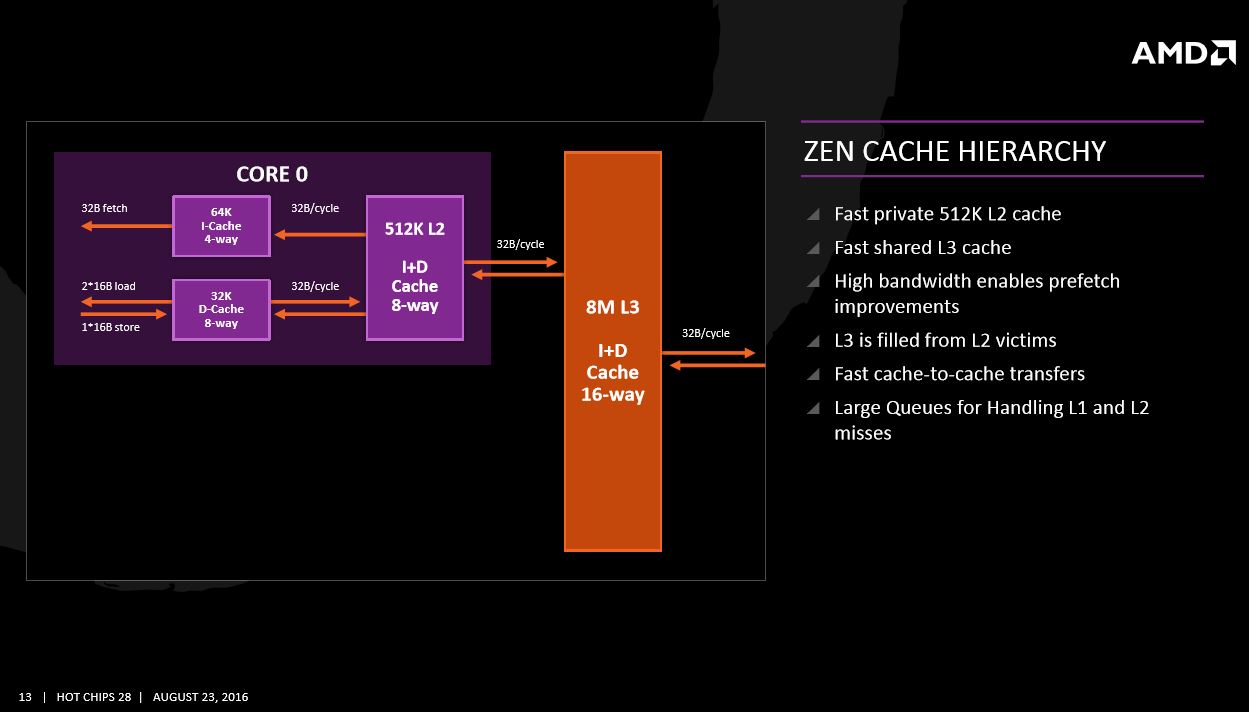

Cache Hierarchy

The cache architecture was yet another area of drastic improvement: it moves 32 bytes per cycle between the different levels. The wider pipes provide enough throughput to connect all of the cores to the L3 victim cache. The architecture employs larger queues to handle L1 and L2 misses, and AMD switched the L1 data cache from a slower write-through policy to write-back.
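The difference between the two L1 write policies shows up in how much traffic reaches the next cache level. In the Python sketch below, write-through forwards every store, while write-back only marks a line dirty and writes it out once. The 64-byte line size and an L1 large enough to avoid early evictions are simplifying assumptions.

```python
LINE_BYTES = 64  # assumed cache-line size


def next_level_writes(store_addresses, policy):
    """Count writes an L1 sends to the next level for a stream of stores.

    write-through: every store is forwarded immediately.
    write-back: a store only marks its line dirty; each dirty line is written
    back once (here, when everything is flushed at the end). Assumes the L1 is
    large enough that no line is evicted early.
    """
    if policy == "write-through":
        return len(store_addresses)
    if policy == "write-back":
        return len({addr // LINE_BYTES for addr in store_addresses})
    raise ValueError(f"unknown policy: {policy}")


# 1,000 stores that keep updating the same 128 bytes (e.g. loop counters).
stores = [0x2000 + 8 * (i % 16) for i in range(1000)]
print(next_level_writes(stores, "write-through"))  # 1000
print(next_level_writes(stores, "write-back"))     # 2
```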

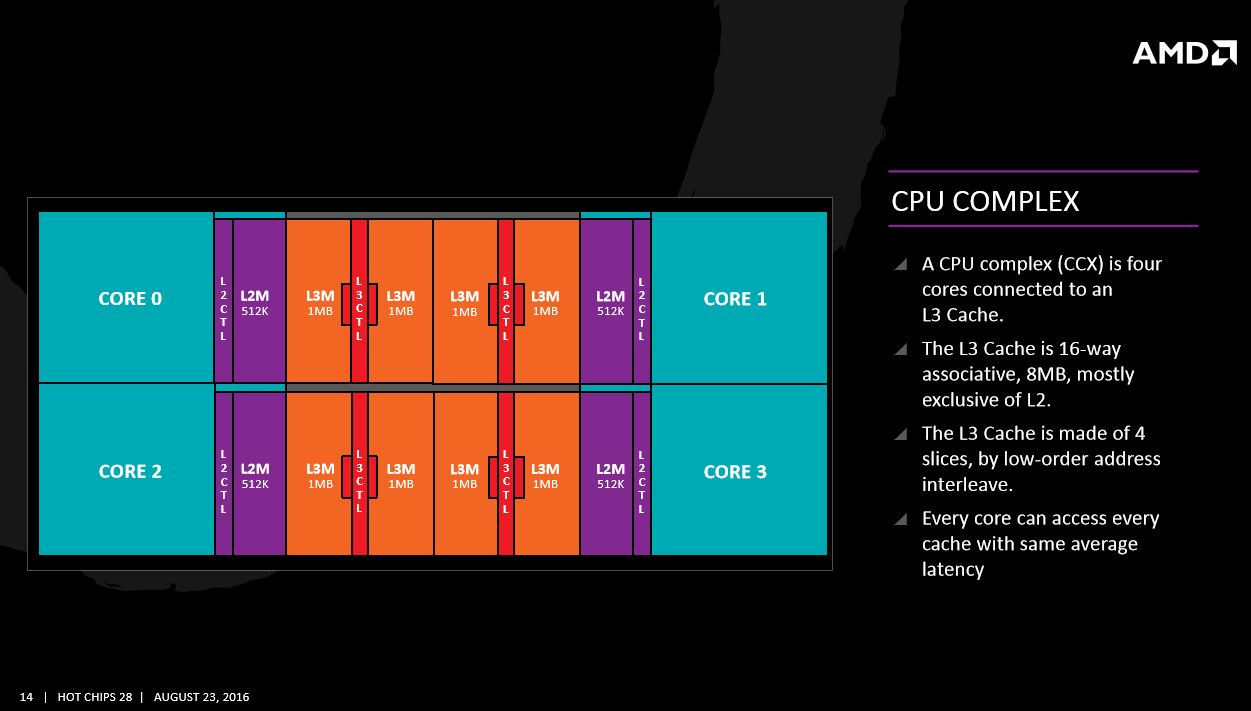

On To The Core Complex

AMD arranged the CPU complex (CCX) as four cores connected to a centralized 16-way associative 8MB L3 cache that is split into four slices. All of the cores can access the entire pool of L3 cache (purportedly with the same average latency). Each core also has a private 512KB L2 cache. AMD connects multiple CCXs together to create higher core counts, such as the 8-core/16-thread (2 x CCX) Summit Ridge CPU it used for its presentations, so the Summit Ridge demo CPUs featured a total of 16MB of L3 cache.
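A victim cache is filled only by lines evicted from the level above it, not by fetches from memory. The Python sketch below models an L2 backed by such an L3, with capacities measured in lines and kept tiny for readability. Treating the two levels as fully exclusive and using LRU replacement are simplifying assumptions, not disclosed details of Zen's L3.

```python
from collections import OrderedDict


class VictimHierarchy:
    """Toy L2 backed by an L3 that only receives lines evicted from L2.

    Exclusivity (a line lives in L2 or L3, never both) and LRU replacement
    are simplifying assumptions for illustration.
    """

    def __init__(self, l2_lines, l3_lines):
        self.l2 = OrderedDict()
        self.l3 = OrderedDict()
        self.l2_cap, self.l3_cap = l2_lines, l3_lines

    def access(self, line):
        if line in self.l2:
            self.l2.move_to_end(line)
            return "L2 hit"
        if line in self.l3:
            del self.l3[line]              # move the line back up into L2
            result = "L3 hit"
        else:
            result = "memory"              # memory fills go to L2, not L3
        self.l2[line] = True
        if len(self.l2) > self.l2_cap:
            victim, _ = self.l2.popitem(last=False)
            self.l3[victim] = True         # L2 evictions fill the victim L3
            if len(self.l3) > self.l3_cap:
                self.l3.popitem(last=False)
        return result


h = VictimHierarchy(l2_lines=2, l3_lines=4)
print([h.access(line) for line in "ABCAC"])
# ['memory', 'memory', 'memory', 'L3 hit', 'L2 hit']
```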

AMD has used its aging HyperTransport interconnect to glue chips together in the past, but it would not comment on the technique it uses to connect CCXs in the Zen architecture.

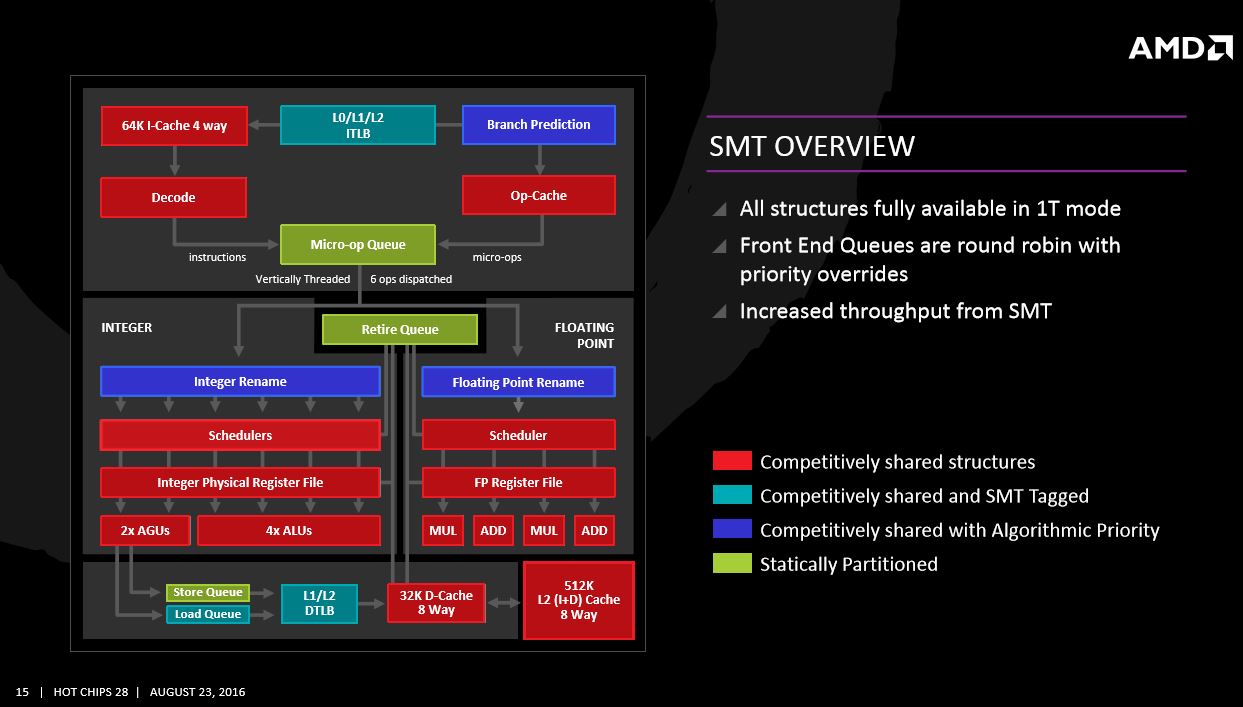

SMT Overview

AMD moved to an SMT (Simultaneous Multi-Threading) design with Zen, abandoning the clustered multithreading technique of its previous architectures. The SMT design aims to keep all of the core's structures fully available to a single thread to increase single-threaded performance.

The color-coded slide highlights the shared portions of the microarchitecture. Moving to SMT requires "competitive sharing" of caches, decode, execution units and schedulers between the threads. The processor competitively shares some units but prioritizes others via an algorithmic priority technique. The branch prediction unit and dispatch interface constantly monitor the threads to ensure they get adequate throughput, and thread priorities are adjusted as needed. AMD tried to avoid static partitioning, which physically splits a structure into two halves, one for each thread. It did have to statically partition the store queue, retire queue and micro-op queue, but it claimed that the core still maintains high throughput in SMT mode.
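The cost of static partitioning is easiest to see when the two threads' demand is lopsided. The Python sketch below compares an equal static split of a structure with a shared pool; the one-entry-at-a-time fairness rule in `competitive_share` is a hypothetical stand-in for AMD's undisclosed priority algorithm.

```python
def static_partition(capacity, demands):
    """Each thread owns a fixed, equal slice of the structure."""
    share = capacity // len(demands)
    return [min(d, share) for d in demands]


def competitive_share(capacity, demands):
    """Threads draw from one shared pool.

    Hypothetical fairness rule: hand out entries one at a time to the waiting
    thread that currently holds the fewest (ties go to the lower thread index).
    """
    grants = [0] * len(demands)
    for _ in range(capacity):
        waiting = [t for t, d in enumerate(demands) if grants[t] < d]
        if not waiting:
            break                      # every thread has what it asked for
        chosen = min(waiting, key=lambda th: grants[th])
        grants[chosen] += 1
    return grants


print(static_partition(64, [60, 10]))   # [32, 10]
print(competitive_share(64, [60, 10]))  # [54, 10]
```

With demands of 60 and 10 entries in a 64-entry structure, the static split leaves 22 entries idle while the busy thread waits; the shared pool hands them to the thread that can use them.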

Reaping The Fruits Of A Power-First Architecture? TBD

The new architecture appears to make several huge steps forward, and it may just bring us back to the old days of a competitive CPU market. However, AMD is still holding key details out of its presentations, and it's worth noting that we aren't even sure of clock speeds, PCIe lane allocations, memory channel support and a host of other essentials.

Most notably, AMD has focused on delivering the power efficiency message but has yet to provide tangible specifics. The move to 14nm FinFET is a great start, but the complexities of on-die power management, and how it plays into the entire SoC picture, are daunting.

The demo of an 8-core Summit Ridge CPU slugging it out with an Intel i7-6900K was encouraging, but it only illuminates one obviously hand-picked workload. A broader spate of benchmarks will give us a better indication of where the architecture stands, but there is little doubt that it provides big gains over Excavator and Bulldozer. For many, the real litmus test will be how well Zen takes an overclock, but we still have quite a bit of time before we can make that determination.

The industry desperately needs something big. The desktop PC market has been in a sustained freefall for an extended period of time, but maybe a competitive AMD can pop the chute. The slow cadence of incremental performance increases by the dominant Intel has left most of us with no reason to upgrade. In fact, we still recommend the previous-generation i7-5820K over the latest Intel flagship, which says a lot about the state of the industry.

That is truly the promise of Zen. AMD doesn't have to beat Intel outright in every benchmark; it just needs to bring the right blend of price and performance to market. A competitive AMD will hopefully spur Intel into action, which might reduce prices and push larger jumps in performance. Intel's extended tick-tock-tock cadence might also be the key to Zen's success.

Zen+ Is Waiting In The Wings

AMD has its "Zen+" slated for the future, but it will likely consist of tweaks to the same 14nm architecture. The 10nm process isn't coming anytime soon, so it will likely be a Zen versus Kaby Lake competition for some time.

AMD executives repeated the "Zen is not a destination; it's a starting point" mantra during both of the Zen presentations in an obvious attempt to tease the forthcoming Zen+. I hope that the Zen+ refrain isn't a preemptive ploy to deflect possible disappointment with the first Zen generation. Designing one microarchitecture to address all market segments is a risky proposition, and AMD has to be careful with our heightened expectations; yet another disappointment could deal it a crushing blow.

First, let's see what the first-gen Zen can do.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


ssdpro: Why would AMD already be using a Mantra that there is a Zen+ to follow right behind Zen? AMD needs to focus on Zen. We all know something will follow but specifically spelling out Zen+ will be the optimization of Zen means Zen itself has issues that can be optimized - that or the editor's concern of defraying disappointment is real (and that sucks). The worrisome point is that AMD is still holding back clock speeds, PCIe lanes, and memory support. Hope they aren't pumping the stock with cherry picked details so the management can unload at the next trading window.

Sojiro1: Amd compared themselves against a downlocked 6900k lol I mean I like them, their amd hype they live on by "challenging" high tech. Lol. In the end I still, and most, buy amd for its budget price. Does anyone else notice how ridiculous these 6 cores 12 thread AMD processors are being promoted to budget gamers..? People who buy amd don't really care about i7 extreme...... more hype less case. Intel cpu, amd gpu.

Orumus: I hope AMD realizes that, considering their standing both financially and with the public, if they burn us again with a complete let down of a CPU there will be no coming back for them. I hope to heck AMD can be competitive, even if only in the high mid-range and lower, not because I love AMD but because I love choice. A healthy AMD is good for us all.

photonboy: There seems to be an assumption that Intel is capable of pumping out significant improvements but just doesn't due to financial reasons (i.e. why bother spending money when it is ahead?).

I think the reality is likely that there may not be a lot that can be done to improve the architecture without breaking backwards compatibility.

Brian_R170: Yeah, talk of Zen+ is scary when you look at it like that. I am a PC enthusiast and I have hopes that Zen will help fuel a resurgence in the ever-shrinking client PC market. For that to happen, Zen needs to deliver something compelling. Not just the performance/$ game AMD has been playing. It needs to have features that allow PCs to do things they can't do today (form factor, battery life, whatever). If it just matches the features of current Intel CPUs, then the best we can hope for is for PC prices to drop a little, and we already know pricing isn't the reason the PC market is shrinking.

clonazepam: With all of the news about Zen, I think the benchmarks I'm most interested in seeing is the performance at different RAM speeds (and to a lesser extent, quantity, and # of sticks). It's all really exciting, but that point sticks out the most for me.

Kewlx25 (replying to Orumus):

The Bulldozer was a complete miss. Felt like they lied. The Polaris is better than their last gen, but compared to Nvidia, it's a pretty big let down. I already have the feeling they're twisting the truth. Nvidia may be a greedy corp, but at least they mostly tell the truth.

emayekayee: I waited on Zen for a long time. I finally pulled the trigger on Skylake when I got an i5 6600k for $209 back in June. I've been an AMD user since the first Athlons. I had to ask myself, "do I believe AMD is going to release a processor that matches the i5 6600k in gaming performance at a sub $200 price point?" I wish team red all the best, but I think I made the right choice.

ElGruff (replying to Kewlx25):

Really? xD

Maxwell async drivers? Bumpgate? Fermi Dx12 drivers? Woodscrews? Overstating efficiency of cards? 970 specs?

What about their reviewers "guide"? Paying off system builders to drop / slate AMD (Tier 0 programme)? Gameworks (failworks, even destroys performance for their own cards)

There's also an ongoing case regarding Nvidia poaching staff from AMD and those staff "stealing" internal documents before they left for team green.

The list goes on. AMD doesn't always deliver on promises (they do however build tech that's well ahead of its time and don't milk their customer base by removing instructions or completely hampering performance) but at least it is an honest company that doesn't employ underhand tactics like its two biggest competitors.