Baidu's 'Ring Allreduce' Library Increases Machine Learning Efficiency Across Many GPU Nodes

Baidu’s Silicon Valley AI Lab (SVAIL) announced an implementation of the ring allreduce algorithm for the deep learning community, which it says will enable significantly faster training of neural networks spread across many GPUs.

Need For Efficient Parallel Training

As neural networks have grown to hundreds of millions or even more than a billion parameters, the number of GPU nodes needed to train them has grown too. However, the more nodes are added, the less useful computation each node does, because more of its time goes to exchanging data with the others. That has increased the need for algorithms that keep every node productive across a highly parallel system.

Using all the GPU nodes more efficiently means neural network training finishes faster, and the company doing the training doesn’t have to spend as much on hardware that would otherwise sit underutilized. Baidu has taken one such algorithm, called the "ring allreduce," from the high-performance computing (HPC) world and brought it to deep learning to increase the efficiency of its GPU nodes. According to Baidu, the ring allreduce sped up training of an example neural network by 31x on 40 GPUs, compared to using a single GPU.
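The 31x figure can be read as a scaling efficiency, defined here (our framing, not a metric Baidu reports) as the speedup S divided by the GPU count N:

```latex
E = \frac{S}{N} = \frac{31}{40} = 0.775
```

Put differently, the 40-GPU run delivered about 78% of the ideal, perfectly linear 40x speedup.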

Ring Allreduce Algorithm

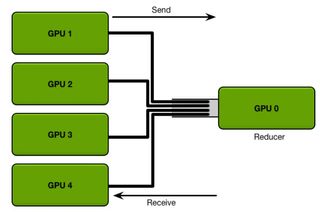

A common way to train across GPUs is to have every GPU send its data to a single “reducer” GPU, which creates a bottleneck that limits how fast training can proceed. The more GPUs feed the reducer, the more data it must receive and send back, and the worse this bandwidth bottleneck becomes.
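To make the bottleneck concrete, here is a minimal single-process sketch, assuming each GPU's gradient is a plain Python list of floats and that simulated GPU 0 acts as the reducer. The function name `reducer_allreduce` is hypothetical and is not part of Baidu's library; it only counts how much data passes through the reducer.

```python
from typing import List, Tuple


def reducer_allreduce(grads: List[List[float]]) -> Tuple[List[List[float]], int]:
    """Average per-GPU gradients through a single reducer (simulated GPU 0).

    Returns every GPU's copy of the averaged gradient and the number of
    values that crossed the reducer's link (received + sent).
    """
    n = len(grads)
    k = len(grads[0])
    total = list(grads[0])  # the reducer starts from its own gradient
    traffic = 0
    # Gather: every other GPU ships its whole gradient to the reducer.
    for g in grads[1:]:
        for i in range(k):
            total[i] += g[i]
        traffic += k
    avg = [x / n for x in total]
    # Broadcast: the reducer ships the full result back to every other GPU.
    traffic += (n - 1) * k
    return [list(avg) for _ in range(n)], traffic


grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
averaged, reducer_traffic = reducer_allreduce(grads)
```

With 4 simulated GPUs and 2-value gradients, the reducer moves 12 values. In general it moves 2(N-1)K values for N GPUs and K-value gradients, so its load grows linearly as GPUs are added.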

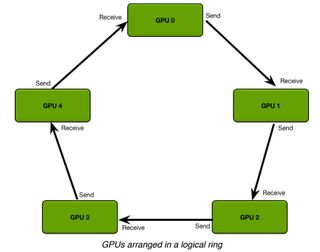

With the ring allreduce algorithm, less time is spent sending data and more is spent computing locally on each GPU. Instead of funneling everything through one reducer, the GPUs are arranged in a “logical ring” (as seen in the first picture above), and each GPU exchanges data only with its neighbors, so no single GPU's bandwidth becomes the bottleneck.
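The ring pattern can be sketched in a single Python process. This is a minimal simulation under stated assumptions, not Baidu's C++ library or TensorFlow patch: each "GPU" is a list of floats, messages are copied between lists, and `ring_allreduce` is a hypothetical name. It follows the standard two-phase formulation: a scatter-reduce phase in which partial sums travel around the ring, followed by an allgather phase that circulates the finished chunks.

```python
from typing import List, Tuple


def ring_allreduce(grads: List[List[float]]) -> Tuple[List[List[float]], List[int]]:
    """Average gradients across simulated GPUs arranged in a ring.

    Returns each GPU's averaged gradient and how many values each GPU sent.
    """
    n = len(grads)
    k = len(grads[0])
    bufs = [list(g) for g in grads]               # each GPU's local buffer
    bounds = [(c * k) // n for c in range(n + 1)]  # split the vector into n chunks
    sent = [0] * n

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Phase 1: scatter-reduce. GPU i sends chunk (i - step) to GPU i + 1,
    # which adds it in. After n - 1 steps, GPU i holds the complete sum
    # for chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot every outgoing message first so all GPUs "send" at once.
        msgs = [((i - step) % n, [bufs[i][j] for j in chunk((i - step) % n)])
                for i in range(n)]
        for i, (c, data) in enumerate(msgs):
            dst = (i + 1) % n
            for j, v in zip(chunk(c), data):
                bufs[dst][j] += v
            sent[i] += len(data)

    # Phase 2: allgather. Each GPU forwards the most recently completed chunk
    # it holds; the receiver overwrites its stale copy of that chunk.
    for step in range(n - 1):
        msgs = [((i + 1 - step) % n, [bufs[i][j] for j in chunk((i + 1 - step) % n)])
                for i in range(n)]
        for i, (c, data) in enumerate(msgs):
            dst = (i + 1) % n
            for j, v in zip(chunk(c), data):
                bufs[dst][j] = v
            sent[i] += len(data)

    # Every GPU now holds the full sum; divide by n to get the average.
    return [[x / n for x in b] for b in bufs], sent


grads = [[float(8 * i + j) for j in range(8)] for i in range(4)]
averaged, per_gpu_sent = ring_allreduce(grads)
```

With 4 simulated GPUs and 8-value gradients, every GPU sends 12 values (3 scatter-reduce steps plus 3 allgather steps, 2 values each). When the gradient length divides evenly by the GPU count, that per-GPU amount is 2(N-1)K/N, which stays below twice the gradient size no matter how many GPUs join the ring.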

“The ring allreduce allows us to efficiently average gradients in neural networks across many devices and many nodes,” said Andrew Gibiansky, a research scientist at Baidu. “By using this bandwidth-optimal algorithm during training, you can drastically reduce the communication overhead and scale to many more devices, while still retaining the determinism and predictable convergence properties of synchronous stochastic gradient descent,” he added.
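The "bandwidth-optimal" claim can be made concrete with a back-of-the-envelope count. Assume N GPUs that each hold a gradient of K values, with K divisible by N; the symbols are ours, not from Baidu's announcement:

```latex
% Reducer GPU: gather N-1 gradients, then broadcast N-1 copies of the result
T_{\text{reducer}} = (N-1)K + (N-1)K = 2(N-1)K

% Ring: each GPU sends 2(N-1) chunks of K/N values (scatter-reduce + allgather)
T_{\text{ring, per GPU}} = 2(N-1)\frac{K}{N} = 2K\left(1 - \frac{1}{N}\right) < 2K

% Both schemes deliver the same averaged gradient used by synchronous SGD
\bar{g} = \frac{1}{N}\sum_{i=1}^{N} g_i
```

At N = 40, the reducer's link carries 78K values per update, while each GPU in the ring sends only 1.95K, and every GPU still ends up with the identical average, which is what preserves synchronous SGD's deterministic behavior.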

Another Example of HPC/Machine Learning Merger

As we’ve seen recently with the partnership between the Tokyo Institute of Technology and Nvidia to enhance Japan’s fastest supercomputer with AI computation, the HPC and machine learning worlds seem to be merging at a rapid pace.


The hardware is increasingly being developed for both, and now so is the software, as Baidu’s new ring allreduce library shows. The synergy between the two worlds could further speed up the evolution of machine learning and artificial intelligence.

Baidu’s SVAIL used the ring allreduce algorithm to train state-of-the-art speech recognition models, and now it hopes that others will use the group’s implementation to do even more interesting things. The group released its ring allreduce implementation both as a standalone C++ library and as a patch for TensorFlow.