Google’s DeepMind Creates Another Real-World Breakthrough In Synthetic Speech Generation

Early this year, after Google’s DeepMind artificial intelligence technology proved it could beat an 18-time world champion at a game as complex as Go, many wondered whether that intelligence could be put to good use in the real world, and how soon. Since then, Google has shown that DeepMind can be used to cut the cooling bill for one of its data centers by 40%. The company has now announced another real-world breakthrough, this time in text-to-speech (TTS) technology.

Synthetic Speech Breakthrough

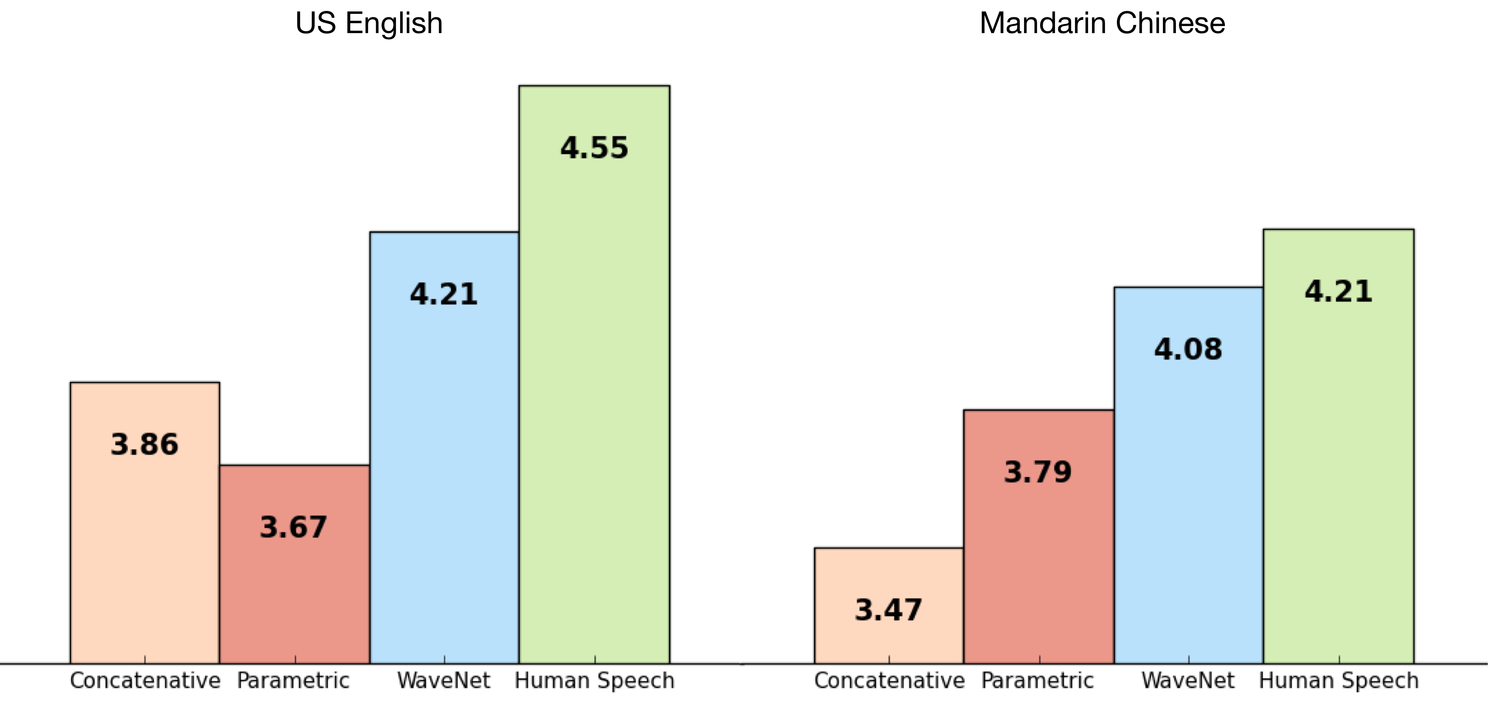

According to Google, DeepMind’s technology, called WaveNet, succeeded in reducing the gap between Google’s best TTS technology and the human voice by 50%, in terms of how natural synthetic speech can sound.

Until now, Google has used concatenative TTS, in which the company first records speech fragments spoken by real humans and then combines them to form sentences. This approach is what gives text-to-speech its “robotic” tone, because the words are spoken with no context or emotion.
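As a rough illustration of the concatenative idea described above, the following Python sketch joins stored fragments into one utterance. It is a toy under stated assumptions, not Google's system: the `FRAGMENTS` table, its placeholder sample values, and the `concatenate` function are all hypothetical names invented for this example.

```python
# Toy concatenative synthesis: join pre-recorded fragments into one utterance.
# FRAGMENTS is a hypothetical lookup table; the numbers are placeholder
# samples, not real recorded audio.
FRAGMENTS = {
    "hello": [0.1, 0.3, 0.2],
    "world": [0.4, 0.0, -0.2, -0.1],
}


def concatenate(words, fragments=FRAGMENTS):
    """Return one waveform made by joining the stored fragment for each word."""
    waveform = []
    for word in words:
        # Each fragment is reused unchanged, whatever the surrounding words are.
        waveform.extend(fragments[word])
    return waveform


utterance = concatenate(["hello", "world"])
print(len(utterance))  # 7 samples: 3 from "hello" plus 4 from "world"
```

Because every fragment is replayed exactly as recorded, the output cannot adapt to context, which is one way to picture where the "robotic" tone comes from.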

Google also uses the parametric approach, where all the information required to generate the audio is stored in the parameters of the model, and the contents and characteristics of the speech can be controlled via the inputs to the model. So far, however, the parametric approach has been successful only with non-syllabic languages such as Mandarin Chinese; for syllabic languages such as English, it sounds less natural than the concatenative method.

How WaveNet Works

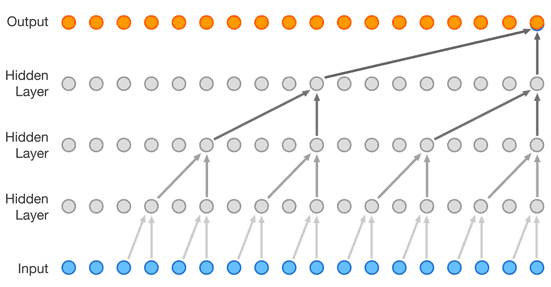

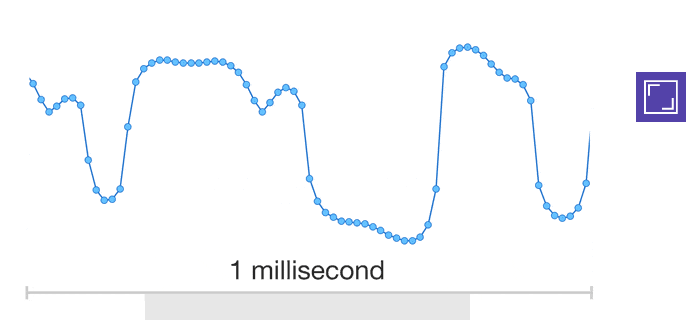

WaveNet is a fully convolutional neural network that models the raw waveform of the audio signal one sample at a time. For one second of 16 kHz audio, that means WaveNet produces 16,000 samples, which helps make synthesized speech sound much more natural. Sometimes, WaveNet even generates sounds such as mouth movements or breathing, which shows the flexibility of using raw waveforms.

At training time, the inputs are real waveforms recorded from human speakers. After training, Google can sample the network to generate synthetic speech. Picking the samples one step at a time is computationally expensive, but Google said that it is essential for generating realistic-sounding audio.
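To make the cost of sample-by-sample generation concrete, here is a minimal Python sketch of such a loop. It is not WaveNet: `toy_next_sample` is a hypothetical stand-in that only averages recent samples and adds noise, whereas the real system uses a trained convolutional network at that step. The point is only that each new sample depends on earlier output, so one second of 16 kHz audio needs 16,000 sequential model calls.

```python
import random

SAMPLE_RATE_HZ = 16_000  # 16 kHz audio, as described in the article


def toy_next_sample(history, rng):
    """Hypothetical stand-in for the network: predict the next sample from
    the two most recent ones. The real model is a trained neural network."""
    recent = history[-2:]
    mean = sum(recent) / len(recent) if recent else 0.0
    return 0.9 * mean + rng.gauss(0.0, 0.01)


def generate(seconds, seed=0):
    """Generate audio one sample at a time; each step sees earlier output."""
    rng = random.Random(seed)
    samples = []
    for _ in range(int(seconds * SAMPLE_RATE_HZ)):
        # One model call per output sample, and the calls cannot run in
        # parallel because each one needs the samples generated before it.
        samples.append(toy_next_sample(samples, rng))
    return samples


audio = generate(1.0)
print(len(audio))  # 16000 model calls for one second of audio
```

With a large network in place of the toy function, that per-sample call is presumably what makes generation expensive.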

To test how good the new text-to-speech engine was, Google ran a blind test in which human subjects gave 500 ratings on 100 sentences. The results are shown below, and as you can see, WaveNet reduced the gap between Google’s best previous technology (either concatenative for English or parametric for Mandarin) and human speech by around 50%.
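One plausible reading of "reducing the gap by around 50%" is sketched below in Python. The `gap_reduction` function is a hypothetical helper, and the scores are made-up illustrative values on an assumed 1-to-5 naturalness scale, not the numbers Google reported.

```python
def gap_reduction(previous_score, new_score, human_score):
    """Fraction of the gap to human speech that the new system closes."""
    gap_before = human_score - previous_score
    gap_after = human_score - new_score
    return (gap_before - gap_after) / gap_before


# Illustrative scores only (assumed 1-5 scale); NOT Google's reported results.
print(gap_reduction(previous_score=3.5, new_score=4.0, human_score=4.5))  # 0.5
```

Under this reading, a system that moves halfway from the previous best score to the human score has closed 50% of the gap, regardless of the absolute values.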


Google’s DeepMind team seemed surprised that directly generating each audio sample with deep neural networks worked at all, and even more surprised that it could outperform the company’s previous cutting-edge TTS technology.

The team will continue to improve WaveNet so that it can create synthesized speech that’s even more human-like. Presumably, the team will also want to reduce the computational costs so that Google can start using WaveNet commercially as soon as possible.


kenjitamura Yay. We're now one step closer to making celebrities say whatever the hell we want them to say and floating the clips around the internet for people to think they're real sound bytes.

McWhiskey So we fully replace actors visually with cgi. Step 2, replacing them audibly with "cga" is right around the corner.

Then actors will be obsolete.

But if actors are obsolete, who will we collectively worship?

bloodroses Oh great.... now the spam phone calls we receive from telemarketing machines are going to get more realistic sounding; or tech support calls for that matter.

I guess that means that voice recognition security is now useless as well.... lol

lorfa Should have included the link: https://deepmind.com/blog/wavenet-generative-model-raw-audio/

Gives the examples and explains better what it is they're doing.

Flying-Q

McWhiskey said: So we fully replace actors visually with cgi. Step 2, replacing them audibly with "cga" is right around the corner. Then actors will be obsolete. But if actors are obsolete, who will we collectively worship?

Do bear in mind that there will still need to be directors and animators creating the content as Deep Mind has not yet got to the stage of cohesive dramatic inspiration.

George Phillips We are getting closer and closer to creating the robots in the movie A.I. It will definitely come true!

fixxxer113 It's all good until one day, you hear a perfectly synthesized human voice saying

"I'm sorry Dave, I'm afraid I can't let you do that..."