GeForce RTX 3090, RTX 3080 Specifications Reportedly Exposed In Vendor Spec Sheets

The latest Ampere leak from VideoCardz might have just ruined Nvidia's announcement. The publication has obtained new information that allegedly lends credence to the rumored specifications for the GeForce RTX 3090 and GeForce RTX 3080, which will soon vie for a spot on our list of the best graphics cards.

Starting from the top, Ampere silicon will seemingly be manufactured on the 7nm process node. The leaked specification sheets don't name the foundry, but TSMC's 7nm FinFET manufacturing process is the first thing that comes to mind. Ampere-based graphics cards, such as the GeForce RTX 3090 and RTX 3080, will get to exploit the PCIe 4.0 interface. Both models are rumored to use the same GA102 die. Only the GeForce RTX 3090 supports Nvidia SLI, through the chipmaker's NVLink interface.

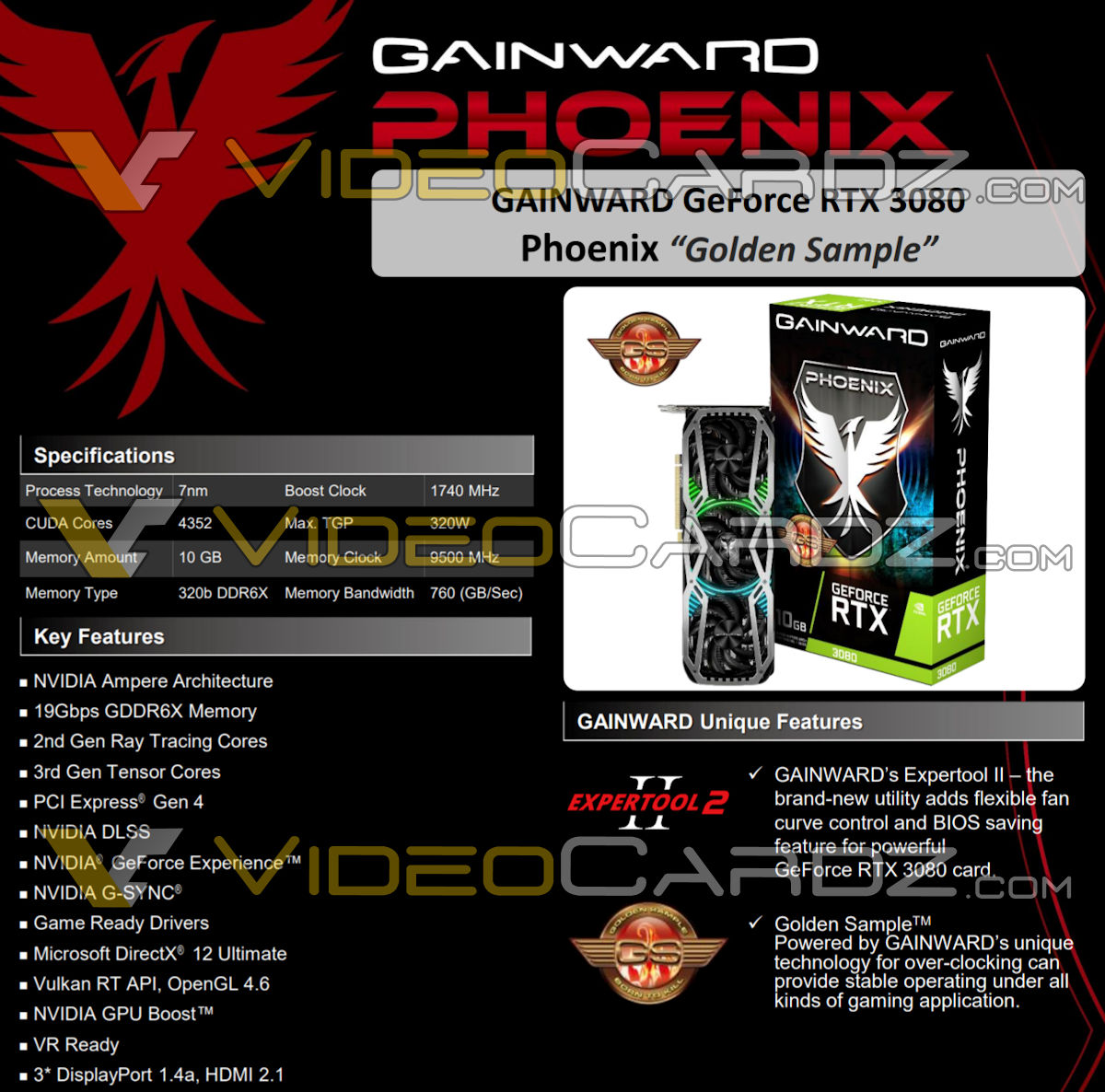

Physically, Gainward's custom models fall right in line with what we've seen from the Founders Edition and the Zotac Gaming GeForce RTX 3090 Trinity HoLo. The GeForce RTX 3090 and GeForce RTX 3080 are going to be gigantic graphics cards. The Gainward models adhere to a 2.7-slot design and rely on a beefy triple-fan cooling solution.

Nvidia GeForce RTX 3090, RTX 3080 Specifications

| Graphics Card | CUDA Cores | Memory Speed | Memory Capacity | Memory Bus | Memory Bandwidth | TGP |
|---|---|---|---|---|---|---|
| GeForce RTX 3090* | 5,248 | 19.5 Gbps | 24GB GDDR6X | 384-bit | 935.8 GBps | 350W |
| Titan RTX | 4,608 | 14 Gbps | 24GB GDDR6 | 384-bit | 672 GBps | 280W |
| GeForce RTX 3080* | 4,352 | 19 Gbps | 10GB / 20GB GDDR6X | 320-bit | 760 GBps | 320W |
| GeForce RTX 2080 Ti | 4,352 | 14 Gbps | 11GB GDDR6 | 352-bit | 616 GBps | 250W |

*Specifications are unconfirmed.

The GeForce RTX 3090 appears to come equipped with 5,248 CUDA cores and 24GB of GDDR6X memory after all. As for clock speeds, the Gainward GeForce RTX 3090 Phoenix Golden Sample has a 1,725 MHz boost clock.

The GDDR6X memory on the GeForce RTX 3090 runs at 19.5 Gbps across a 384-bit memory interface. If you do the math, the maximum theoretical memory bandwidth adds up to 936 GBps, just a bit shy of 1 TBps. The GeForce RTX 3090's TGP (total graphics power) is rated for 350W. Gainward's model confirms early rumors that the card uses twin 8-pin PCIe power connectors. As for video outputs, the GeForce RTX 3090 Phoenix Golden Sample in particular provides three DisplayPort 1.4a outputs and one HDMI 2.1 port.
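
The bandwidth figure follows from a simple rule: per-pin data rate (in Gbps) multiplied by the bus width (in bits), divided by 8 to convert bits to bytes. Below is a minimal Python sketch of that arithmetic, assuming the leaked 19.5 Gbps and 384-bit figures hold up; the function name `peak_memory_bandwidth` is our own and purely illustrative.

```python
def peak_memory_bandwidth(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GBps (gigabytes per second).

    data_rate_gbps: effective per-pin data rate in gigabits per second.
    bus_width_bits: width of the memory interface in bits (one bit per pin).
    """
    # Gigabits per second across all pins, divided by 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


# Leaked, unconfirmed GeForce RTX 3090 figures: 19.5 Gbps GDDR6X on a 384-bit bus.
rtx_3090 = peak_memory_bandwidth(19.5, 384)
print(f"RTX 3090: {rtx_3090:.0f} GBps")  # RTX 3090: 936 GBps
```

The same formula reproduces the other rows of the table, for example 19 Gbps x 320 bits / 8 = 760 GBps for the GeForce RTX 3080. Keep in mind this is a theoretical ceiling; sustained real-world bandwidth is lower.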

As for the GeForce RTX 3080, Gainward's specification table has the graphics card with 4,352 CUDA cores and 10GB of GDDR6X memory. The company's custom model flexes a 1,740 MHz boost clock. The memory checks in at 19 Gbps with a 320-bit memory bus, which amounts to a memory bandwidth of 760 GBps.


The TGP for the GeForce RTX 3080 seems to be 320W, just 30W lower than the GeForce RTX 3090's. That should still warrant two 8-pin PCIe power connectors. Video outputs on the GeForce RTX 3080 Phoenix Golden Sample are identical to those of its bigger brother.

The GeForce RTX 3090's 24GB of VRAM screams that it's the replacement for the Titan RTX. This is puzzling because it isn't like Nvidia to release a Titan-esque graphics card this early in a product launch; Titan models typically arrive a few months later. Nor is Nvidia shooting itself in the foot. The GA102 silicon presumably houses up to 7,552 CUDA cores, and only 5,248 CUDA cores are enabled in the GeForce RTX 3090. If necessary, Nvidia has more than enough headroom to roll out an Ampere-powered Titan graphics card later on, or even a GeForce RTX 3080 Ti.
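
To put that headroom in numbers, here is a quick Python sketch using the presumed 7,552-core full GA102 die and the 5,248 cores reportedly enabled on the RTX 3090. Both figures are unconfirmed leaks, and the constant names are illustrative only.

```python
# Presumed/leaked figures from the report; neither is confirmed by Nvidia.
GA102_FULL_CUDA_CORES = 7_552  # presumed full GA102 die
RTX_3090_CUDA_CORES = 5_248    # reportedly enabled on the GeForce RTX 3090

# Cores left disabled, and the fraction of the presumed die that is active.
disabled = GA102_FULL_CUDA_CORES - RTX_3090_CUDA_CORES
enabled_share = RTX_3090_CUDA_CORES / GA102_FULL_CUDA_CORES

print(f"Disabled CUDA cores: {disabled:,}")                 # Disabled CUDA cores: 2,304
print(f"Share enabled on RTX 3090: {enabled_share:.1%}")    # Share enabled on RTX 3090: 69.5%
```

If the leak is accurate, roughly 30% of the presumed GA102 CUDA cores would sit unused on the RTX 3090, which is the room a future Titan or RTX 3080 Ti could occupy.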

The GeForce RTX 3080, on the other hand, is a curious case. The graphics card reportedly has the same number of CUDA cores as the GeForce RTX 2080 Ti but loses 1GB of VRAM. In Nvidia's defense, the GeForce RTX 3080 did receive a memory upgrade that pushes the memory bandwidth up by 23.4%. The graphics card is on a new architecture, though, so it remains to be seen how the situation pans out.
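
The 23.4% figure can be checked with the same data-rate x bus-width arithmetic. A minimal Python sketch, assuming the leaked RTX 3080 specs (19 Gbps on a 320-bit bus) against the RTX 2080 Ti's 14 Gbps on a 352-bit bus; the helper name `peak_memory_bandwidth` is hypothetical.

```python
def peak_memory_bandwidth(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GBps: per-pin rate (Gbps) x bus width (bits) / 8."""
    return data_rate_gbps * bus_width_bits / 8


rtx_2080_ti = peak_memory_bandwidth(14, 352)  # 616 GBps, GDDR6
rtx_3080 = peak_memory_bandwidth(19, 320)     # 760 GBps, GDDR6X (leaked, unconfirmed)

# Relative increase of the RTX 3080 over the RTX 2080 Ti.
uplift = rtx_3080 / rtx_2080_ti - 1
print(f"RTX 3080 vs. RTX 2080 Ti bandwidth: +{uplift:.1%}")  # RTX 3080 vs. RTX 2080 Ti bandwidth: +23.4%
```

The calculation also shows where the gain comes from: the RTX 3080's bus is actually narrower (320 vs. 352 bits), so the jump from 14 Gbps GDDR6 to 19 Gbps GDDR6X accounts for the entire increase.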

Nvidia's big announcement is this Tuesday. It'll be interesting to see how the chipmaker sells Ampere to the public.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


sstanic

3090 seems like an xx80 Ti with a bit more VRAM, but 3080 definitely seems like an xx70. Nvidia seems to have just 'upgraded' the naming scheme to justify higher pricing. They probably learned that lesson with the RTX 2000 series.

Prisoner #6

I think before I plop down 600-800 dollars on a GPU, I will wait until AMD brings out RDNA2 cards. Why pay these premium prices until we see what both AMD and NVIDIA have to offer? I will probably not purchase a card until I see the reviews of both the 3000 series and whatever AMD calls theirs, and I need one! I am still on a Pascal card, and it's not a 1080 Ti. If I could go back 4 years, I would purchase the 1080 Ti. It was a bargain compared to Turing and Ampere, even with their performance increases.

Zizo007

I am staying with my 2080 Ti; it's enough for my 4K60. But 7,552 CUDA cores for a Titan? Seriously? I think it will be the first time that a Titan is worth its price.

spongiemaster

> sstanic said: 3090 seems like a xx80Ti with a bit more VRAM, but 3080 definitely seems like a xx70. Nvidia seems to just have 'upgraded' the naming scheme to justify higher pricing. They probably learned that lesson with the RTX2000 series.

The 3080 has the same number of CUDA cores as the 2080 Ti, and we know there will be IPC improvements, so the 3080 will be faster in rasterized graphics. Rumors indicate it will crush a 2080 Ti in ray tracing, which is where Nvidia's focus is now, and it has 25% more memory than a 2080. How does that sound like an xx70?

spongiemaster

> Zizo007 said: I am staying with my 2080 Ti, its enough for my 4K60. But 7,552 CUDA for Titan? Seriously? I think it will be the first time that a Titan will be worth its price.

It's only there if Nvidia needs it, which isn't likely. If AMD can challenge the 3090 with an RDNA2 refresh next year, we may see Nvidia drop the hammer with a 3090 Ti. Otherwise, I would expect a 3080 Ti to slot between the 3080 and 3090 in a refresh next year.

sstanic

> spongiemaster said: The 3080 has the same number of cuda cores as the 2080ti, and we know there will be IPC improvement, so the 3080 will be faster in rasterized graphics. Rumors indicate it will crush a 2080ti in raytracing which is where Nvidia's focus is now, and it has 25% more memory than a 2080. How does that sound like a xx70?

Yes, I am aware of all that, and hopefully it is even better, but usually the xx80 and xx70 were the first two to be introduced. It used to be a midrange chip and a slightly cut-down midrange chip, which Nvidia would then market as high-end. If these rumours are correct, this time they are also introducing a midrange chip and its slightly cut-down version, but with different names. The increase in CUDA cores and overall performance is naturally due to 2 years of development, just as it always used to be, so we see a smaller node, more VRAM, etc., but it is still essentially a midrange chip.

So why would they keep chip development on the same-ish track but change the marketing names? There could be various reasons: introducing future products in between, seeing how AMD's chips are doing first, etc. One obvious reason is also that newer, smaller nodes cost significantly more than the old 16-12nm ones, probably more than 75% more, so they get more performance but at a higher cost. And since the market didn't really like the large price hike with RTX 2000, it seems logical to go with more performance = more money = higher-ranking name, since buyers' eventual discontent will be subdued significantly that way. And so the 3070 becomes the 3080, and the 3080 Ti becomes the 3090.

mdd1963

> Admin said: VideoCardz leaks Gainward's GeForce RTX 3090 and GeForce RTX 3080 Phoenix Golden Sample graphics cards.

24 GB of VRAM, it said? Wow!

That would seem largely wasted at 'only' 4K, I'd think; so, short of a few folks attempting to tinker with or run 8K gaming, having a bunch of idle VRAM at only 1440p hardly seems like money well spent. (Well, short of a handful of folks thinking twice the VRAM must be twice as fast.)

RodroX

I hope there's a lot more going on under the hood, because more CUDA cores and faster memory alone won't justify a huge price increase. Not to mention the hefty power requirements (at least on these papers).

I think Nvidia didn't get the memo about PC tech getting smaller and more efficient while keeping the same or lower price.