With RealSense, Intel Seeks To Mimic Human Perception

It’s no secret that Intel, the company we know for its CPUs, has been talking more about the Internet of Things, about RealSense, about machine learning, and even about mixed (or “merged”) reality. Its conference keynotes now tend to feature drones and synchronized robots and virtually produced musical numbers. It’s all in good fun, and certainly all in good business.

We made a visit to Intel’s R&D labs for a somewhat more esoteric view of the underpinnings of it all, and what we learned surprised us in its audacity. Intel’s goal, we were told, is to mimic the human perceptual system. We weren’t fed details about sensor process technology; we were given lessons in human biology. We didn’t talk about overclocking CPUs; we talked about overclocking neurons.

What drove our visit was, in part, RealSense, and what we saw at Intel’s developer conference earlier in the year. We’ve spent a fair amount of time writing about RealSense since Intel introduced it a few IDFs and CESes ago, showcasing it in laptops (and those drones and robots).

But this year, the company announced the R400 series, which is even more advanced, as you’d imagine. Further, the company showed off a prototype of a virtual reality technology called Project Alloy, which will soon feature those same R400 sensors. Project Alloy introduced a new term into our lexicon: “merged reality,” by which Intel really means something more like VR with some real world elements mixed in. (It’s basically augmented reality, with a twist; let’s call it “XR.”) We’ll get into a bit of a discussion about the specifics of what this means, but for now, it’s best to think of Intel’s vision, as it were, as a reversed version of the augmented reality we’ve come to know and love in Microsoft’s HoloLens.

Our tutor for our visit was Dr. Achin Bhowmik, VP of Intel’s Perceptual Computing Lab. In person he is exactly as he comes off when presenting in an IDF session: irrepressible and enthusiastic, with moderately complex explanations of incredibly complex subjects. And always smiling. Smiling, perhaps, because he gets to educate people about the work he and his team are doing.

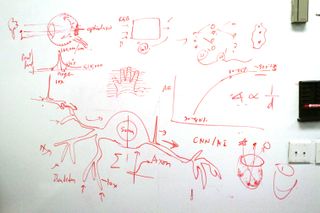

Bhowmik is a natural teacher (as evidenced, perhaps, by his stops at numerous universities around the world), which is good, because we had (and have) much to learn about the technology he’s working on. In response to our questions, he went immediately to a whiteboard and, with a marker and rapid patter, began illustrating how human vision and perception systems work.

A few of the younger employees in his lab were on hand for our visit. I watched them watch him with wide eyes. “You guys probably get this all the time, don’t you,” I joked. “No,” one whispered back, “He never goes off like this.” Simply put, Bhowmik the teacher had the attention of the room.

The Camera: As Far As The Eyes Can Feel

Cameras, as Dr. Bhowmik told us, have traditionally been for preserving memories. But Intel views them as real-time visual sensors that can help us understand the world around us.

Bhowmik talked about the human perceptual system, about the relationship between your eyes and the visual cortex, and about the body’s motion sensors -- as you move, the movement of fluid in the vestibular organ near the cochlea sends directional information to neurons, which send the motion signals to the brain, which in turn maps them to what your eyes are seeing.

When that information does not align, we get motion sickness. Think about being on a ship with no windows: You’re feeling the movement, but not seeing it. For many people, this leads to motion sickness.

Intel is trying to mimic both the eyes and the vestibular systems of your body, and map them. “We’re a hardware company,” Bhowmik said. The goal, then, is to create a visual cortex abstraction in hardware.

Of course, perception isn’t just a reflection of what you see, but how you interpret it. Hence, Intel’s view naturally includes the notion of machine learning/artificial intelligence. Bhowmik said that we can train a perceptual system with millions of samples of, say, hands, but in truth there are billions of possibilities, so robots and other sensing devices need to learn and adapt to what they “see” beyond what they’re programmed to understand.

The deeper the network and sample base of knowledge the better, but the network needs to be learning and growing all the time. In the case of on-device knowledge systems, the device checks in with the network, sharing what it’s learned, and gathering what other systems have learned.

This, too, resembles human biology. The human brain has billions of neurons, interconnected and constantly evolving. Those neurons are the point of input where tens of thousands of electrical pulses (ions) produce one single output. The input pulses carry weights, some excitatory, some inhibitory -- think of a child seeing fire, with the added weight telling them it’s going to hurt if they touch it. These weights determine if the neuron fires or not, and these weights strengthen over time as you rest and sleep. This is how we evolve.
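The weighted-input idea described above is also the basic building block of artificial neural networks. As a minimal sketch (not anything Intel showed us; the function name, weights, and threshold are all made up for illustration), a single artificial neuron sums its weighted inputs and "fires" only if the total reaches a threshold:

```python
def neuron_fires(inputs, weights, threshold=1.0):
    """Return True if the weighted sum of the inputs reaches the threshold.

    Positive weights act as excitatory inputs, negative weights as
    inhibitory ones. All values here are illustrative assumptions.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold


# Three incoming pulses: two excitatory, one inhibitory.
pulses = [1, 1, 1]
print(neuron_fires(pulses, [0.8, 0.6, -0.2]))  # weak inhibition
print(neuron_fires(pulses, [0.8, 0.6, -0.9]))  # strong inhibition
```

With weak inhibition the sum clears the threshold and the neuron fires; with strong inhibition it does not. Training a network amounts to adjusting those weights over many examples.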

The hardware-based sensor system is similar. The cameras and motion sensors on RealSense devices provide inputs to the onboard chip dedicated to processing visual and motion information.

Bhowmik said that some of the learning systems we have -- Siri, Amazon Echo, etc. -- make mistakes, but because they seem partly human, we forgive them. Unlike computers, which are meant to be correct and precise, perceptual systems are, like humans, imprecise at times. Intel CPUs (meaning general purpose processors), he said, are not built to run artificial intelligence.

Bhowmik made another biological comparison: A camera provides uniform pixel density, but the human eye does not. Your eye has a lens, and photoreceptors that convert light into electrical signals, which are passed to the visual cortex. An image is inverted around an optical axis, and there’s an area where you have the highest pixel density and areas where there is no pixel density; further, at the far periphery, there are actually no color sensors. (This is, in a way, similar to foveated rendering.)

Two eyes give you depth perception -- two different pictures of the same world. The spherical shape of the eyeball is the most optimized shape you can have. The cornea and the lens can focus the light better and form an image. Again, sensors are built to mimic the eyes, capturing depth, and the color of the pixel, and also where in space the color is.

One more step: All of these pictures are used to train the network. Millions of depth camera sensors feed the neural network.

RealSense Camera Tech

That is the idea behind Intel’s RealSense technology. But there are multiple versions of Intel RealSense cameras.

There is the SR300, which uses coded light technology, and essentially miniaturizes what Kinect can do. It consists of a MEMS laser projector that creates a coded IR pattern (an 8-bit code for every point in space), and a high speed IR camera that captures that reflected pattern, which the ASIC processes in real time to calculate depth for each pixel.

So you’ve got your laser-generated 2D spatial patterns, the IR camera is taking pictures of them, and the chip is calculating the coordinates of the 3D point cloud, and doing the calibration to map color and depth.
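To make the "8-bit code for every point" idea more concrete, here is a rough sketch of how a coded-light decoder could work in principle. It assumes the projector shows eight binary patterns in sequence and that each pixel's code is read out as plain binary, most significant bit first. Those are our assumptions for illustration (real systems often use Gray codes), and none of this is Intel's actual ASIC logic.

```python
def decode_codes(frames, threshold=128):
    """Combine a sequence of IR frames into one integer code per pixel.

    frames: list of 2D lists of IR intensities (0-255), one frame per
            projected pattern, most significant bit first (an assumption).
    Returns a 2D list of integer codes with the same shape as one frame.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    codes = [[0] * cols for _ in range(rows)]
    for frame in frames:
        for r in range(rows):
            for c in range(cols):
                # A bright pixel means the pattern was "on" at this point.
                bit = 1 if frame[r][c] >= threshold else 0
                codes[r][c] = (codes[r][c] << 1) | bit
    return codes


# Eight tiny 1x2 frames: the left pixel sees bits 1,0,1,0,0,0,0,1,
# the right pixel is never lit.
frames = [[[200, 10]], [[10, 10]], [[200, 10]], [[10, 10]],
          [[10, 10]], [[10, 10]], [[10, 10]], [[200, 10]]]
print(decode_codes(frames))
```

Each decoded code identifies which part of the projected pattern landed on that pixel; knowing that, plus the geometry between projector and camera, is what lets the hardware triangulate depth.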

The depth sensing is done in IR, and the 60 FPS camera captures in RGB. It is a standard, off-the-shelf camera sensor, Intel said. The ASIC can map a 3D coordinate on the color pixel so that it creates an overall texture map, according to Bhowmik.

This is best demonstrated in Intel’s 3D scanning application. I gave this a whirl, and the SR300 scanned my entire body. Every frame has a 3D coordinate and color value for that point, and the ASIC maps all of the depth and textures.
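The depth-to-color mapping Bhowmik described can be sketched with a standard pinhole camera model: turn a depth pixel into a 3D point, shift it into the color camera's frame, and project it onto the color image. This is a simplified illustration, not the SR300's implementation. The intrinsics (`fx`, `fy`, `cx`, `cy`), the 25 mm offset, and the assumption that the two cameras are parallel with no rotation or lens distortion are all hypothetical.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert a depth pixel (u, v) plus its depth in meters to a 3D point."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def project(point, fx, fy, cx, cy):
    """Project a 3D point (in camera coordinates) to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def depth_pixel_to_color_pixel(u, v, depth_m, depth_intr, color_intr, offset):
    """Find where a depth pixel lands in the color image.

    offset is the (x, y, z) translation in meters from the depth camera to
    the color camera; rotation is assumed to be zero for simplicity.
    """
    x, y, z = deproject(u, v, depth_m, *depth_intr)
    moved = (x + offset[0], y + offset[1], z + offset[2])
    return project(moved, *color_intr)


# Hypothetical intrinsics (fx, fy, cx, cy) for a 640x480 depth and color sensor.
depth_intr = (500.0, 500.0, 320.0, 240.0)
color_intr = (600.0, 600.0, 320.0, 240.0)
# The center depth pixel, 1 m away, with the cameras 25 mm apart.
print(depth_pixel_to_color_pixel(320, 240, 1.0, depth_intr, color_intr,
                                 (0.025, 0.0, 0.0)))
```

Repeating this for every depth pixel yields the per-point color lookup that makes a textured 3D scan possible. Note how the pixel shift depends on depth: the closer the point, the further it moves between the two images.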

Another version of a RealSense camera, the R200, has a longer range sensor (tens of meters), and is designed for applications such as drones. It has an IR projector, two IR cameras, a color camera, and the processor.

There is also the new (actually, still to come) R400 series, which Intel announced at IDF. This camera has longer range and better depth quality, Bhowmik said. It can capture HD color at 60Hz. It consists of two IR cameras, a laser projector, and an IR projector with a diffuser. This camera can take pictures from two slightly different viewpoints and calculate the binocular disparity, meaning the shift between the two images, he said. A peripheral camera on the side will detect motion.
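For a sense of how binocular disparity becomes depth, the textbook relationship for two parallel cameras is depth = focal length × baseline / disparity. The sketch below uses that formula with made-up numbers; the focal length, baseline, and function name are our assumptions, not R400 specifications.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Estimate depth in meters from stereo disparity in pixels.

    focal_px is the focal length in pixels and baseline_m is the distance
    between the two cameras in meters. Returns None when the disparity is
    zero or negative, since no finite depth can be computed.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Hypothetical stereo pair: 700 px focal length, cameras 50 mm apart.
for disparity in (70, 35, 7):
    print(disparity, "px ->", depth_from_disparity(disparity, 700.0, 0.05), "m")
```

The smaller the shift between the two images, the farther away the point is, which is also why depth precision falls off with distance: at long range, a one-pixel error in disparity corresponds to a much larger error in depth.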

Project Alloy

One of the more popular applications for all of these sensors is, of course, VR. To that end, Intel announced Project Alloy at IDF16. What was so stunning about Project Alloy was that it combined VR and AR, and it did so using cameras for inside-out 6DoF tracking--all without the confines of external sensors like those from Oculus (Constellation) and HTC (Lighthouse), and all without the entanglement of wires, like so many dog leashes.

Back to this notion of re-creating the actual human experience: If Alloy delivers on its possibilities, you won’t be confined only to areas tracked by external sensors. In a way, your virtual world would be as infinite as your actual world. Because Alloy can bring the physical world into the virtual world via RealSense, it is recreating (or correcting?) the vestibular limitations of other VR systems. Inside the HMD, you see what RealSense sees.

Many have asked why Intel doesn’t call Alloy “augmented reality.” Bhowmik explained that in AR, you overlay something virtual onto the real world. VR, by contrast, transports you to another world entirely, occluding the real world. With Alloy -- and here, Intel calls this a “merged reality” -- the real and virtual worlds are brought together as one, from inside an occluded HMD. Some of the demonstrations Intel has provided include taking objects in the real world and having those interact with and physically impact what’s going on in the virtual world.

Bhowmik talked about the proprioception cue. This is your brain’s way of bringing in your sense of your own body with the perceptual system. In short, your subconscious gets nervous when you don’t see your actual limbs over a period of time. Bhowmik said that even if you know you’re in VR, and you don’t experience any motion sickness during the experience, over time, you may start to get sick anyway. There’s an “animalistic instinct that something is missing,” he said. Therefore, being able to see your limbs in VR is a crucial feature.

Today’s pre-production Alloy uses two custom R200 RealSense cameras and two fisheye cameras. The final production unit will use one R400. Bhowmik revealed that this will include the fixed function hardware in the ASIC, doing the 3D point cloud calculations and mapping in the color, and doing all of the time stamping and synchronization work. Intel isn’t talking about the sensor processor chip, but it will do the 6DoF tracking, and it will run all of the sensor software, including processing hand interactions and collision detection. There will be a CPU and GPU, of course.

Because Intel has yet to divulge specifications on Project Alloy (and/or any other VR HMD iterations or variations), most of the remaining details on Project Alloy are speculation--which we have already engaged in at length. What we do know, though, is that Intel now owns Movidius, a company that makes vision processors and deep learning technology. The deep learning-related goals that Intel has professed for Project Alloy are already present in Movidius’ portfolio. We presume the continued lag on the release of the R400 series cameras has to do with Intel implementing Movidius’ technology into it.

So why is this all significant? It’s not just because the intersection of XR and deep learning portends entirely new types of personal computing--which by itself is of enormous importance--it’s because one of the many companies exploring these technologies in earnest is Chipzilla itself. That is, although these are presently somewhat niche, fringey, developing technologies, Intel sees a future in which they underpin the next wave of computing.

If Intel is betting correctly here, the work being done by Dr. Achin Bhowmik and his team in the Perceptual Computing Lab will keep the company in computing’s critical path, long after Moore’s Law becomes just another chapter in the technology history books.

Update, 12/9/16, 10:40am PT: We have adjusted a few bits of the text with more accurate descriptions of the technology being discussed.
