Tom's Hardware Verdict

Pros

- Fastest Graphics Card (until 3090 arrives)
- Priced the same as RTX 2080 Super
- Delivers true 4K 60+ fps gaming
- Runs reasonably cool and quiet
- Major architectural updates for future games

Cons

- Highest TDP for a single GPU (until 3090)
- Potential for CPU bottlenecks
- Needs a 1440p or 4K display to shine
- We're not sold on the 12-pin power adapter
- No RGB bling (but this is a pro for many!)


Nvidia's GeForce RTX 3080 Founders Edition is here, claiming the top spot on our GPU benchmarks hierarchy, and ranking as the best graphics card currently available — provided you're after performance first, with price and power being lesser concerns. After months of waiting, we finally have independent benchmarks and testing data. Nvidia has thrown down the gauntlet, clearly challenging AMD's Big Navi to try and match or beat what the Ampere architecture brings to the table.

We're going to hold off on a final verdict for now, as we have other third-party RTX 3080 cards to review, and those reviews will begin as soon as tomorrow. That's good news, as it means customers won't be limited to Nvidia's Founders Edition for the first month or so like we were with the RTX 20-series launch. Another piece of good news is that there's no Founders Edition 'tax' this time: The RTX 3080 FE costs $699, direct from Nvidia, and that's the base price of RTX 3080 cards for the time being. The bad news is that we fully expect supply to fall short of what should be exceptionally high demand.

The bottom line, if you don't mind spoilers, is that the RTX 3080 FE is 33% faster than the RTX 2080 Ti, on average. Or, if you prefer other points of comparison, it's 57% faster than the RTX 2080 Super, 69% faster than the RTX 2080 FE — heck, it's even 26% faster than the Titan RTX!

But there's a catch: We measured all of those 'percent faster' results across our test suite running at 4K ultra settings. The lead narrows if you drop down to 1440p, and it decreases even more at 1080p. It's still 42% faster than a 2080 FE at 1080p ultra, but this is very much a card made for higher resolutions. Also, you might need a faster CPU to get the full 3080 experience — check out our companion GeForce RTX 3080 CPU Scaling article for the full details.

| Graphics Card | RTX 3080 FE | RTX 2080 Super FE | RTX 2080 FE |
|---|---|---|---|
| Architecture | GA102 | TU104 | TU104 |
| Process (nm) | Samsung 8N | TSMC 12FFN | TSMC 12FFN |
| Transistors (Billion) | 28.3 | 13.6 | 13.6 |
| Die size (mm^2) | 628.4 | 545 | 545 |
| GPCs | 6 | 6 | 6 |
| SMs | 68 | 48 | 46 |
| FP32 CUDA Cores | 8704 | 3072 | 2944 |
| Tensor Cores | 272 | 384 | 368 |
| RT Cores | 68 | 48 | 46 |
| Boost Clock (MHz) | 1710 | 1815 | 1800 |
| VRAM Speed (Gbps) | 19 | 15.5 | 14 |
| VRAM (GB) | 10 | 8 | 8 |
| VRAM Bus Width (bits) | 320 | 256 | 256 |
| ROPs | 96 | 64 | 64 |
| TPCs | 34 | 24 | 23 |
| TMUs | 272 | 192 | 184 |
| GFLOPS FP32 | 29768 | 11151 | 10598 |
| Tensor TFLOPS FP16 (Sparsity) | 119 (238) | 89 | 85 |
| RT TFLOPS | 58 | 26 | 25 |
| Bandwidth (GBps) | 760 | 496 | 448 |
| TDP (watts) | 320 | 250 | 225 |
| Dimensions (mm) | 285x112x38 | 267x116x38 | 267x116x38 |
| Weight (g) | 1355 | 1278 | 1260 |
| Launch Date | Sep-20 | Jul-19 | Sep-18 |
| Launch Price | $699 | $699 | $799 |

Meet GA102: The Heart of the Beast

We have a separate article going deep into the Ampere architecture that powers the GeForce RTX 3080 and other related GPUs. If you want the full rundown of everything that's changed compared to the Turing architecture, we recommend starting there. But here's the highlight reel of the most important changes:

The GA102 is the first GPU from Nvidia to drop into the single digits on lithography, using Samsung's 8N process. The general consensus is that TSMC's N7 node is 'better' overall, but it also costs more and is currently in very high demand, including from Nvidia's own A100. Could the consumer Ampere GPUs have been even better with 7nm? Perhaps. But they might have cost more, only been available in limited quantities, or maybe they would have been delayed a few more months. Regardless, GA102 is still a big and powerful chip, boasting 28.3 billion transistors packed into a 628.4 mm² die. If you're wondering, that's 52% more transistors than the TU102 chip used in RTX 2080 Ti, but in a 17% smaller area.
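For anyone who wants to check the math, here's a quick sketch of that comparison. The GA102 numbers come from the spec table, but the TU102 figures (18.6 billion transistors, 754 mm^2) aren't listed in this review; they're assumed from Nvidia's published Turing specs, and the helper names are purely illustrative.

```python
# Sanity check of the GA102 vs. TU102 comparison.
# GA102 figures come from the spec table in this review.
# TU102 figures are an ASSUMPTION taken from Nvidia's published Turing specs.
GA102 = {"transistors_b": 28.3, "die_mm2": 628.4}
TU102 = {"transistors_b": 18.6, "die_mm2": 754.0}


def pct_change(new: float, old: float) -> float:
    """Percent change going from old to new."""
    return (new / old - 1.0) * 100.0


def density_mtr_per_mm2(chip: dict) -> float:
    """Transistor density in millions of transistors per square millimeter."""
    return chip["transistors_b"] * 1000.0 / chip["die_mm2"]


more_transistors = pct_change(GA102["transistors_b"], TU102["transistors_b"])
area_change = pct_change(GA102["die_mm2"], TU102["die_mm2"])

print(f"Transistors: {more_transistors:+.0f}%")
print(f"Die area:    {area_change:+.0f}%")
print(
    f"Density:     {density_mtr_per_mm2(GA102):.1f} vs. "
    f"{density_mtr_per_mm2(TU102):.1f} MTr/mm^2"
)
```

Under those assumed TU102 figures, the density works out to roughly 1.8 times what Turing managed on 12FFN.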

Ampere ends up as a split architecture, with the GA100 taking on data center ambitions while the GA102 and other consumer chips have significant differences. The GA100 focuses far more on FP64 performance for scientific workloads, as well as doubling down on deep learning hardware. Meanwhile, the GA102 drops most of the FP64 functionality and instead includes ray tracing hardware, plus some other architectural enhancements. Let's take a closer look at the Ampere SM found in the GA102 and GA104.

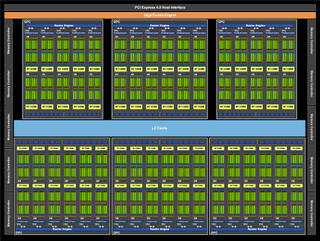

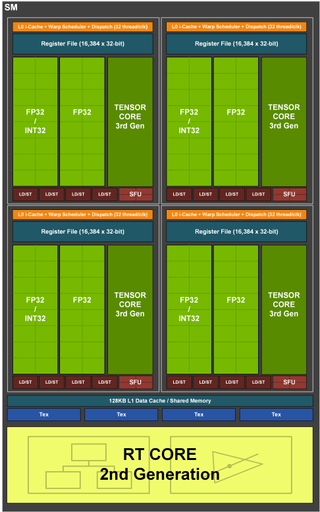

Nvidia GPUs consist of several GPCs (Graphics Processing Clusters), each of which has some number of SMs (Streaming Multiprocessors). Nvidia splits each SM into four partitions that can operate on separate sets of data. With Ampere, each SM partition now has 16 FP32 CUDA cores, 16 FP32/INT CUDA cores, a third-gen Tensor core, load/store units, and a special function unit. The whole SM has access to shared L1 cache and memory, and there's a single second-gen RT core. In total, that means 64 FP32 cores and 64 FP32/INT cores, four Tensor cores, and one RT core. Let's break that down a bit more.

The Turing GPUs added support for concurrent FP32 (32-bit floating point) and INT (32-bit integer) operations. FP32 tends to be the most important workload for graphics and games, but there's still a decent amount of INT operations — for things like address calculations, texture lookups, and various other types of code. With Ampere, the INT datapath is upgraded to support INT or FP32, but not at the same time.

If you look at the raw specs, Ampere appears to be a far bigger jump in performance than the 70% we measured. 30 TFLOPS! But it generally won't get anywhere near that high because the second datapath is an either/or situation: It can't do both types of instructions on the pipeline in the same cycle. Nvidia says around 35% of gaming calculations are INT operations, which means you'll end up with something more like 20 TFLOPS of FP32 and 10 TOPS of INT on the RTX 3080.
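Here's a rough sketch of where those numbers come from. It assumes the usual convention of counting one fused multiply-add as two floating-point operations per core per clock, and it uses a deliberately naive split in which 35% of all issue slots go to INT work. The function names are made up for illustration, and real workloads won't divide this cleanly.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 rate, counting one FMA (2 FLOPs) per core per clock."""
    return 2 * cuda_cores * boost_mhz * 1e6 / 1e12


def split_throughput(peak_tflops: float, int_share: float) -> tuple:
    """Naive split of the peak rate between FP32 and INT instructions.

    Assumes int_share of all issue slots are spent on INT work, so only
    the remainder is available for FP32.
    """
    return peak_tflops * (1.0 - int_share), peak_tflops * int_share


# 8704 cores and a 1710 MHz boost clock, per the spec table.
peak = peak_fp32_tflops(8704, 1710)
fp32_tflops, int_tops = split_throughput(peak, 0.35)

print(f"Peak FP32:       {peak:.1f} TFLOPS")
print(f"FP32 in games:  ~{fp32_tflops:.1f} TFLOPS")
print(f"INT in games:   ~{int_tops:.1f} TOPS")
```

The peak figure lines up with the 29,768 GFLOPS in the spec table, and the naive split lands close to the 20/10 estimate.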

While we're on the subject, let's also point out that a big part of the increased performance comes from increased power limits. RTX 2080 was a 225W part (for the Founders Edition), and RTX 3080 adds nearly 100W to that, for a 320W TDP. That's about 42% more power for 70% more performance. It's technically a win in overall efficiency, but in the pursuit of performance, Nvidia had to move further to the right on the voltage and frequency curve. Nvidia says RTX 3080 can deliver a 90% improvement in performance-per-watt if you limit performance to the same level on both the 2080 and 3080 … but come on, who wants to limit performance that way? Well, maybe laptops, but let's not go there.
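To put a number on that efficiency win, here's a minimal sketch using the TDP figures from the spec table and our roughly 70% 4K performance lead over the RTX 2080 FE. It treats TDP as a stand-in for actual power draw, which is an assumption, and the function name is illustrative.

```python
def perf_per_watt_gain(perf_ratio: float, new_watts: float, old_watts: float) -> float:
    """Fractional change in performance per watt between two cards."""
    power_ratio = new_watts / old_watts
    return perf_ratio / power_ratio - 1.0


# 1.70x performance, 320W vs. 225W TDP (TDP used as a proxy for real draw).
power_increase = 320 / 225 - 1.0
gain = perf_per_watt_gain(1.70, new_watts=320, old_watts=225)

print(f"Power increase:     {power_increase:.0%}")
print(f"Perf-per-watt gain: {gain:.0%}")
```

At stock settings that's an improvement of around 20%, well short of the 90% Nvidia quotes for the performance-matched scenario.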

One thing that hasn't changed much is the video ports. Okay, that's only partially true. There's still a single HDMI port, but it's now HDMI 2.1 instead of Turing's HDMI 2.0b, while the three DisplayPort connections remain 1.4a. And last but not least, there's no VirtualLink port this round; apparently, VirtualLink is dead. RIP. The various ports are all capable of 8K60 using DSC (Display Stream Compression), a "visually lossless" technique that's actually not really visually lossless. But you might not notice at 8K.

Getting back to the cores, Nvidia's third-gen tensor cores in GA102 work on 8x4x4 FP16 matrices, so up to 128 matrix operations per cycle. (Turing's tensor cores used 4x4x4 matrices, while the GA100 uses 8x4x8 matrices.) With FMA (fused multiply-add), that's 256 FP operations per cycle, per tensor core. Multiply by the 272 total tensor cores and clock speed, and that gives you 119 TFLOPS of FP16 compute. However, Ampere's tensor cores also add support for fine-grained sparsity — basically, it eliminates wasting time doing multiplications by 0, since the answer is always 0. Sparsity can provide up to twice the FP16 performance in applications that can use it.
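The tensor math above is easy to reproduce. This sketch simply multiplies out the figures from the text and the spec table (8x4x4 matrices, 272 tensor cores, 1710 MHz boost). The function name is illustrative, and the 2x sparsity factor is the best-case figure.

```python
def tensor_fp16_tflops(m: int, n: int, k: int, tensor_cores: int, boost_mhz: float) -> float:
    """Dense FP16 tensor throughput in TFLOPS.

    Each tensor core performs m*n*k multiply-accumulates per clock, and
    each fused multiply-add counts as two floating-point operations.
    """
    fma_per_clock = m * n * k
    flops_per_clock = 2 * fma_per_clock
    return flops_per_clock * tensor_cores * boost_mhz * 1e6 / 1e12


dense = tensor_fp16_tflops(8, 4, 4, tensor_cores=272, boost_mhz=1710)
sparse = 2 * dense  # best case with fine-grained sparsity

print(f"Dense FP16:  {dense:.0f} TFLOPS")
print(f"With sparsity: up to {sparse:.0f} TFLOPS")
```

Those results match the 119 (238) TFLOPS listed in the spec table.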

The RT cores receive similar enhancements, with up to double the ray/triangle intersection calculations per clock. The RT cores also support a time variable, which is useful for calculating things like motion blur. All told, Nvidia says the 3080’s new RT cores are 1.7 times faster than the RTX 2080’s, and they can be up to five times as fast for motion blur.

There are plenty of other changes as well. The L1 cache/shared memory capacity and bandwidth have been increased to better feed the cores (8704KB vs. 4416KB), and the L2 cache is also 25% larger than before (5120KB vs. 4096KB). The L1 cache can also be configured as varying amounts of L1 vs. shared memory, depending on the needs of the application. Register file size is also nearly 50% larger (17408KB vs. 11776KB) with the RTX 3080. GA102 can also do concurrent RT + graphics + DLSS (previously, using the RT cores would stop the CUDA cores).
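Dividing those totals by the SM counts in the spec table shows where the growth comes from. This is just arithmetic on the numbers quoted above; the dictionary layout and function names are illustrative.

```python
# Chip-wide totals in KB from the text, SM counts from the spec table.
TOTALS_KB = {
    "RTX 3080": {"sms": 68, "l1_shared": 8704, "registers": 17408, "l2": 5120},
    "RTX 2080": {"sms": 46, "l1_shared": 4416, "registers": 11776, "l2": 4096},
}


def per_sm_kb(card: str, key: str) -> float:
    """Per-SM capacity implied by the chip-wide total."""
    return TOTALS_KB[card][key] / TOTALS_KB[card]["sms"]


def growth_pct(key: str) -> float:
    """Percent growth in total capacity from RTX 2080 to RTX 3080."""
    return (TOTALS_KB["RTX 3080"][key] / TOTALS_KB["RTX 2080"][key] - 1.0) * 100.0


for card in TOTALS_KB:
    print(
        f"{card}: {per_sm_kb(card, 'l1_shared'):.0f}KB L1/shared per SM, "
        f"{per_sm_kb(card, 'registers'):.0f}KB registers per SM"
    )

for key in ("l1_shared", "registers", "l2"):
    print(f"{key}: +{growth_pct(key):.0f}% total")
```

The per-SM L1/shared memory goes from 96KB to 128KB, while the register file stays at 256KB per SM, so the larger register total comes entirely from the higher SM count.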

Finally, the raster operators (ROPs) have been moved out of the memory controllers and into the GPCs. Each GPC has two ROP partitions of eight ROP units each. This provides more flexibility in performance, so where the GA102 has up to 112 ROPs total, the RTX 3080 disables two memory controllers but only one GPC and ends up with 96 ROPs. This is more critical for the RTX 3070 / GA104, however, which still has 96 ROPs even though it only has eight memory controllers. Each GPC also includes six TPCs (Texture Processing Clusters) with eight TMUs (Texture Mapping Units) and a polymorph engine, though Nvidia only enables 34 TPCs for the 3080.
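The unit counts follow directly from that hierarchy. In this sketch, the seven-GPC figure for a full GA102 is inferred from the 112 ROPs quoted above, and the two-SMs-per-TPC ratio is inferred from the spec table (68 SMs across 34 TPCs). Neither is stated outright in the text, so treat both as assumptions.

```python
ROP_PARTITIONS_PER_GPC = 2
ROPS_PER_PARTITION = 8
TMUS_PER_TPC = 8
SMS_PER_TPC = 2  # inferred from the spec table: 68 SMs / 34 TPCs


def rop_count(gpcs: int) -> int:
    """ROPs now scale with enabled GPCs, not memory controllers."""
    return gpcs * ROP_PARTITIONS_PER_GPC * ROPS_PER_PARTITION


def tmu_count(tpcs: int) -> int:
    """Texture units scale with enabled TPCs."""
    return tpcs * TMUS_PER_TPC


def sm_count(tpcs: int) -> int:
    """SMs scale with enabled TPCs."""
    return tpcs * SMS_PER_TPC


print("Full GA102 ROPs:", rop_count(7))  # 7 GPCs inferred from 112 ROPs
print("RTX 3080 ROPs:  ", rop_count(6))
print("RTX 3080 TMUs:  ", tmu_count(34))
print("RTX 3080 SMs:   ", sm_count(34))
```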

With the core enhancements out of the way, let's also quickly discuss the memory subsystem. GA102 supports up to twelve 32-bit memory channels, of which ten are enabled on the RTX 3080. Nvidia teamed up with Micron to use its GDDR6X memory, which uses PAM4 signaling to boost data rates even higher than before. Where the RTX 20-series cards topped out at 15.5 Gbps in the 2080 Super and 14 Gbps in the other RTX cards, GDDR6X runs at 19 Gbps in the RTX 3080. Combined with the 320-bit interface, that yields 760 GBps of bandwidth, a 70% improvement over RTX 2080.
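The bandwidth figures fall out of a simple formula: per-pin data rate times bus width, divided by eight bits per byte. Here's a short sketch using the numbers from the spec table; the function name is illustrative.

```python
def memory_bandwidth_gbps(data_rate_gbps: float, channels: int, channel_width_bits: int = 32) -> float:
    """Peak memory bandwidth in GBps from per-pin data rate and bus width."""
    bus_width_bits = channels * channel_width_bits
    return data_rate_gbps * bus_width_bits / 8


rtx_3080 = memory_bandwidth_gbps(19.0, channels=10)        # 320-bit GDDR6X
rtx_2080_super = memory_bandwidth_gbps(15.5, channels=8)   # 256-bit GDDR6
rtx_2080 = memory_bandwidth_gbps(14.0, channels=8)         # 256-bit GDDR6

print(f"RTX 3080:       {rtx_3080:.0f} GBps")
print(f"RTX 2080 Super: {rtx_2080_super:.0f} GBps")
print(f"RTX 2080:       {rtx_2080:.0f} GBps")
print(f"3080 vs. 2080:  +{(rtx_3080 / rtx_2080 - 1) * 100:.0f}%")
```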

The RTX 3080’s memory controller has also been improved, with a new feature called EDR: Error Detection and Replay. When the memory detects a failed transmission, rather than crashing or corrupting data, it simply tries again. It will do this until it's successful, though it's still possible to cause a crash with memory overclocking. The interesting bit is that with EDR, higher memory clocks might be achievable, but still result in lower performance. That's because the EDR ends up reducing memory performance when failed transmissions occur. We'll have more to say on this in the overclocking section.
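To see why a higher memory clock can end up slower, consider a toy model where some fraction of transfers has to be replayed. This is not how Nvidia describes EDR internally, and the 21 Gbps overclock and 15% replay rate below are made-up numbers chosen only to illustrate the effect.

```python
def effective_bandwidth_gbps(data_rate_gbps: float, bus_width_bits: int, replay_fraction: float) -> float:
    """Usable bandwidth in GBps when some transfers must be replayed.

    Toy model: any transfer slot spent on a replay carries no new data,
    so usable bandwidth scales with (1 - replay_fraction).
    """
    raw = data_rate_gbps * bus_width_bits / 8
    return raw * (1.0 - replay_fraction)


# Stock clocks with no replays vs. a HYPOTHETICAL overclock where 15%
# of transfer slots are spent on replays.
stock = effective_bandwidth_gbps(19.0, 320, replay_fraction=0.0)
overclocked = effective_bandwidth_gbps(21.0, 320, replay_fraction=0.15)

print(f"Stock:       {stock:.0f} GBps usable")
print(f"Overclocked: {overclocked:.0f} GBps usable")
```

In this made-up case the overclock raises the raw rate but lowers usable bandwidth, which is why it makes sense to judge a memory overclock by measured performance rather than by the clock it holds without crashing.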

GeForce RTX 3080 Founders Edition: Design, Cooling, Aesthetics

Nvidia has radically altered the design of its Founders Edition cards for the RTX 30-series. The new design still includes two axial fans, but Nvidia heavily redesigned the PCB and shortened it so that the 'back' of the card (away from the video ports) consists of just a fan, heatpipes, radiator fins, and the usual graphics card shroud. Nvidia says the new design delivers substantial improvements in cooling efficiency, while at the same time lowering noise levels. We'll see the fruits of the design later.

Aesthetics are highly subjective, and we've heard plenty of people like the new design, while others think it looks boring. There's no RGB bling if that's your thing, and the only lighting consists of a white GeForce RTX logo on the top of the card with subtle lighting around the 'X' on both sides of the card (but only half of the 'X' is lit up on the side with the "RTX 3080" logo).

Personally, I think the new card looks quite nice, and it feels very solid in the hand. It's actually about 100g heavier than the previous RTX 2080 design, and as far as I'm aware, it's the heaviest single-GPU card Nvidia has ever created. It's also about 2cm longer than the previous generation cards and uses the typical two-slot width. (The GeForce RTX 3090 is about ready to make the 3080 FE look puny, though, with its massive three-slot cooler.)

Nvidia provided the above images of the teardown of the RTX 3080 Founders Edition. We're not ready to attempt disassembly of our card yet — and frankly, we're out of time — but we may return to the subject soon. We're told getting the card apart is a bit trickier this round, mostly because Nvidia has hidden the screws behind tiny covers.

The main board looks far more densely populated than previous GPUs, with the 10 GDDR6X memory chips surrounding the GPU in the center. You can also see the angled 12-pin power connector and the funky-looking cutout at the end of the PCB. Power delivery is obviously important with a 320W TGP, and you can see all the solid electrolytic capacitors placed to the left and right of the memory chips.

The memory arrangement is also interesting, with four chips on the left and right sides of the GPU, up to three chips above the GPU (two mounting positions are empty for the RTX 3080), and a final single chip below the GPU. Again, Nvidia clearly spent a lot of effort to reduce the size of the board and other components to accommodate the new and improved cooling design. Spoiler: It works very well.

One interesting thing is that the 'front' fan (near the video ports) spins in the usual direction — counterclockwise. The 'back' fan, which will typically face upward when you install the card in an ATX case, spins clockwise. If you look at the fins, that means the back fan spins the opposite direction from what we normally expect. The reason is that Nvidia found this arrangement pulls air through the radiator better and generates less noise. Also note that the back fan is slightly thicker, and the integrated ring helps increase static pressure on both fans while keeping RPMs low.

If you don't like the look of the Founders Edition, rest assured there will be plenty of other options. We have a few third-party RTX 3080 cards in for testing, all of which naturally include RGB lighting. None of the third-party cards use the 12-pin power connector, either (not that it really matters, since the required adapter comes with the card). Still, that vertically-mounted 12-pin port just looks a bit less robust if you happen to swap GPUs on a regular basis. I plan to leave the adapter permanently connected and just connect or disconnect the normal 8-pin PEG cables. The 12-pin connector appears to be rated for 25 'cycles,' and I've already burned through half of those (not that I expect it to fail any time soon).


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

chickenballs: I thought the 3080 was released in September.

Maybe it's considered a "paper launch."

Nvidia won't like that :D

chalabam said: This article lacks a tensorflow benchmark

-

JarredWaltonGPU:

Zeecoder said: This article is outdated.

FYI, it's the same review that we posted at the launch, just reformatted and paginated now. If I wasn't on break for the holidays, I'd maybe do a bunch of new charts, but I've got other things I'll be doing in that area soon enough.

-

MagicPants: I still think the shortage is the big news for the 3080.

I might be paranoid, but I think there may be something to the fact that the top eBay 3080 scalper "occomputerparts" has sold 97 RTX 3080s from EVGA specifically and shipped them from Brea, California.

Anyone want to guess where EVGA is headquartered??? Brea, California.

Now it could be that some scalper wrote a bot and has been buying them off Amazon, Best Buy, and Newegg. But then why only sell EVGA cards? They'd have to program their bot to ignore cards from other vendors, which wouldn't make any sense.

-

TEAMSWITCHER (replying to MagicPants): It's probably an inside job. Demand for RTX 3000 series is off the charts! I don't see any good reason to buy an AMD GPU other than for a pity-purchase. AMD is pricing their cards less, because they are less. In sheer performance it's just a bit less, but in next generation capabilities ... it's a lot less. I will hold out for an RTX 3080. AMD won't catch up before Nvidia has filled the demand of the market. Patience will be rewarded.

-

LeszekSol: What is the point of discussing items (namely, the Nvidia RTX 3080 Founders Edition) which do not exist on the free market? Cards have been unavailable since the first day of "hitting the market," and even Nvidia has no idea (or perhaps plans) when they will become available again. There are other cards based on similar architecture, but actually a little different, so... WHAT FOR, guys, are you losing time discussing this? Let's forget about Nvidia, or let's put it on the shelf with the Moby Dick books...

-

PureMist: Hello,

I have a question about the settings used in the testing for FFXIV. The chart says Medium or Ultra but the game itself only has Maximum and two PC and Laptop settings. So how do these correlate to the actual in game settings? Or are “Medium” and “Ultra” two custom presets you made for testing purposes?

Thank you for your time.

-

JarredWaltonGPU (replying to PureMist): Sorry, my chart generation script defaults to "medium" and "ultra" and I didn't think about this particular game. You're right, the actual settings are different in FFXIV Benchmark. I use the "high" and "maximum" settings instead of medium/ultra for that test. I'll see about updating the script to put in the correct names, though I intend to drop the FFXIV benchmark at some point regardless.

-

acsmith1972: The cheapest I see this card for right now is $1800. I really wish these manufacturers wouldn't be pricing these cards based on bitcoin mining. It's once again going to price the rest of us out of getting any decent video cards till BTC drops again.