Nvidia Reportedly to Triple Output of Compute GPUs in 2024: Up to 2 Million H100s

Triple the output, triple the revenue?

Nvidia, which just brought in over $10 billion in revenue in one quarter from its datacenter-oriented compute GPUs, plans to at least triple output of such products in 2024, according to the Financial Times, which cites sources with knowledge of the matter. The move is very ambitious, and if Nvidia manages to pull it off while demand for its A100, H100, and other compute GPUs for artificial intelligence (AI) and high-performance computing (HPC) applications remains strong, it could mean enormous revenue for the company.

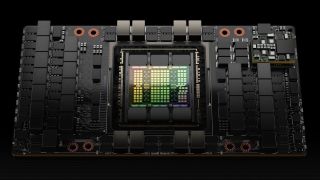

Demand for Nvidia's flagship H100 compute GPU is so high that it is sold out well into 2024, the FT reports. The company intends to increase production of its GH100 processors at least threefold, the newspaper claims, citing three individuals familiar with Nvidia's plans. Projected H100 shipments for 2024 range from 1.5 million to 2 million units, a significant rise from the roughly 500,000 units expected this year.

Because Nvidia's proprietary CUDA framework is tailored for AI and HPC workloads and runs only on Nvidia hardware, hundreds of applications work only on Nvidia's compute GPUs. While both Amazon Web Services and Google have their own custom processors for AI training and inference, they also have to buy boatloads of Nvidia compute GPUs because their clients want to run their applications on them.

But increasing the supply of Nvidia's H100 compute GPUs, the GH200 Grace Hopper supercomputing platform, and products based on them is not going to be easy. Nvidia's GH100 is a complex processor that is rather hard to make, and to triple its output, the company has to overcome several bottlenecks.

Firstly, the GH100 compute GPU is a huge piece of silicon measuring 814 mm², so it is hard to make in large volumes. Although yields are likely reasonably high by now, Nvidia still needs to secure a lot of 4N wafer supply from TSMC to triple output of its GH100-based products. A rough estimate suggests TSMC and Nvidia can get at most 65 chips per 300 mm wafer.

Manufacturing 2 million such chips would thus require nearly 31,000 wafers. That is certainly possible, but it is a sizeable fraction (roughly 20%) of TSMC's total 5nm-class output of around 150,000 wafers per month, and that capacity is currently shared among AMD (for its CPUs and GPUs), Apple, Nvidia, and other companies.
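The wafer arithmetic above can be checked with a few lines of Python. This is a sketch under stated assumptions: the dies-per-wafer formula is a common first-order approximation (not necessarily how the 65-chip estimate was derived), it ignores the die's aspect ratio, scribe lines, edge exclusion, and defect yield, and the constant and function names are illustrative. Only the numbers themselves come from the article.

```python
import math

# Figures quoted in the article (names are illustrative)
DIE_AREA_MM2 = 814.0                 # GH100 die size
WAFER_DIAMETER_MM = 300.0            # standard 300 mm wafer
TARGET_CHIPS = 2_000_000             # upper end of projected 2024 H100 shipments
CHIPS_PER_WAFER = 65                 # the article's rough upper bound
TSMC_5NM_WAFERS_PER_MONTH = 150_000  # the article's 5nm-class capacity estimate


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float) -> float:
    """First-order approximation: wafer area divided by die area, minus a
    correction for partial dies lost around the wafer edge.
    Assumption: ignores scribe lines, edge exclusion, die shape, and defects."""
    radius = wafer_diameter_mm / 2
    return (math.pi * radius**2 / die_area_mm2
            - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))


def wafers_needed(chips: int, chips_per_wafer: int) -> int:
    # Round up: a partially used wafer still has to be started.
    return math.ceil(chips / chips_per_wafer)


if __name__ == "__main__":
    dies = gross_dies_per_wafer(DIE_AREA_MM2, WAFER_DIAMETER_MM)
    wafers = wafers_needed(TARGET_CHIPS, CHIPS_PER_WAFER)
    share = wafers / TSMC_5NM_WAFERS_PER_MONTH
    print(f"Approx. gross dies per wafer: {dies:.1f}")
    print(f"Wafers for {TARGET_CHIPS:,} chips: {wafers:,}")
    print(f"Share of one month's 5nm-class output: {share:.1%}")
```

With these inputs, the approximation lands in the low 60s, in line with the "at most 65" estimate, and 2 million chips at 65 per wafer works out to about 30,800 wafers, or roughly a fifth of one month's 5nm-class output.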

Secondly, GH100 relies on HBM2E or HBM3 memory and uses TSMC's CoWoS packaging, so Nvidia needs to secure enough CoWoS capacity as well. Right now, TSMC is struggling to meet demand for CoWoS packaging.

Thirdly, because H100-based devices use HBM2E, HBM3, or HBM3E memory, Nvidia will have to get enough HBM memory packages from companies like Micron, Samsung, and SK Hynix.


Finally, Nvidia's H100 compute cards or SXM modules have to be installed somewhere, so Nvidia will need to ensure that its partners also at least triple output of their AI servers, which is another concern.

But if Nvidia can supply all of the requisite H100 GPUs, it certainly stands to make a massive profit on the endeavor next year.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

JarredWaltonGPU

hotaru251 said: wonder if they'll cut into their gaming GPUs if they can't get enough otherwise.

As noted in the text, at ~65 chips per wafer, 2 million H100 GPUs would require ~31,000 wafers. That's basically 20% of one month's total 5nm-class output for TSMC: not insignificant, but also not a huge issue. And considering how the RTX 40-series has been selling, I suspect reallocating some of the AD10x wafers to GH100 instead would make a lot of sense.

-

umeng2002_2

They'll probably reduce gaming GPU output. It's basic business practice to strangle supply to keep prices up, especially when those dies can be used for much higher-margin products.

I'm sure Nvidia's long-term strategy is to stop making gaming GPUs altogether.

They've been burned by tech fads before (crypto mining), and there's always a chance "AI" will burn them too.

-

bit_user

umeng2002_2 said: I'm sure Nvidia's long-term strategy is to stop making gaming GPUs altogether.

Usually, when there's a profitable business that is no longer core to a company's strategy, they spin it off into a separate company rather than shut it down. Or they might sell it, but I'm not really sure who would and could buy it.

-

thestryker

umeng2002_2 said: I'm sure Nvidia's long-term strategy is to stop making gaming GPUs altogether.

So long as the base architecture between consumer and enterprise stays the same, they won't get out of the gaming GPU industry, barring a massive margin shift.

bit_user said: Usually, when there's a profitable business that is no longer core to a company's strategy, they spin it off into a separate company rather than shut it down. Or they might sell it, but I'm not really sure who would and could buy it.

Due to IP issues, I can't see a viable path to selling, but a restructuring certainly is possible.

-

bit_user

thestryker said: So long as the base architecture between consumer and enterprise stays the same

It's been diverging, I'm pretty sure.

thestryker said: Due to IP issues, I can't see a viable path to selling, but a restructuring certainly is possible.

Bah, you lack imagination. The parent can certainly extend a license to the shared IP necessary for graphics, probably with strings attached to restrict the offshoot from turning around and re-entering the core deep learning market.

Anyway, such a move would probably be years down the road, if ever.

-

thestryker

bit_user said: It's been diverging, I'm pretty sure.

Not really. There have only been two enterprise-only architectures, and they've used every consumer architecture for enterprise products.

Desktop: Kepler -> Maxwell -> Pascal -> Turing -> Ampere -> Ada

Enterprise: Kepler -> Maxwell -> Pascal -> Volta -> Turing -> Ampere -> Hopper/Ada

-

oofdragon

Nvidia could leave the consumer GPU market at some point if it's that much more profitable to sell only to enterprise, who knows. I'm usually into AMD GPUs anyway, but we'd be in bad waters without competition, especially considering graphics have kind of stalled at the PS5 level for some time now.

-

hannibal

Nvidia is not moving away from consumer GPUs... They will just turn into the Apple of GPUs: the more expensive, the more desired option. Nvidia does not need to compete on price anymore, because it can sell either really expensive AI GPUs or expensive consumer GPUs... It is a win-win situation for Nvidia!

If sales of consumer parts go down, they can increase AI parts and raise consumer prices to compensate. There are enough customers (84%) who are willing to buy an Nvidia GPU, no matter the cost!

-

closs.sebastien

If you manage your company in a smart way, you will favor production of the product that generates the biggest margin:

-> increase production of AI products if demand is exploding, whatever the (very high) selling price

-> decrease production of consumer GPUs to make room for the AI products
