Nvidia's Blackwell GPUs Rumored to Feature Up to 33% More Cores, 512-Bit Bus

Possible core counts of GB100 and GB202 GPUs explored.

Well-known hardware leaker @kopite7kimi, who has a solid track record with details about Nvidia's plans, has shared some of his thoughts about possible configurations of Nvidia's next-generation GPUs, codenamed Blackwell. If his assumptions, presumably based on knowledge of certain details, are correct, then Blackwell may get quite an upgrade in CUDA core count and memory interface. However, since this information is unofficial, it should be taken with caution.

"As I mentioned before, GA100 is 8 [GPC] * 8 [TPC], and GH100 is 8 [GPC] * 9 [TPC]," @kopite7kimi wrote in an X post. "[Compute] GB100 will have a basic structure like 8 [GPC] * 10 [TPC]. [Client PC] GB202 looks like 12 [GPC] * 8 [TPC]."

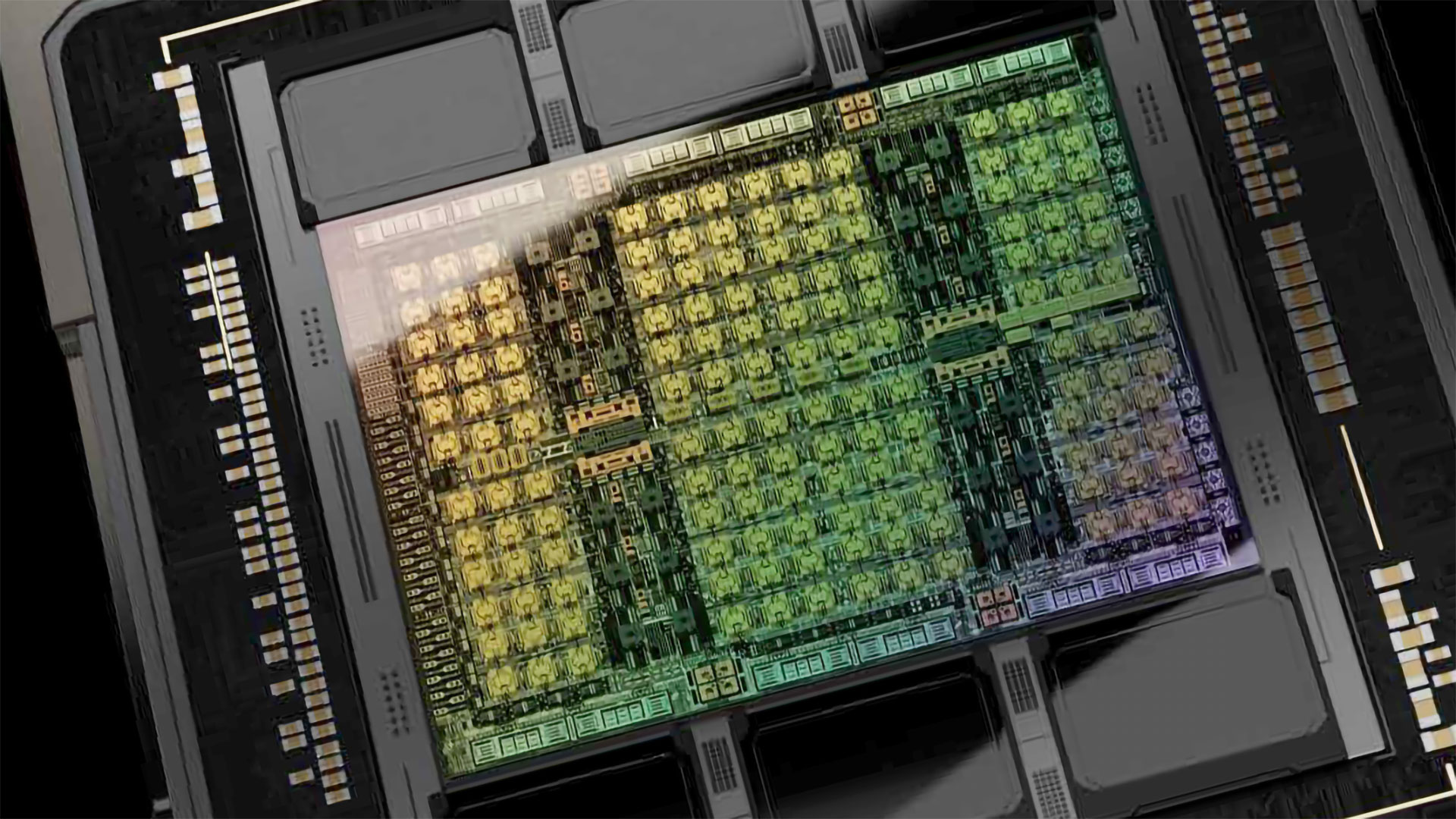

Nvidia's GPUs are organized into large graphics processing clusters (GPCs), each made up of smaller texture processing clusters (TPCs), which in turn contain streaming multiprocessors (SMs) that house the actual CUDA cores.
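Put as a formula, the full-die CUDA core count is simply the product of the four levels of that hierarchy. The symbol names below are introduced here for illustration only; the worked line uses the GH100 figures from the table that follows.

$$
N_{\text{CUDA}} = N_{\text{GPC}} \times N_{\text{TPC/GPC}} \times N_{\text{SM/TPC}} \times N_{\text{core/SM}}, \qquad
N_{\text{CUDA}}^{\text{GH100}} = 8 \times 9 \times 2 \times 128 = 18{,}432
$$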

| GPU | GPCs | TPCs per GPC | SMs per TPC | CUDA cores per SM | CUDA core count |
| --- | --- | --- | --- | --- | --- |
| GA100 | 8 | 8 | 2 | 64 | 8,192 |
| GA102 | 7 | 6 | 2 | 128 | 10,752 |
| GH100 | 8 | 9 | 2 | 128 | 18,432 |
| AD102 | 12 | 6 | 2 | 128 | 18,432 |
| GB100 | 8 | 10 | 2 | 128 | 20,480 |
| GB202 | 12 | 8 | 2 | 128 | 24,576 |

Assuming that the renowned hardware leaker is correct and that Nvidia keeps the number of streaming multiprocessors per TPC and CUDA cores per SM unchanged, the company's GB100 compute GPU for artificial intelligence (AI) and high-performance computing (HPC) applications will get 20,480 CUDA cores (an 11% increase over GH100) in its full configuration, whereas the client PC-oriented GB202 GPU will get 24,576 CUDA cores (a 33% increase over AD102) in its full configuration.
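The arithmetic behind these figures can be reproduced in a few lines. The Python sketch below multiplies down the GPC, TPC, SM, and core hierarchy for every row of the table and computes the two percentage increases quoted above. `GpuConfig`, `CONFIGS`, and `percent_increase` are hypothetical names chosen for illustration, and the GB100 and GB202 entries encode the leaked layouts under the assumption that 2 SMs per TPC and 128 CUDA cores per SM carry over unchanged; none of this reflects confirmed Nvidia specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    """Hypothetical container for one full-die GPU layout."""
    name: str
    gpcs: int
    tpcs_per_gpc: int
    sms_per_tpc: int
    cores_per_sm: int

    @property
    def cuda_cores(self) -> int:
        # Full-die CUDA core count: GPCs x TPCs/GPC x SMs/TPC x cores/SM.
        return self.gpcs * self.tpcs_per_gpc * self.sms_per_tpc * self.cores_per_sm


# Rows from the table; GB100 and GB202 are rumored, not confirmed, layouts.
CONFIGS = {
    c.name: c
    for c in (
        GpuConfig("GA100", 8, 8, 2, 64),
        GpuConfig("GA102", 7, 6, 2, 128),
        GpuConfig("GH100", 8, 9, 2, 128),
        GpuConfig("AD102", 12, 6, 2, 128),
        GpuConfig("GB100", 8, 10, 2, 128),
        GpuConfig("GB202", 12, 8, 2, 128),
    )
}


def percent_increase(new: GpuConfig, old: GpuConfig) -> float:
    # Relative growth in full-die CUDA core count, in percent.
    return (new.cuda_cores / old.cuda_cores - 1) * 100


if __name__ == "__main__":
    for new, old in (("GB100", "GH100"), ("GB202", "AD102")):
        n, o = CONFIGS[new], CONFIGS[old]
        print(f"{n.name}: {n.cuda_cores:,} cores, +{percent_increase(n, o):.0f}% vs {o.name}")
```

Running it prints `GB100: 20,480 cores, +11% vs GH100` and `GB202: 24,576 cores, +33% vs AD102`. Changing `sms_per_tpc` or `cores_per_sm` for the Blackwell rows shows how sensitive these estimates are to the assumption that the SM layout stays the same.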

Given the workloads that GB100 will encounter, it is logical for Nvidia to use 'fat' GPCs with as many TPCs per GPC as possible, so that more data exchange stays inside a single GPC instead of crossing between GPCs. At the same time, it might be reasonable for Nvidia to make GB202's GPCs somewhat 'fatter' than they are today to simplify the internal organization of the GPU. However, an all-new organization of GPCs will likely require driver optimizations.

In addition to a new microarchitecture and an increased number of CUDA cores, Nvidia's GB100 is expected to get an 8,192-bit HBM3/HBM3E memory interface, whereas GB202 is projected to get a 512-bit GDDR7 memory bus, the leaker claims.

About a week ago, @kopite7kimi suggested that Blackwell might be Nvidia's first GPU family to adopt a multi-chiplet architecture, but did not elaborate. It is unclear whether the GPC and TPC counts he shared describe a single chiplet or several of them. TSMC's N3 process technology (which will presumably be used to make Blackwell GPUs) offers only limited transistor density advantages over N4, and Nvidia's GH100 is already close to the maximum die size that existing lithography equipment can produce. It is therefore possible that Nvidia has only managed to squeeze 20,480 CUDA cores into GB100. In this case, it might be reasonable for Nvidia to use two GB100 dies for its next-gen compute GPU.
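Whether the leaked layout describes one die or a whole package changes the total considerably. The Python sketch below assumes, as the speculation above does, that the rumored 8 GPC × 10 TPC layout describes a single GB100 die and that a multi-die package simply multiplies it. `package_cuda_cores` is a hypothetical helper, its default arguments are the leaked and assumed per-die figures, and real multi-die products may disable units or share resources differently.

```python
def package_cuda_cores(
    dies: int,
    gpcs: int = 8,           # rumored GB100 GPCs per die
    tpcs_per_gpc: int = 10,  # rumored GB100 TPCs per GPC
    sms_per_tpc: int = 2,    # assumed unchanged from GH100
    cores_per_sm: int = 128, # assumed unchanged from GH100
) -> int:
    """Total CUDA cores if every die in the package is a full, identical GB100."""
    if dies < 1:
        raise ValueError("a package needs at least one die")
    per_die = gpcs * tpcs_per_gpc * sms_per_tpc * cores_per_sm
    return dies * per_die


if __name__ == "__main__":
    for dies in (1, 2):
        print(f"{dies} die(s): {package_cuda_cores(dies):,} CUDA cores")
```

Under these assumptions, a single die yields 20,480 CUDA cores and a two-die package 40,960; if the leaked counts instead describe the whole package, the single-die figure is the total.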


Assuming that Blackwell GPUs arrive in the late 2024 to early 2025 timeframe, the target specifications of Nvidia's next-generation Blackwell processors were set well over a year ago, and by now the highest-end Blackwell GPUs have probably been taped out, with samples likely being tested in the company's laboratories. As a result, hundreds of people at Nvidia know the specifications of the company's Blackwell GPUs, and some of them could share details with people outside the company.

That said, @kopite7kimi may well have more or less accurate information about Nvidia's next-generation Blackwell GPUs. Yet it is also possible that these details are educated guesses rather than solid information.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


jp7189: It seems upside down for the client-focused GB202 to have more CUDA cores than the datacenter-focused GB100, unless GB202 is monolithic and GB100 is only one die of a multi-die package.

ohio_buckeye: So when does a $2,500 5090 appear? Maybe I sound a bit sarcastic, but given what they charge for the 4090, and this is supposed to be faster, they might…