RTX 4090 Twice as Fast as RTX 3080 in Overwatch 2 Without DLSS 3

But don't expect more frames per dollar

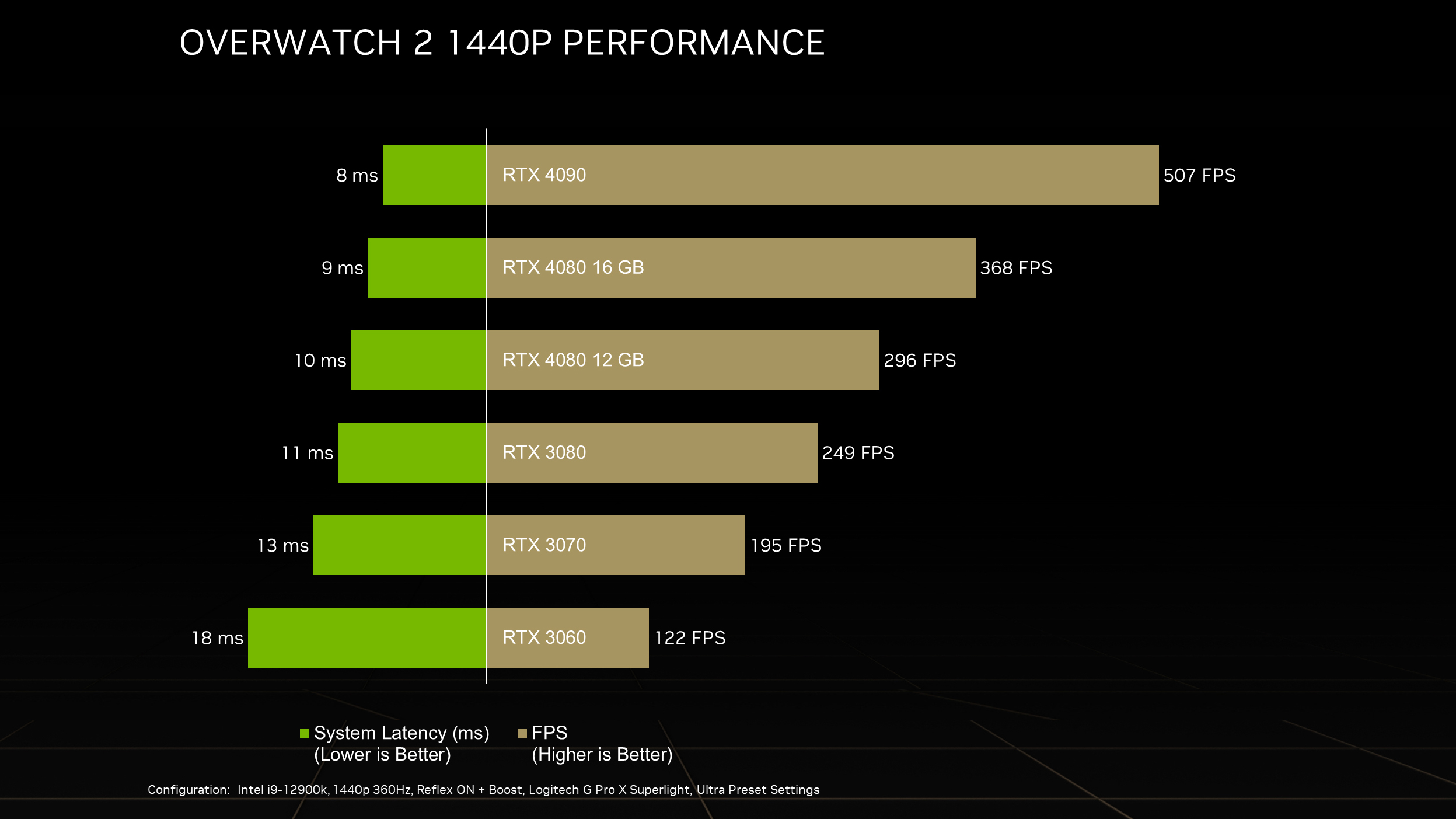

Yesterday, Nvidia finally released official RTX 40 series gaming benchmarks with real frame rates and no artificial frame generation (in the form of DLSS 3) enabled. The results show the RTX 4090 delivering twice the frame rate of Nvidia's previous-generation RTX 3080 in Overwatch 2.

Since officially announcing the RTX 40 series at GTC several weeks ago, Nvidia had not shown any raw benchmark data in frames per second (fps). The benchmarks it showed at the event were limited to relative performance uplifts expressed as percentages.

Unfortunately, the only results Nvidia shared were for Overwatch 2, which launched the very same day. They do, however, show how fast Nvidia's Ada Lovelace GPU architecture can be in pure, raw, unadulterated rasterization performance, with no RTX or DLSS features enabled.

Nvidia benchmarked six GPUs: the RTX 3060, RTX 3070, RTX 3080*, RTX 4080 12GB, RTX 4080 16GB, and RTX 4090. *For some reason, Nvidia didn't specify the memory capacity of the 3080, so it's unclear whether this is the 10GB or 12GB version.

The test system used an Intel Core i9-12900K (Alder Lake) CPU and ran Overwatch 2 at ultra quality settings at a resolution of 1440p.

Starting at the bottom, the RTX 3060 delivered 122 fps; the RTX 3070, 195 fps; the RTX 3080, 249 fps; the RTX 4080 12GB, 296 fps; the RTX 4080 16GB, 368 fps; and finally the RTX 4090, 507 fps.

The RTX 4090 takes the performance crown at 507 fps: it is about 104% faster than Nvidia's previous-generation RTX 3080 and 37.8% faster than the RTX 4080 16GB.


That isn't to say the RTX 4080 16GB is a slow card. It also puts in a good result, with a 48% performance jump over its direct predecessor, the RTX 3080, which represents a healthy generation-on-generation lead.

The RTX 4080 12GB is the least impressive, with a modest 19% performance lead over the RTX 3080, which makes us wonder why Nvidia is calling this GPU a 4080 in the first place. (The 4080 12GB does, however, offer a 52% performance gain over the RTX 3070.)
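Each of these percentages is simply the ratio of two frame rates, minus one. A minimal Python sketch that recomputes them from Nvidia's Overwatch 2 figures (the `FPS` dictionary and the `uplift_pct` helper are our own illustrative names, not anything Nvidia published):

```python
# Overwatch 2 frame rates at 1440p ultra, as reported by Nvidia (fps).
FPS = {
    "RTX 3060": 122,
    "RTX 3070": 195,
    "RTX 3080": 249,
    "RTX 4080 12GB": 296,
    "RTX 4080 16GB": 368,
    "RTX 4090": 507,
}


def uplift_pct(new: str, old: str) -> float:
    """Percentage by which card `new` outperforms card `old` (illustrative helper)."""
    return (FPS[new] / FPS[old] - 1.0) * 100.0


if __name__ == "__main__":
    comparisons = [
        ("RTX 4090", "RTX 3080"),
        ("RTX 4090", "RTX 4080 16GB"),
        ("RTX 4080 16GB", "RTX 3080"),
        ("RTX 4080 12GB", "RTX 3080"),
        ("RTX 4080 12GB", "RTX 3070"),
    ]
    for new, old in comparisons:
        # One decimal place, with an explicit sign.
        print(f"{new} vs {old}: {uplift_pct(new, old):+.1f}%")
```

Printed to one decimal place, the five comparisons come out to +103.6%, +37.8%, +47.8%, +18.9%, and +51.8%.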

It's a shame Nvidia didn't include RTX 3080 Ti, RTX 3090, and RTX 3090 Ti results, but based on the RTX 3080 results, we assume all three of these GPUs sit somewhere around the RTX 4080 12GB's performance bracket.

Performance Per Dollar is Terrible

| Model | Frame Rate (fps) | Price | FPS Per Dollar |
| --- | --- | --- | --- |
| GeForce RTX 4090 | 507 | $1599 | 0.32 |
| GeForce RTX 4080 16GB | 368 | $1199 | 0.31 |
| GeForce RTX 4080 12GB | 296 | $899 | 0.33 |
| MSI Ventus GeForce RTX 3080 12GB | 249 | $769 (as of October 5th) | 0.32 |
| Gigabyte Gaming OC RTX 3070 | 195 | $549 (as of October 5th) | 0.36 |
| MSI Ventus RTX 3060 12GB | 122 | $369 (as of October 5th) | 0.33 |

Unfortunately, Nvidia's impressive rasterization performance doesn't carry over to cost efficiency. Dollar for dollar, the RTX 40 series gets you no additional frames per second.

Almost every RTX 40 series and RTX 30 series GPU in the table lands between 0.31 and 0.33 fps per dollar, with the exception of the RTX 3070, which gets you 0.36 fps per dollar.
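FPS per dollar is just the measured frame rate divided by the card's price. A short Python sketch that recomputes the table's last column and ranks the cards from best to worst value (the `CARDS` list and the `fps_per_dollar` helper are illustrative names of our own):

```python
# Overwatch 2 frame rates (Nvidia, 1440p ultra) and the prices used in the table:
# RTX 40 series at MSRP, RTX 30 series at Newegg prices as of October 5th.
CARDS = [
    ("GeForce RTX 4090", 507, 1599),
    ("GeForce RTX 4080 16GB", 368, 1199),
    ("GeForce RTX 4080 12GB", 296, 899),
    ("MSI Ventus GeForce RTX 3080 12GB", 249, 769),
    ("Gigabyte Gaming OC RTX 3070", 195, 549),
    ("MSI Ventus RTX 3060 12GB", 122, 369),
]


def fps_per_dollar(fps: float, price_usd: float) -> float:
    """Frames per second delivered per US dollar spent."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return fps / price_usd


if __name__ == "__main__":
    # Sort from best to worst value.
    ranked = sorted(CARDS, key=lambda c: fps_per_dollar(c[1], c[2]), reverse=True)
    for name, fps, price in ranked:
        print(f"{name:<34} {fps_per_dollar(fps, price):.2f} fps/$")
```

Rounded to two decimals, the output matches the table, with the RTX 3070 first and the RTX 4080 16GB last.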

Prices for the RTX 40 series GPUs are Nvidia's listed MSRPs, while the RTX 30 series prices are real-world prices from Newegg.com (to show what you can buy today). Nvidia probably didn't use AIB partner cards to test the 30 series GPUs, but AIB partner and Founders Edition cards perform fairly similarly, so this data should be reasonably accurate regardless.

This is not what you want to see from a new generation of GPUs. At these prices, Nvidia's RTX 40 series GPUs look more like higher-tier RTX 30 series GPUs than a generational upgrade.

This also means cards such as the RTX 3080 Ti, RTX 3090, and RTX 3090 Ti will be directly competing against the RTX 4080 12GB (and possibly the RTX 4080 16GB), thanks to the massive price discounts seen recently.

We'll know more details about Ada Lovelace's performance in our full review (coming soon), but it's not looking great based on this new Overwatch 2 benchmark data.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


hotaru251: What's the fastest refresh rate on a monitor?

Anything over that is kind of pointless atm.

TechyInAZ, replying to hotaru251:

Not true. For competitive shooters, higher FPS is better, whether or not it exceeds your refresh rate. In general, the higher your FPS, the lower your input lag will be.

salgado18: Of course it's not cheaper; the real competitor (RDNA3) is still a couple of months away. Until then, they will sell for as high as they can, using the new technologies and "50% more performance" as a marketing push. Once AMD makes its move, prices will slowly adjust to the new reality (slowly, because I think AMD won't push prices lower than necessary either).

sizzling: Anyone else looking at these new cards and feeling there is little point in upgrading with current games? At 1440p 240Hz my 3080 is still doing a cracking job, to the point I doubt I'd notice any benefit from an upgrade. It feels like GPU performance has accelerated faster than game requirements?

lmcnabney, replying to TechyInAZ ("In general, the higher your FPS is, the lower your input lag will be."):

You know input lag is the delay between the input device and the pixels on the screen, right? It is going to be the same if you are playing an old game at 240fps or a brand new one with everything turned up to the max at 40.

salgado18, replying to sizzling:

Upgrade when you need to, not when they tell you to. If your card is doing what you need it to do, why change it? Until heavier games come along and it can't perform as you expect, don't give in to the buyer's impulse.

I say "perform as you need" because you mention 240Hz, but if you are happy with newer games running at 120 fps and your card does it, it still works, right?

My idea is: I'll get a 1440p card to play 1080p content for at least 4-5 years, if possible longer. If my current R9 280 is running games at 1080p medium/high today, the next card can last as long, right?

TechyInAZ, replying to lmcnabney:

False, input lag takes CPU and GPU rendering time into account as well. The faster your CPU and GPU are spitting out frames, the lower your input delay will be.

lmcnabney, replying to TechyInAZ:

The CPU/GPU are a factor only if scaling to a non-native resolution on the monitor. That's why the same input lag is detectable when typing in Word, pointing and clicking on the desktop, playing Half-Life 2 at 360fps, or playing Cyberpunk with everything turned on. Using a wired mouse and upgrading to low-latency displays is how you combat it. Yeah, the MB/CPU/GPU can cause problems, but modern gear is just not variable. They all are about as good as they can get.

TechyInAZ, replying to lmcnabney:

I'm not sure where you're getting your information from, but that's all false. Any 3D game that needs rendering time to output a frame will have varying levels of input lag, depending on how fast the CPU and GPU can render frames.

Frames per second is just frame time expressed as a rate. The lower your FPS, the more lag you will have, because each image takes longer to be displayed on screen. Frame limiters can combat this somewhat, but again, in general, higher FPS yields lower input lag due to faster frame rendering.
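The frame-time arithmetic behind this exchange is simple: at a steady frame rate, each frame takes 1000 / fps milliseconds to render. A minimal Python sketch, assuming a perfectly steady frame rate; it covers only the rendering interval and ignores the rest of the end-to-end latency chain (input polling, game simulation, render queueing, display scanout, and pixel response), so it illustrates the trend rather than measuring input lag. The `frame_time_ms` name is our own:

```python
def frame_time_ms(fps: float) -> float:
    """Time to render one frame, in milliseconds, assuming a steady frame rate.

    This is only the rendering interval, not total input lag.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Frame rates mentioned on this page: 40 fps (from the thread), 122 fps
    # (RTX 3060), 240 fps (a 240Hz monitor), and 507 fps (RTX 4090).
    for fps in (40, 122, 240, 507):
        print(f"{fps:>4} fps -> {frame_time_ms(fps):5.2f} ms per frame")
```

At 40 fps each frame takes 25 ms, versus under 2 ms at the RTX 4090's 507 fps.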