What Is HDR, And What Does It Mean For Your Monitor?

There's a new technology buzzword--well, new in its meaning, anyway: High Dynamic Range, or HDR. That’s a display technology that necessitates a new type of display, for starters, as well as new HDR-specific content. But what, really, is it?

Let’s Talk About Color

It’s important to first understand how humans perceive color, and how HDR color differs from the Standard Dynamic Range (SDR) commonly used today. In physics, color is derived from the wavelength of light, with red as the longest wavelength of light we can see, down to violet (purple) as the shortest. Wavelength and frequency describe the same property from opposite directions (the shorter the wavelength, the higher the frequency), and the frequency of light is loosely analogous to the frequency of different sounds.
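The inverse relationship between wavelength and frequency can be checked with a quick calculation. The sketch below uses f = c / λ; the 700 nm and 400 nm figures are approximate, illustrative endpoints for red and violet and are assumptions, not values taken from this article.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def frequency_thz(wavelength_nm: float) -> float:
    """Convert a wavelength in nanometers to a frequency in terahertz."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


# Illustrative (assumed) wavelengths near the two ends of the visible spectrum.
red_thz = frequency_thz(700)     # long wavelength, low frequency
violet_thz = frequency_thz(400)  # short wavelength, high frequency

print(f"red    ~{red_thz:.0f} THz")
print(f"violet ~{violet_thz:.0f} THz")
```

Red ends up with the larger wavelength and the smaller frequency, and violet the reverse.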

But although our ears can differentiate between frequencies of sound, our eyes, or the receptors in them, cannot differentiate between frequencies of light--at least, not without a trick. Each receptor in your eye is roughly equivalent to a pixel on the "screen" of what you can see, and each one carries a different response to certain wavelengths (colors) of light.

Specifically, your receptors are divided up and can (primarily) see one of three different colors: red, green, or blue. In order to see the full range of colors, then, your eyes infer information. If a green receptor receives a weak signal that's right next to a red receptor picking up a strong signal, then your eyes infer there must be something dark orange around the location in between these two receptors.

This in turn provides a nice, cheap way to engineer color into a screen. Instead of having to produce each actual wavelength of color, screens today use a combination of red, green, and blue subpixels. Displays can simply vary the amount of light, and thus signal, from each of these subpixel colors to produce what appears to be the real color, at least so far as your eyes are concerned.

How Color Relates To HDR

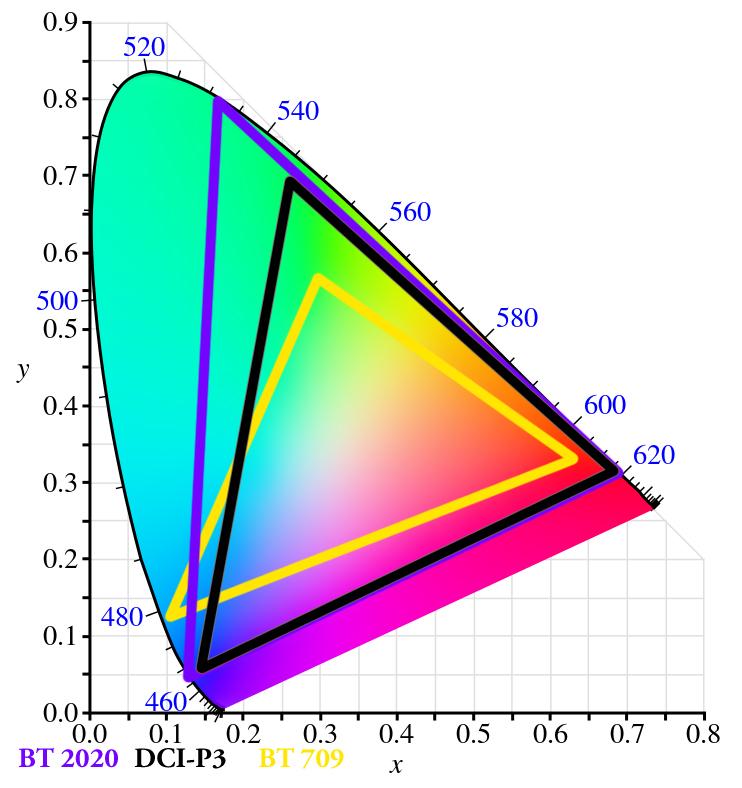

So what does this have to do with SDR versus HDR? This is where color gamuts come in. A color gamut is the range of colors you can produce with the red, green, and blue subpixel colors you have available. Plotted on a chart of visible colors, those three primaries form the corners of a triangle. Any color in between these three primary colors can be produced, but you can't reproduce a color that is not inside this triangle.
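The "inside the triangle" idea can be sketched as a simple point-in-triangle test on CIE 1931 xy chromaticity coordinates. The BT.709 primaries below are the published values; the function names and the two test points (the D65 white point and the DCI-P3 green primary) are choices made for this illustration only.

```python
from typing import Tuple

Point = Tuple[float, float]

# BT.709 primaries as CIE 1931 xy chromaticity coordinates.
BT709_RED: Point = (0.640, 0.330)
BT709_GREEN: Point = (0.300, 0.600)
BT709_BLUE: Point = (0.150, 0.060)


def _cross(origin: Point, a: Point, b: Point) -> float:
    """2D cross product of the vectors origin->a and origin->b."""
    return (a[0] - origin[0]) * (b[1] - origin[1]) - (a[1] - origin[1]) * (b[0] - origin[0])


def in_gamut(color: Point, red: Point, green: Point, blue: Point) -> bool:
    """Return True if `color` lies inside (or on the edge of) the primaries' triangle."""
    signs = (
        _cross(red, green, color),
        _cross(green, blue, color),
        _cross(blue, red, color),
    )
    has_negative = any(s < 0 for s in signs)
    has_positive = any(s > 0 for s in signs)
    # The point is inside when it sits on the same side of all three edges.
    return not (has_negative and has_positive)


d65_white: Point = (0.3127, 0.3290)  # standard white point
p3_green: Point = (0.265, 0.690)     # DCI-P3 green primary

print(in_gamut(d65_white, BT709_RED, BT709_GREEN, BT709_BLUE))
print(in_gamut(p3_green, BT709_RED, BT709_GREEN, BT709_BLUE))
```

The white point falls inside the BT.709 triangle, while the more saturated P3 green falls outside it, so a BT.709 display cannot show that green.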

One of the two concepts behind HDR is to expand that triangle of red, green, and blue from the current small one dubbed "BT.709" to something closer to filling the entire range of colors that humans can see (see the chart above). The ultimate goal here is the triangle dubbed “BT.2020.” The problem is that currently, the only way to produce the red, green, and blue colors required for this gamut is to use lasers (which is cool, we know, but impractical). That means expensive laser projectors are the only display devices currently capable of showing all the colors involved--and only certain laser projectors with lasers at the right wavelengths at that.


Today's HDR-equipped displays instead settle for the smaller DCI-P3 gamut, which can be reproduced by OLEDs and LCDs as well as projectors. Although quite a bit smaller than the full BT.2020 gamut, it still contains significantly more colors than the SDR gamut. As a bonus, it’s also the same color gamut that has been used by movies for quite some time, meaning there's already equipment and content out there that supports and uses it.
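For a rough sense of how the three gamuts compare in size, the sketch below computes the area of each triangle in CIE 1931 xy space from the published primaries. Treat it as a ballpark only: xy area is not perceptually uniform, so the ratios are an assumption-laden shorthand rather than a measure of how many more colors you actually see.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]

# Red, green, blue primaries as CIE 1931 xy coordinates.
PRIMARIES: Dict[str, Tuple[Point, Point, Point]] = {
    "BT.709": ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "BT.2020": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def triangle_area(r: Point, g: Point, b: Point) -> float:
    """Area of a triangle from its three corners (shoelace formula)."""
    return 0.5 * abs(
        r[0] * (g[1] - b[1]) + g[0] * (b[1] - r[1]) + b[0] * (r[1] - g[1])
    )


areas = {name: triangle_area(*corners) for name, corners in PRIMARIES.items()}

for name, area in areas.items():
    ratio = area / areas["BT.709"]
    print(f"{name:8s} area={area:.4f}  ({ratio:.2f}x BT.709)")
```

By this measure DCI-P3 is roughly a third larger than BT.709, and BT.2020 is a little under twice as large.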

At some point in the future, though, the plan is that BT.2020 should be adopted by everyone, but that will have to wait until common display technologies can actually support it. And this is only half of HDR; the other half involves bright highlights and dark... well, darkness.

Brightness And Perception

The next thing to understand is how humans perceive brightness, which is roughly on a log2 scale. This means that every time brightness (or the number of photons hitting your eye) doubles, you perceive it as going up only one point on a linear scale (or going up one “stop”). For example, when looking at a scene with a light and a piece of paper, the light could be shooting 32 times more photons into your eye than the paper under it, but to you the light would appear only five stops brighter than the paper.

You can describe the brightness of this theoretical light and paper using a standardized measurement: nits. One nit is equal to one candela per square meter. A candela is roughly the luminous intensity of a single lit wax candle, so one nit is about one candle's worth of light per square meter of surface. Meanwhile, a piece of white paper under a bright sun can be around 40,000 nits, or 40,000 times brighter than that.

Although the paper under a bright sun hits 40K nits, an office interior right next to the paper could be a mere 500 nits. Humans can perceive all of this at once; in fact, humans can "perceive" up to twenty stops of brightness in a single scene. A "stop", remember, is simply a point on that log2 scale, which is to say humans can simultaneously perceive in a scene something a million times brighter than the darkest part of that scene.
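The stop arithmetic in this section is just a base-2 logarithm. The helper names below are made up for this sketch; the inputs are the figures quoted in the text (a 32x brightness ratio, a 40,000-nit sheet of paper next to a 500-nit office, and twenty stops of range).

```python
import math


def stops_between(brighter: float, darker: float) -> float:
    """Number of stops (doublings) separating two brightness levels."""
    return math.log2(brighter / darker)


def ratio_for_stops(stops: float) -> float:
    """Brightness ratio covered by a given number of stops."""
    return 2.0 ** stops


light_vs_paper = stops_between(32, 1)          # the 32x example
paper_vs_office = stops_between(40_000, 500)   # sunlit paper vs. office interior
twenty_stop_ratio = ratio_for_stops(20)        # range humans can take in at once

print(f"32x brighter       = {light_vs_paper:.1f} stops")
print(f"40,000 vs 500 nits = {paper_vs_office:.2f} stops")
print(f"20 stops           = {twenty_stop_ratio:,.0f}x")
```

Twenty stops works out to a ratio of just over one million to one, which is where the "a million times brighter" figure comes from.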

Brightness And HDR

So how does all this relate to HDR? First, the goal for HDR was simple: to produce on a display an approximation of an extremely bright scene. Dolby, the first company to start testing for a standard for HDR, found 10,000 nits to be the sweet spot for its test setup. This is why the common Electro-Optical Transfer Function, or EOTF, that’s used by most HDR standards caps out at 10K nits of brightness. The EOTF is the mathematical function for converting an electronic signal into the desired optical signal; in other words, it is a digital-to-light mapping.
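To make the idea of an EOTF concrete, here is a minimal sketch that assumes the curve in question is the Perceptual Quantizer (PQ) standardized as SMPTE ST 2084; the article does not name it, so that identification is an assumption. The constants are the ones published in that standard, and the function name is made up for this example.

```python
# PQ constants as published in SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10_000.0


def pq_eotf(signal: float) -> float:
    """Map a normalized signal value in [0, 1] to luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be within [0, 1]")
    p = signal ** (1.0 / M2)
    numerator = max(p - C1, 0.0)
    denominator = C2 - C3 * p
    return PEAK_NITS * (numerator / denominator) ** (1.0 / M1)


for value in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"signal {value:.2f} -> {pq_eotf(value):10.3f} nits")
```

A full-scale signal of 1.0 maps to the 10,000-nit cap, and the curve is strongly non-linear: a signal of about 0.5 lands at only around 100 nits, which leaves the upper half of the signal range for highlights.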

For comparison, the reference for BT.709 caps out at 100 nits. SDR covers 1-100 nits, or around six-and-a-half stops of brightness. HDR, ideally, covers roughly 13 stops (more on that later), which gives it about six-and-a-half more stops of range than SDR. Therefore, the range of brightness representable by HDR is double that of SDR, so far as our perception of it is concerned.
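The SDR and HDR stop counts can be reproduced with the same log2 arithmetic. This sketch assumes a 1-nit floor for both ranges; the article states that floor only for SDR, so applying it to HDR is an assumption made here for a like-for-like comparison.

```python
import math


def stops_of_range(max_nits: float, min_nits: float = 1.0) -> float:
    """Stops of brightness between a floor and a peak, both in nits."""
    return math.log2(max_nits / min_nits)


sdr_stops = stops_of_range(100.0)      # BT.709 reference peak
hdr_stops = stops_of_range(10_000.0)   # cap of the HDR transfer function

print(f"SDR: {sdr_stops:.2f} stops")
print(f"HDR: {hdr_stops:.2f} stops")
print(f"HDR / SDR: {hdr_stops / sdr_stops:.2f}x")
```

Because 10,000 is 100 squared, the HDR range comes out at exactly twice the SDR range in stops under this assumption.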

Putting It To Use

All of this extra information in brightness and color needs to be stored for output to your display. The information about color and brightness is, in digital terms, stored in binary and output per color. Therefore, X number of bits per color gives you Y amount of information (a “bit” being a digital 1 or 0).

The number of bits needed is a trade-off. The more bits you have, the less banding you perceive. Banding is a "jump" in color or brightness that occurs because not enough information is present about the intervening colors/brightness to present a smooth transition. This was most often seen in the (now mostly unused) original GIF format, which could store only 8 bits per pixel for the entire image, thus resulting in highly visible banding (only 256 colors per frame!). The downside of using more bits per pixel is that the image takes up more space and thus requires more time to transmit and load onto any device.

The goal, then, is to represent the desired color and brightness with as few bits as possible while also avoiding banding. For SDR, this was 8 bits per color, for a total of 24 bits per pixel of any resulting image. Fortunately for us, binary is also log2, so each bit you add doubles the number of values you can represent.
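The bit-depth arithmetic can be sketched in a few lines. The function names are made up for this example, and the 1920-pixel gradient is an assumed Full HD screen width used only to illustrate how wide each band of a single-channel ramp would be.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take."""
    return 2 ** bits


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors across all channels combined."""
    return 2 ** (bits_per_channel * channels)


def band_width_px(gradient_px: int, bits: int) -> float:
    """Average width of each band when one channel ramps across the gradient."""
    return gradient_px / levels_per_channel(bits)


for bits in (8, 10, 12):
    print(
        f"{bits:2d} bits: {levels_per_channel(bits):5d} levels/channel, "
        f"{total_colors(bits):,} colors, "
        f"{band_width_px(1920, bits):.2f} px per band"
    )
```

At 8 bits, a full-width gradient repeats each value for several pixels in a row, while at 12 bits there are more levels than pixels to show them on.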

For HDR, what matters more is the actual transmitted level of brightness (in other words, the nits). Ten bits just doesn’t quite provide fine enough steps to stay under the so-called “Barten threshold” (which is the measured threshold where banding can become visible). On the other hand, 12 bits can cover more than enough to display HDR signals without banding and should be able to cover the BT.2020 gamut with ease, as well. That gets into another topic entirely: competing HDR standards for hardware, for software, and how they’re actually implemented in the real world. But that’s a topic for another time.


JonDol I'm wondering if Thomas Soderstrom has drunk too much and wrongly written his name ? If not then welcome on THW :-)

InvalidError "The goal, then, is to represent the desired color and brightness with as few bits as possible while also avoiding banding. For SDR. this was 8 bits per color"

I disagree: banding is already quite visible at 8bits in the SDR spectrum when you have monochromatic color scales or any other sort of steady gradient across a screen large enough to make the repeated pixel colors readily noticeable. It may have been deemed good enough at the time the standard was defined back in the days of CRT SD TVs where nearby pixels blended into each other to blur boundaries and resolution wasn't high enough to clearly illustrate banding. It isn't on today's FHD LCDs where pixel boundaries are precisely defined and an 8bits gradient across 1920 pixels produces clearly distinguishable bands roughly eight pixels wide.

TJ Hooker "with the color red as the lowest wavelength of light we can see, up to purple as the highest."

Nitpicking, but red is the highest wavelength (lowest frequency) and purple is the lowest wavelength (highest frequency).

kenzen22b In your monitor reviews that support HDR, are you going to be showing:

1. Color charts for results you are able to achieve during calibration.

2. Support for automated calibrations using the CalMAN program (running an automated calibration that stores the results in the monitor)

Bill Milford This article is not about just HDR (High Dynamic Range), but it is about two technologies, HDR and Deep Color (Extended Gamut i.e. the bigger triangle) and their interaction. The article starts off talking about Deep Color and I was wondering why the article's title wasn't about Deep Color.

plateLunch @TJ Hooker

No, the author has it right. Though should be talking about the "longest" wavelength of light, not the lowest.

Light frequency scales typically get drawn as a bar graph with longer wavelengths (lower frequencies) on the left and shorter wavelengths (higher frequencies) on the right. Depending on how the scale is annotated, I can see how one might end up saying "lowest wavelength". But the idea is correct.

TJ Hooker

Still a pretty poor way to phrase it, given that the meanings of "longest" and "lowest" could almost be considered opposite. To me saying red has the "lowest" wavelength would imply that red's wavelength would be a lower number, which is obviously wrong. I don't think it makes any sense to refer to it as "lowest" just because it may appear on the left side of a graph.