Intel's NPU Acceleration Library goes open source — Meteor Lake CPUs can now run TinyLlama and other lightweight LLMs

LLMs on the go.

Intel has released its open-source NPU Acceleration Library, enabling Meteor Lake AI PCs to run lightweight LLMs like TinyLlama. It's primarily intended for developers, but ordinary users with some coding experience could use it to get an AI chatbot running on a Meteor Lake machine.

The library is out now on GitHub. Intel was supposed to publish a blog post about the NPU Acceleration Library, but Intel Software Architect Tony Mongkolsmai shared it early on X. He showed a demo of the software running TinyLlama 1.1B Chat on an MSI Prestige 16 AI Evo laptop equipped with a Meteor Lake CPU, asking the chatbot about the pros and cons of smartphones versus flip phones. The library works on both Windows and Linux.

> For devs that have been asking, check out the newly open sourced Intel NPU Acceleration library. I just tried it out on my MSI Prestige 16 AI Evo machine (windows this time, but the library supports Linux as well) and following the GitHub documentation was able to run TinyLlama… pic.twitter.com/UPMujuKGGT (March 1, 2024)

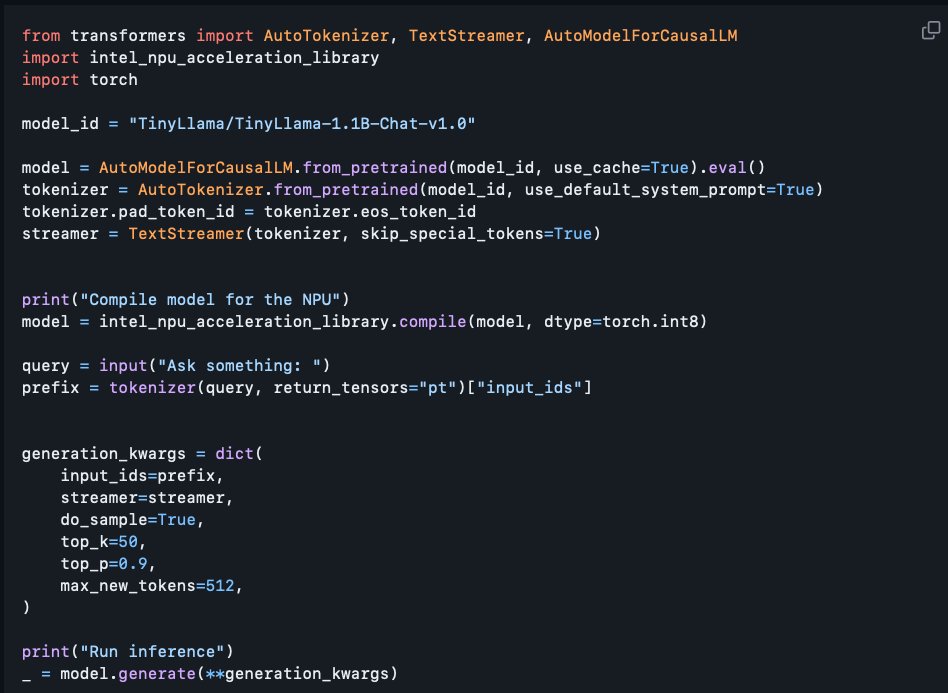

Of course, since the NPU Acceleration Library is made for developers and not ordinary users, it's not a simple task to use it for your purposes. Mongkolsmai shared the code he wrote to get his chatbot running, and it's safe to say that if you want the same thing on your PC, you'll need either a decent understanding of Python or the patience to retype every line shown in the image above and hope it works on your machine.

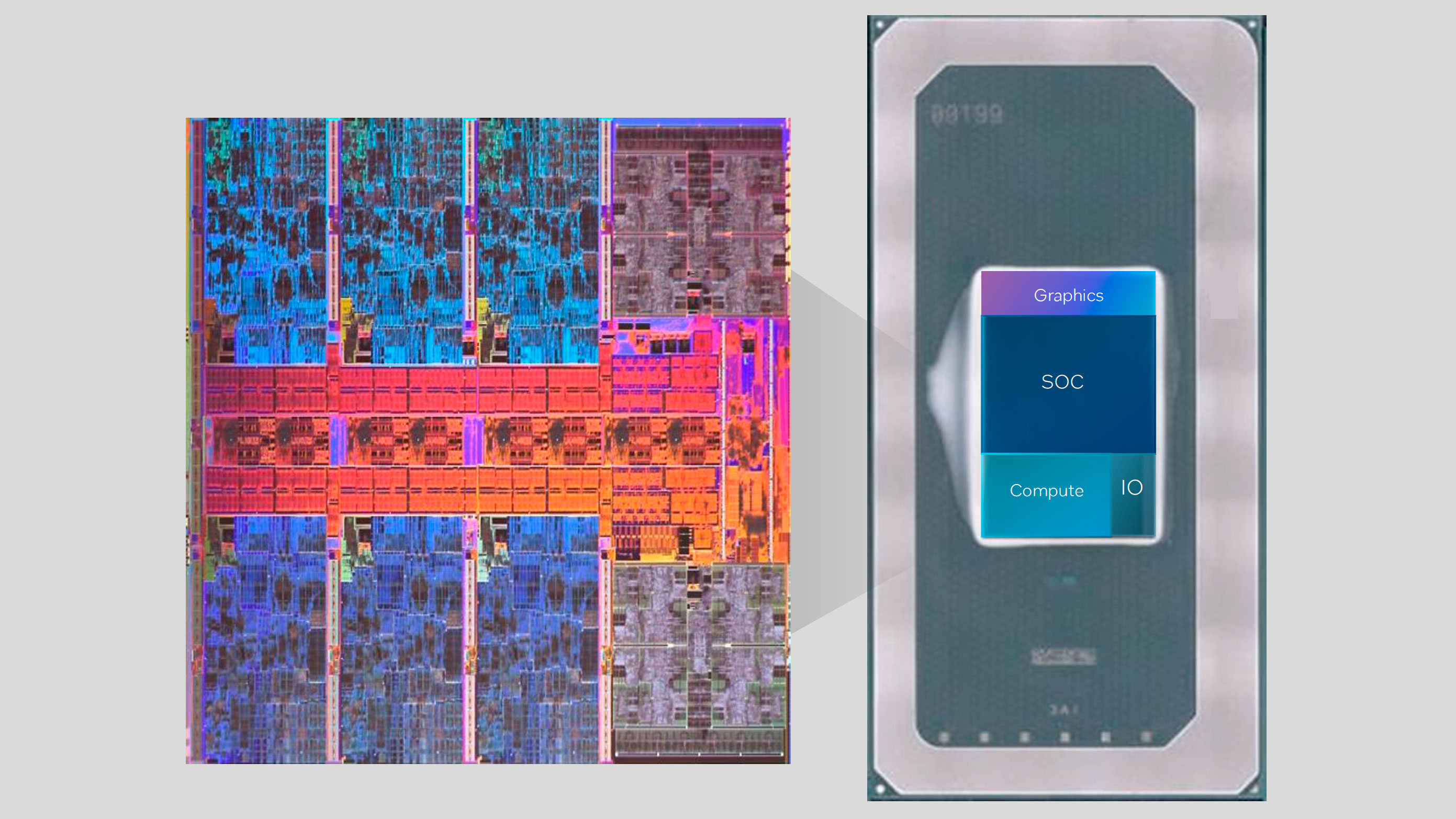

Because the NPU Acceleration Library is built specifically for Intel's NPUs, only Meteor Lake CPUs can run it at the moment. Arrow Lake and Lunar Lake CPUs, due later this year, should widen the field of compatible chips. Those upcoming CPUs are expected to deliver three times the AI performance of Meteor Lake, likely allowing even larger LLMs to run on laptop and desktop silicon.

The library isn't feature-complete yet; it shipped with just under half of its planned features. Most notably, it's missing mixed-precision inference running on the NPU itself, support for BFloat16 (a popular data format for AI workloads), and NPU-GPU heterogeneous compute, which would presumably let both processors work on the same AI tasks. The NPU Acceleration Library is brand-new, so it's unclear how much impact it will have, but hopefully it will lead to new software for AI PCs.
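For readers unfamiliar with BFloat16, the Python sketch below (standard library only, and not part of Intel's library) shows what the format is: the upper 16 bits of an IEEE 754 float32, keeping float32's sign bit and 8-bit exponent but only 7 of its 23 mantissa bits. The helper names are hypothetical, and the round-to-nearest-even conversion is the common convention, assumed here rather than taken from anything the NPU library specifies.

```python
import math
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern nearest to x (round to nearest, ties to even).

    Assumes x fits in float32's range; struct.pack raises OverflowError otherwise.
    """
    # Reinterpret x as its IEEE 754 single-precision (float32) bit pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if math.isnan(x):
        # Force a quiet NaN; a NaN with only low payload bits would otherwise become infinity.
        return (bits >> 16) | 0x0040
    # bfloat16 is the upper half of a float32: 1 sign bit, 8 exponent bits,
    # 7 mantissa bits. Round away the 16 discarded low bits, ties to even.
    lsb = (bits >> 16) & 1
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def bfloat16_bits_to_float(b: int) -> float:
    """Widen a bfloat16 pattern back to a float; exact, since it just appends zero bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, 3.14159265, 1e38):
        b = float32_to_bfloat16_bits(value)
        print(f"{value!r:>12} -> 0x{b:04X} -> {bfloat16_bits_to_float(b)!r}")
    # float16 has only 5 exponent bits (max 65504), so 1e38 does not fit at all.
    try:
        struct.pack("<e", 1e38)
    except OverflowError as err:
        print("float16:", err)
```

Running it, pi comes back as 3.140625, so only two or three significant decimal digits survive. However, 1e38, far beyond float16's 65504 limit, remains representable. That trade of precision for float32-sized range is a large part of why BFloat16 is popular for AI workloads.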


Matthew Connatser is a freelance writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.

-

bit_user This seems to be just the wrappers needed to use the NPU from PyTorch. While that's great, the project doesn't contain the parts that really interest me, such as the code for the "SHAVE" DSP cores.

Source: https://www.tomshardware.com/news/intel-details-core-ultra-meteor-lake-architecture-launches-december-14

jlake3

> Of course, since the NPU Acceleration Library is made for developers and not ordinary users, it's not a simple task to use it for your purposes.

...so is there no standardized, OS-level framework for all of this new "AI PC"/"AI Laptop" marketing hype to actually tie into? No way for the hardware to tell the OS "Hey, I'm an NPU and here's the data types, standards, and the version levels of those I support"?

I remember hearing that XDNA suffered from a lack of compatible software, the article about the Snapdragon X Elite laptop from the other day mentioned some special setup and requiring the use of the Qualcomm AI stack, and it seems like Intel NPUs as well are something where an average user will need the developer to build support into the program for them.

I'm admittedly not on the generative AI hype train, but there are some uses where I (or people I help with PC things) could be interested in some AI-assisted photo-retouching or webcam background removal that runs on a special accelerator for better performance at lower power (if it's not adding too much to the cost of the PC), and it seems that if I buy something marked for "AI" and touting how many TFLOPS its NPU can do... there's no guarantee things will actually be able to utilize it now or continue to support it in the future.

bit_user

> jlake3 said: ...so is there no standardized, OS-level framework for all of this new "AI PC"/"AI Laptop" marketing hype to actually tie into? No way for the hardware to tell the OS "Hey, I'm an NPU and here's the data types, standards, and the version levels of those I support"?

I'm not familiar with it, but I guess DirectML might be that, for doing inference on Windows. This looks like a good place to start:

https://learn.microsoft.com/en-us/windows/ai/

> jlake3 said: it seems that if I buy something marked for "AI" and touting how many TFLOPS its NPU can do... there's no guarantee things will actually be able to utilize it now or continue to support it in the future.

Check the hardware requirements of the software you want to be AI-accelerated.

IMO, it's a lot like the situation with GPUs, where you need to check not only the HW requirements but also benchmarks to know how well an app actually runs on a given hardware spec. You cannot simply assume that TOPS translate directly into AI performance, any more than you could assume that a graphics card's TFLOPS and GB/s predict game performance. Yes, there's a correlation, but also quite a lot of variation.
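The point that TOPS don't translate directly into AI performance can be made concrete with a roofline-style estimate. The Python sketch below is a simplified back-of-envelope model, not a benchmark. The three accelerators are hypothetical, with made-up TOPS and bandwidth figures rather than specs for any real NPU. The model also assumes that generating one token at a time reads every weight once and performs about two operations (a multiply and an add) per weight. Under those assumptions, memory bandwidth rather than peak TOPS sets the speed.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    peak_tops: float      # peak throughput, in trillions of operations per second
    bandwidth_gbs: float  # memory bandwidth, in GB/s


def attainable_tops(acc: Accelerator, ops_per_byte: float) -> float:
    """Roofline model: throughput is capped by peak compute or by how fast memory feeds data."""
    memory_bound_tops = acc.bandwidth_gbs * 1e9 * ops_per_byte / 1e12
    return min(acc.peak_tops, memory_bound_tops)


def decode_tokens_per_second(acc: Accelerator, params: float, bytes_per_param: float) -> float:
    """Rough upper bound on tokens/s when generating one token at a time (batch size 1).

    Assumes every weight is read once per token with ~2 ops per weight;
    ignores the KV cache, activations, and software overhead.
    """
    ops_per_token = 2 * params
    bytes_per_token = params * bytes_per_param
    intensity = ops_per_token / bytes_per_token  # ops per byte of memory traffic
    return attainable_tops(acc, intensity) * 1e12 / ops_per_token


if __name__ == "__main__":
    # Hypothetical parts: B has 3x A's TOPS, C has 2x A's bandwidth.
    chips = [
        Accelerator("A", peak_tops=10, bandwidth_gbs=100),
        Accelerator("B", peak_tops=30, bandwidth_gbs=100),
        Accelerator("C", peak_tops=10, bandwidth_gbs=200),
    ]
    for chip in chips:
        # A 1.1B-parameter model (TinyLlama's size), assuming 1-byte (int8) weights.
        tps = decode_tokens_per_second(chip, params=1.1e9, bytes_per_param=1)
        print(f"{chip.name}: {chip.peak_tops} TOPS, {chip.bandwidth_gbs} GB/s -> ~{tps:.0f} tokens/s")
```

With these placeholder numbers, A and B both come out at about 91 tokens/s despite B's 3x TOPS, while C, with twice the bandwidth, reaches about 182. Workloads with much higher arithmetic intensity, such as processing a long prompt or batched inference, can become compute-bound instead, which is one reason benchmarks still matter.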

CmdrShepard The situation isn't much different from the days of the first 3D accelerator cards.

CSMajor

> jlake3 said: ...so is there no standardized, OS-level framework for all of this new "AI PC"/"AI Laptop" marketing hype to actually tie into? No way for the hardware to tell the OS "Hey, I'm an NPU and here's the data types, standards, and the version levels of those I support"?

Of course, first you develop and test your model on the NPU, then you deploy it via something like DirectML. To do that, you could use something like OpenVINO or DirectML, both of which are standardized.