Mysterious AMD Ryzen AI MAX+ Pro 395 Strix Halo APU emerges on Geekbench — processor expected to officially debut at CES 2025

Chip expected to feature up to 16 Zen 5 cores and a Radeon 8060S iGPU with 40 Compute Units.

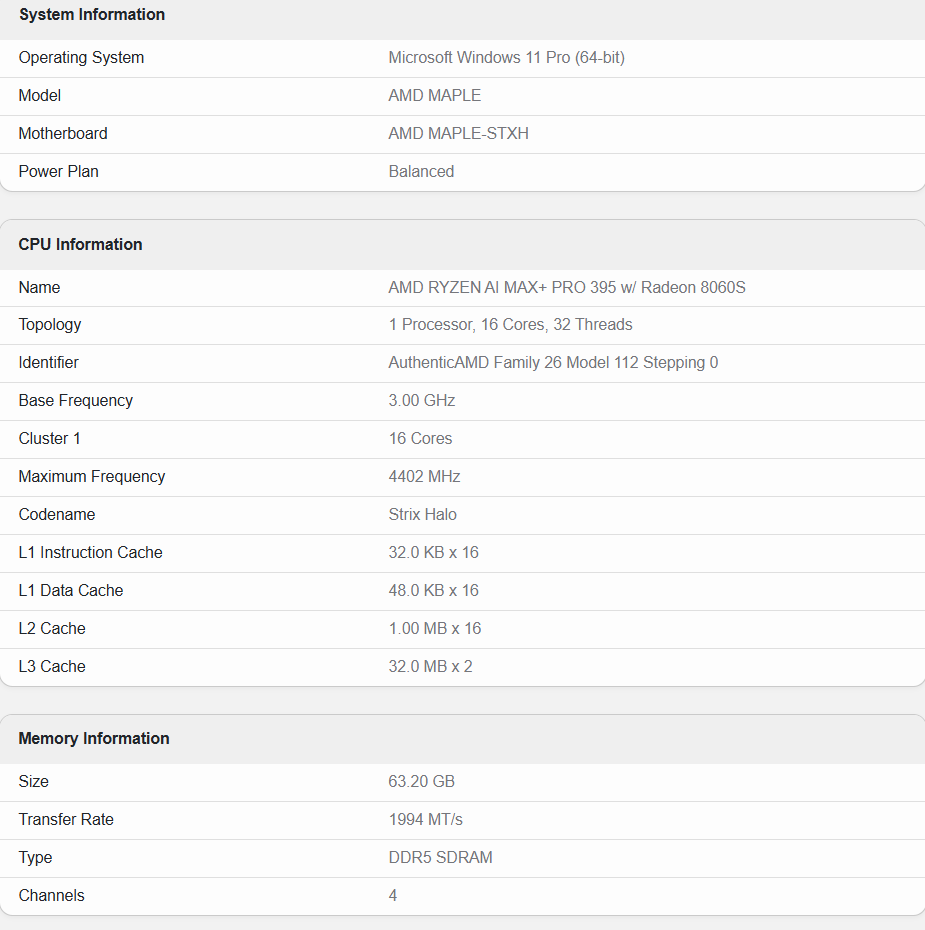

With less than a month to go until CES 2025, leaked benchmarks of AMD's flagship Strix Halo APUs have started to surface. The Ryzen AI MAX+ Pro 395 (now that's a mouthful) has emerged on Geekbench packing 16 CPU cores, 64GB of RAM, and a Radeon 8060S iGPU (integrated GPU) rumored to carry a massive 40 CUs (Compute Units). The score looks low for now and should improve with further optimizations, but the real juicy details lie in the specifications.

For the uninitiated, Strix Halo, or the Ryzen AI MAX 300 series, is said to be AMD's top-of-the-line APU offering for workstations and laptops next year. The test bench uses the "AMD MAPLE-STXH" reference board, designed for the FP11 socket. The Ryzen AI MAX+ Pro 395, which we'll call the Ryzen AI 395 for simplicity's sake, is paired with 64GB of RAM here, though shipping manifests point to configurations with as much as 128GB. Performance isn't anything to write home about at this stage: the Vulkan score is roughly on par with an RTX 2060, which can be put down to early silicon.

In line with previous rumors, the Ryzen AI 395 features 16 Zen 5 cores and 32 threads. Its suspected dual-CCD design gives it 64MB of L3 cache (32MB per CCD) alongside 16MB of L2 cache (1MB per core). Geekbench lists a maximum clock speed of 4.4 GHz, which will likely improve with time.
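For readers who want to check the arithmetic, here is a rough Python sketch of how the totals fall out of the rumored layout. It assumes the 16 cores are split evenly across two eight-core CCDs (the leak only implies a dual-CCD design), and `CpuConfig` is a hypothetical helper, not anything from AMD or Geekbench.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuConfig:
    """Hypothetical helper that tallies core, thread, and cache totals."""
    ccds: int
    cores_per_ccd: int
    threads_per_core: int = 2   # SMT: two hardware threads per Zen 5 core
    l2_mb_per_core: int = 1     # each Zen 5 core has 1MB of private L2
    l3_mb_per_ccd: int = 32     # L3 is shared by the cores within one CCD

    @property
    def cores(self) -> int:
        return self.ccds * self.cores_per_ccd

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def l2_mb(self) -> int:
        return self.cores * self.l2_mb_per_core

    @property
    def l3_mb(self) -> int:
        return self.ccds * self.l3_mb_per_ccd


# Assumed layout for the Ryzen AI 395: two CCDs with eight cores each.
ryzen_ai_395 = CpuConfig(ccds=2, cores_per_ccd=8)
print(ryzen_ai_395.cores, ryzen_ai_395.threads, ryzen_ai_395.l2_mb, ryzen_ai_395.l3_mb)
# prints: 16 32 16 64
```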

Strix Halo's graphics will fall under AMD's Radeon 8000S umbrella of iGPUs, with the RDNA 3.5-based Radeon 8060S and Radeon 8050S. Architecturally, the iGPU likely resides in the large SoC die alongside upwards of 32MB of MALL (Infinity Cache) to avoid memory bottlenecks. Geekbench doesn't explicitly list the CU count, but previous leaks point to a 40-CU configuration for all Radeon 8060S models, 25% more than the 32 CUs of the desktop Radeon RX 7600.
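The 25% figure is a simple ratio of CU counts. The sketch below works it out and, assuming RDNA 3.5 keeps RDNA 3's 64 stream processors per CU, converts both parts to shader counts. The 40-CU value is the rumored figure, not something Geekbench reports.

```python
# Assumption: RDNA 3.5 keeps RDNA 3's 64 stream processors per compute unit.
STREAM_PROCESSORS_PER_CU = 64

RADEON_8060S_CUS = 40  # rumored from earlier leaks; Geekbench does not list it
RX_7600_CUS = 32       # desktop Radeon RX 7600


def cu_uplift(new_cus: int, baseline_cus: int) -> float:
    """Fractional increase in compute units over a baseline GPU."""
    return new_cus / baseline_cus - 1


uplift = cu_uplift(RADEON_8060S_CUS, RX_7600_CUS)
sp_8060s = RADEON_8060S_CUS * STREAM_PROCESSORS_PER_CU  # 2560
sp_7600 = RX_7600_CUS * STREAM_PROCESSORS_PER_CU        # 2048

print(f"{uplift:.0%} more CUs: {sp_8060s} vs. {sp_7600} stream processors")
# prints: 25% more CUs: 2560 vs. 2048 stream processors
```

CU count is only a rough proxy: clocks, power limits, and especially memory bandwidth will decide how close the iGPU actually gets to a discrete card.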

Strix Halo is designed to compete against Apple's M-series silicon and Nvidia's dedicated GPUs in the laptop segment. It may not rival the RTX 4090 laptop GPU, but it could give mid-range offerings like the RTX 4070 laptop GPU a run for their money.

Pricing is a valid concern, since Strix Point (the Ryzen AI 300 family) has limited availability and costs a pretty penny if you want the best. Nonetheless, we expect AMD to unveil its Strix Halo lineup of APUs at CES 2025, in addition to Krackan Point and the Radeon RX 8000 series.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


Marlin1975

Seems the GPU would be severely held back by the shared memory bus. Unless there is some onboard memory, like on the old AMD boards, this will show good specs but come up short, at least for gaming.

abufrejoval

Somehow I think we're still missing a crucial thing or two about Strix Halo...

So far all we hear is essentially a Dragon Range successor with a beefier IOD, but that doesn't add up.

40 CUs should require the equivalent of 300GB/s of bandwidth to stretch their legs, and even then the iGPU is still a little low on graphics power to complement those 16 CPU cores: for pure gaming, I'd take twice the CUs in exchange for half the CPU cores.

And I can't quite see the wisdom of stuffing such unevenly matched ingredients into a single box.

So

a) where does it get the bandwidth it seems to need?

b) why bother spending so much on an iGPU that's still no match for those CPU cores in gaming?

c) how would all of that work out in a mobile use case?

d) is there a server angle we're missing?

For a): HBM seems out because of pricing and perhaps heat. A stack or two of Lunar Lake-style LPDDR5 RAM on the die carrier might deliver the bandwidth, but where would it go? Strix Halo isn't AM5, so it could have a much roomier die carrier, perhaps even with two or more stacks, but all that effort doesn't seem to promise deployment at scale... unless consoles were suddenly to adopt it exclusively. But I can't see those going for 16 cores anytime soon.

Btw: did I mention that I'd just love to still have DIMM sockets for normal RAM expansion and pure CPU use?

b) That's the biggest puzzle for me: it's just too much CPU power for what the iGPU can deliver. And without extra memory channels, not even AI would make it attractive. With an iGPU this size, adding a dGPU just seems very wasteful; some might still want that, but it's too niche for AMD. I even wonder whether they might significantly reduce the PCIe lanes from that IOD if desktop/workstation isn't really a focus area.

c) How much of that power would be usable at 15/30/45/60/90/120 watts? What would the devices look like? And how would that translate into the vast volumes that AMD typically requires to build a bespoke chip?

d) My impression is that AMD starts all designs with a server angle first and only grudgingly serves the less lucrative markets. So are we missing a smart server angle for Strix Halo?

gamer1227

Looks like it will have quad-channel memory, so bandwidth is not that bad.

B: It looks like it's not a gaming APU; the leaks suggest it will be marketed for laptop workstations, which require a lot of CPU power.

C: The leaks suggest it will max out at 120 watts, which is perfect for mid-size workstations that are neither ultra-thin nor bulky.

D: Server CPUs don't have iGPUs.

abufrejoval

Hmm, how would that work? Done externally, like on a Threadripper, it would be way too expensive for a desktop motherboard, so it would have to be split into two channels on-die and two external.

gamer1227 said: Looks like it will have quad-channel memory, so bandwidth is not that bad.

I'd love something like that, but can they sell such a thing in the volumes that justify it?

That's too niche a market for AMD, I'd say: I just can't see it scaling to where they'd get the return they need.

gamer1227 said:

B: It looks like it's not a gaming APU; the leaks suggest it will be marketed for laptop workstations, which require a lot of CPU power.

True, such a machine would be attractive for some, if the price was right. But you can build something like that much more easily using a dGPU and a normal SoC.

gamer1227 said:

C: The leaks suggest it will max out at 120 watts, which is perfect for mid-size workstations that are neither ultra-thin nor bulky.

One might argue that the MI300 are APUs. And with that IOD, they might be able to build GPGPU machines... if they play it really smart.

gamer1227 said:

D: Server CPUs don't have iGPUs.

Again, I think we're missing something: there has to be a scale solution for that IOD that's not "laptop workstation".

Marlin1975

The problem is that, after all that, the extra cost may be as much as or more than just a separate GPU.

Quad-channel memory alone will cost more, not just for the extra memory but also for PCB design and testing.

Don't get me wrong, I'm curious to see what it can do and how it will play out. But this seems more like a chip made for a standalone gaming system, or for some other high-end task that isn't well served today and that maybe I'm not seeing.

qwertymac93

It has a 256-bit LPDDR5X interface, so well over double the bandwidth of a typical desktop CPU.

Overall performance may be comparable to the desktop RX 7600 in some situations.

Strix Halo is not a desktop product; it's for thin-and-light gaming laptops and workstations. Think MacBook Pro competitors.

Notton

https://www.tomshardware.com/pc-components/cpus/latest-amd-strix-point-leak-highlights-monster-120w-tdp-and-64gb-ram-limit

Going off previous leaks, the cache gives an effective bandwidth of 500GB/s, while raw bandwidth is 273GB/s with LPDDR5X at 8533MT/s.

But LPDDR5X at 9600MT/s has shipped since then, so if Strix Halo ships with that, it would have over 300GB/s of bandwidth.
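The raw numbers in this exchange follow from the usual peak-bandwidth formula: bus width in bytes multiplied by the transfer rate. The Python sketch below reproduces them. It deliberately ignores caches, so it says nothing about the rumored 500GB/s effective figure, and both the DDR5-6000 desktop baseline and the linear per-CU scaling from the RX 7600 are illustrative assumptions rather than leaked data.

```python
def peak_bandwidth_gbs(bus_width_bits: int, rate_mts: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal): bytes per transfer x transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * rate_mts / 1000  # MB/s -> GB/s


# 256-bit LPDDR5X, as attributed to Strix Halo in the comments.
strix_8533 = peak_bandwidth_gbs(256, 8533)  # ~273.1 GB/s
strix_9600 = peak_bandwidth_gbs(256, 9600)  # 307.2 GB/s

# Assumed "typical desktop" baseline: dual-channel (128-bit) DDR5-6000.
desktop = peak_bandwidth_gbs(128, 6000)     # 96.0 GB/s

# Desktop RX 7600 for scale: 128-bit GDDR6 at 18 Gbps feeding 32 CUs.
rx_7600 = peak_bandwidth_gbs(128, 18000)    # 288.0 GB/s
# Naive assumption: bandwidth needs scale linearly with CU count.
naive_40cu = rx_7600 / 32 * 40              # 360.0 GB/s

print(f"Strix Halo @ 8533 MT/s: {strix_8533:.1f} GB/s")
print(f"Strix Halo @ 9600 MT/s: {strix_9600:.1f} GB/s")
print(f"vs. desktop DDR5-6000:  {strix_8533 / desktop:.1f}x")
print(f"40 CUs at RX 7600 ratio: {naive_40cu:.1f} GB/s")
```

On these assumptions, a 256-bit LPDDR5X bus lands at roughly 2.8 times a dual-channel DDR5-6000 desktop, consistent with the "well over double" claim, though still short of a linearly scaled RX 7600; that gap is what the large on-die cache would be there to cover.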

gamer1227

I think the purpose is not to save money, since it will likely be aimed at workstations.

Rather, the point is to save space. A dedicated GPU needs its own VRM and package, which take up space, plus its own RAM dies; by integrating all of that, you get a more compact design and thus more room for extra SSDs or a larger cooler.

Also, a laptop can be built with LPCAMM2 so that all of the memory is replaceable, unlike a dGPU's VRAM. (Most decent workstations still have replaceable RAM; the ThinkPad P1 already uses LPCAMM2.)

subspruce

For all we know, that GPU could use V-Cache.