SanDisk's new High Bandwidth Flash memory enables 4TB of VRAM on GPUs, matches HBM bandwidth at higher capacity

Equipping AI GPUs with 4TB of memory.

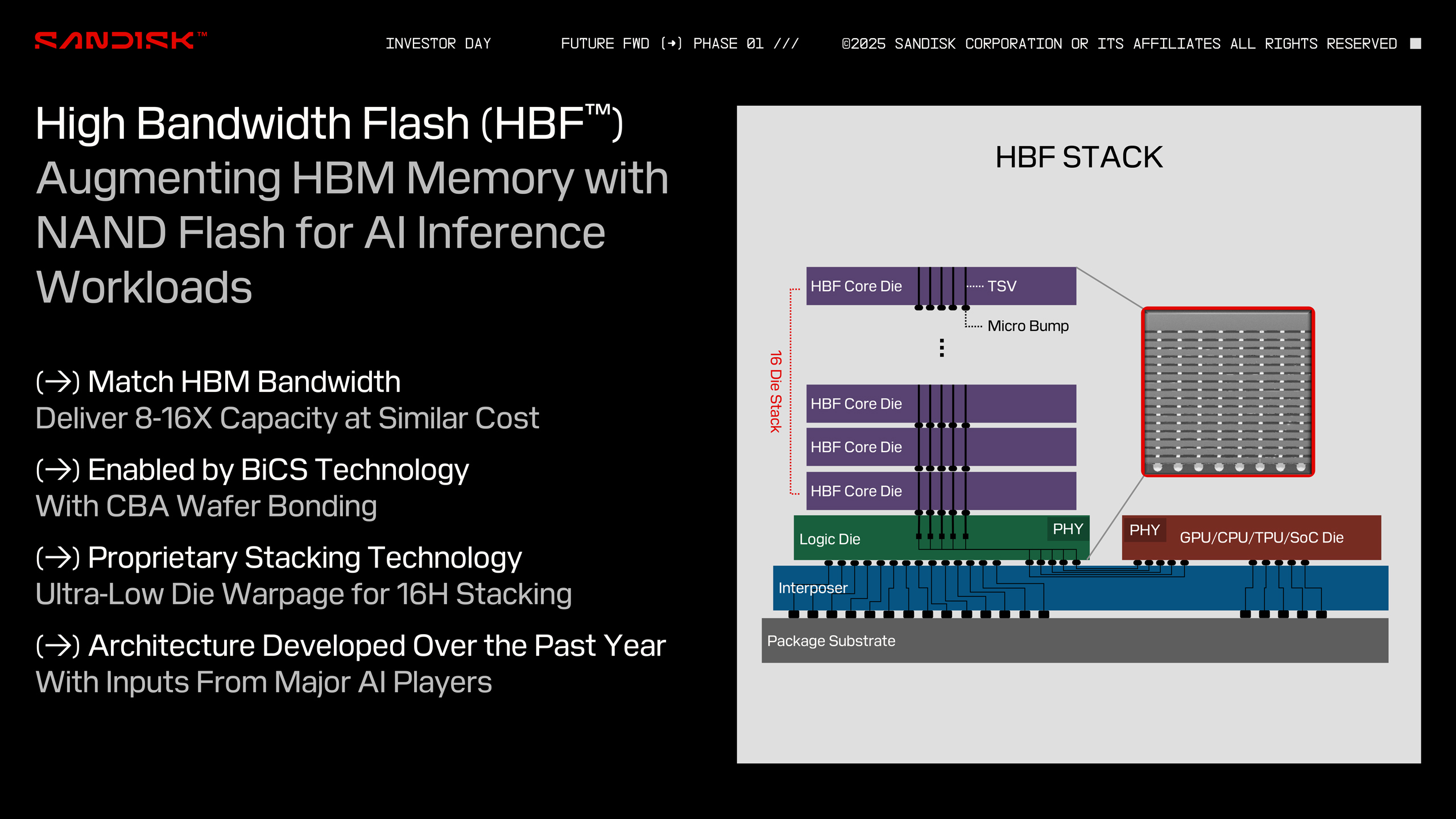

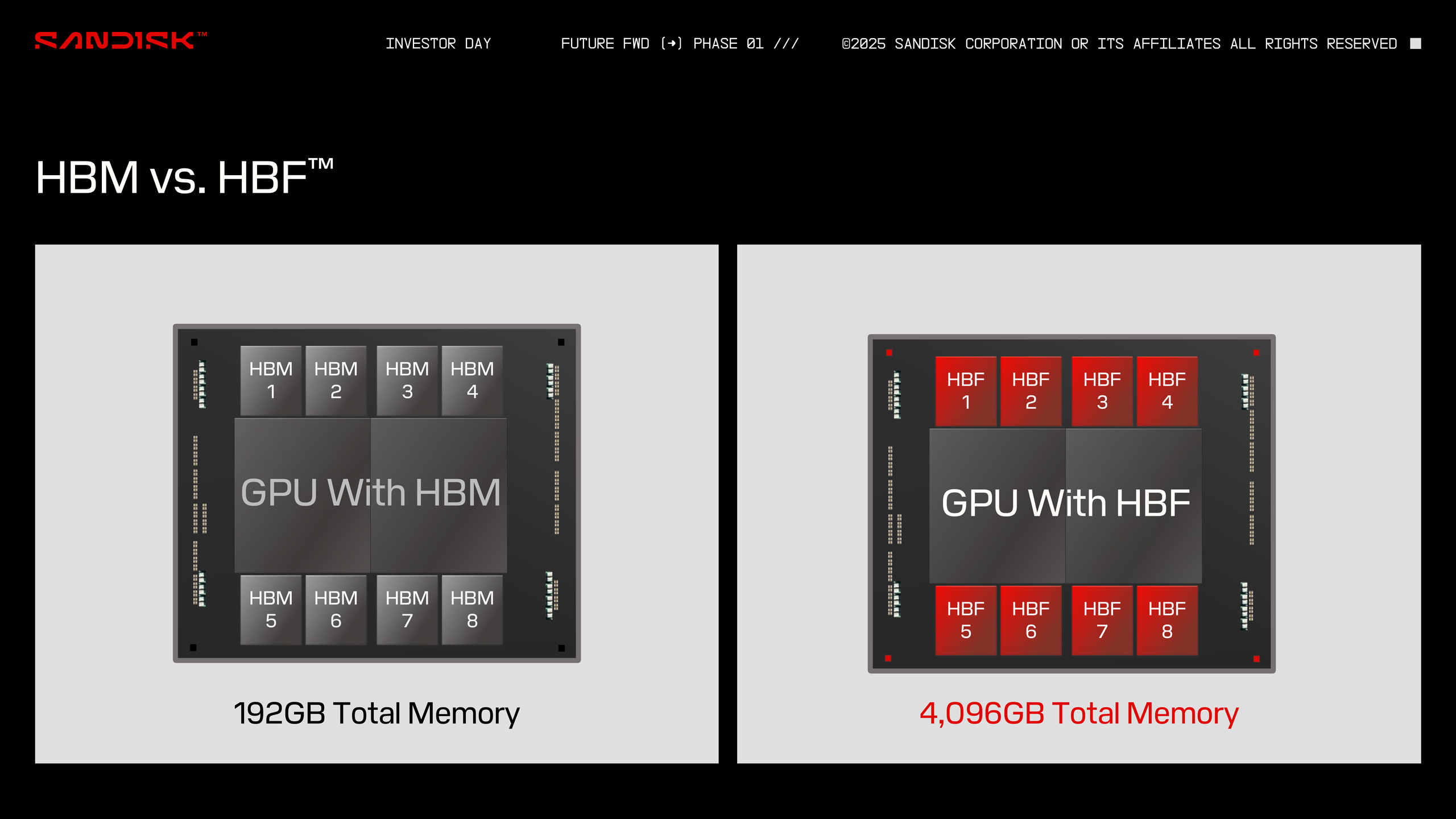

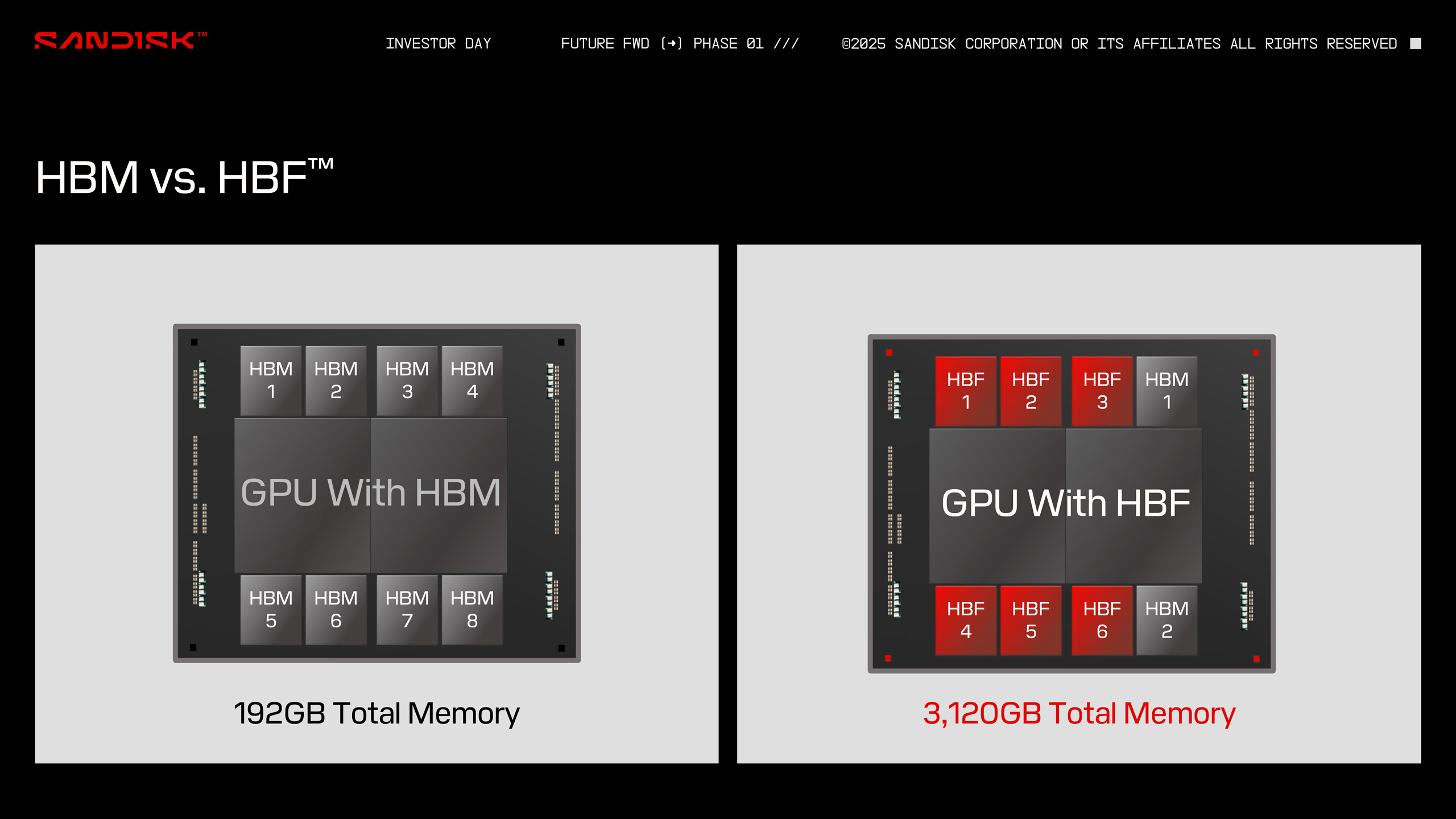

SanDisk on Wednesday introduced an interesting new memory type that could combine the capacity of 3D NAND with the extreme bandwidth enabled by high bandwidth memory (HBM). SanDisk's high-bandwidth flash (HBF) memory enables access to multiple high-capacity 3D NAND arrays in parallel, thus providing plenty of both bandwidth and capacity. The company positions HBF as a solution for AI inference applications that require high bandwidth and capacity coupled with low power consumption. The first-generation HBF can enable up to 4TB of VRAM capacity on a GPU, with more capacity to come in future revisions. SanDisk also foresees this tech making its way to cellphones and other types of devices. The company hasn't announced a release date yet.

"We are calling it the HBF technology to augment HBM memory for AI inference workloads," said Alper Ilkbahar, memory technology chief at SanDisk. "We are going to match the bandwidth of HBM memory while delivering 8 to 16 times capacity at a similar cost point."

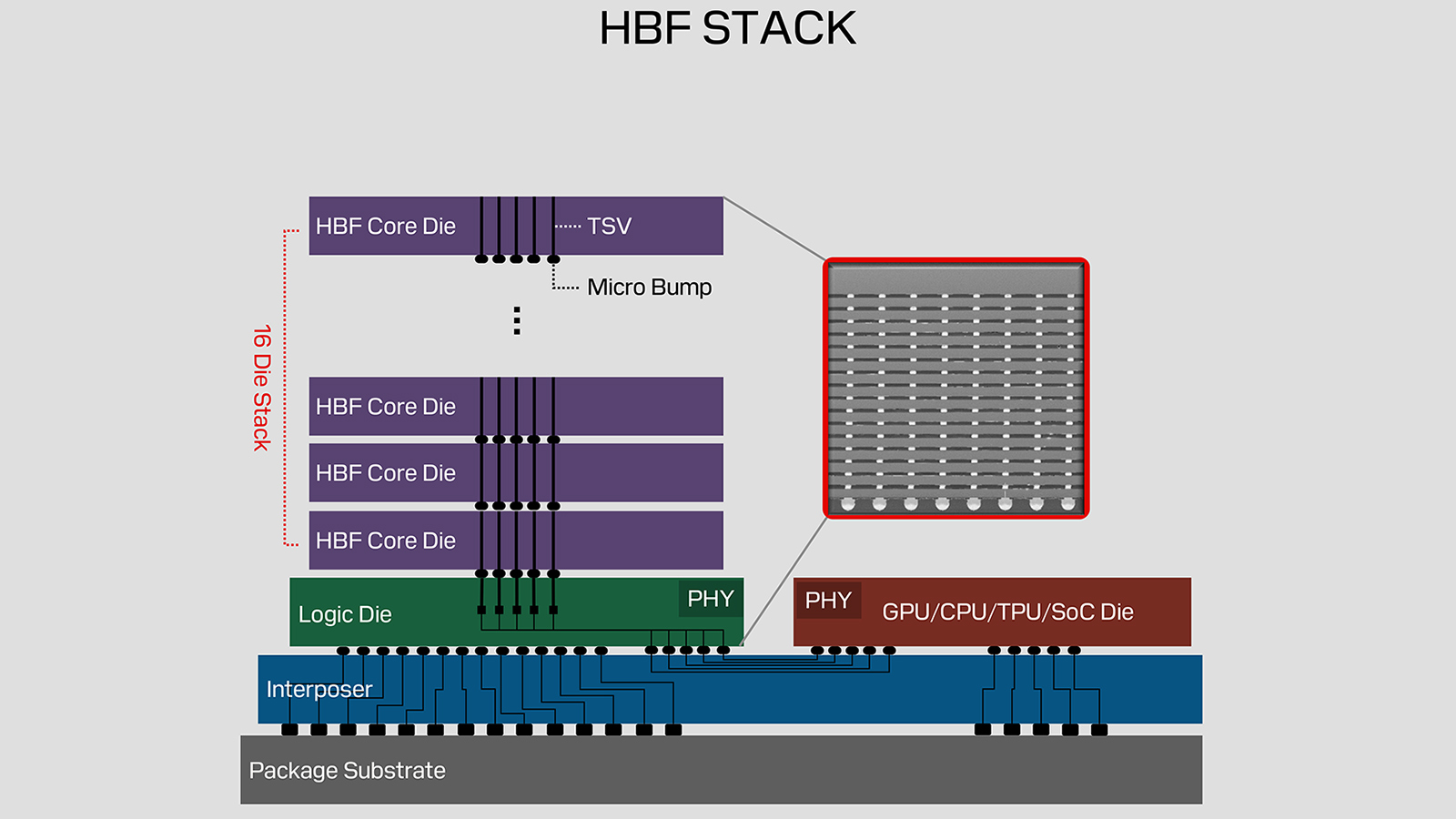

Conceptually, HBF is similar to HBM: it stacks multiple high-capacity, high-performance flash core dies, interconnected using through-silicon vias (TSVs), on top of a logic die that can access flash arrays (or rather, flash sub-arrays) in parallel. The underlying architecture of HBF is SanDisk's BiCS 3D NAND using the CMOS directly bonded to Array (CBA) design, which bonds a 3D NAND memory array on top of an I/O die made using logic process technology. That logic may be key to enabling HBF.

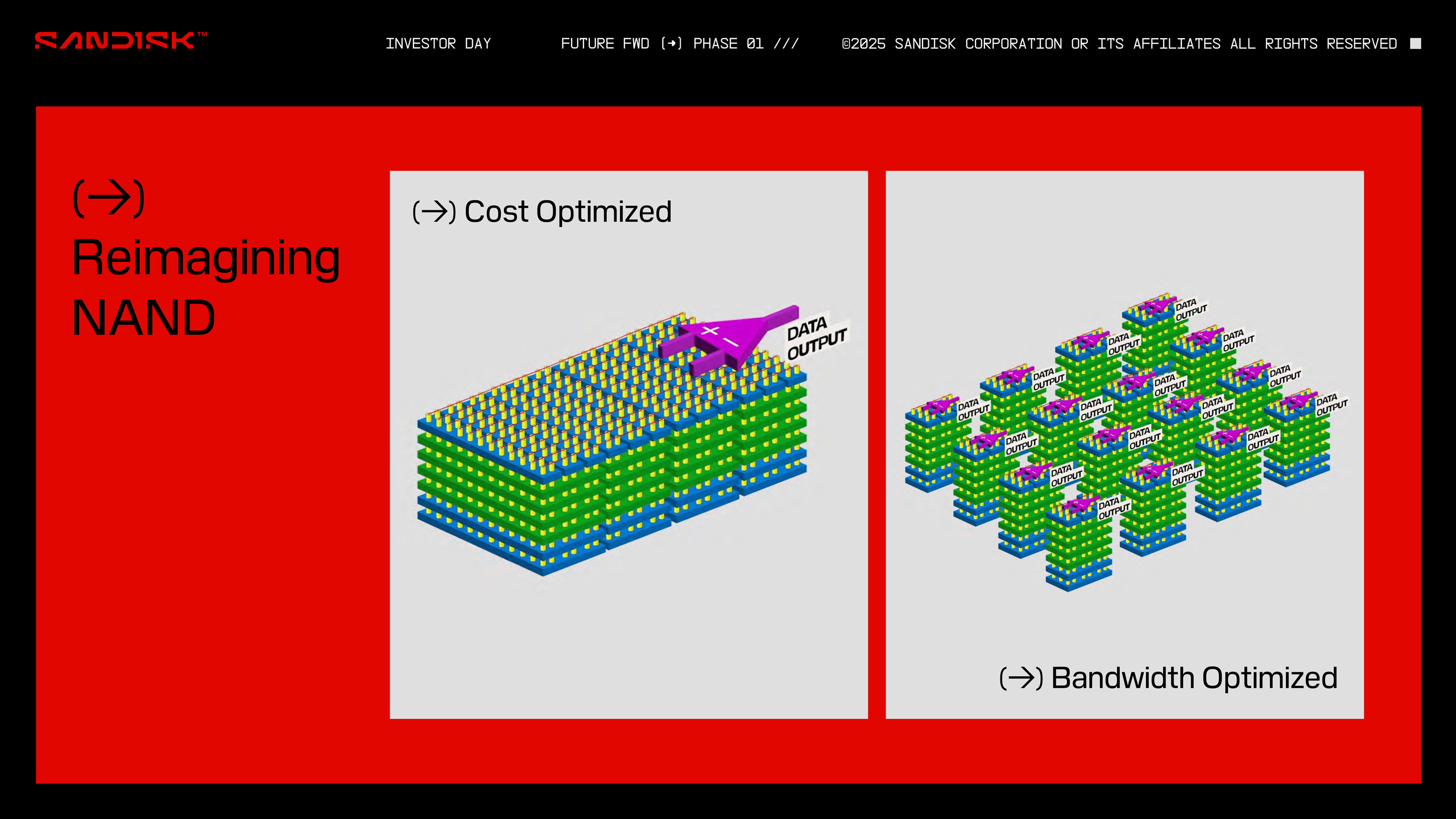

"We challenged our engineers and said, what else could you do with this power of scaling," said Alper Ilkbahar. "The answer they came up with […] was moving to an architecture where we divide up this massive array into many, many arrays and access each of these arrays in parallel. When you do that, you get massive amounts of bandwidth. Now, what can we build with this? We are going to build high bandwidth flash."

Traditional NAND die designs organize the core flash memory array hierarchically into planes, blocks, and pages. A block is the smallest erasable unit, and a page is the smallest writable unit. HBF seems to break the die into 'many, many arrays' so they can be accessed concurrently. Each sub-array (with its own blocks and pages) presumably has its own dedicated read/write path. While this resembles how multi-plane NAND devices work, the HBF concept seems to go far beyond them.
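
Why would splitting a die into many sub-arrays raise bandwidth? The toy Python model below is a minimal sketch, not SanDisk's design: it assumes each sub-array has its own read path, serves one page read at a time, and takes a fixed time per read. The 16 KiB page size and 50-microsecond read time are hypothetical placeholders, not HBF specifications.

```python
import math

# Hypothetical parameters for illustration; SanDisk has not disclosed these.
PAGE_BYTES = 16 * 1024   # assumed NAND page size
T_READ_US = 50.0         # assumed page read time per sub-array, in microseconds


def batch_read_time_us(num_pages: int, num_subarrays: int) -> float:
    """Time to read num_pages pages spread evenly across independent sub-arrays.

    Each sub-array serves one page read at a time, but different sub-arrays
    work concurrently, so reads complete in 'waves' of num_subarrays pages.
    """
    waves = math.ceil(num_pages / num_subarrays)
    return waves * T_READ_US


def bandwidth_gb_s(num_pages: int, num_subarrays: int) -> float:
    """Effective read bandwidth (decimal GB/s) for the batch above."""
    seconds = batch_read_time_us(num_pages, num_subarrays) * 1e-6
    return num_pages * PAGE_BYTES / seconds / 1e9


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(f"{n:>3} sub-arrays: {bandwidth_gb_s(1024, n):.2f} GB/s")
```

Under these assumptions, bandwidth scales linearly with the number of sub-arrays, but only when reads are spread evenly; if every request landed in the same sub-array, the extra arrays would sit idle.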

For now, SanDisk says that its first-generation HBF will use 16 HBF core dies. To enable such devices, SanDisk says it has invented a proprietary stacking technology with minimal warpage that allows 16 HBF core dies to be stacked, along with a logic die that can simultaneously access data from multiple HBF core dies. A logic die that can handle hundreds or thousands of concurrent data streams is likely to be considerably more complex than a typical SSD controller.
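
SanDisk hasn't said how its logic die spreads traffic across 16 core dies. A common technique in SSD controllers is to interleave consecutive addresses across parallel units, and the sketch below assumes simple round-robin striping, first across dies and then across sub-arrays within each die. The `map_page` function and the `SUBARRAYS_PER_DIE` value are hypothetical; only the 16-die count comes from SanDisk.

```python
from collections import Counter
from typing import NamedTuple

NUM_DIES = 16            # SanDisk: first-gen HBF stacks 16 core dies
SUBARRAYS_PER_DIE = 64   # hypothetical; SanDisk has not disclosed this


class Location(NamedTuple):
    die: int
    subarray: int
    page_in_subarray: int


def map_page(logical_page: int) -> Location:
    """Hypothetical round-robin mapping: consecutive logical pages go to
    different dies first, then to different sub-arrays within each die."""
    die = logical_page % NUM_DIES
    rest = logical_page // NUM_DIES
    subarray = rest % SUBARRAYS_PER_DIE
    page_in_subarray = rest // SUBARRAYS_PER_DIE
    return Location(die, subarray, page_in_subarray)


if __name__ == "__main__":
    # Count how often a sequential read of 2,048 pages hits each parallel unit.
    hits = Counter((loc.die, loc.subarray) for loc in map(map_page, range(2048)))
    print(len(hits), set(hits.values()))  # 1024 {2}
```

With this layout, a sequential read of 2,048 pages touches each of the 1,024 (die, sub-array) pairs exactly twice, so no single array becomes a bottleneck. A random-access workload would not spread out this neatly.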

Unfortunately, SanDisk does not disclose the actual performance numbers of its HBF products, so we can only wonder whether HBF matches the per-stack performance of the original HBM (~128 GB/s) or the shiny new HBM3E, which provides 1 TB/s per stack in the case of Nvidia's B200.


The only thing we know from a SanDisk-provided example is that eight HBF stacks provide 4 TB of NAND memory, so each stack can store 512 GB (about 21x more than an 8-Hi HBM3E stack with a capacity of 24 GB). A 16-Hi 512 GB HBF stack implies that each HBF core die is a 256 Gb 3D NAND device with some complex logic enabling die-level parallelism. Funneling hundreds of gigabytes of data per second from 16 3D NAND ICs is still quite a big deal, and we can only wonder how SanDisk achieves that.
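
The capacity arithmetic is easy to reproduce. The sketch below rederives the per-stack and per-die figures from SanDisk's example, using binary units (4 TB read as 4,096 GB), which is what the 512 GB per-stack figure implies. It also adds one extrapolation of our own, not SanDisk's: the bandwidth each of the 16 dies would need to supply if an HBF stack were to hit either HBM per-stack figure, assuming traffic is spread evenly across dies.

```python
TOTAL_GB = 4 * 1024     # SanDisk example: 4 TB over eight stacks (binary units)
STACKS = 8
DIES_PER_STACK = 16     # first-gen HBF stacks 16 core dies
HBM3E_8HI_GB = 24       # capacity of one 8-Hi HBM3E stack

stack_gb = TOTAL_GB / STACKS                 # GB per HBF stack
ratio_vs_hbm3e = stack_gb / HBM3E_8HI_GB     # capacity vs. one 8-Hi HBM3E stack
die_gbit = stack_gb / DIES_PER_STACK * 8     # gigabits per core die


def per_die_bandwidth(stack_gb_s: float, dies: int = DIES_PER_STACK) -> float:
    """GB/s each die must supply, assuming reads are spread evenly over all dies."""
    return stack_gb_s / dies


if __name__ == "__main__":
    print(f"{stack_gb:.0f} GB per stack, {ratio_vs_hbm3e:.1f}x an 8-Hi HBM3E stack")
    print(f"{die_gbit:.0f} Gb per core die")
    # Hypothetical targets: original HBM (~128 GB/s) and HBM3E on B200 (~1 TB/s).
    for target in (128, 1000):
        print(f"{target} GB/s per stack -> {per_die_bandwidth(target):.1f} GB/s per die")
```

Matching HBM3E would mean each NAND die sustaining roughly 62.5 GB/s under this even-split assumption, which shows why the die-level parallelism matters so much.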

What we are sure about is that HBF will never match DRAM in per-bit latency, which is why SanDisk stresses that HBF products are aimed at read-intensive, high-throughput applications, such as big AI inference datasets. For many AI inference tasks, the critical factor is high throughput at a feasible cost rather than the ultra-low latency that HBM (or other types of DRAM) provides. So, while HBF may not replace HBM any time soon, it might fill a market niche that requires high capacity, high bandwidth, and NAND-like cost, but not ultra-low latency. To simplify the transition from HBM, HBF uses the same electrical interface, but it requires some protocol changes, so it is not drop-in compatible with HBM.

"We have tried to make it as close as possible mechanically and electrically to the HBM, but there are going to be minor protocol changes required that need to be enabled at the host devices," said Ilkbahar.

SanDisk didn't touch on write endurance. NAND cells can only withstand a finite number of program/erase cycles. While SLC and pSLC technologies offer higher endurance than the TLC and QLC NAND used in consumer SSDs, they do so at the expense of capacity, which adds cost. NAND is also programmed at page granularity and erased at block granularity, whereas memory is accessed in far smaller units (e.g., a NAND page spans kilobytes and a block spans many pages, versus a 32-byte burst for an HBM3E access). That's another key challenge.
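
The endurance and granularity concerns can be put into rough numbers. Below is a minimal sketch under loudly hypothetical assumptions: a 16 KiB page, 3,000 program/erase cycles, ideal wear-leveling, and no write buffering or coalescing. SanDisk has disclosed none of these parameters, and real controllers add further overhead from block-level garbage collection that this model ignores.

```python
import math

# Hypothetical parameters for illustration only; SanDisk has not disclosed
# HBF page size, cell type, or endurance ratings.
PAGE_BYTES = 16 * 1024   # assumed NAND page size
ACCESS_BYTES = 32        # HBM3E-style 32-byte access


def write_amplification(update_bytes: int, page_bytes: int = PAGE_BYTES) -> float:
    """NAND bytes programmed per host byte for one page-aligned update,
    assuming no write buffering (a partial page still costs a full page)."""
    pages = math.ceil(update_bytes / page_bytes)
    return pages * page_bytes / update_bytes


def lifetime_days(capacity_bytes: float, pe_cycles: int,
                  host_writes_per_day: float, wa: float) -> float:
    """Days until the rated P/E cycles are used up, assuming ideal
    wear-leveling spreads writes evenly over the whole capacity."""
    total_nand_writes = capacity_bytes * pe_cycles
    return total_nand_writes / (host_writes_per_day * wa)


if __name__ == "__main__":
    print(write_amplification(ACCESS_BYTES))  # 512.0
    print(write_amplification(PAGE_BYTES))    # 1.0
    stack = 512e9  # one 512 GB HBF stack (decimal approximation)
    # Read-mostly case: reload a full 512 GB model once per day, page-sized writes.
    print(f"{lifetime_days(stack, 3000, 512e9, wa=1.0):.0f} days")
    # Memory-like case: 1 TB per day written as isolated 32-byte updates.
    print(f"{lifetime_days(stack, 3000, 1e12, write_amplification(ACCESS_BYTES)):.1f} days")
```

Under these assumptions, reloading a full model once a day would take about 3,000 days to exhaust the rated cycles, whereas a workload writing 1 TB per day as isolated 32-byte updates would exhaust them in about three days. That gap is why HBF is pitched at read-mostly inference data rather than as general-purpose memory.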

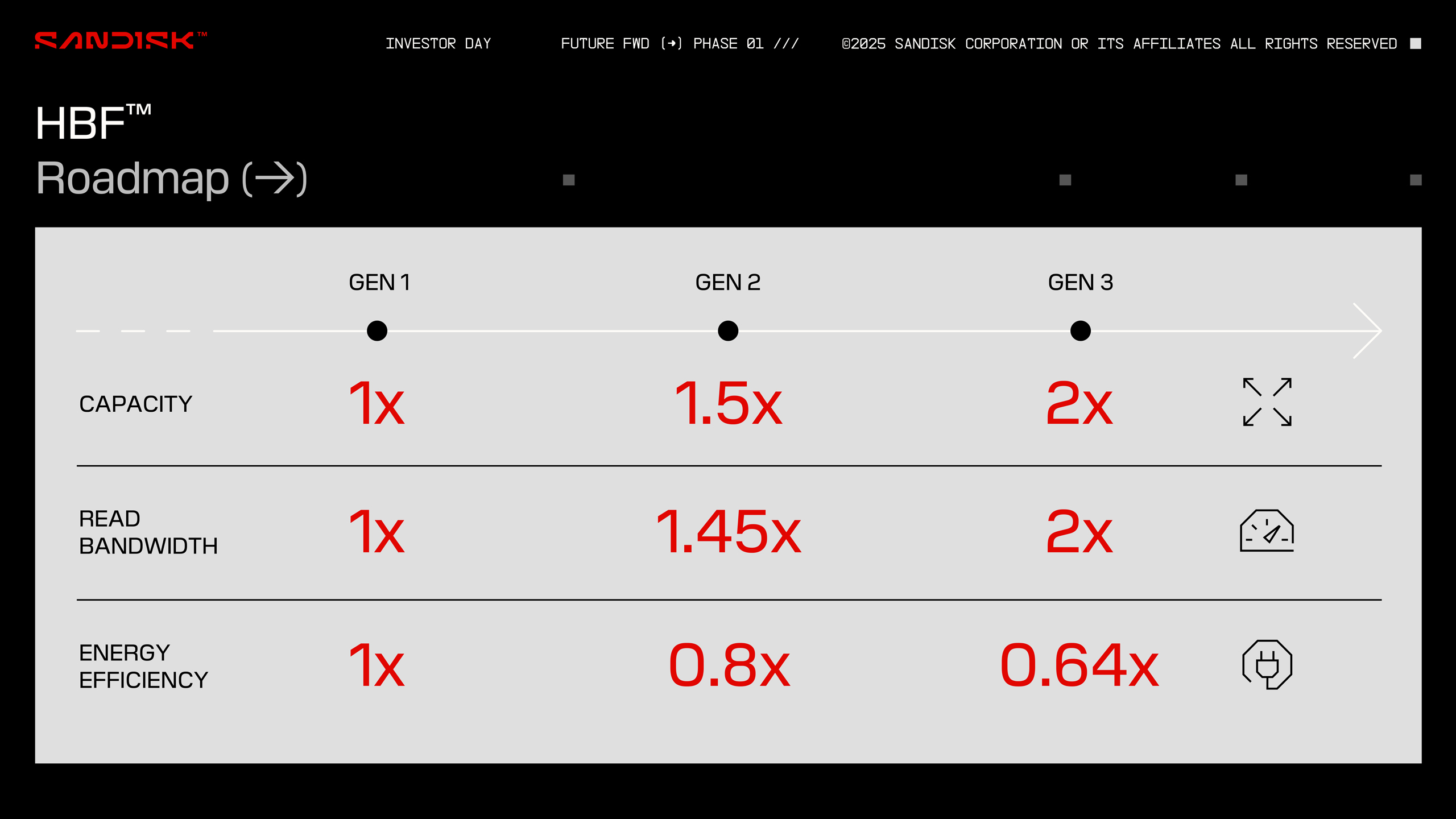

SanDisk has a vision of how its HBF will evolve over three generations. For now, though, SanDisk's HBF is largely a work in progress. SanDisk wants HBF to become an open standard with an open ecosystem, so it is forming a technical advisory board consisting of 'industry luminaries and partners.'

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

The article said: "HBF will never match DRAM in per-bit latency"

Yes, read latency is certainly a major issue they're having to contend with. The only way I see it being viable is if reads have sufficient coherency that you can basically stream data from the NAND, rather than waiting until receiving each read command to begin fetching the data.

The article said: "SanDisk didn't touch on write endurance. NAND has a finite lifespan that can only tolerate a certain number of writes."

Also reads, AFAIK. I recall hearing that the old cell design that I think was last used by Micron/Crucial and Intel, about 5 years ago, was particularly susceptible to wear-out from reads. That said, I'm still using some Crucial TLC SSDs of that vintage and they're holding up fine.

https://thememoryguy.com/3d-nand-benefits-of-charge-traps-over-floating-gates/

Even if less so, I'd suspect charge trap is still susceptible to read wear (I think I've even seen estimates of read-endurance in modern 3D NAND, but I don't recall where), unless someone knows otherwise.

The article said: "NAND is also typically written to at block granularity, whereas memory is bit-addressable. That's another key challenge."

Uhhh... no. HBM is just DRAM and it's not bit-addressable. That's one of the main differences between DRAM and SRAM. HBM3e has a transaction size of 32-byte bursts (i.e. per 32-bit subchannel, not per 1024-bit stack).

https://www.synopsys.com/glossary/what-is-high-bandwitdth-memory-3.html

It's not even specific to HBM, but that seemed the most relevant source to cite. It fundamentally has to do with how DRAM reads & writes work, which operate at row granularity. I'm surprised Anton didn't seem to know that.

https://en.wikipedia.org/wiki/Dynamic_random-access_memory#Operations_to_read_a_data_bit_from_a_DRAM_storage_cell

Also, I found this diagram pretty hilarious:

No, there needs to be some actual DRAM!

That's more believable.

:)

A Stoner

As often as one would think the information would change, how long would this flash memory last?

bit_user

A Stoner said: "As often as one would think the information would change, how long would this flash memory last?"

As long as the GPU has some regular DRAM (i.e. HBM, GDDR, DDR, LPDDR, etc.), the HBF can be used only to hold an AI model. I think it's a reasonable assumption the set of AI models a given machine needs to inference probably changes somewhat infrequently. So, this usage would be classed as "write rarely, read mostly". That said, if such GPUs are used in rentable cloud machines with frequent turnover, write endurance could be an issue.

While we're talking about endurance, I guess another thing that should be mentioned is the sensitivity of NAND flash to temperature. Stacking a bunch of it next to a big, hot GPU die should mean more frequent self-refreshes are necessary, which could take a toll on endurance. I assume they've accounted for that, while considering the viability of their solution.

BTW, I suspect they're probably using a lower bit-density per cell, maybe even pSLC. That could really help with endurance, as would the abundant capacity + wear-leveling.

JRStern

I likes it!

HBM with dynamic RAM that needs constant refreshes is a dumb, dumb design.

Put in 100MB of SRAM and 4TB of flash.

Winner.

DRAM? Not on your GPU module, no sir, no ma'am.

... unless HBM4, 5, or 17 already specified something like this, which it should.

Alvar "Miles" Udell

So is this going to actually be a thing, or vaporware like X-NAND?

https://www.tomshardware.com/news/x-nand-technology-gets-patented-qlc-flash-with-slc-speed

usertests

bit_user said: "While we're talking about endurance, I guess another thing that should be mentioned is the sensitivity of NAND flash to temperature. Stacking a bunch of it next to a big, hot GPU die should mean more frequent self-refreshes are necessary, which could take a toll on endurance. I assume they've accounted for that, while considering the viability of their solution."

With the AI market being as hot as it is, the HBF-equipped product might be thrown away after 3-5 years anyway.

bit_user

JRStern said: "HBM with dynamic RAM that needs constant refreshes is a dumb, dumb design."

Why is that dumb? Modern DRAM now has refresh commands the memory controller can simply send, rather than forcing the host to read out data it doesn't need or want. AFAIK, the overhead imposed by refresh is now almost a non-issue, in DRAM.

As mentioned in the article, you cannot use NAND the same way as DRAM. This design was made for memory that you mostly just read from. It can't be used for training or HPC, because the NAND would burn out in probably a matter of days or weeks.

Finally, NAND has access latencies probably well into the realm of microseconds. This further restricts it to use with regular and predictable access patterns.

bit_user

usertests said: "With the AI market being as hot as it is, the HBF-equipped product might be thrown away after 3-5 years anyway."

That's the standard service life for most datacenter equipment. The concern would be if it burned out far quicker.

Nikolay Mihaylov

bit_user said: "Finally, NAND has access latencies probably well into the realm of microseconds. This further restricts it to use with regular and predictable access patterns."

NAND's latency is roughly 3 orders of magnitude higher than DRAM. Optane was ~2 orders of magnitude. Would've been a much better fit for this. Maybe called HBO - High Bandwidth Optane. Alas, Intel killed the only original technology they've had in a looong time.

subspruce

bit_user said: "BTW, I suspect they're probably using a lower bit-density per cell, maybe even pSLC. That could really help with endurance, as would the abundant capacity + wear-leveling."

I am pretty sure that true SLC would be used here. When it's being used as memory and the tech itself is a ticking time bomb, picking the time bomb that ticks slowest is the best option.