Colorful puts two M.2 SSD slots inside upcoming GeForce RTX 50-series GPU — Blackwell GPU repurposes unused PCIe lanes for fast storage

Upcoming iGame Ultra GPU adds two NVMe drives using PCIe bifurcation.

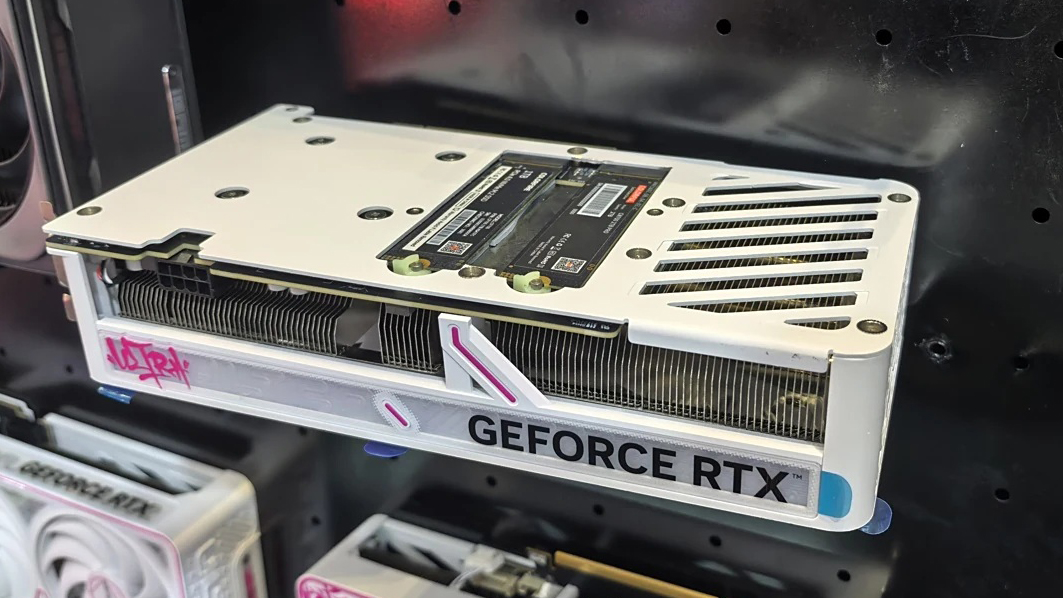

Colorful is working on a new RTX 50-series graphics card lineup that includes support for two M.2 SSD slots, according to a report from IT Home. The GPU was recently spotted at Bilibili World 2025, where the company showcased a prototype from its iGame Ultra series. While the exact model hasn’t been confirmed, the white dual-fan GPU features a cutout in the backplate designed to accommodate two M.2 drives. This effectively allows users to expand their storage directly through the graphics card.

We've seen similar implementations from other GPU manufacturers in the past, including Asus with its RTX 4060 Ti and, more recently, the RTX 5080 ProArt. What’s unique here is that Colorful appears to be taking it a step further with dual-drive support, although Maxsun was one of the first manufacturers to add two SSD slots on their Intel Arc B580 GPU late last year.

It has not been confirmed whether the GPU is an RTX 5050, 5060, or 5060 Ti; all three use a PCIe x8 interface, so any of them could be a candidate. Gigabyte went ahead and launched the RTX 5060 Ti Eagle GPU earlier this year with a shortened PCB and a halved PCIe x16 connector finger, effectively limiting it to an x8 configuration. In that sense, Colorful is making the right choice by taking advantage of system resources that would otherwise go to waste. When installed in a full PCIe x16 slot, these cards leave eight lanes unused, and those lanes can be repurposed for other functions. The company has divided the remaining eight lanes, allocating four to each SSD slot via PCIe bifurcation.
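As a rough illustration of that lane arithmetic, the Python sketch below splits whatever lanes a GPU leaves free in a slot across a number of SSDs. The function name `split_spare_lanes` and its parameters are hypothetical, and the sketch assumes only that PCIe links come in widths of x1, x2, x4, x8, or x16. It is not Colorful's implementation, and the bifurcation modes actually available depend on the CPU and motherboard firmware.

```python
# Standard PCIe link widths, widest first; a link cannot train at, say, x3.
VALID_WIDTHS = (16, 8, 4, 2, 1)


def split_spare_lanes(slot_lanes: int, gpu_lanes: int, ssd_count: int) -> dict:
    """Divide the lanes a GPU leaves unused evenly across SSD slots.

    Hypothetical helper for illustration only.
    """
    if ssd_count < 1:
        raise ValueError("ssd_count must be at least 1")
    if gpu_lanes > slot_lanes:
        raise ValueError("GPU cannot use more lanes than the slot provides")

    spare = slot_lanes - gpu_lanes
    share = spare // ssd_count
    # Round each SSD's share down to the nearest valid link width (0 if none fits).
    per_ssd = next((w for w in VALID_WIDTHS if w <= share), 0)
    return {
        "gpu": gpu_lanes,
        "per_ssd": per_ssd,
        "unused": spare - per_ssd * ssd_count,
    }


# An x8 GPU in an x16 slot with two SSDs: the x8/x4/x4 split described above.
print(split_spare_lanes(slot_lanes=16, gpu_lanes=8, ssd_count=2))
# A GPU that uses all 16 lanes leaves nothing for storage.
print(split_spare_lanes(slot_lanes=16, gpu_lanes=16, ssd_count=2))
```

With an x8 GPU and two drives, each SSD ends up with four lanes and nothing is left over; with an x16 GPU, the drives get no lanes at all unless the GPU gives some up.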

Adding an SSD directly to your GPU does sound interesting, but there are limitations. For starters, it seems to make sense only for graphics cards that use a PCIe x8 connection. In theory, the concept could also be applied to higher-end GPUs that occupy all 16 lanes, but that would mean splitting the slot's bandwidth between the GPU and the SSDs, potentially limiting the performance of the GPU itself.
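To put rough numbers on that trade-off, the sketch below estimates theoretical one-direction link bandwidth from the per-lane transfer rates of PCIe 3.0, 4.0, and 5.0 (8, 16, and 32 GT/s, all with 128b/130b encoding). The helper name `link_gb_per_s` is hypothetical, the figures ignore protocol overhead, and the link speed the Colorful prototype actually runs at has not been confirmed, so treat this as a back-of-the-envelope estimate only.

```python
# Per-lane raw transfer rates in GT/s for each PCIe generation.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}
# PCIe 3.0 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, ignoring protocol overhead."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8 * lanes


# Compare a full x16 link, a halved x8 link, and a single x4 SSD link.
for gen, lanes in [(5, 16), (5, 8), (4, 16), (5, 4)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_gb_per_s(gen, lanes):.1f} GB/s")
```

Under these assumptions, a PCIe 5.0 x8 link offers the same theoretical bandwidth as PCIe 4.0 x16, roughly 31.5 GB/s, so halving an x16 GPU's lanes costs the most on platforms limited to the older generation.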

Then there's the question of practicality, as most modern motherboards already offer multiple M.2 slots, enough to meet the needs of typical users. While it’s an innovative solution, it caters to a niche use case that doesn’t exist for most PC gamers or enthusiasts.

Colorful has yet to list its new GPU models with built-in SSD slots on its website. For now, the dual M.2 SSD design remains a prototype, with no word on pricing, availability, or which markets the company plans to target.


Kunal Khullar is a contributing writer at Tom’s Hardware. He is a longtime technology journalist and reviewer specializing in PC components and peripherals, and welcomes any and every question around building a PC.

Amdlova

It's a cool idea, but PCIe bifurcation is a mess...

How many motherboard manufacturers implement it and show that they do?

You have to pay extra and deal with tons of headaches to find something compatible.

They need to put a CPU directly on these graphics cards, all in one :)

FunSurfer

Amdlova said: "It's a cool idea, but PCIe bifurcation is a mess... How many motherboard manufacturers implement it and show that they do? You have to pay extra and deal with tons of headaches to find something compatible. They need to put a CPU directly on these graphics cards, all in one :)"

It's not a cool idea but rather a hot one, as the heat from a GPU is really, really good for an SSD...

93QSD5

FunSurfer said: "It's not a cool idea but rather a hot one, as the heat from a GPU is really, really good for an SSD..."

Speak for yourself, I'm totally going to get one of these and order a PCI to mini metal frying pan adapter to make scrambled eggs.

The heat's gotta go somewhere, right? Law of conservation of energy and stuff...

Notton

Are those SSDs attached with the rear side exposed, as in the hot side is sandwiched between PCBs?

thestryker

These are only going to appear in cards with 8 PCIe lanes, which means 180W TDP maximum. They're mounted between the flow-through section and the GPU, which means heat coming off the board should be minimal. If you were using an AIO, it would be possible to mount tall heatsinks on the SSDs, and there really shouldn't be any heat issues even with sustained load at that point.

tracker1

With PCIe Gen 5 it makes a lot of sense to do this... Even a 5090 is okay with 16 Gen 4 lanes, which is equivalent to 8 Gen 5 lanes... The x16 slot is a formality.

I would like to see CPUs support 32 lanes, though, in order to better support a few add-in options. For that matter, I think there's a lot of opportunity for accelerator boards again, from dedicated AI to additional co-processing units. I'd like to see some creativity in the space again.

sitehostplus

Amdlova said: "They need to put a CPU directly on these graphics cards, all in one :)"

That's already been done, pal. My last two CPUs (Intel i7 and AMD Ryzen 9 7950X3D) both had built-in graphics processors. 🤗

Azhrei21

This has to be the dumbest thing I've ever seen. MAYBE you can compensate for the heat off these cards... More heat is not that great for SSDs... But hey, if your GPU dies you get the added bonus of probably losing two drives, and the way GPUs are dying these days they'll be fried or melted.

abufrejoval

Amdlova said: "It's a cool idea, but PCIe bifurcation is a mess..."

That's not my experience.

I've used bifurcation on big Intel Xeons, small Xeons and desktops for at least a decade, and am still using it on plenty of Zen desktops today: it really works quite well, perhaps since it's rather basic. The PCIe root hub (typically the motherboard) just sets this up as per BIOS data and all add-in cards simply conform to the allocations given.

Amdlova said: "How many motherboard manufacturers implement it and show that they do? You have to pay extra and deal with tons of headaches to find something compatible."

If your mainboard or your BIOS won't support it, you're obviously out of luck. But the biggest issue is really just finding the option in the BIOS (there is a lot of variety out there).

So far I've always turned out lucky, but I've had a few scares where I thought I had bought the wrong hardware and failed to check for bifurcation support.

And on bigger Xeons, finding the proper slot to bifurcate can be a bit of a challenge, too.

I've seen comments that hinted at 4+4+4+4 support not always being present on Intel desktops, I no longer have one to check. On a Xeon-D 1541 still operating I'm using it for

Compared to having to employ a PCIe switch for resource sharing, it's ridiculously simple and I'm not aware of anyone charging extra for it. The add-in cards just work, I've never seen an issue there because even x16 GPUs have to make do with as little as an x1 allocation, as miners happily exploited.

Amdlova said: "They need to put a CPU directly on these graphics cards, all in one :)"

I had all sorts of comments race through my head here, but you're basically asking for an APU, perhaps a big one.

There are reasons for joining things closer together and for separating them: there are reasons, why we have the varieties.

Yet I've actually proposed that myself, mostly because putting a cheap Zen CPU on an add-in board was the cheapest way to get a PCIe switch thrown in for free: the IOD.