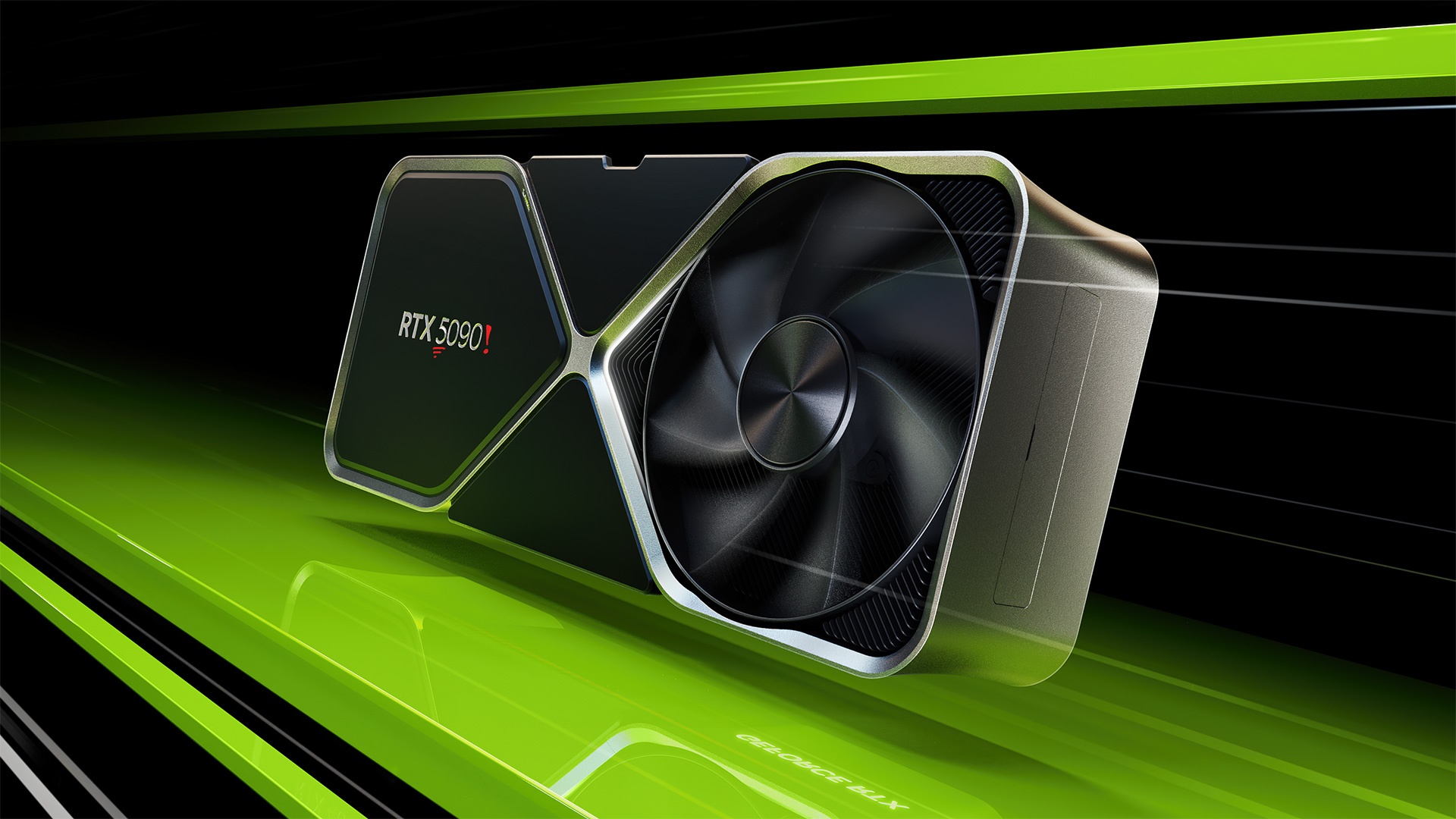

Leak claims RTX 5090 has 600W TGP, RTX 5080 hits 400W — up to 21,760 cores, 32GB VRAM, 512-bit bus

The 5090 sounds a lot like a dual-chip version of the 5080.

Preliminary specifications of Nvidia's GeForce RTX 5080 and GeForce RTX 5090 graphics cards have been published by @kopite7kimi, a reputable hardware leaker who tends to have access to accurate information about Nvidia's upcoming products. If the specifications are correct, then Nvidia's forthcoming GeForce RTX 5090 will be a monster with a 600W total graphics power rating (TGP). Many of these specs line up with previous leaks, just with a bit more detail in some areas. You can see our Nvidia Blackwell RTX 50-series GPUs guide for more details.

Nvidia's GeForce RTX 5090, the flagship Blackwell GPU for desktop PCs, is expected to be based on the GB202 graphics processor with 21,760 CUDA cores, mated with 32GB of GDDR7 memory using a 512-bit interface. That massive number of FP32 cores will consume an enormous amount of power, up to 600W if the leak is accurate. The card will certainly rank among the best graphics cards when it's released, even if the final specifications end up being slightly less impressive. Just don't ask about pricing...

By contrast, Nvidia's GeForce RTX 5080 is said to be powered by the GB203 GPU and will feature 10,752 CUDA cores, roughly half as many as the range-topping offering. RTX 5080 graphics cards are now projected to come with 16GB of GDDR7 memory on a 256-bit interface, with a TGP rating of 400W. With a decent bandwidth uplift enabled by GDDR7, the RTX 5080 should significantly outperform its predecessor at high resolutions and should be a potent graphics card.

Preliminary specifications of Nvidia's GeForce RTX 5000-series graphics cards

| Graphics Card | GPU | CUDA Cores | Memory | TDP | PCB Design |
|---|---|---|---|---|---|
| GeForce RTX 5080 | GB203-400-A1 | 10,752 | 16GB 256-bit GDDR7 | 400W | PG144/147-SKU45 |
| GeForce RTX 5090 | GB202-300-A1 | 21,760 | 32GB 512-bit GDDR7 | 600W | PG144/145-SKU30 |

While the potentially massive performance of the GeForce RTX 5090 certainly draws attention, another thing that strikes the eye is the huge performance gulf between the flagship RTX 5090 and its smaller RTX 5080 sibling. The RTX 5080 has almost exactly half the stream processors and half the memory interface width of the range-topping graphics processor. Its TGP will be two-thirds that of the top-tier card, so clocks might be higher to try to narrow the gap, but this still represents an even bigger difference than with the 40-series GPUs.
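The proportions described above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the leaked, unconfirmed figures from the table, and the names `LEAKED_SPECS`, `spec_ratios`, and `watts_per_core` are made up for this example.

```python
# Leaked (unconfirmed) figures copied from the table above.
LEAKED_SPECS = {
    "RTX 5080": {"cuda_cores": 10_752, "bus_width_bits": 256, "vram_gb": 16, "tgp_watts": 400},
    "RTX 5090": {"cuda_cores": 21_760, "bus_width_bits": 512, "vram_gb": 32, "tgp_watts": 600},
}


def spec_ratios(smaller, larger):
    """Return smaller/larger for every spec the two cards share."""
    return {key: smaller[key] / larger[key] for key in smaller if key in larger}


def watts_per_core(card):
    """Rated board power divided by CUDA core count (a crude proxy, not a measurement)."""
    return card["tgp_watts"] / card["cuda_cores"]


ratios = spec_ratios(LEAKED_SPECS["RTX 5080"], LEAKED_SPECS["RTX 5090"])
for name, value in ratios.items():
    print(f"5080 / 5090 {name}: {value:.1%}")

# How much more rated power per core the 5080 has relative to the 5090.
power_budget_gap = (
    watts_per_core(LEAKED_SPECS["RTX 5080"]) / watts_per_core(LEAKED_SPECS["RTX 5090"])
)
print(f"5080 power per core vs 5090: {power_budget_gap:.2f}x")
```

If the leak holds, the 5080 has roughly a third more rated power per CUDA core than the 5090, which is consistent with the idea that it could clock higher. TGP covers memory and the rest of the board too, so this is only a rough indicator.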

That potential performance disparity looks strange to say the least, and suggests Nvidia may want to try and create a new tier of performance, or perhaps limit the appeal of certain consumer cards as AI compute alternatives. The RTX 4090 on paper offers 68% more GPU cores, 50% more VRAM, 41% more memory bandwidth, and 13% more L2 cache than the RTX 4080. In practice, CPU limits hold the 4090 back at lower settings, but at 4K ultra it ended up being about 35% faster than the second-tier 40-series GPU, and the RTX 3090 was only about 15% faster than the RTX 3080. But these specs, if correct, suggest the 5090 could offer up to twice the performance of the 5080.
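The on-paper 40-series percentages can be reproduced the same way. This is a rough sketch: the spec values below come from Nvidia's published RTX 4080 and RTX 4090 specifications rather than from this article, so treat them as assumptions, and `percent_more` is a hypothetical helper name.

```python
# Published RTX 40-series specs (assumed here; not stated in the article).
RTX_4080 = {"cuda_cores": 9_728, "vram_gb": 16, "bandwidth_gb_per_s": 716.8, "l2_cache_mb": 64}
RTX_4090 = {"cuda_cores": 16_384, "vram_gb": 24, "bandwidth_gb_per_s": 1008.0, "l2_cache_mb": 72}


def percent_more(flagship, second_tier):
    """Percentage by which each flagship spec exceeds the second-tier card's."""
    return {key: (flagship[key] / second_tier[key] - 1) * 100 for key in flagship}


uplift = percent_more(RTX_4090, RTX_4080)
for name, value in uplift.items():
    print(f"4090 vs 4080 {name}: +{value:.1f}%")
```

Running the same helper on the leaked 50-series figures gives about +102% for CUDA cores and +100% for VRAM, which is where the "up to twice" reading comes from. Paper specs rarely translate one-to-one into frame rates, as the 35% real-world gap for the 4090 shows.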

While we can't say for certain why Nvidia decided to build its next-generation lineup this way, one of the technical explanations could be that Nvidia's GB202 processor may consist of two GB203 dies. Using a multi-chiplet design for Blackwell GPUs has been rumored for a while and the GB100/GB200 datacenter GPUs indeed adopt this architecture. However, using CoWoS-L packaging to enable the high-speed (~10 TB/s) interconnect between dies for a consumer-grade product seems like a very expensive idea.

An alternative to building a multi-chiplet GPU would be to build a monolithic graphics processor with over 21,760 CUDA cores at TSMC's 4nm-class process technology, which would result in a circa 650 mm^2 die. Such a design is hard to yield because of the large die size, which is why redundancies are usually present — the 4090's AD102 chip for example has a maximum of 144 Streaming Multiprocessors (SMs), but only 128 are enabled. So it's not impossible for Nvidia to go that route if it wants to. A monolithic chip would also be very expensive, however, and it would be weird to have such a huge gap between the RTX 5080 and RTX 5090. Other GPUs could try to plug the holes, though, and we could eventually see lower tier parts that might have something like 18,000 functional CUDA cores.

What we do know is that Nvidia uses the same chips for a variety of products: desktop, mobile, professional, and data center GPUs are all based on the same silicon designs. With AI being such a hot item right now, Nvidia might be creating a massive data center part as the first priority, and then productizing it as a consumer offering as well. If that's the case, don't be surprised if pricing ends up being quite a bit higher than the already exorbitantly priced RTX 4090 — and we could even see AI variants arrive before the consumer models.

For now, all the information we have about Blackwell-based graphics cards for client PCs is strictly unofficial. Apply the usual skepticism and know that, until Nvidia says something directly, things can and likely will change. There are still conflicting rumors on the release date as well, with some saying the RTX 50-series won't arrive until early 2025. If correct, that gives ample time for continued tweaking ahead of the launch. Until the official announcement, we can expect the rumor mill to stay busy churning out various theories and specifications.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Notton: I think it's the first option. 2x GB203 glued together, but clocked lower.

How else would a dual 400W turn into 600W?

Speculation

Did they choose 600W because they didn't want to add another 16pin 12VHPWR connector?

or does the CoWoS-L interconnect bottleneck and not benefit from more power into the chip?

or they are leaving headroom for an 800W 5090Ti?

I expect the 5090 to run cool, so long as your case can keep up with the total heat it dumps. That is a huge die and would be easy to cool with a vapor chamber.

Giroro: If it has 32GB of memory on a 512-bit bus then it's going to be an AI/Workstation card, and it will be priced accordingly.

Elusive Ruse: I sure hope the 5080 is not coming with the same 16GB of VRAM even if it’s GDDR7.

Flayed: They probably want to limit the VRAM on the 5080 so that the AI boffs have to buy the 5090.

newtechldtech: I don't think the 5090 has two chips. There is a missing 5080 Ti to fill in between in the future.

UnforcedERROR: Keeping 16GB on the X080 and below parts is crazy, really. Why only give the TOTL that upgrade?

thestryker: The RTX 4090 has 16384 cores on a cut down AD102 (18432 maximum) and is 602mm^2 on TSMC N5. N4P (assuming they're using the same process for enterprise and client) isn't enough smaller to make up for the core count increase within the same die size. So to me that would mean the 5090 is either harvested GB100 or indeed a dual die solution. With the word about yield issues on early Blackwell (sounded like largely interconnect related) it's possible Nvidia was able to pivot and get more usage of the die over for client.

**assuming this leak is accurate

24GB VRAM should be enough for anyone... well... for me anyway. :ROFLMAO: I haven't seen anything even come close to maxing it out, so the fact the 5090 will have 32GB isn't a dealmaker either way.


Percentage wise, can somebody please explain to me the projected performance increase of 5090 over 4090?