Lucky Facebook Marketplace shopper finds souped-up prototype GTX 2080 Ti inside a $500 PC — mythical Nvidia project features 12GB of VRAM and higher memory bandwidth

GTX branding suggests early pre-RTX engineering sample

A Reddit user has managed to get their hands on a rare prototype of Nvidia’s GeForce GTX 2080 Ti GPU. According to photos posted by u/RunRepulsive9867, the card resembles a Founders Edition model from Nvidia’s Turing generation, featuring a silver finish and dual cooling fans. Interestingly, the prototype is branded “GeForce GTX” instead of “RTX,” suggesting that it may have been an early engineering sample produced before Nvidia finalized its decision to introduce the RTX branding to emphasize its ray tracing capabilities.

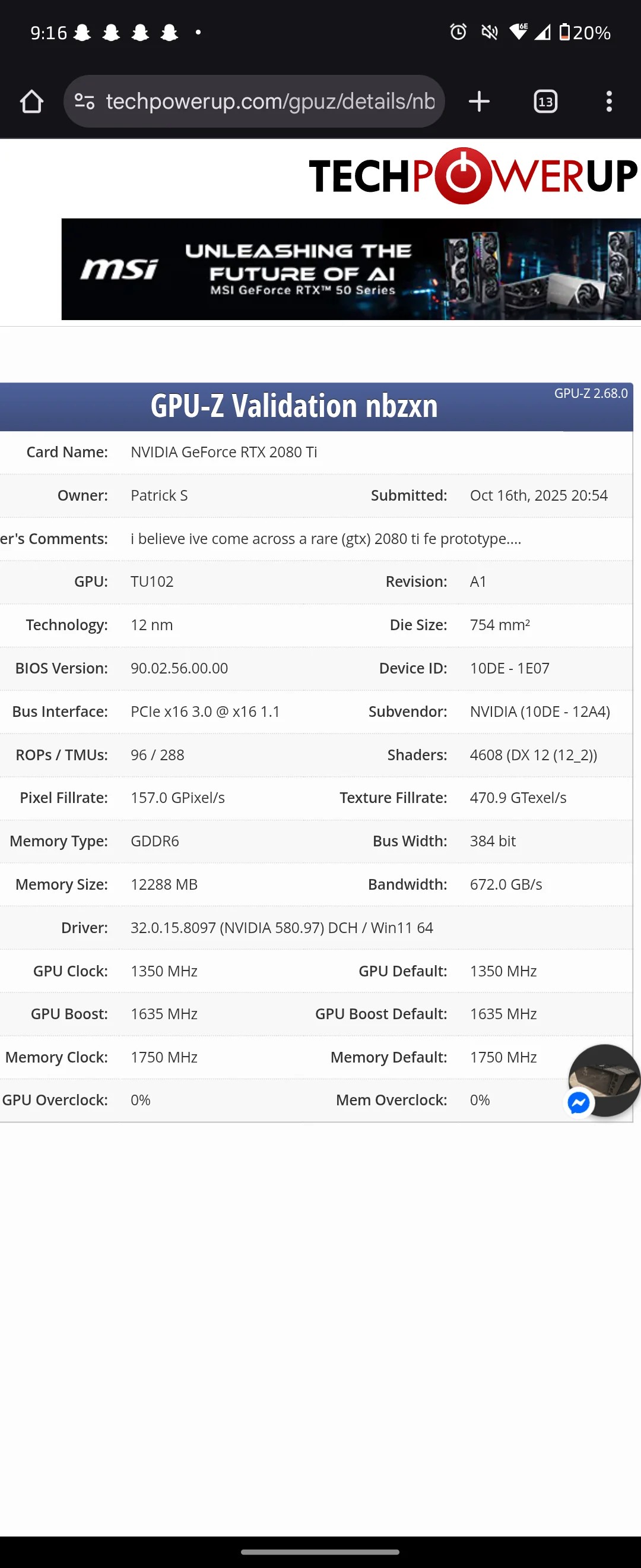

As per the Reddit post, u/RunRepulsive9867 found the unique GPU inside a PC they purchased from Facebook Marketplace for $500. After some tests, the owner of the card verified its specifications using GPU-Z, which confirmed that it was indeed an RTX 2080 Ti internally, but equipped with 12GB of GDDR6 VRAM, compared to the 11GB found on the retail version. It also featured a wider memory bus and higher bandwidth, along with increased ROPs (Render Output Units) and TMUs (Texture Mapping Units), though there was no mention of dedicated RT (Ray Tracing) cores.

Last month, we covered what appears to be the very same prototype when another Reddit user, u/Substantial-Mark-959, managed to get a faulty unit of the same card. The GPU was eventually fixed using a Founders Edition BIOS and modified drivers to function properly. Once operational, the card revealed the same specifications, including the 12GB of VRAM with a wider 384-bit memory bus that pushed total bandwidth to 672 GB/s. Unfortunately, the original Reddit post has been deleted.
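The 672 GB/s figure can be checked with simple arithmetic. The sketch below is illustrative: it assumes the prototype uses the same 14 Gbps GDDR6 as the retail RTX 2080 Ti (the article does not state the memory speed), and the retail card's 352-bit bus is a known specification that is not taken from the Reddit posts. The function name is made up for this example.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory bus in bits
    data_rate_gbps: effective per-pin data rate in Gbit/s
    """
    # Each pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return bus_width_bits * data_rate_gbps / 8


# Assumption: 14 Gbps GDDR6, matching the retail RTX 2080 Ti.
DATA_RATE_GBPS = 14.0

prototype = memory_bandwidth_gbs(384, DATA_RATE_GBPS)  # 12GB prototype, 384-bit bus
retail = memory_bandwidth_gbs(352, DATA_RATE_GBPS)     # 11GB retail card, 352-bit bus

print(f"Prototype: {prototype:.0f} GB/s")
print(f"Retail:    {retail:.0f} GB/s")
print(f"Uplift:    {100 * (prototype / retail - 1):.1f}%")
```

Under that assumption, the result matches the reported 672 GB/s. The extra 32 bits of bus width correspond to one additional 1GB memory chip, which would also account for the step from 11GB to 12GB.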

Judging by its specifications, it’s possible that Nvidia initially experimented with a more powerful TU102 configuration before finalizing the retail RTX 2080 Ti design. Alternatively, the card may have been part of early testing for a potential Titan or workstation variant that never saw the light of day. Engineering samples and prototypes like these rarely reach the public, as such units are mostly used for internal hardware validation, driver testing, and early performance benchmarking. They are usually covered by non-disclosure agreements or scrapped before the official launch, which makes working units valuable pieces of hardware for enthusiasts and collectors.


Kunal Khullar is a contributing writer at Tom’s Hardware. He is a longtime technology journalist and reviewer specializing in PC components and peripherals, and welcomes any and every question about building a PC.


DingusDog

11GB of V-Ram isn't the weirdest thing Nvidia has ever done but it's one of them. Around 2019 people were saying 11GB is overkill and no game will ever need that much. Now 12GB is considered insufficient and soon 16GB won't be enough either.

John Kiser

DingusDog said: 11GB of V-Ram isn't the weirdest thing Nvidia has ever done but it's one of them. Around 2019 people were saying 11GB is overkill and no game will ever need that much. Now 12GB is considered insufficient and soon 16GB won't be enough either.

12 gb is only insufficient to people that keep trying to push 4k with all the bells and whistles on.

upsetkiller

DingusDog said: 11GB of V-Ram isn't the weirdest thing Nvidia has ever done but it's one of them. Around 2019 people were saying 11GB is overkill and no game will ever need that much. Now 12GB is considered insufficient and soon 16GB won't be enough either.

A few games by talentless or lazy back for devs don't really signal that; the overwhelming majority of games never cross 8GB on my ultrawide 1440p display. Channels like HUB like to create drama for no reason lol. Until 2024 I was on a 10GB 3080 and not a single game gave me trouble. With neural compression starting to be integrated in upcoming games, this won't be an issue in the future.

lily_anatia

meanwhile Radeon VII with 16 GB HBM2 at 1 TB/s is only $200 on eBay 🤷🏼

I get that it's a "rare" engineering sample, but I'd say it's still only worth about $100, maybe $150.

thisisaname

lily_anatia said: meanwhile Radeon VII with 16 GB HBM2 at 1 TB/s is only $200 on eBay 🤷🏼 I get that it's a "rare" engineering sample, but I'd say it's still only worth about $100, maybe $150.

Collectors sometimes pay more than normal people would.

lily_anatia

thisisaname said: Collectors sometimes pay more than normal people would.

that's why I said maybe $150.

thestryker

This was likely just an early iteration which we've seen more evidence of lately that nvidia has done. Various 3080 configurations and a different 4090 have popped up. I always find these interesting as a what if type of situation.

As for the VRAM situation, anyone who thinks things are fine as they are should consider that it's artificial segmentation and nothing else. VRAM is not a significant driver of video card cost, but it sure does allow them to make bigger margins on cards with more. 12GB VRAM is plenty for the ~4070 performance level (and below) and only time will tell if that's also true for ~5070 performance level, but I think it likely is. 8GB VRAM cards really shouldn't be sold anymore except for true entry level. That is a capacity which is holding development back in a similar fashion to the complaints we hear about the Xbox Series S. While I'm sure developers could get around this limitation while providing a better experience for everyone without, it's unlikely to make monetary sense.

When I was looking to buy a new card I passed on the 3070 due to VRAM and history quickly proved that to be a smart decision. The 10GB on the 3080 also bothered me which caused me to not get one early when they were reasonably priced. In the end I'm happy I didn't because I ended up with a 12GB model and don't have to worry about VRAM at playable settings for this card.

dalauder

rx7ven said: $500 for a 7 year old GPU is hardly considered "lucky"

Exactly what I was thinking. I bought an RTX 2080 Ti for $247 three years ago. Admittedly, that was a solid price, but $500 today for one that will likely have driver problems is terrible.

dalauder

lily_anatia said: meanwhile Radeon VII with 16 GB HBM2 at 1 TB/s is only $200 on eBay 🤷🏼 I get that it's a "rare" engineering sample, but I'd say it's still only worth about $100, maybe $150.

That would be true if Nvidia or AMD still made graphics cards under $300. This card is probably worth $220 on eBay. There's simply nothing that will match it for under $250, maybe $300 new.