Maxsun unveils Intel dual-GPU Battlemage graphics card with 48GB GDDR6 to compete with Nvidia and AMD

A dual-GPU in 2025, and an Intel one at that

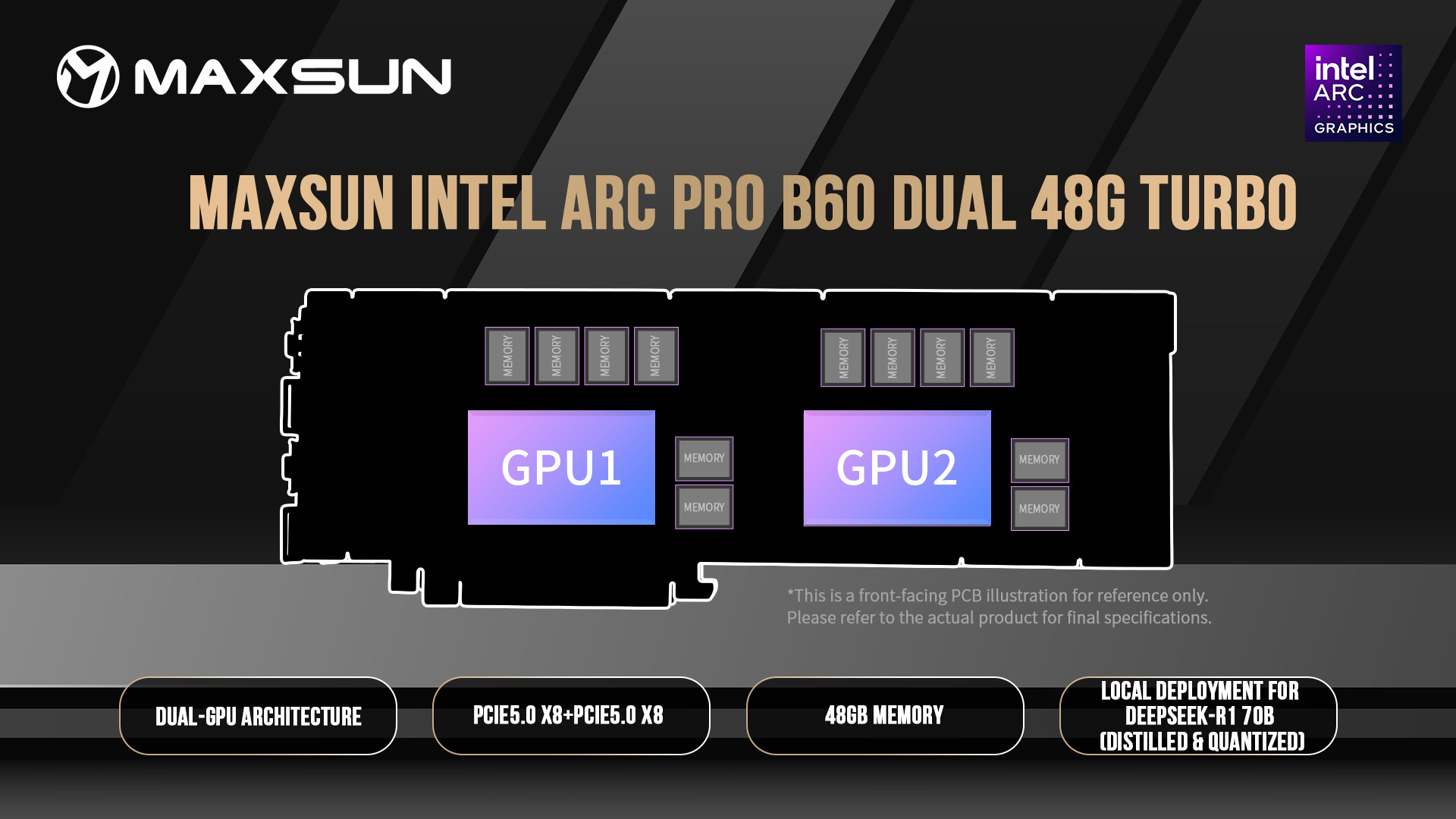

Intel announced its new Arc Pro B50 and Arc Pro B60 at Computex 2025. Maxsun has stitched two of the latter together to produce the Intel Arc Pro B60 Dual 48G Turbo, a dual-GPU solution with 48GB of GDDR6 memory designed to tackle the most demanding AI workloads.

Dual-GPU graphics cards were once popular, but technological advances have made them obsolete. The last consumer-grade dual-GPU graphics card from Nvidia was likely the GeForce GTX Titan Z from 2014, while AMD's was the Radeon Pro Duo from 2016. It has therefore been quite a while since a chipmaker introduced a dual-GPU product for retail. Although the Arc Pro B60 Dual may not revive the trend, it remains noteworthy as Intel's first foray into the dual-GPU market. Then again, it's an AI graphics card, not a gaming one.

The Intel Arc Pro B60 Dual 48G Turbo essentially combines two Arc Pro B60 graphics cards on one board. It uses two Battlemage BMG-G21 dies, each with its own memory. This is the same silicon that powers the gaming-oriented Arc B580, but each Arc Pro B60 carries twice the memory of its gaming counterpart.

Each GPU on the Intel Arc Pro B60 Dual 48G Turbo runs at Intel's reference specifications, with no factory overclock. That works out to 20 Xe cores, 20 RT units, 160 XMX engines, 160 Xe vector engines, and a 2,400 MHz clock speed per GPU.

Maxsun Intel Arc Pro B60 Dual 48G Turbo Specifications

| Component | GPU 1 | GPU 2 |
|---|---|---|
| GPU Model | Arc Pro B60 | Arc Pro B60 |
| Xe Cores | 20 | 20 |
| Ray Tracing Cores | 20 | 20 |
| XMX Engines | 160 | 160 |
| Xe Vector Engines | 160 | 160 |
| GPU Clock (MHz) | 2,400 | 2,400 |
| Peak INT8 TOPS | 197 | 197 |
| Core Voltage (V) | 1.05 - 1.36 | 1.05 - 1.36 |
| Power Phase Design | 6-phase VCCGT + 2-phase VCCDR + 2-phase VCCSA | 6-phase VCCGT + 2-phase VCCDR + 2-phase VCCSA |
| Cooling Solution | Vapor Chamber + Heatsink/Fan Combo | Vapor Chamber + Heatsink/Fan Combo |
| Memory Bus Width | 192-bit | 192-bit |
| Video Memory | 24GB GDDR6 | 24GB GDDR6 |
| Memory Bandwidth (GB/s) | 456 | 456 |
| Memory Speed (Gbps) | 19 | 19 |
| PCIe Interface | PCIe 5.0 x8 | PCIe 5.0 x8 |
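Because the table lists each GPU separately, the card-level totals are simply the per-GPU figures doubled. The short Python sketch below does that roll-up using the numbers from the table; the `GpuSpec` class and `card_totals` helper are illustrative names, not anything Maxsun or Intel publishes. The totals are aggregates across two independent GPUs, on the assumption (suggested by the per-GPU memory layout) that each GPU only sees its own 24GB rather than one pooled 48GB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    xe_cores: int
    xmx_engines: int
    int8_tops: int
    memory_gb: int


# Per-GPU figures copied from the specification table.
ARC_PRO_B60 = GpuSpec(xe_cores=20, xmx_engines=160, int8_tops=197, memory_gb=24)


def card_totals(gpu: GpuSpec, gpus_per_card: int = 2) -> GpuSpec:
    """Sum per-GPU specs across the GPUs on one board.

    These are aggregate numbers only: each GPU is assumed to work
    independently with its own local memory, not as one pooled device.
    """
    return GpuSpec(
        xe_cores=gpu.xe_cores * gpus_per_card,
        xmx_engines=gpu.xmx_engines * gpus_per_card,
        int8_tops=gpu.int8_tops * gpus_per_card,
        memory_gb=gpu.memory_gb * gpus_per_card,
    )


print(card_totals(ARC_PRO_B60))
# GpuSpec(xe_cores=40, xmx_engines=320, int8_tops=394, memory_gb=48)
```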

Each Arc Pro B60 has 24GB of GDDR6 memory running at 19 Gbps, which across its 192-bit memory interface yields up to 456 GB/s of memory bandwidth. The biggest selling point is that the Intel Arc Pro B60 Dual 48G Turbo has 48GB of total memory, 50% more than the GeForce RTX 5090 and matching the RTX Pro 5000 Blackwell and the Radeon Pro W7900.
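The 456 GB/s figure follows directly from the per-pin data rate and the bus width: multiply the two and divide by eight bits per byte. A quick Python check of that arithmetic is below, along with the capacity comparison. Note that the RTX 5090's 32GB is not in the table; it is the figure implied by the "50% more" claim.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate (Gbps) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


per_gpu = memory_bandwidth_gbs(19, 192)
print(per_gpu)  # 456.0

# Capacity comparison; 32GB is the GeForce RTX 5090's memory size (not from the table).
card_memory_gb, rtx5090_memory_gb = 48, 32
print(f"{card_memory_gb / rtx5090_memory_gb - 1:.0%} more")  # 50% more
```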

With 48GB on a single Intel Arc Pro B60 Dual 48G Turbo, users can install multiple cards in one system to scale capacity further. For example, Intel had a system with two on display, totaling 96GB. With a suitable motherboard, four cards can be installed for 192GB, akin to Project Battlematrix.
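Scaling out is straightforward multiplication: every card adds two GPUs with 24GB each. The sketch below (the `system_memory` helper is a hypothetical name for illustration) reproduces the 48GB, 96GB, and 192GB configurations mentioned above, and makes explicit that the per-GPU memory stays at 24GB no matter how many cards are installed.

```python
def system_memory(cards: int, gpus_per_card: int = 2, gb_per_gpu: int = 24) -> tuple[int, int]:
    """Return (GPU count, total VRAM in GB) for a number of dual-GPU cards."""
    gpus = cards * gpus_per_card
    return gpus, gpus * gb_per_gpu


for cards in (1, 2, 4):
    gpus, total_gb = system_memory(cards)
    # Total VRAM grows with card count, but each GPU still holds only 24GB locally.
    print(f"{cards} card(s): {gpus} GPUs, {total_gb}GB total")
```

Running it prints 48GB for one card, 96GB for two, and 192GB (eight GPUs) for four.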

The Intel Arc Pro B60 Dual 48G Turbo is designed to fit into a standard PCIe 5.0 x16 expansion slot; however, there is a catch. Each Arc Pro B60 communicates with the system independently through its own PCIe 5.0 x8 link, so the motherboard must support x8/x8 bifurcation on the PCIe 5.0 slot hosting the card.
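Bifurcation means each GPU gets half of the slot's lanes. As a rough sketch of what that implies, the Python below estimates one-direction link bandwidth from the PCIe 5.0 signaling rate (32 GT/s per lane) and its 128b/130b line encoding. These are assumptions taken from the PCIe 5.0 specification rather than from Maxsun, and the estimate ignores packet and protocol overhead, so real-world throughput will be somewhat lower.

```python
def pcie_bandwidth_gbs(transfer_rate_gt: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Approximate one-direction PCIe bandwidth in GB/s (line encoding only, no protocol overhead)."""
    return transfer_rate_gt * encoding * lanes / 8


PCIE5_GT_PER_LANE = 32.0  # PCIe 5.0 signaling rate per lane, in GT/s

x8 = pcie_bandwidth_gbs(PCIE5_GT_PER_LANE, 8)    # link available to each GPU
x16 = pcie_bandwidth_gbs(PCIE5_GT_PER_LANE, 16)  # full slot, if it were not split
print(f"x8: {x8:.1f} GB/s per direction, x16: {x16:.1f} GB/s")
# x8: 31.5 GB/s per direction, x16: 63.0 GB/s
```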


Like typical AI and workstation graphics cards, the Intel Arc Pro B60 Dual 48G Turbo uses a blower-style design and occupies two expansion slots. Maxsun has implemented a full-cover vapor chamber to cool the card's components, plus a metal backplate to help with heat dissipation. At 11.8 inches (300mm) long, the card falls within typical workstation territory, which normally spans 10.5 to 12 inches.

The TDP for the Arc Pro B60 ranges from 120W to 200W, depending on the partner design. Maxsun specifies a total TDP of 400W for its card, meaning each Arc Pro B60 is rated for 200W. To power it, the manufacturer has implemented a single 12VHPWR connector rated for up to 600W.
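The power budget is simple arithmetic: two GPUs at their per-GPU TDP against the connector's 600W rating. The sketch below runs both ends of the quoted 120W to 200W range; the `power_headroom_w` helper is a hypothetical name, and it deliberately ignores the additional power a PCIe x16 slot can supply, so it is a conservative view of the connector alone.

```python
CONNECTOR_LIMIT_W = 600  # single 12VHPWR connector rating
GPUS_PER_CARD = 2


def power_headroom_w(per_gpu_w: int, gpus: int, connector_w: int) -> int:
    """Connector capacity left after the rated board TDP, in watts (slot power ignored)."""
    return connector_w - per_gpu_w * gpus


# 120W and 200W bracket the partner-design TDP range quoted for the Arc Pro B60;
# Maxsun's card uses the 200W end (400W total).
for per_gpu_w in (120, 200):
    board_tdp_w = per_gpu_w * GPUS_PER_CARD
    print(board_tdp_w, power_headroom_w(per_gpu_w, GPUS_PER_CARD, CONNECTOR_LIMIT_W))
```

At Maxsun's 200W-per-GPU rating, that leaves 200W of connector headroom above the 400W board TDP.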

Each Arc Pro B60 GPU drives one DisplayPort 2.1 output with UHBR20 support and one HDMI 2.1a port, so the card offers two of each. The maximum supported resolution is either 8K (7680x4320) at 60 Hz or 4K (3840x2160) at 240 Hz.

Maxsun didn't reveal when the Intel Arc Pro B60 Dual 48G Turbo will be available or how much it will cost. The Arc Pro B60 costs approximately $500 per unit, so Maxsun's AI graphics card could plausibly retail for around $1,000.


Zhiye Liu is a news editor and memory reviewer at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

- Paul Alcorn, Managing Editor: News and Emerging Tech


-Fran-: Was the table meant to show the B50 and B60 side by side, or am I missing something? :D

EDIT: Ah, now I get it. It's literally "GPU chip 1 and 2".

Regards.

abufrejoval: The most important information, how the two GPUs are interconnected, is missing.

And that's most likely because it's bad news: they talk over the same two x8 links that are also used to talk to the host.

And with that, it's really a 2-in-1 card with two distinct 24GB GPUs, not a double-sized GPU with 48GB: a mere space saver.

The value of that approach is extremely limited; you'd have to compensate with model design manpower to exploit it.

In a normal market that wouldn't work, but we are far from that.

kealii123 (replying to abufrejoval): Sorry, I'm not understanding it that well. So this will perform the same as (or maybe worse than) just buying the two cards separately, and the only real benefit is motherboard space since they share a PCIe slot? And maybe it might be cheaper than two separate cards?

thestryker:

> abufrejoval said: And with that it's really a 2-in-1 card with two distinct 24GB GPUs, not a double-sized GPU with 48GB, a mere space saver.

That's definitely the point, as it enables the top configuration of Intel's "Project Battlematrix":

https://i.imgur.com/DOLuMfK.jpeg

Notton: GN did a teardown of the pre-production sample, and it looks like it's just two GPUs, each attached to its own eight PCIe lanes.

I don't see any traces or chips that interconnect the two, so it seems like a space-saving design.

[Embedded video: Y8MWbPBP9i0]

Amdlova: Kind of cool to have two GPUs in one system. If the software doesn't work with dual GPUs, you can choose which GPU drives it.

It's like a dual-CPU motherboard, maybe with some kind of NUMA memory pool. :)

Eximo: Well, maybe some techtuber will get one to test and run Ashes of the Singularity. It would be interesting at the least.

I can't think of any other titles that actually support multi-GPU.

tmrdev: This dual Maxsun card is not proven and is made by a Chinese company, so they must be cutting corners. If it is priced around 700 bucks, it could be a novelty purchase.

However, I’m going to move forward with a B60 24 GB card, maybe buy two to start things off.

The Nvidia Empire is collapsing; Intel is quietly becoming the platform for creative work. Get in now while prices are good.

abufrejoval:

> tmrdev said: This dual Maxsun card is not proven and is made by a Chinese company, so they must be cutting corners. If it is priced around 700 bucks, it could be a novelty purchase.

Not counting tariffs, $700 actually sounds like a small markup, with the only cut corner and risk being production scale. I really can't imagine them selling like hotcakes.

> tmrdev said: However, I'm going to move forward with a B60 24 GB card, maybe buy two to start things off.

If you have the space, that should be just as good (or bad), while offering the flexibility to repurpose the two separately.

> tmrdev said: The Nvidia Empire is collapsing; Intel is quietly becoming the platform for creative work. Get in now while prices are good.

Collapsing is where I believe Intel is far ahead in the lead, and that could become an issue in terms of support and long-term value.

After some initial evaluation, my B580 sits in storage, mostly as a spare. At €300 in January, it was fair value, but not a great GPU. I fear margins aren't where Intel needs them to be to carry on. It still retains some of the A770's issues around power consumption and has nearly no XeSS (and AI) support in games (apps).

If I were really hard-pressed for money, I'd probably go for a 5060 instead, even at 8GB. Otherwise, I'd aim for a 5060 Ti with 16GB or save for something bigger.

If I could swap the B580 for a 24GB variant at €50 extra, I'd probably do that, mostly for curiosity's sake. But I wouldn't buy a 24GB variant new: the value of the additional RAM may be much less than what you're hoping for.

I have a 24GB RTX 4090, and there aren't that many off-the-shelf, home-use models that fit that exact RAM size. Most of the 70B models really need much more, and even 35B models struggle. Designing and training your own size-matched variant, even if you can use an existing open-source dataset and model as a base, is way beyond what even Intel seems ready to invest: don't expect anyone else to do that unless it were their only choice.

I can't see that B60 card fitting any workload I care about. The base B580 isn't great at 1440p gaming, and 24GB doesn't improve that. But even for AI/ML, 24GB isn't a very useful RAM size, while the lack of software support may mean it delivers much less than a CUDA-capable card with similar hardware specs would.

Model design and training for denser models might be a niche, but even there you'd much rather use the CUDA ecosystem. For anything LLM, even huge piles of these won't do any good, even if you could afford to write your own software stack like DeepSeek did: not for design, not for training, and not even for inference.

I'd be happy to hear your experiences if you dare to try.

In cases like this, I like to make sure I have a 30-day free return window available.