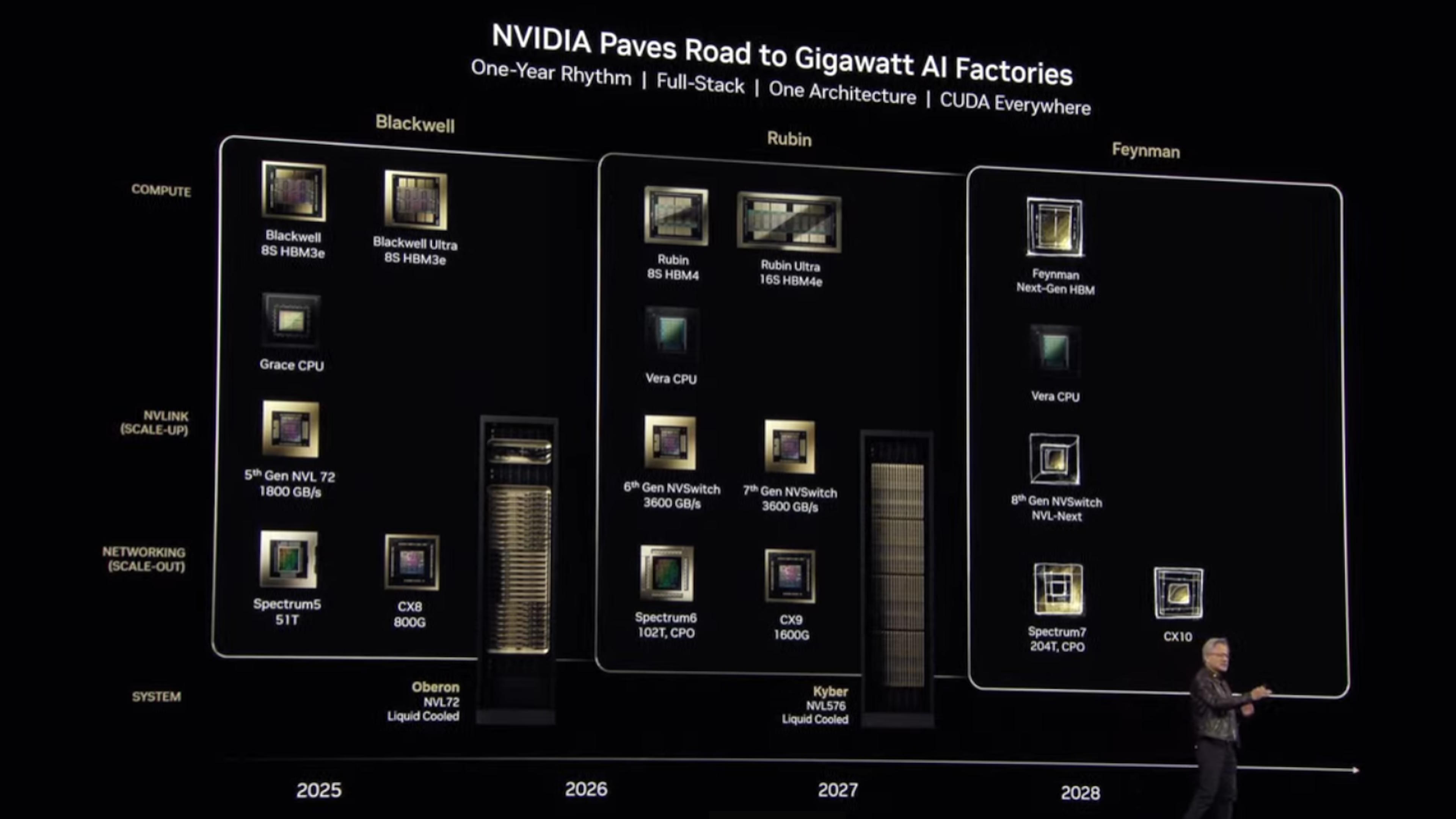

Nvidia announces Rubin GPUs in 2026, Rubin Ultra in 2027, Feynman also added to roadmap

NVL144 and NVL576 configurations coming down the pipe.

Nvidia announced updates to its data center roadmap for 2026 and 2027 at the company's GTC 2025 conference today, showcasing the planned configurations for the upcoming Rubin (named after astronomer Vera Rubin) and Rubin Ultra.

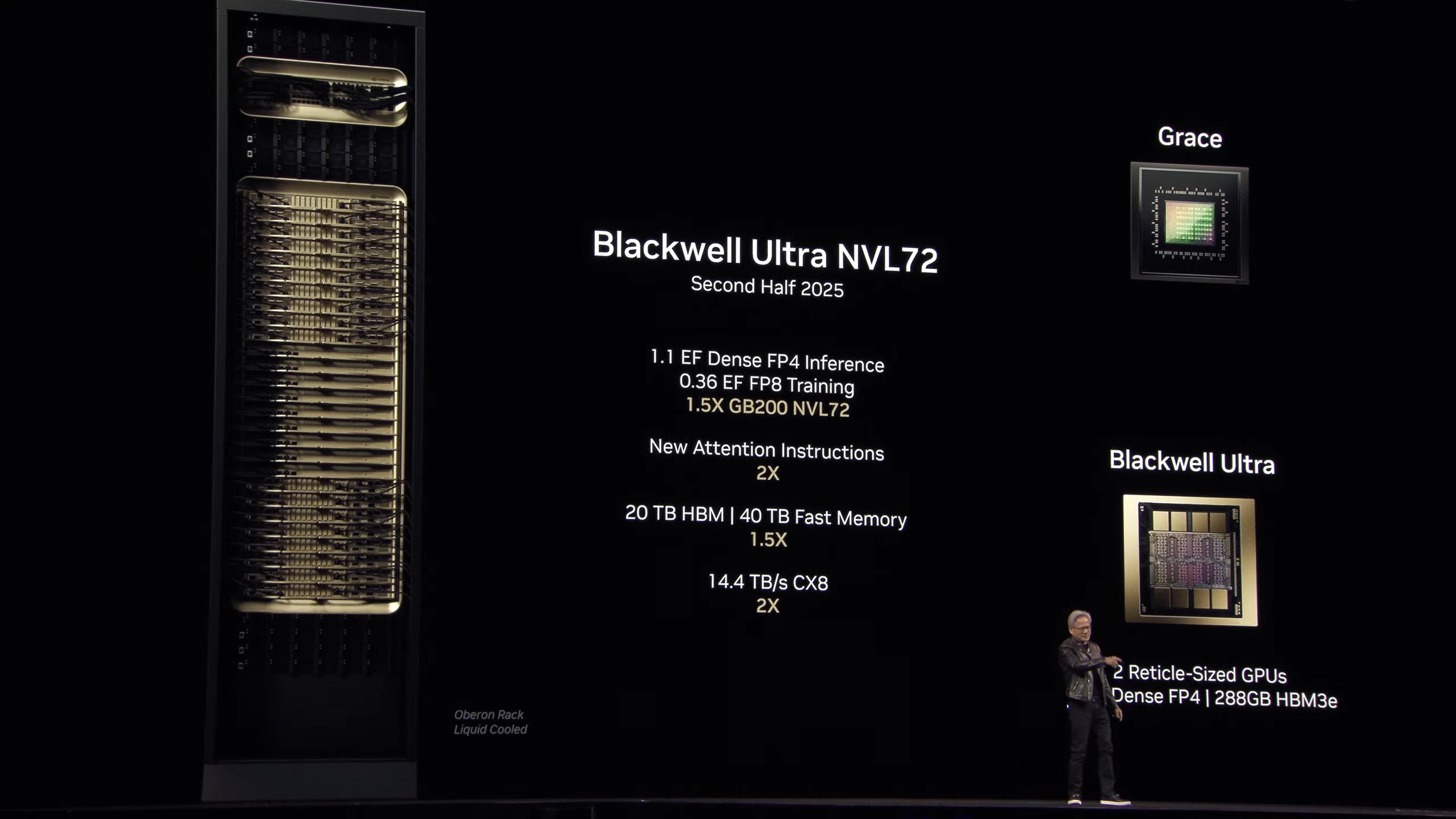

Even though the company has just finished bringing Blackwell B200 into full production, and has Blackwell B300 slated for the second half of 2025, Nvidia is already looking forward to the next two years and helping its partners plan for the upcoming transitions.

One of the interesting points made is that "Blackwell was named wrong." In short, Blackwell B200 actually has two dies per GPU, which CEO Jensen Huang says changes the NVLink topology.

So even though the company calls the current solution Blackwell B200 NVL72, Huang says it would have been more appropriate to call it NVL144, which is the naming Nvidia will use for the upcoming Rubin solutions.

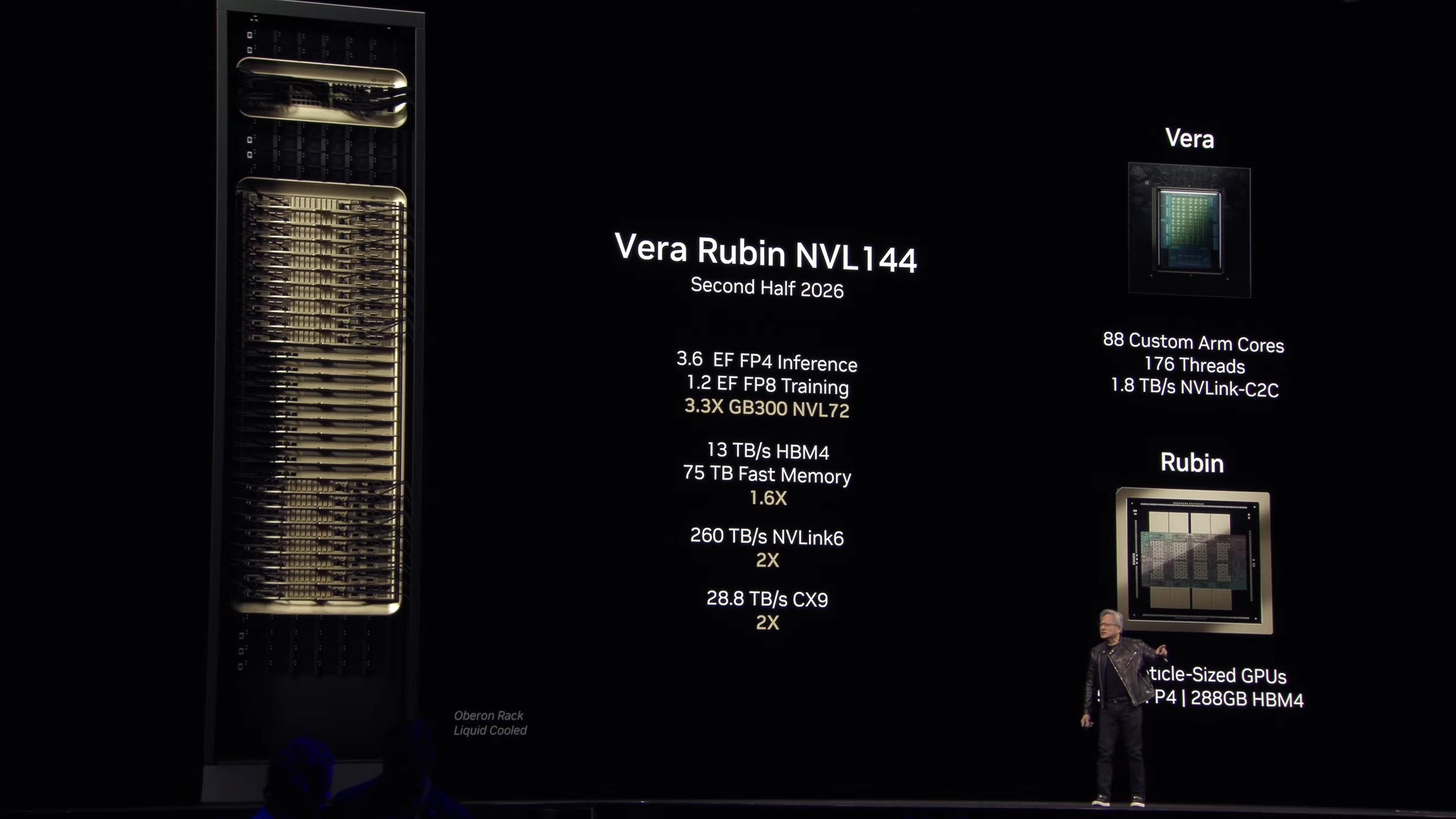

Above we have the Rubin NVL144 rack that will be drop-in compatible with the existing Blackwell NVL72 infrastructure. We have the same configuration data for the Blackwell Ultra B300 NVL72 in the second slide for comparison. Where B300 NVL72 offers 1.1 EFLOPS of dense FP4 compute, Rubin NVL144 — that's with the same 144 total GPU dies — will offer 3.6 EFLOPS of dense FP4.

Rubin will also have 1.2 ExaFLOPS of FP8 training, compared to only 0.36 ExaFLOPS for B300. Overall, it's a 3.3X improvement in compute performance.
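As a quick sanity check on that 3.3X figure, the sketch below divides the rack-level numbers quoted above. The dictionary keys and the `speedups` helper are illustrative names of our own, not anything from Nvidia.

```python
# Rack-level compute figures quoted in the article, in ExaFLOPS.
b300_nvl72 = {"fp4_dense": 1.1, "fp8_training": 0.36}
rubin_nvl144 = {"fp4_dense": 3.6, "fp8_training": 1.2}


def speedups(new, old):
    """Return new/old for every metric present in both racks."""
    return {metric: new[metric] / old[metric] for metric in new if metric in old}


ratios = speedups(rubin_nvl144, b300_nvl72)
for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")
```

Both ratios land close to 3.3X, so the headline multiplier holds for inference and training alike.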

Rubin will also mark the shift from HBM3/HBM3e to HBM4, with HBM4e used for Rubin Ultra. Memory capacity will remain at 288GB per GPU, the same as with B300, but the bandwidth will improve from 8 TB/s to 13 TB/s. There will also be a faster NVLink that will double the throughput to 260 TB/s total, and a new CX9 link between racks, with 28.8 TB/s (double the bandwidth of B300 and CX8).

The other half of the Rubin family will be the Vera CPU, replacing the current Grace CPUs. Vera will be a relatively small and compact CPU, with 88 custom ARM cores and 176 threads. It will also have a 1.8 TB/s NVLink-C2C (chip-to-chip) interface to link with the Rubin GPUs.

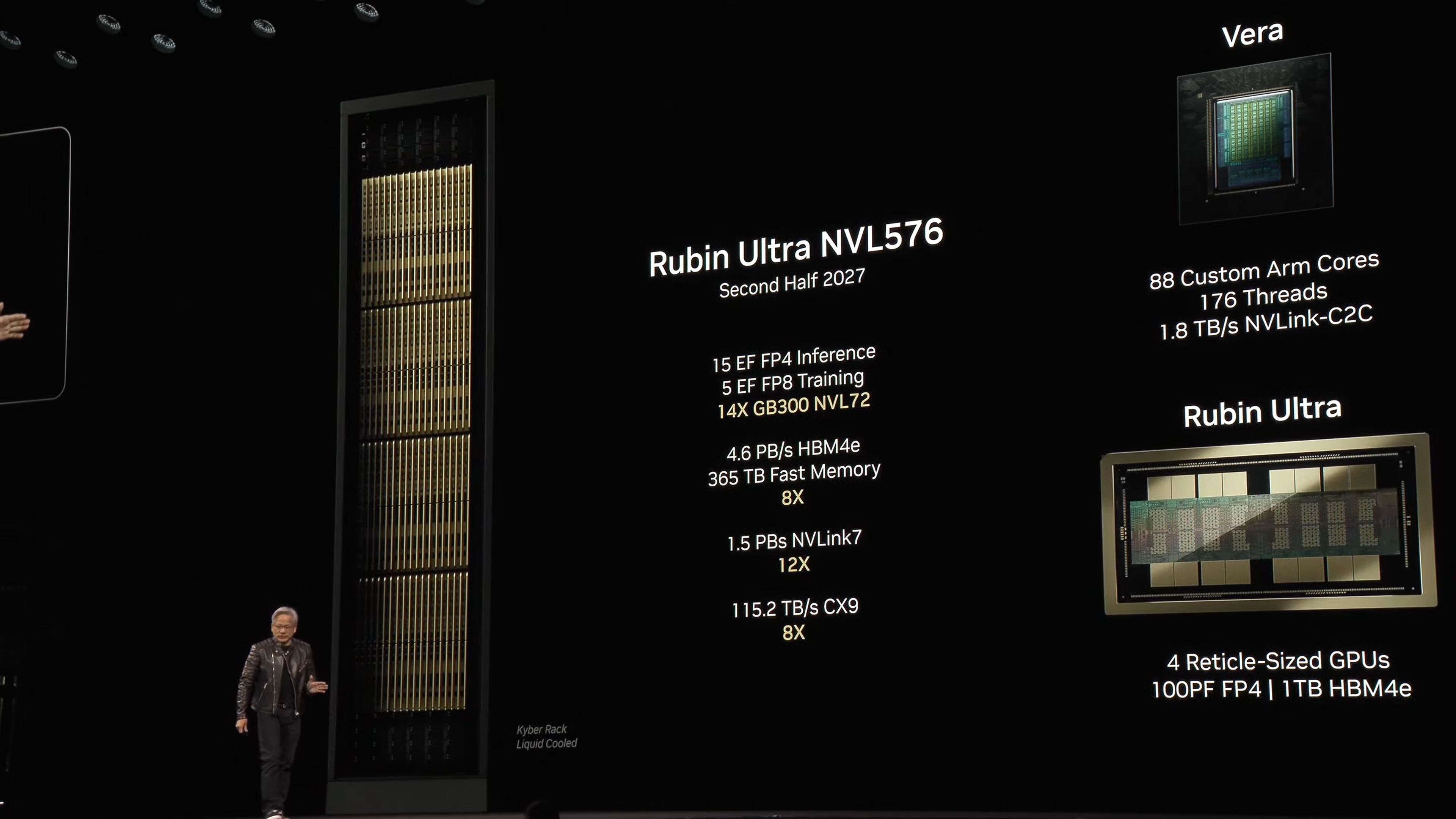

Rubin Ultra will land in the second half of 2027, and while the Vera CPU will remain, the GPU side of things will get another massive boost. The full rack will be replaced by a new layout, NVL576. Yes, that's up to 576 GPUs in a rack, each with an unspecified power consumption.

The inference compute with FP4 will rocket up to 15 ExaFLOPS, with 5 ExaFLOPS of FP8 training compute. It's about 4X the compute of the Rubin NVL144, which makes sense considering it's also four times as many GPUs. The GPUs will feature four GPU dies per package this time, in order to boost the compute density.

Where the NVL144 Rubin solution has 75TB total of "fast memory" (for both CPUs and GPUs) per rack, Rubin Ultra NVL576 will offer 365TB of memory. The GPUs will get HBM4e, but here things are a bit curious: Nvidia lists 4.6 PB/s of HBM4e bandwidth, but with 576 GPUs that works out to 8 TB/s per GPU. That's seemingly less bandwidth per GPU than before.

Perhaps it's a factor of how the four GPU dies are linked together? There will also be 1TB of HBM4e per four reticle-sized GPUs, with 100 PetaFLOPS of FP4 compute.
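The per-GPU bandwidth figure above is simple division, and it's worth seeing how much the answer depends on what counts as a "GPU." The sketch below splits the 4.6 PB/s rack total two ways: across all 576 dies, and across the 144 four-die packages. The variable and function names are ours, and treating the bandwidth as evenly divided is an assumption.

```python
TB_PER_PB = 1000  # decimal prefixes, as bandwidth figures are normally quoted

rack_bandwidth_pbs = 4.6  # HBM4e bandwidth Nvidia lists for Rubin Ultra NVL576
gpu_dies = 576            # the "576" in NVL576
dies_per_package = 4      # four GPU dies per package, per the article


def split_bandwidth_tbs(total_pbs, units):
    """Evenly divide a rack-level bandwidth (PB/s) across `units`, in TB/s."""
    return total_pbs * TB_PER_PB / units


per_die = split_bandwidth_tbs(rack_bandwidth_pbs, gpu_dies)
packages = gpu_dies // dies_per_package
per_package = split_bandwidth_tbs(rack_bandwidth_pbs, packages)

print(f"per die:     {per_die:.1f} TB/s")
print(f"per package: {per_package:.1f} TB/s across {packages} packages")
```

If the 13 TB/s quoted for Rubin turns out to be a per-package number rather than a per-die one, the per-package result is the fairer comparison. That's an assumption on our part, as the figures quoted here don't say which is meant.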

The NVLink7 interface will be 6X faster than on Rubin, with 1.5 PB/s of throughput. The CX9 interlinks will also see a 4X improvement to 115.2 TB/s between racks — possibly by quadrupling the number of links.
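To see how the quoted multipliers line up with the raw numbers, here's a small sketch that divides each Rubin Ultra NVL576 figure by its Rubin NVL144 counterpart. All values come from this article; the table layout and names are our own.

```python
# (metric, unit, Rubin NVL144, Rubin Ultra NVL576), all taken from the article.
specs = [
    ("FP4 inference", "EFLOPS", 3.6, 15.0),
    ("FP8 training", "EFLOPS", 1.2, 5.0),
    ("Fast memory", "TB", 75.0, 365.0),
    ("NVLink", "TB/s", 260.0, 1500.0),  # 1.5 PB/s written as TB/s
    ("CX9", "TB/s", 28.8, 115.2),
]

# Generational multiplier for each metric: Rubin Ultra divided by Rubin.
multipliers = {name: ultra / rubin for name, _unit, rubin, ultra in specs}

for name, unit, rubin, ultra in specs:
    print(f"{name:<14} {rubin:>7} -> {ultra:>7} {unit:<7} {multipliers[name]:.2f}x")
```

By this math, CX9 is an exact 4X, NVLink lands nearer 5.8X than a full 6X, and fast memory grows a bit faster than the GPU count does.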

Obviously, there's plenty we don't yet know about Rubin and Rubin Ultra, but those details will get fleshed out in the future. Data centers need a lot more planning than consumer GPUs, so Nvidia shares roadmap details well in advance of the products being ready to ship. And it's not quite done...


After Rubin, Nvidia's next data center architecture will be named after theoretical physicist Richard Feynman. Presumably that means we'll get Richard CPUs with Feynman GPUs, if Nvidia keeps with the current pattern.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


usertests Reply

"There will also be 1TB of HBM4e per four reticle-sized GPUs, with 100 PetaFLOPS of FP4 compute."

Has anyone figured out where the increase from 288 GB to 1 TB (maybe 1152 GB?) is coming from?

Is it from 4x more stacks than the 288 GB GPUs (which are using two dies), more layers per stack with HBM4e, density increases, etc.?

Edit: Blackwell Ultra uses 8 stacks of 12-Hi HBM3e. Which is 36 GB per stack, 3 GB per layer.

I believe the new one is using 16 stacks. It's probably 16 stacks of 16-Hi HBM4e with 4 GB per layer (64 GB per stack), adding up to 1024 GB total.

SonoraTechnical Reply

meh who cares.. the good stuff won't be affordable, nor readily available... you're dead to me nVidia..

TheyStoppedit Reply

Each card will be 10% faster than it's same tier of the 50 series, (with a 10% power increase) besides the 6090 will be 30% faster than the 5090. The 6090 will be 675W (the extra 75 from the PCIe slot). They won't fix the current balancing (der8auers/buildzoids videos from last month). The 6070 will be advertised as faster than the 5090 with half the VRAM thanks to 8x frame gen. (8 fake frames instead of 4)

6090 - $2499

6080 - $1799

6070Ti - $1499

6070 - $1299

6060Ti - $999

6060 - $799

You read it here first

usertests Reply

SonoraTechnical said: meh who cares.. the good stuff won't be affordable, nor readily available... you're dead to me nVidia..

You're dead to Nvidia too. Both sides will change their tune if the bubble bursts.

TheyStoppedit said: Each card will be 10% faster than it's same tier of the 50 series, besides the 6090 will be 30% faster than the 5090. The 6070 will be advertised as faster than the 5090 with half the VRAM thanks to 8x frame gen. (8 fake frames instead of 4)

Junk prediction. The reason that Blackwell performance gains are so low is simple: It's on the exact same 5nm-class node as Lovelace. The successor moving to a 3nm or 2nm node will make it a lot better.

TheyStoppedit Reply

usertests said: Junk prediction. The reason that Blackwell performance gains are so low is simple: It's on the exact same 5nm-class node as Lovelace. The successor moving to a 3nm or 2nm node will make it a lot better.

No, it's because there's no R&D going into performance uplifts anymore. Why? Because all the R&D has gone into data centers/enterprise/AI. This is where the cash cow is. Why would they put a GB202 into a 5090 and charge $2000 when they can put it into a datacenter GPU and charge $30,000?

From here on in, every gen will have 10% uplifts on each tier (besides the 90) and the rest of the performance will be subsidized by fake frames/DLSS. Part of this is because there is no competition from team red. NVidia used to be a gaming GPU company. We are in the midst of a massive AI boom. Do you think NVidia cares about me or you and your stupid frames?

Nope, from now on, all R&D is datacenter/AI/enterprise/fake frames/DLSS. Datacenter/AI/enterprise will get what they want because they pay the most (unless you want to pay $30,000 for a 6090). Gamers, you get whatever chips can't pass QC for Datacenter/AI/enterprise and more fake frames/DLSS and a 20% price hike.

The shareholders will get what they want and your frames will look like garbage to make it happen. This is a business, and there is only one thing that matters: MONEY.

usertests Reply

TheyStoppedit said: From here on in, every gen will have 10% uplifts on each tier (besides the 90) and the rest of the performance will be subsidized by fake frames/DLSS.

This is incorrect for the reason I stated.

They don't care about consumer GPUs right now, but they can pivot back to it whenever they feel the need to. Such as if a bubble bursts.

Giroro Reply

So Nvidia expects tech companies to just buy a new slightly-better multi-billion dollar AI super computer every year? Nobody's found a way to even get close to paying for their current ones yet.

Where is that kind of money supposed to keep coming from?

I mean, in the short term they're trying to get it from global-scale enshittification. But even if every app somehow maintained their current level of engagement while converting every user to a paid subscriber, and filling the screen with a ratio of 100% ads and 0% content/functionality, revenue would still be at least an order of magnitude away from breaking even. It's not a sustainable business model.

It's pointless for Nvidia to try to annualize these products. The next upgrade cycle for these machines will be in at least 5 years, probably more like 10 or 20. Every company already has their machines either built or on order so it's all one-and-done for Nvidia until one of these companies completes their supervillain plan of breaking the stock market, or cracking crypto, or whatever it is they're actually trying to accomplish.

spongiemaster Reply

TheyStoppedit said: No, it's because there's no R&D going into performance uplifts anymore. Why? Because all the R&D has gone into data centers/enterprise/AI. This is where the cash cow is. Why would they put a GB202 into a 5090 and charge $2000 when they can put it into a datacenter GPU and charge $30,000?

Did you bother to read the article? The roadmap is for data center AI accelerators, and has nothing to do with gaming GPU's.

spongiemaster Reply

Giroro said: So Nvidia expects tech companies to just buy a new slightly-better multi-billion dollar AI super computer every year? Nobody's found a way to even get close to paying for their current ones yet.

No, everyone isn't on the same upgrade cycle. Some are still trying to get into the market and the hyper scalers are constantly buying whatever is the latest and greatest to add to what they already have, not simply to upgrade what they have.

spongiemaster Reply

SonoraTechnical said: meh who cares.. the good stuff won't be affordable, nor readily available... you're dead to me nVidia..

Nvidia doesn't care about individual customers like you either who aren't in the market for thousands of their $40k AI accelerators. Nothing in this article you didn't have time to read before commenting, has anything to do with you.