
Modern GPUs aren't just about gaming. They're used for video encoding and professional applications, and increasingly for AI. We've revamped our professional and AI test suite to give a more detailed look at the various GPUs. We'll start with the AI benchmarks, as those tend to matter to a wider range of users.

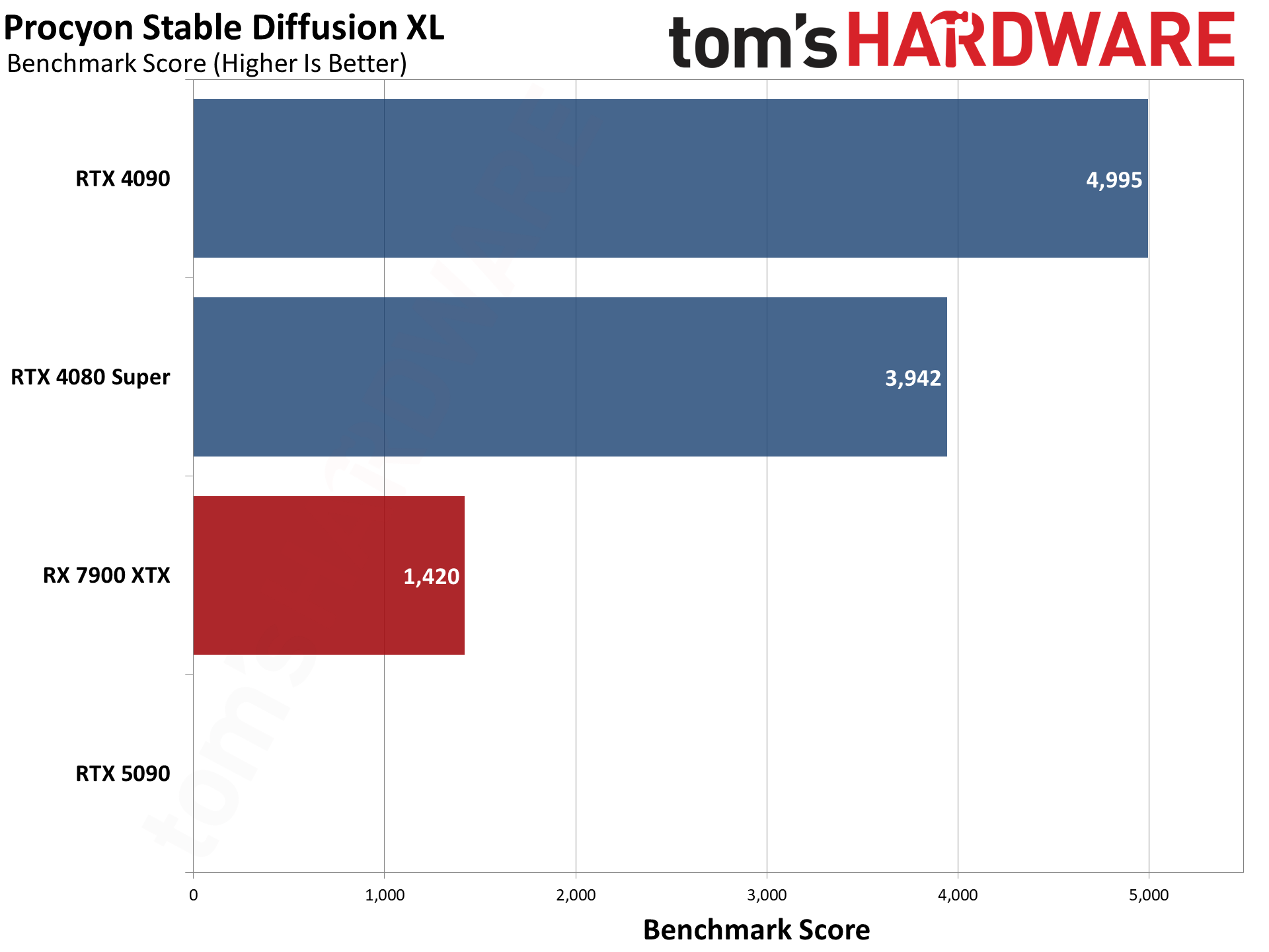

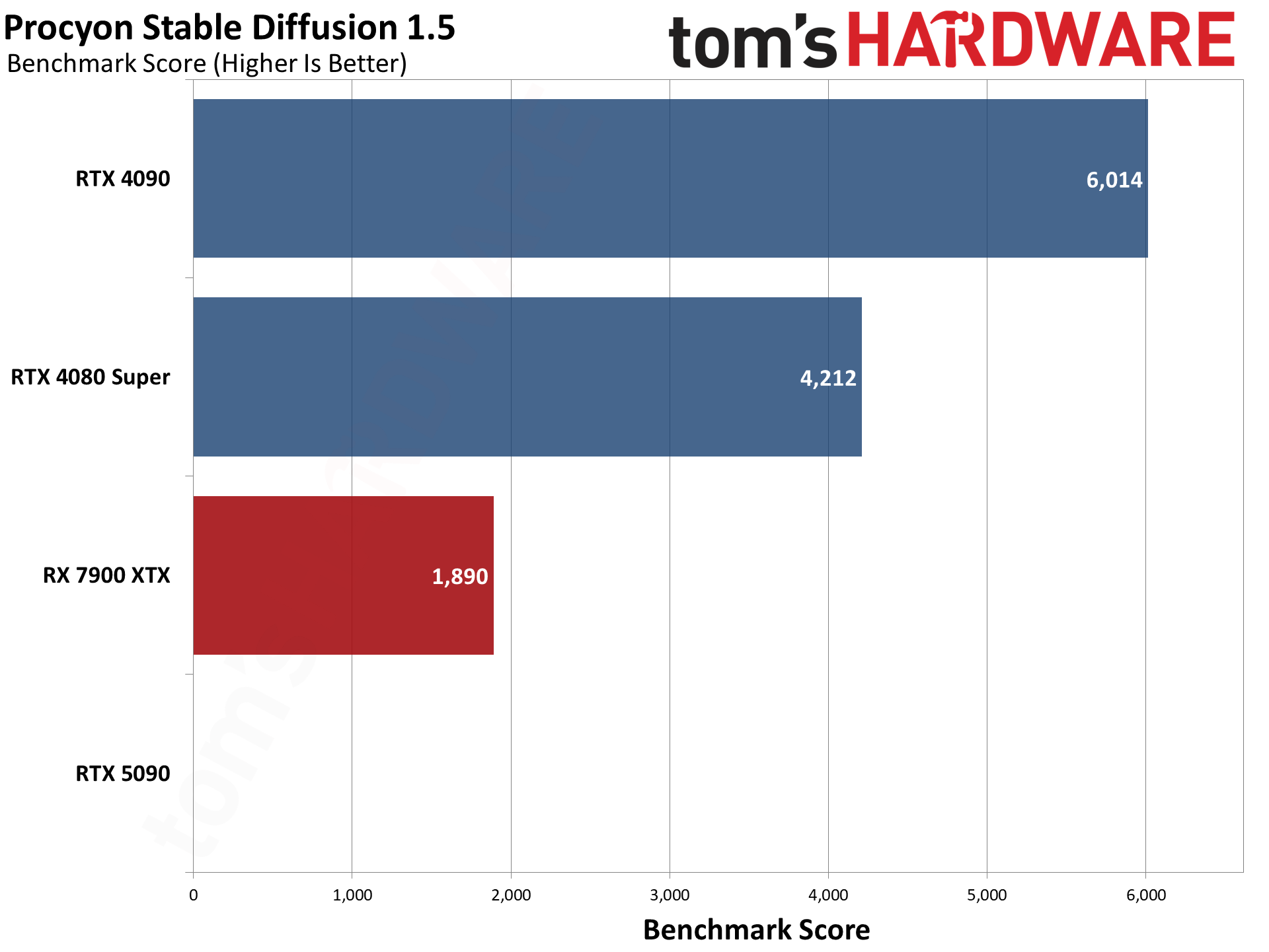

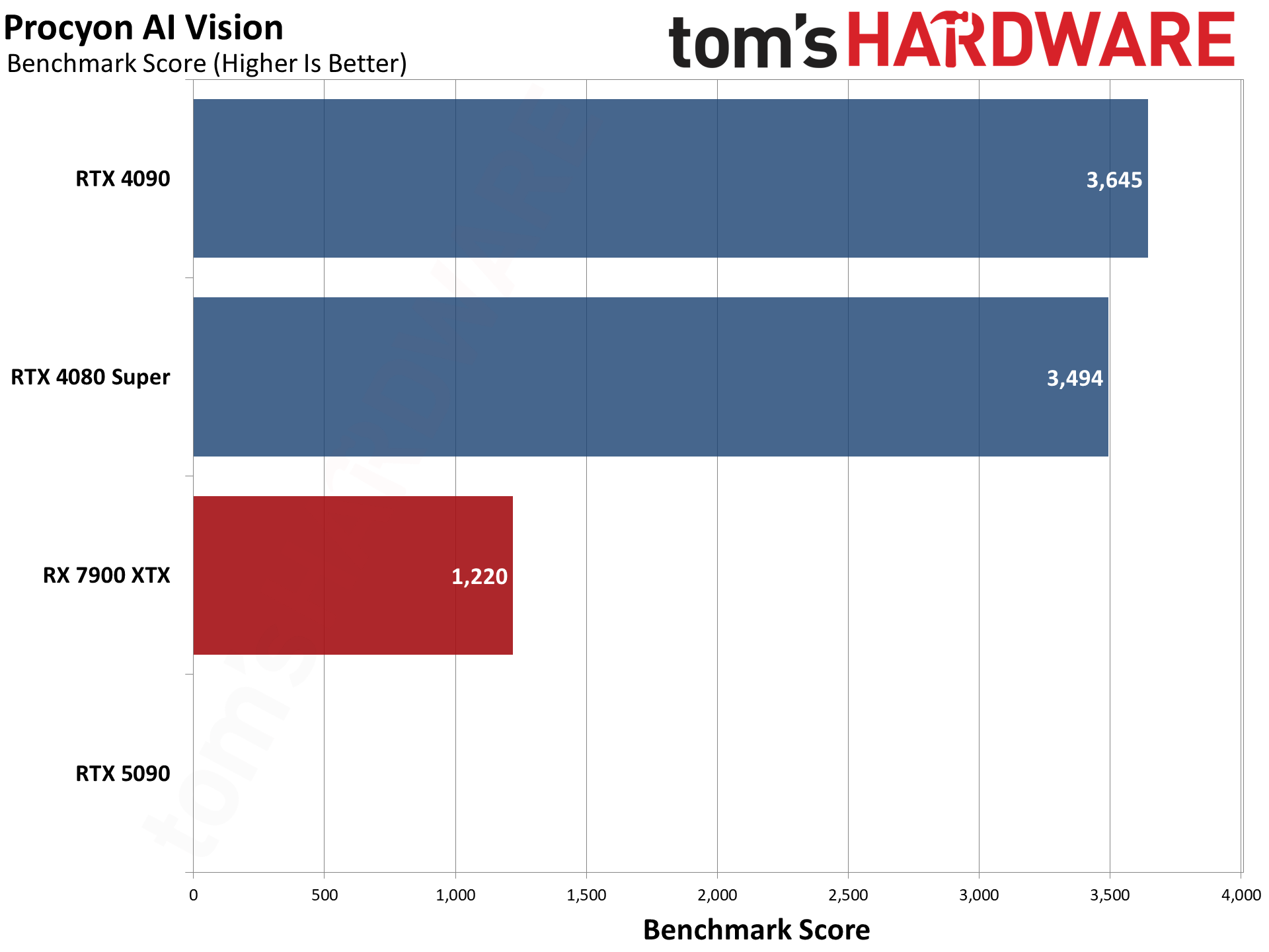

Note that we had trouble getting some of these tests to run on the RTX 5090. Procyon needs an update before the tests we normally run will work on it.

Procyon has several different AI tests, and for now we've run the AI Vision benchmark along with two different Stable Diffusion image generation tests. The tests come in several variants that UL considers roughly equivalent: OpenVINO (Intel), TensorRT (Nvidia), and DirectML (for everything, but mostly AMD). There are also options for FP32, FP16, and INT8 data types, which can give different results. We tested the available options and used the best result for each GPU.

Right now, the RTX 5090 fails to run any of these TensorRT workloads.
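As a rough illustration of the "test every option and keep the best" approach, the sketch below picks the highest score per GPU from a set of runs. The GPU names and scores are made-up placeholders rather than measured results, the helper `best_result` is hypothetical, and the code assumes a higher score is better. A run that fails to complete is recorded as `None` and skipped.

```python
from typing import Optional

# Hypothetical placeholder scores, NOT measured results.
# Keys are (backend, data type); None marks a run that failed to complete.
runs: dict[str, dict[tuple[str, str], Optional[float]]] = {
    "GPU A": {
        ("TensorRT", "FP16"): None,
        ("DirectML", "FP16"): 900.0,
        ("DirectML", "FP32"): 600.0,
    },
    "GPU B": {
        ("OpenVINO", "INT8"): 750.0,
        ("DirectML", "FP16"): 500.0,
    },
}


def best_result(results):
    """Return ((backend, dtype), score) for the highest completed score, or None."""
    completed = {key: score for key, score in results.items() if score is not None}
    if not completed:
        return None  # every variant failed on this GPU
    best_key = max(completed, key=completed.get)
    return best_key, completed[best_key]


best = {gpu: best_result(results) for gpu, results in runs.items()}
for gpu, pick in best.items():
    print(gpu, pick)
```

Keeping the winning backend and data type alongside the score makes it easy to see which path each GPU actually used.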

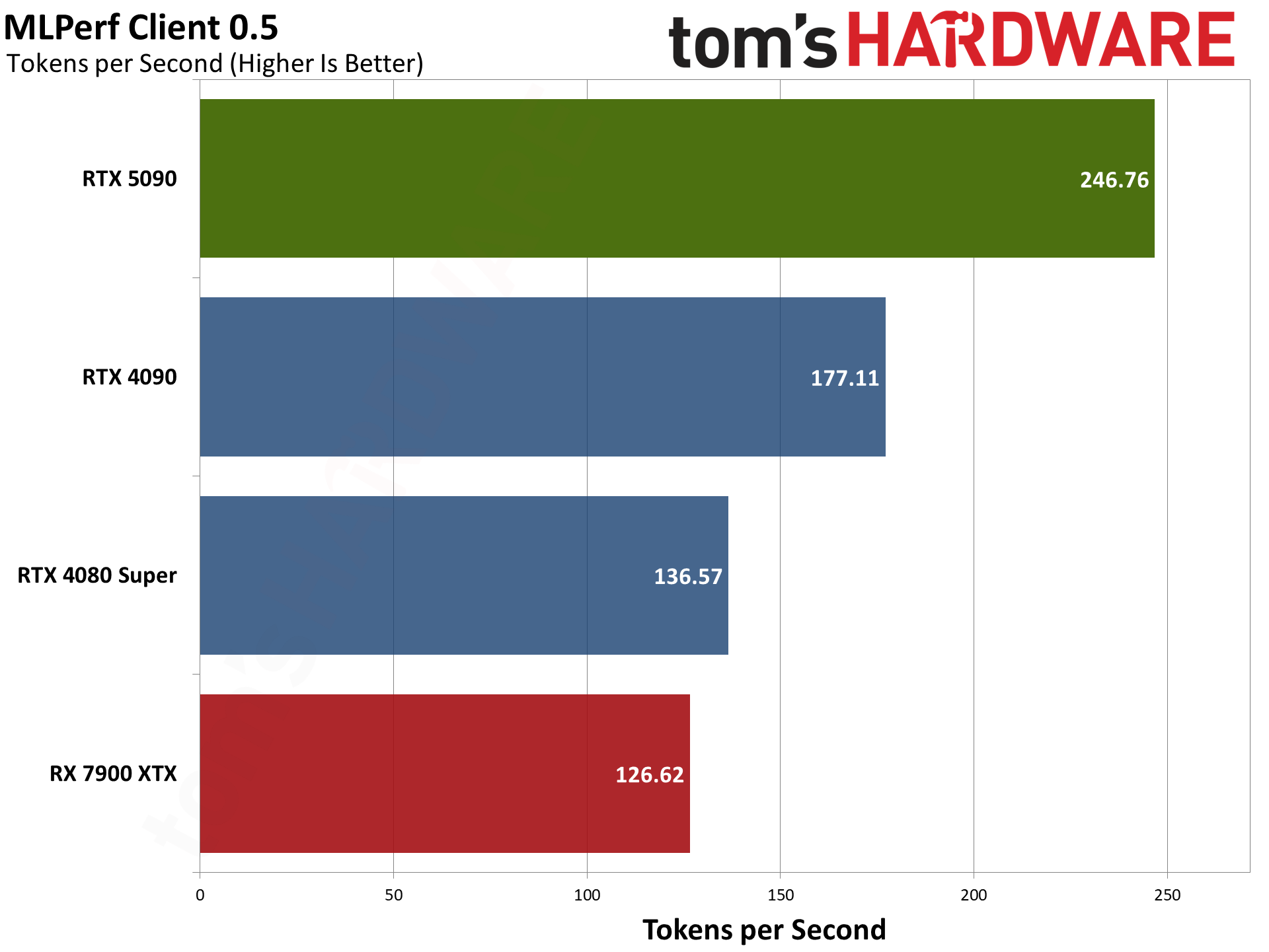

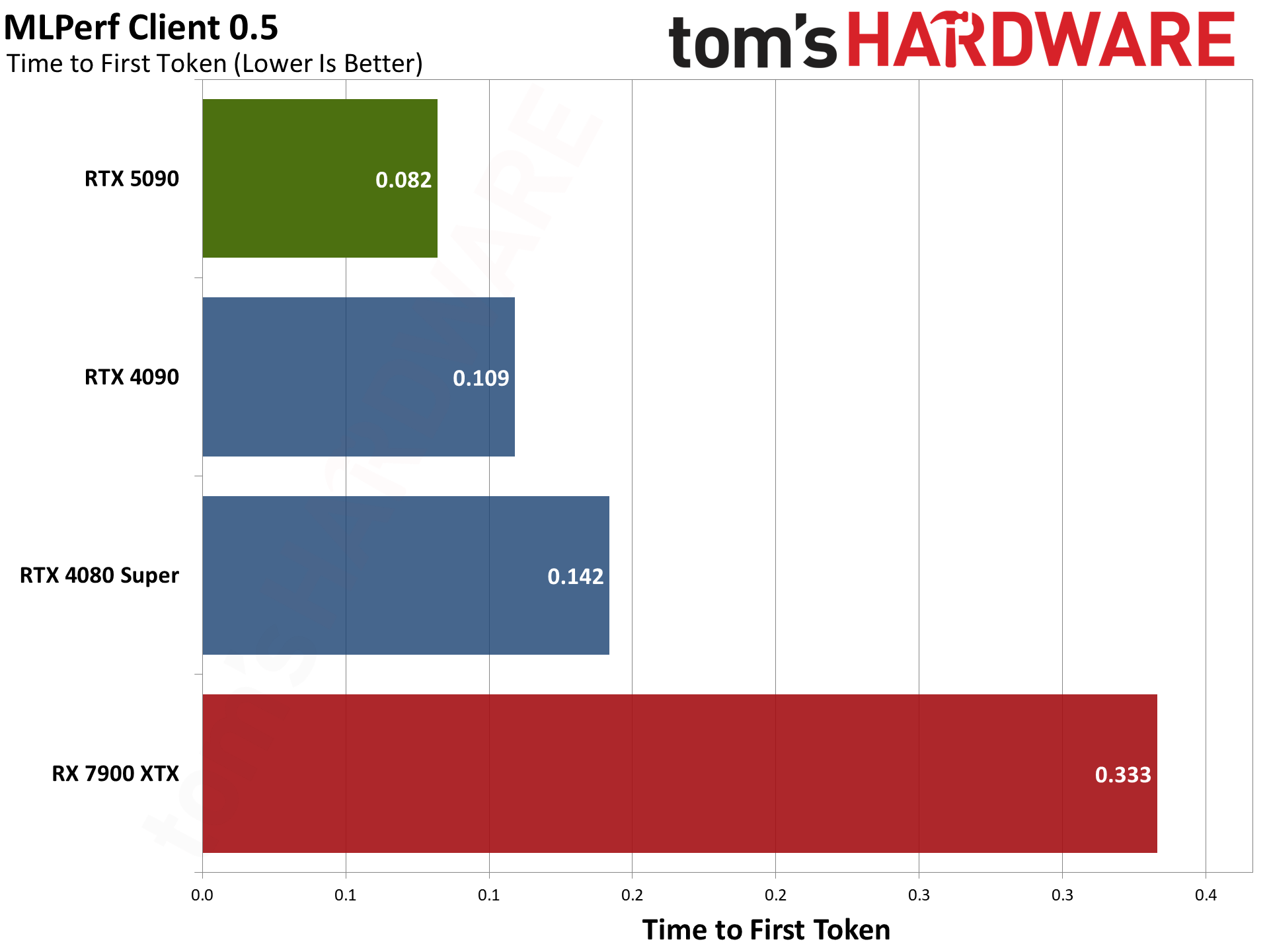

MLCommons' MLPerf Client 0.5 test suite does AI text generation in response to a variety of inputs. There are four different tests, all using the Llama 2 7B model, and the benchmark measures the time to first token (how fast a response starts appearing) and the tokens per second after the first token. These are combined using a geometric mean for the overall scores, which we report here.
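To make the two metrics concrete, here is a minimal sketch of how time to first token and the post-first-token generation rate are commonly derived from token arrival times. The function name `ttft_and_tps` and the timestamps are assumptions for illustration; the benchmark's own implementation may differ in its details.

```python
def ttft_and_tps(request_time: float, token_times: list[float]) -> tuple[float, float]:
    """Compute time to first token and tokens/s for the tokens after the first.

    request_time: when the prompt was submitted, in seconds.
    token_times: arrival time of each generated token, in seconds, ascending.
    """
    if len(token_times) < 2:
        raise ValueError("need at least two tokens to measure a generation rate")
    ttft = token_times[0] - request_time
    # The rate covers only the tokens that follow the first one.
    elapsed = token_times[-1] - token_times[0]
    if elapsed <= 0:
        raise ValueError("token timestamps must be increasing")
    tps = (len(token_times) - 1) / elapsed
    return ttft, tps


# Hypothetical timestamps, not benchmark data.
ttft, tps = ttft_and_tps(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
print(f"TTFT: {ttft:.2f} s, rate: {tps:.1f} tokens/s")
```

Separating the two numbers matters because a GPU can start responding quickly yet generate slowly, or the reverse.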

While AMD, Intel, and Nvidia are all MLCommons partners and were involved with creating and validating the benchmark, it doesn't seem to be quite as vendor-agnostic as we would like. AMD and Nvidia GPUs currently have only a DirectML execution path, while Intel has both DirectML and OpenVINO as options. Intel's Arc GPUs score quite a bit higher with OpenVINO than with DirectML.

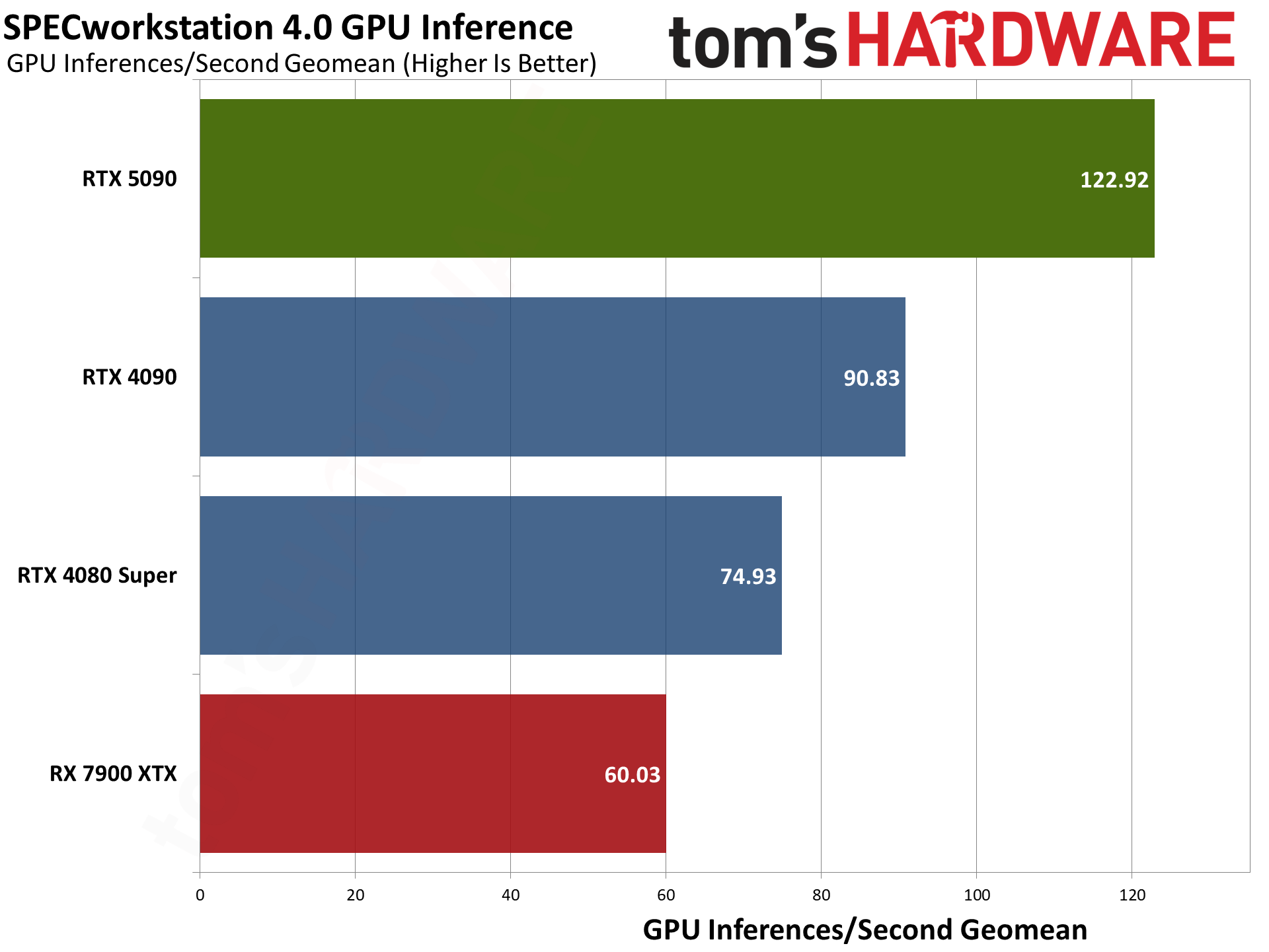

We'll have additional SPECworkstation 4.0 results below, but the suite also includes an AI inference test composed of ResNet50 and SuperResolution workloads that runs on GPUs (and potentially NPUs, though we haven't tested that). We calculate the geometric mean of the four results, which are given in inferences per second. That isn't an official SPEC score, but it's more useful for our purposes.
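For readers unfamiliar with the calculation, a geometric mean of four results can be sketched as below. The inferences-per-second values are hypothetical placeholders, not results from this review, and the helper is written in log space simply to avoid multiplying large numbers together.

```python
import math


def geometric_mean(values):
    """n-th root of the product of the values, computed in log space."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs at least one value, all positive")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical inferences-per-second figures for the four sub-results.
ips = [100.0, 400.0, 50.0, 200.0]
overall = geometric_mean(ips)
print(round(overall, 1))
```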

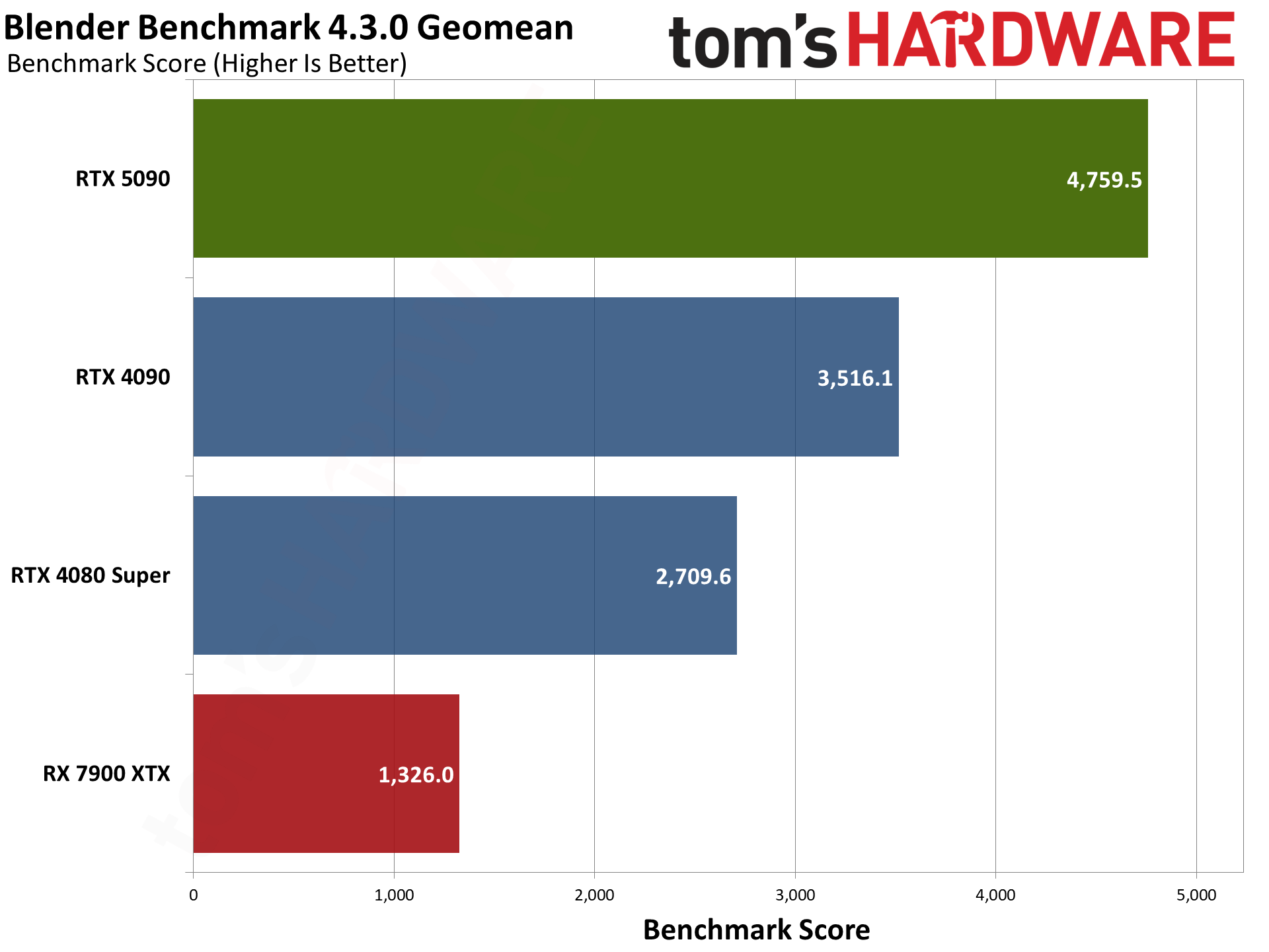

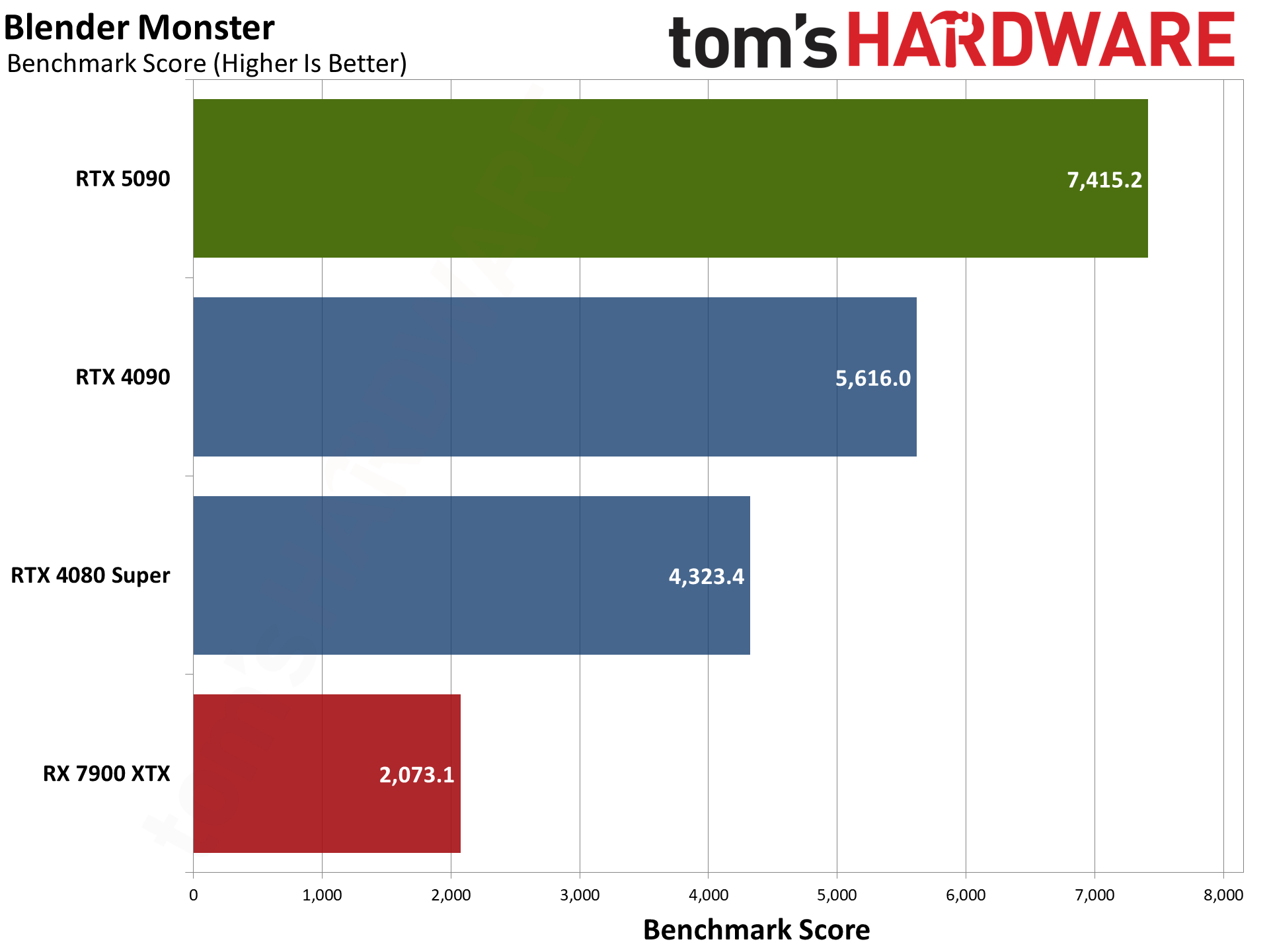

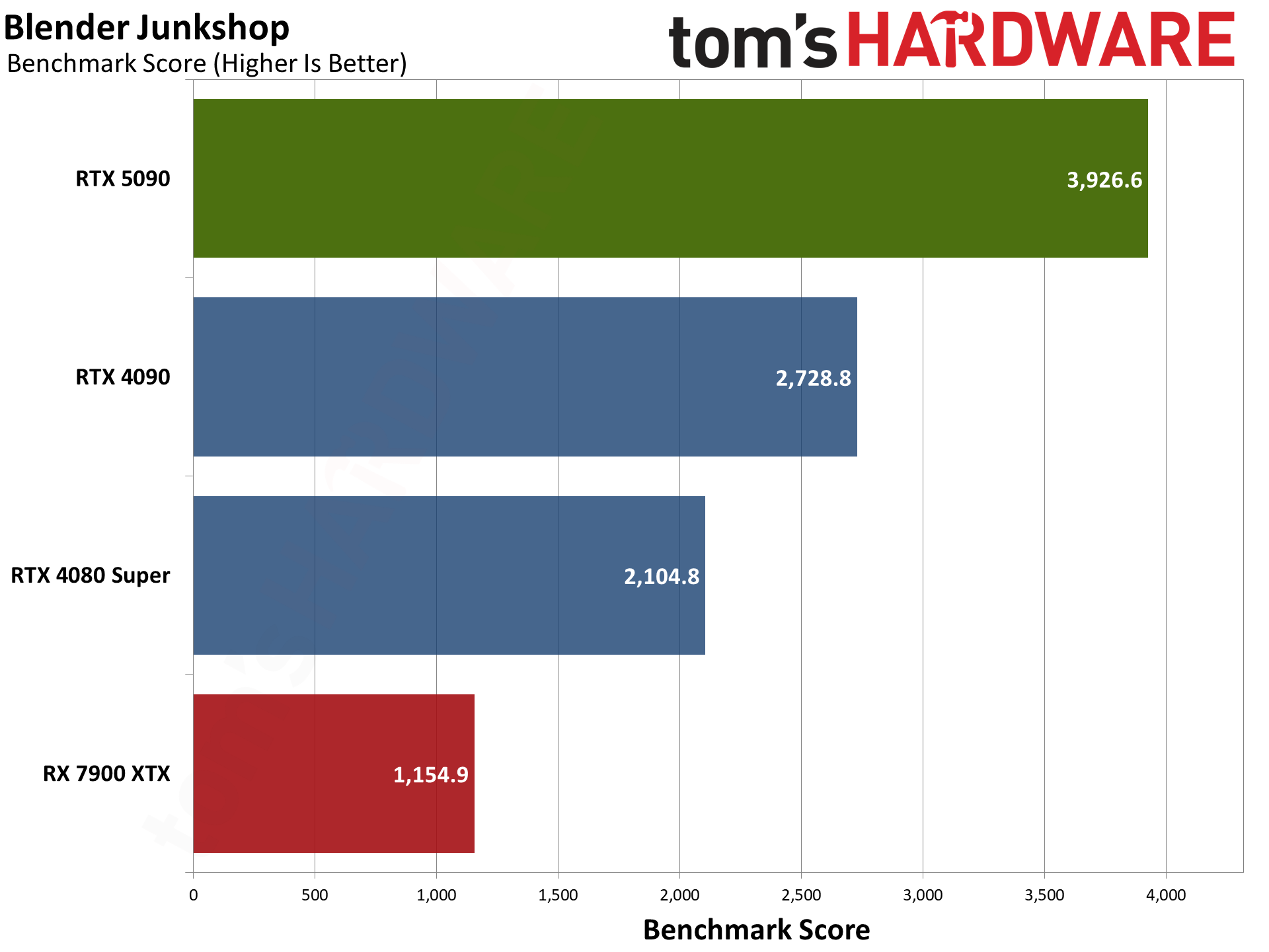

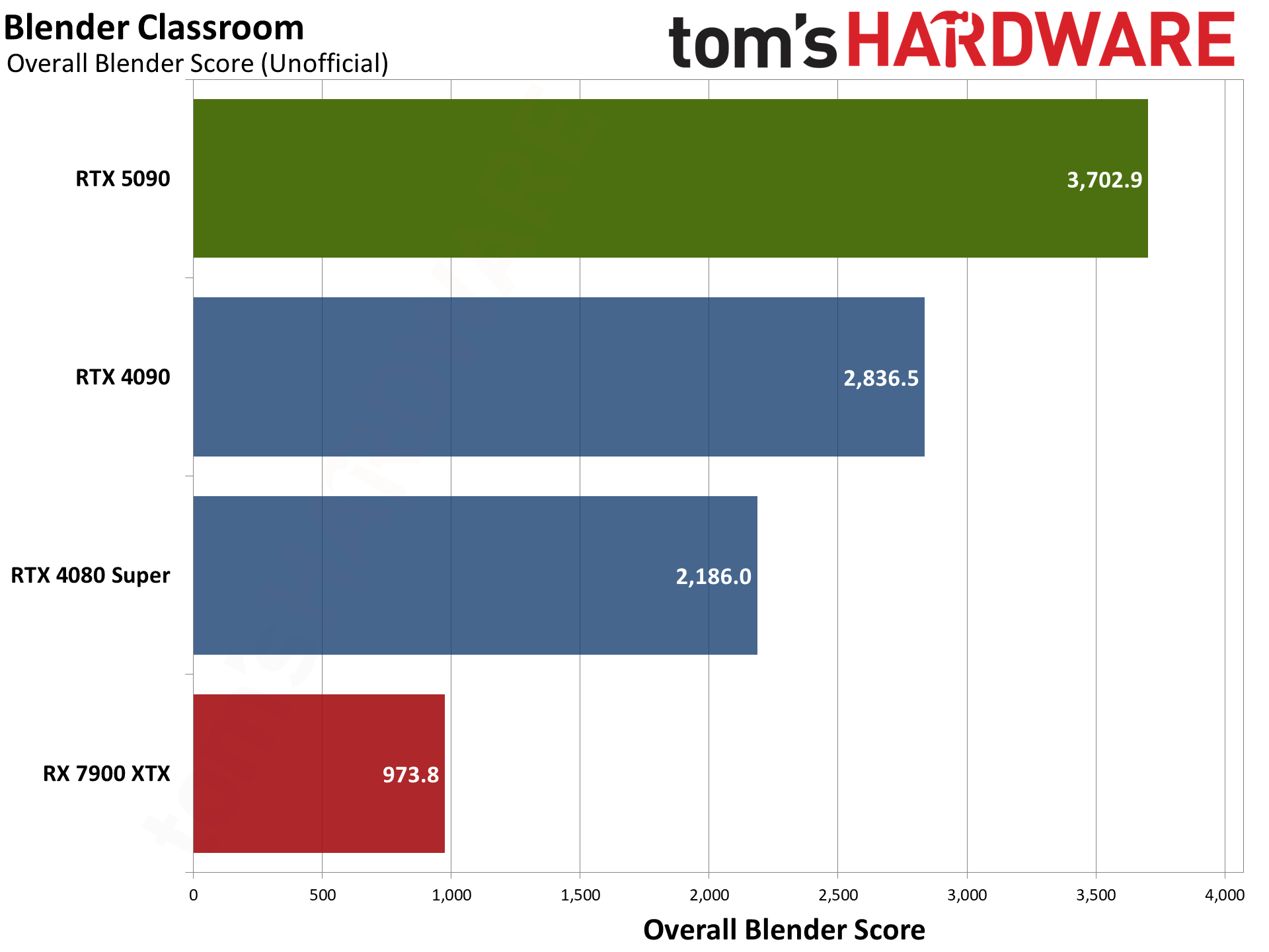

For our professional application tests, we'll start with Blender Benchmark 4.3.0, which has support for Nvidia OptiX, Intel oneAPI, and AMD HIP libraries. Those aren't necessarily equivalent in terms of the level of optimization, but each represents the fastest way to run Blender on a particular GPU at present.

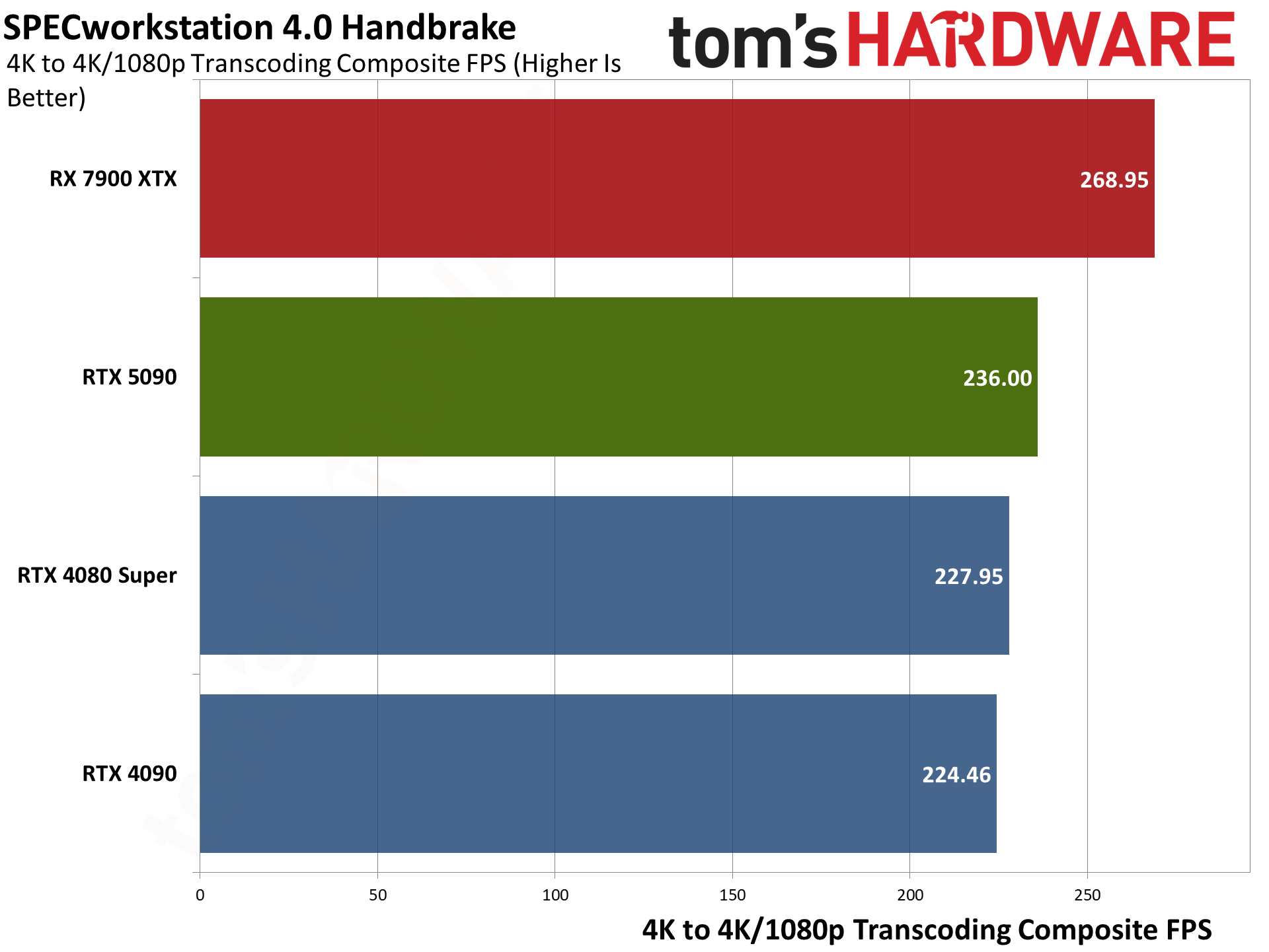

SPECworkstation 4.0 has two other test suites that are of interest for GPU performance. The first is the video transcoding test using HandBrake, a measure of the video engines on the different GPUs and something that can be useful for content creation work. We use the average of the 4K to 4K and 4K to 1080p scores. Note that this only evaluates encoding speed, not image fidelity.

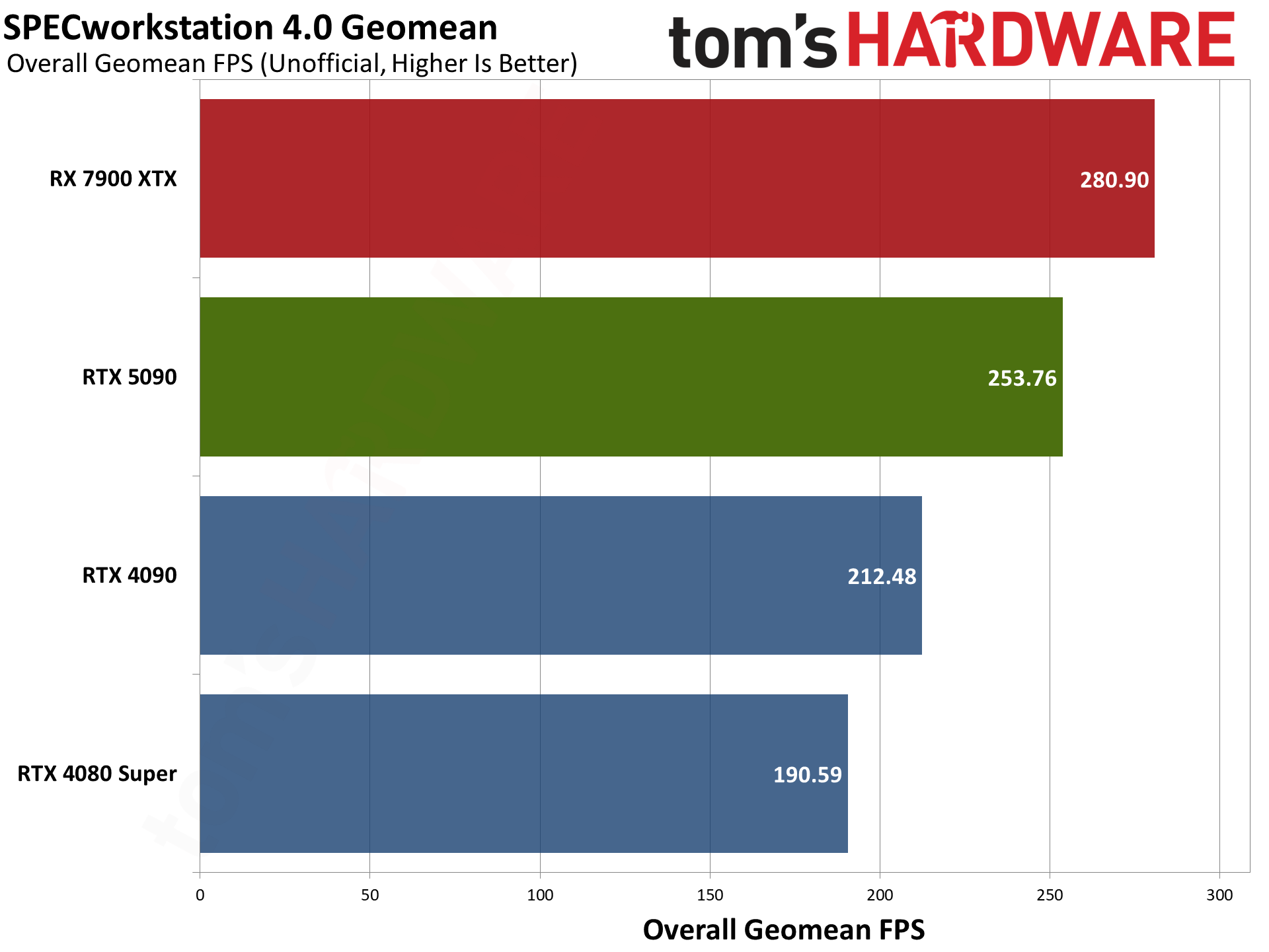

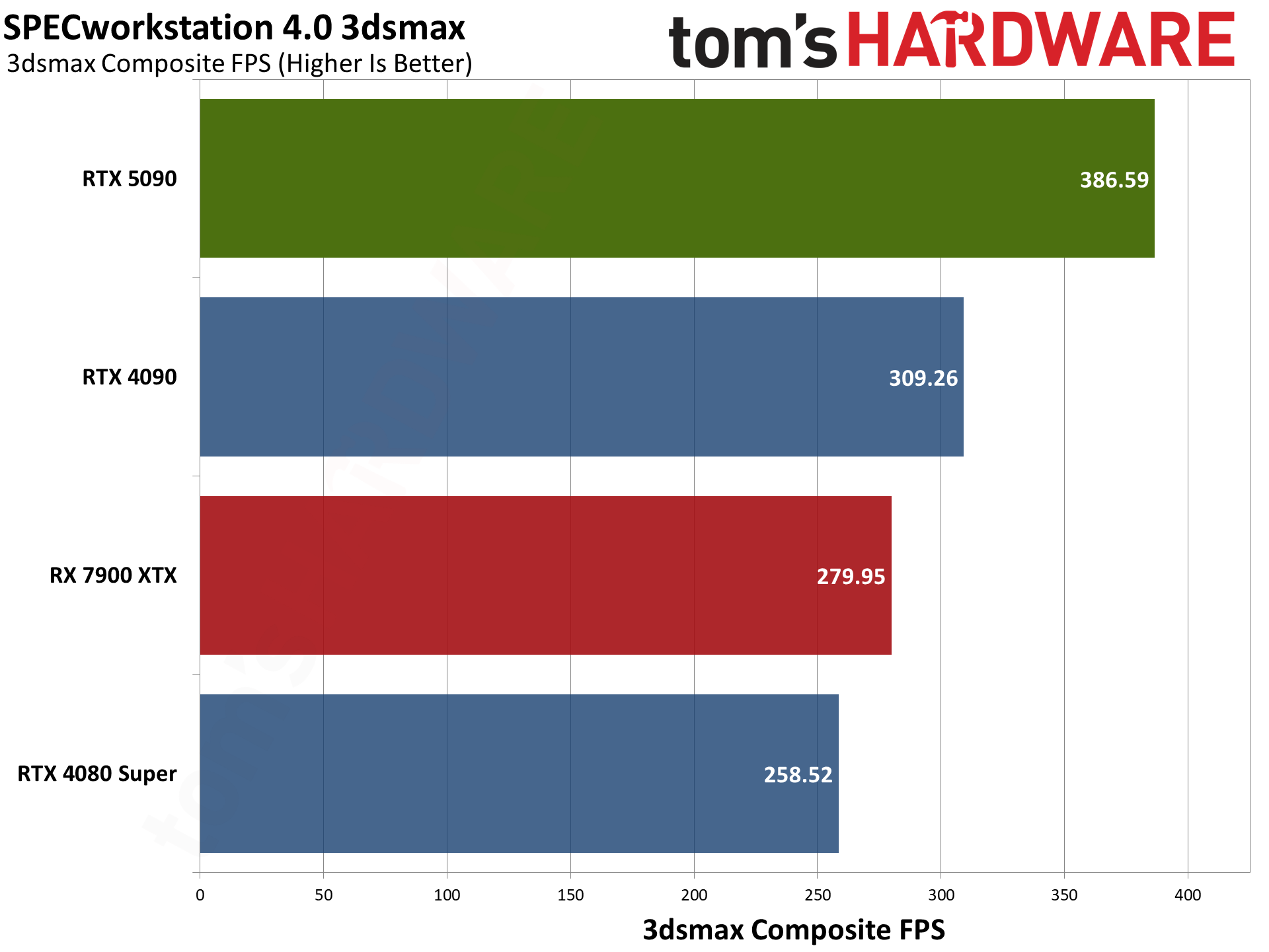

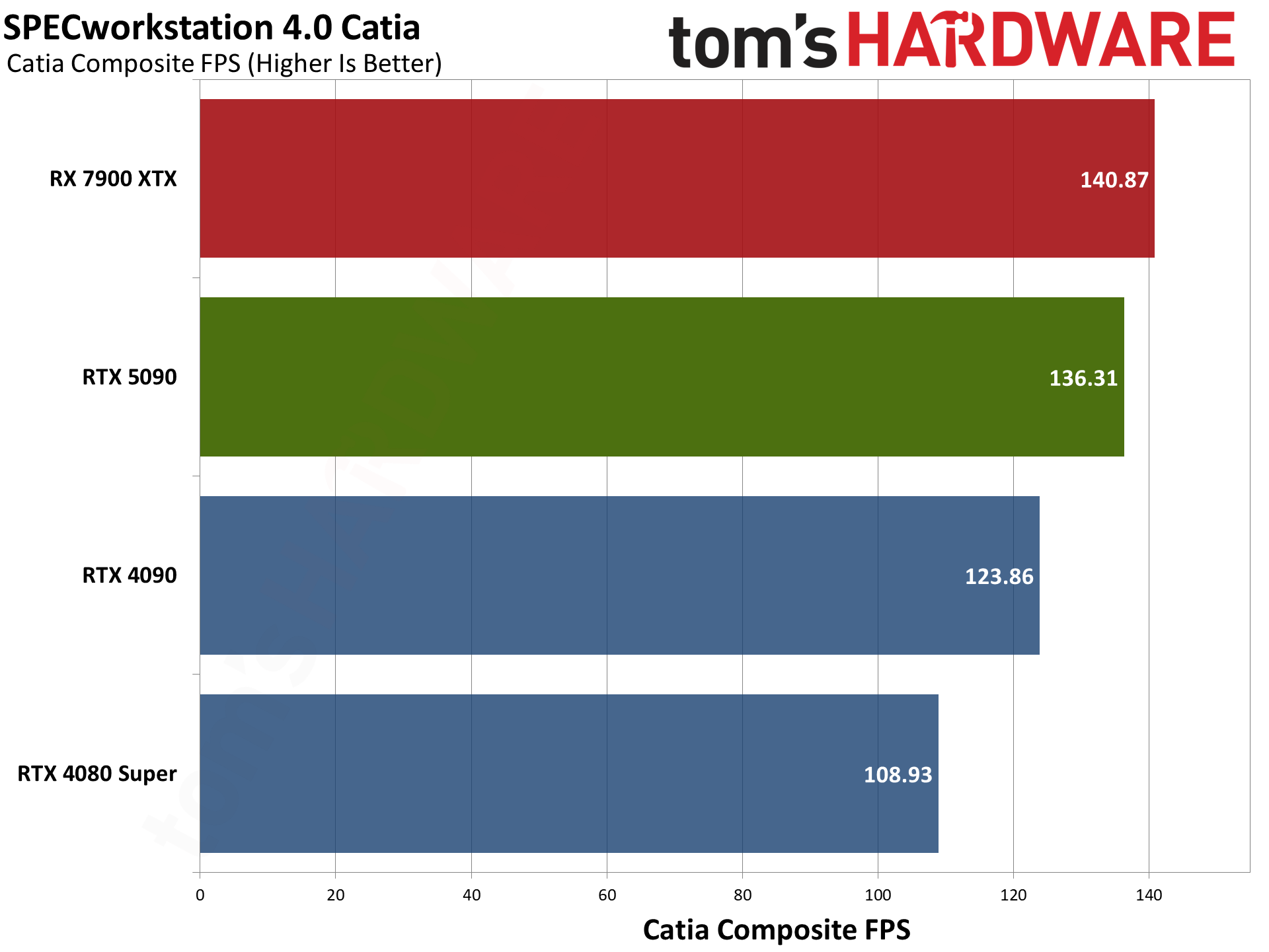

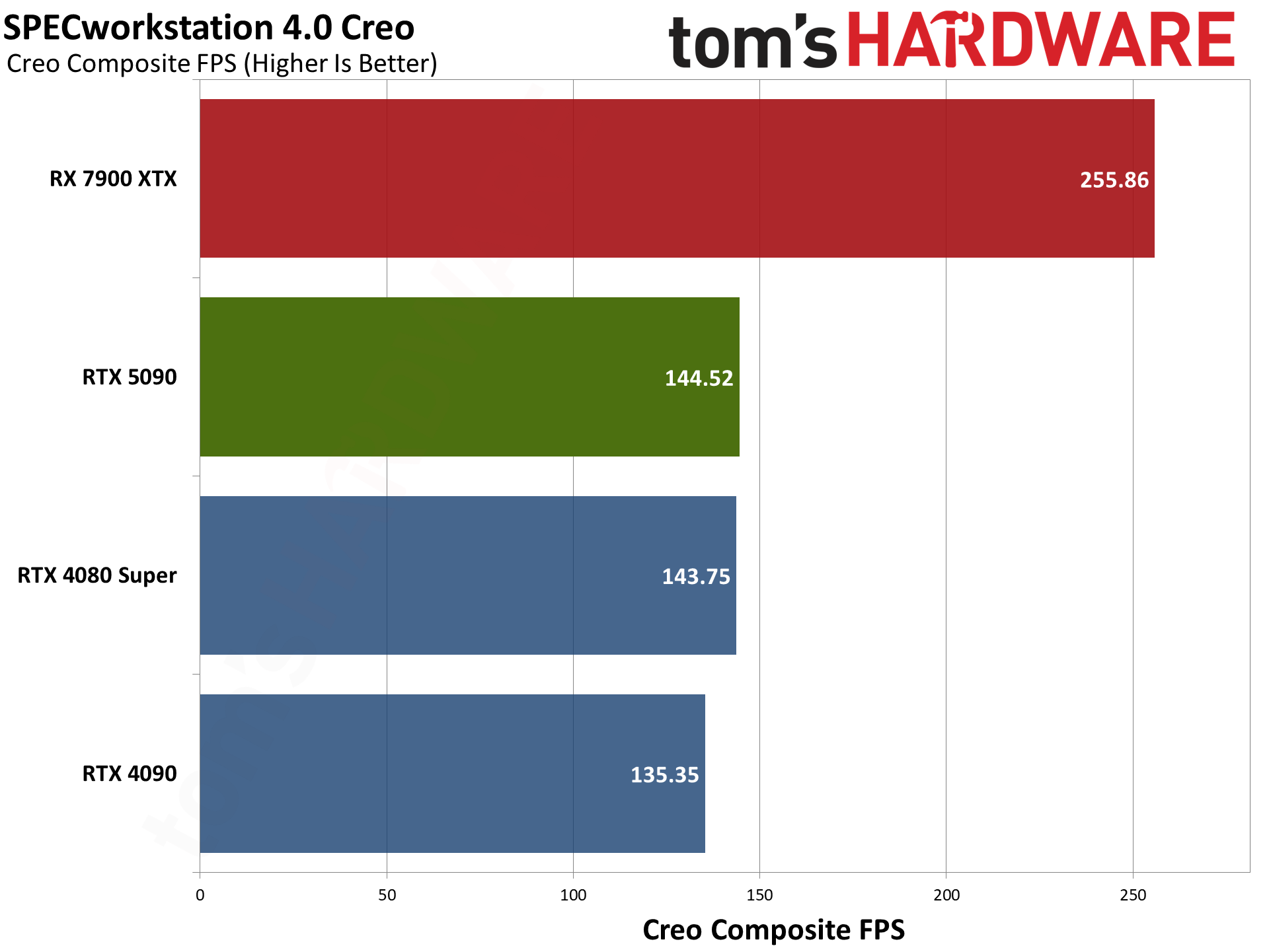

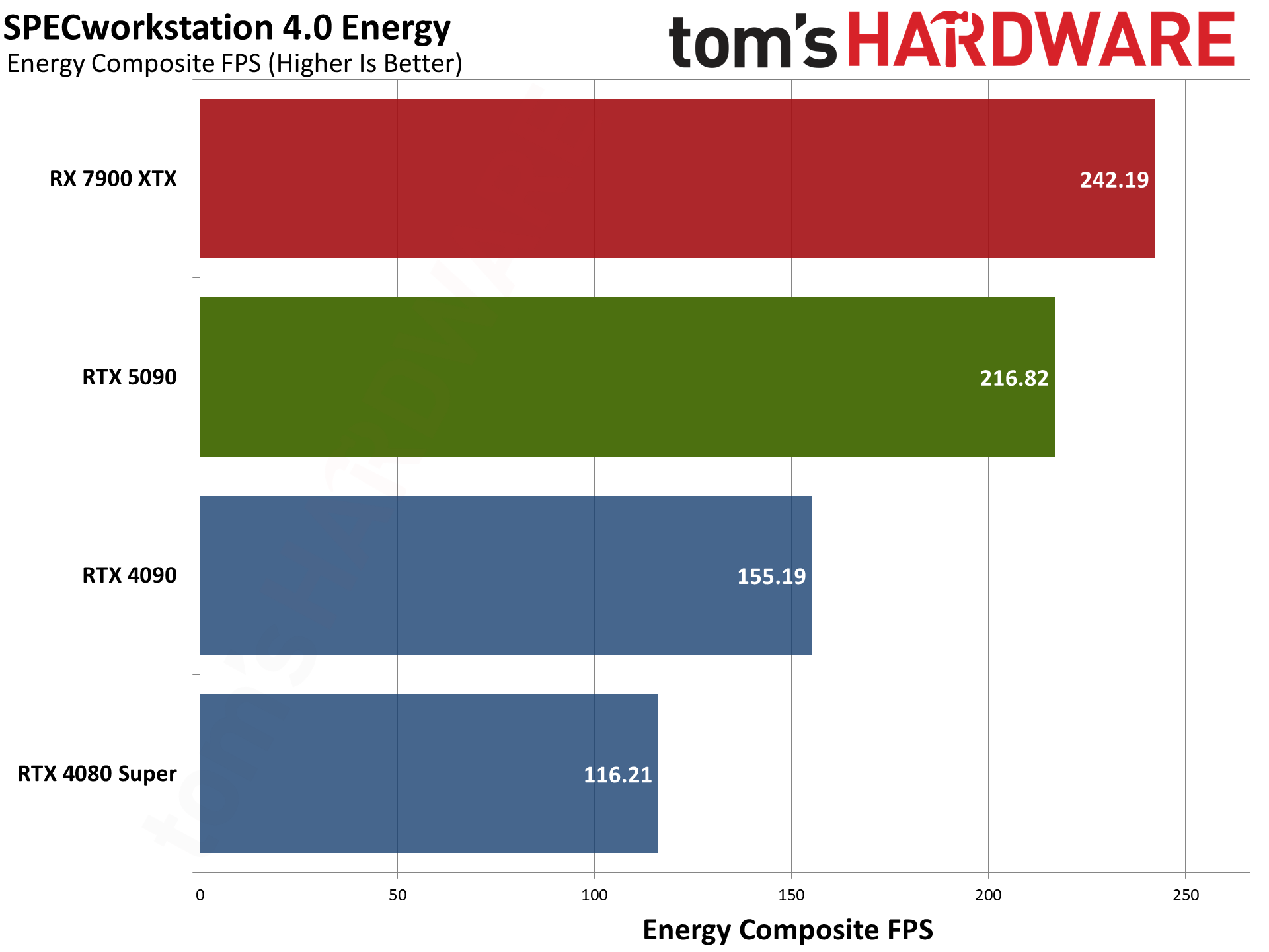

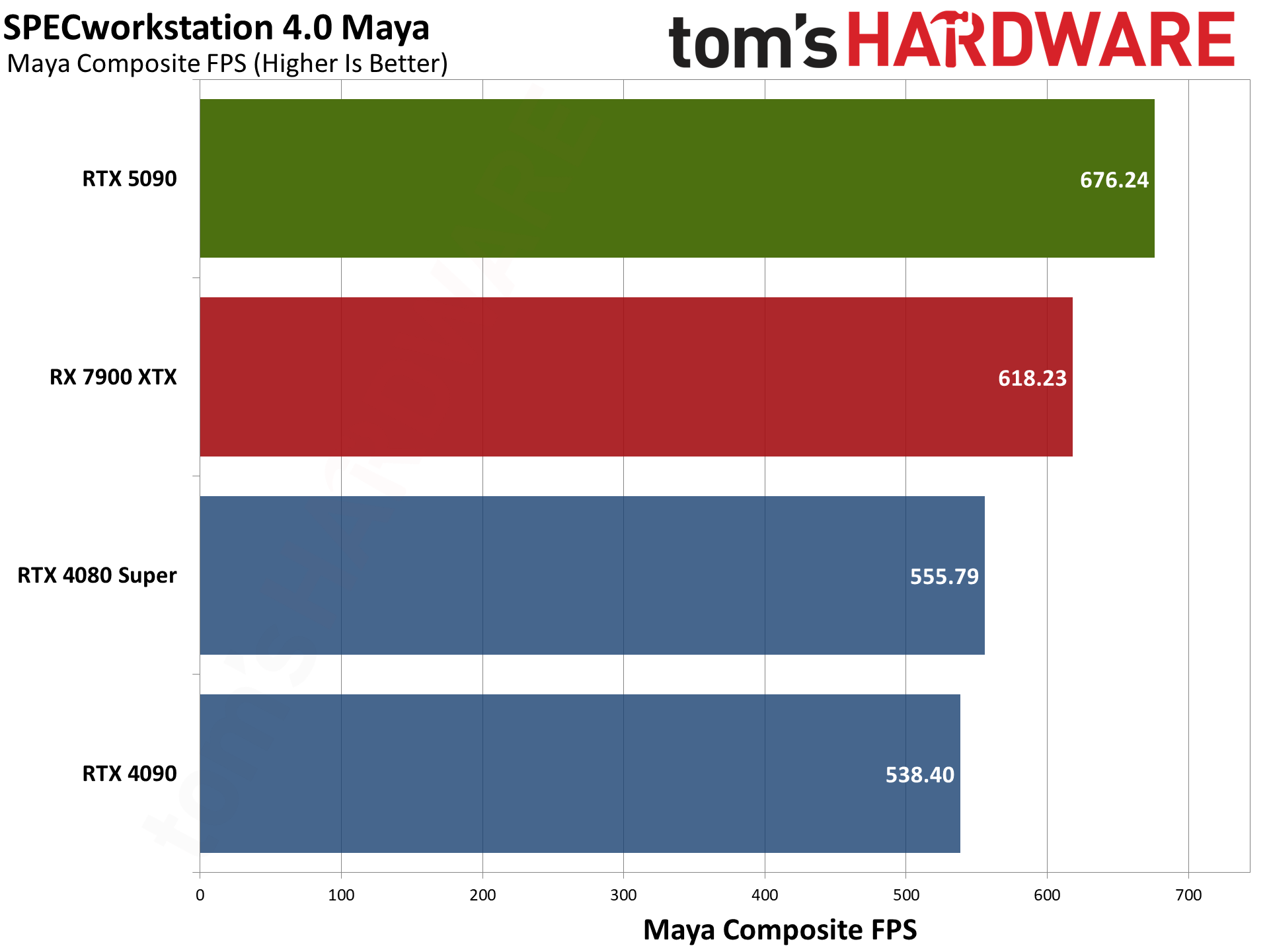

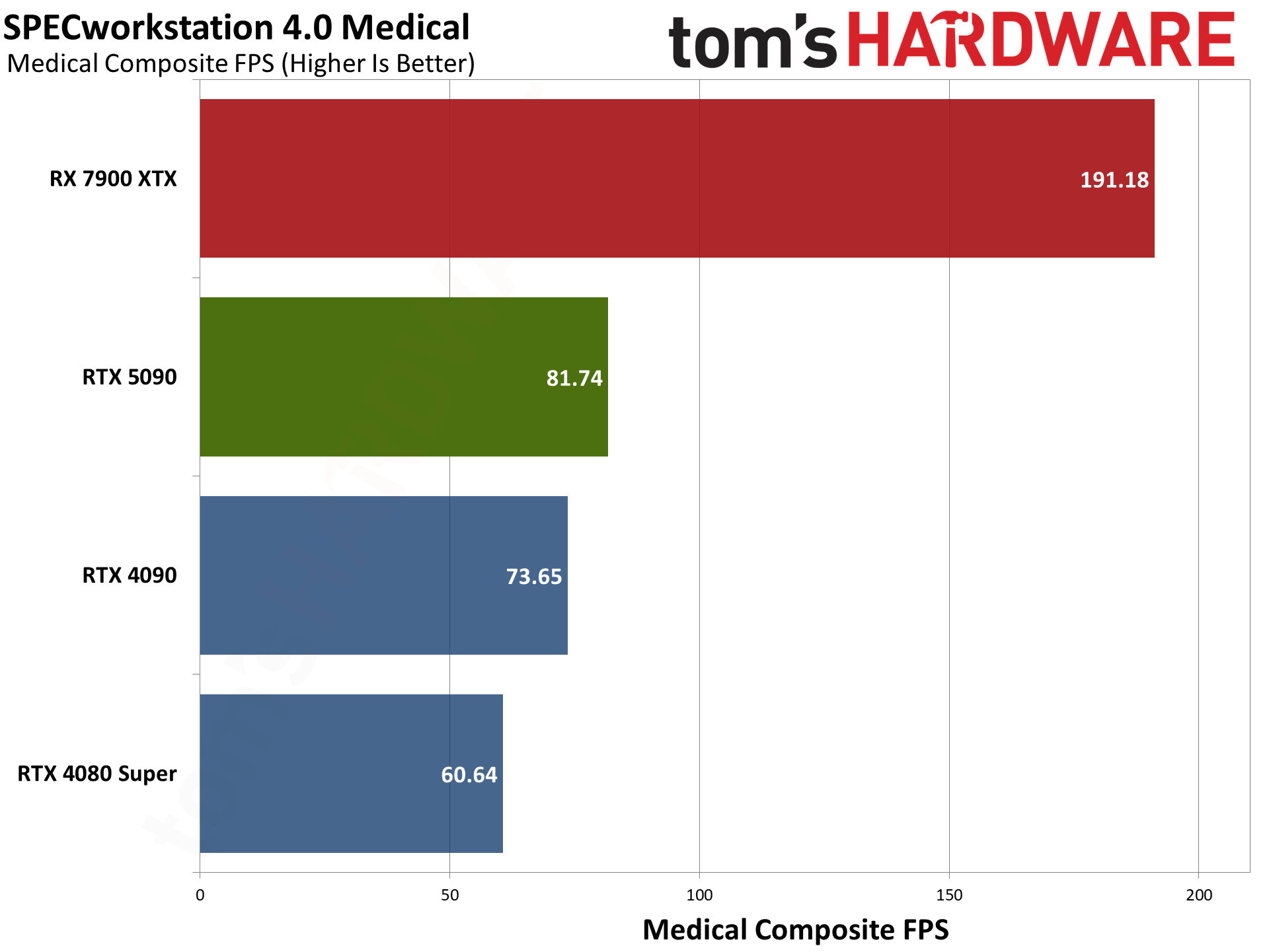

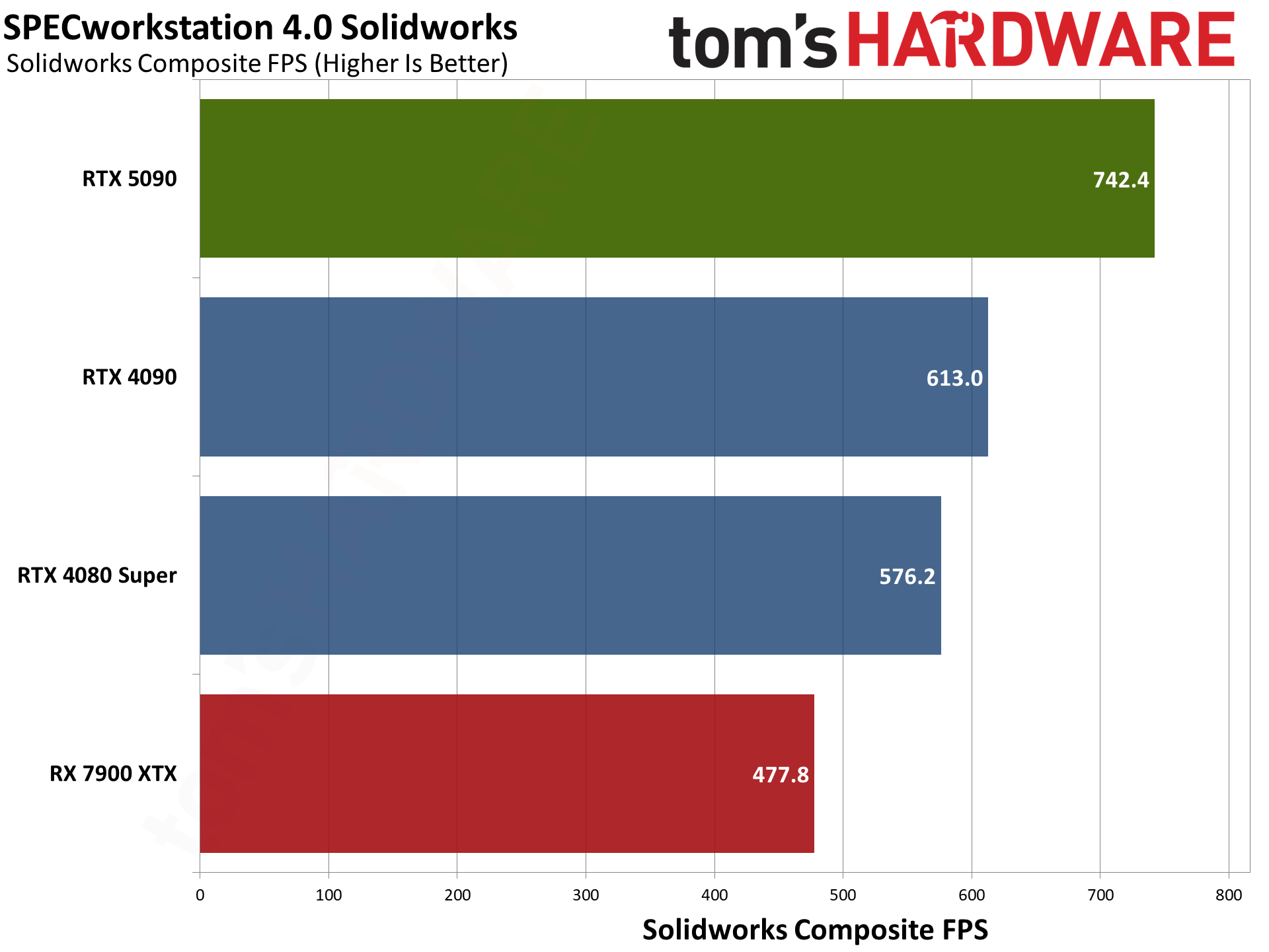

Our final professional app tests consist of SPECworkstation 4.0's viewport graphics suite. These are basically the same tests as SPECviewperf 2020, only updated to the latest versions. (Also, Siemens NX isn't part of the suite now.) There are seven individual application tests, and we've combined the scores from each into an unofficial overall score using a geometric mean.
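One common reason to combine scores with a geometric mean rather than a plain average is that it weights every test equally in relative terms, even when the tests report numbers on very different scales. The sketch below illustrates that property with seven hypothetical scores (placeholders, not results from this review): doubling any single test moves the geometric mean by the same factor, while the arithmetic mean is dominated by whichever test has the largest numbers.

```python
from statistics import geometric_mean, mean

# Hypothetical scores for seven viewport tests on very different scales.
baseline = [500.0, 120.0, 90.0, 60.0, 45.0, 30.0, 15.0]


def gain(scores, index, aggregate):
    """Relative change in the aggregate when one test's score doubles."""
    doubled = list(scores)
    doubled[index] *= 2
    return aggregate(doubled) / aggregate(scores)


for name, agg in (("arithmetic", mean), ("geometric", geometric_mean)):
    # Compare doubling the highest-scoring test with doubling the lowest.
    print(name, round(gain(baseline, 0, agg), 3), round(gain(baseline, 6, agg), 3))
```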




Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Crazyy8 Quick look, raster performance seems a bit underwhelming. Weird that the RTX 5090 can be slower than the 4090 (in niche cases). Wasn't going to buy the 5090 anyway, too expensive for a plebe like me. Looking forward to DLSS 4 and how amazing (or not) it'll be.

Gururu Amazing but expected as far as I am concerned. I don't think there is a lot of need to compare against anything including AMD's new cards. It was brilliant to pair with the 13900 with crazy interesting results. Will read a few more times to glean more details. (y)

Admin said: We also tested the RTX 5090 on our old 13900K test bed, with some at times interesting results. Some games still seem to run faster on Raptor Lake, though overall the 9800X3D delivers higher performance. The margins are of course quite small at 4K ultra.

For me, as a 13900K owner, that's a consolation :cool:

Gaidax Okay, that IS a sick cooler that actually manages to do the job.

I bet aftermarket 4 slot monstrosities will do better, but for 2 slots 600w that's insane.

m3city Products like this should receive 3 stars max. Great performance but at what cost? Is it the right direction that power draw increases at each iteration? Is it worth it to chase max perf each time? For me it would be perfect if the 5000 series stayed at the same TDP as previous ones, meaning better design, better GPU, with an understandably lower increase of perf compared to the 4000. And then, the 6000 series to have even reversed direction: higher perf with a drop in TDP.

And secondly, how come a 500W GPU can be air cooled, but nerds on forums will claim you absolutely NEED water cooling for a 125W Ryzen, cause "highend"? Yeah, I know 125W means more actual draw.

redgarl Okay Jarred... you are shilling at this point.

4.5 / 5 for a 2000$ GPU that barely get 27% more performances?

While consuming 100W more than a 4090?

And offering the same cost per frame value as a 4090 from 2 years ago?

Flagship or not, this is horrible.

Not to mention the worst uplift from an Nvidia GPU ever achieved... 27%...

https://i.ibb.co/4fks6Gt/reality.jpg

redgarl Did you bench on an open bench or in a PC case? I am asking because there are some major concerns about overheating because the CPU cooler is choked by the 575W of heat dissipation inside a closed PC case. If you have an HSF for your CPU, then you are screwed.

https://uploads.disquscdn.com/images/7a5bf4d586b20ffe0aa6281c57d419012a32cbdabd43b3e8050d2aa9a00d6cc1.png

oofdragon 20% better at 4K and 15% better at 2K, all that having 30% more cores and etc............... got it. Oh boy the 5080 and 5070 are sure going to disappoint a lot of people.

The good news is the RX 9070 will bring 4070 Ti Super performance to the table around $500 including the VRAM, ray tracing and dlss image quality. AMD will prolly counter the multi frame gen nonsense with something like the LSFG 3.0 is doing and smart buyers will finally have a good GPU to replace their 3080 or 6700 XT.

vanadiel007 They should have given it code name sasquatch, because that's the chance you will be seeing these sell for $2,000 in the coming months.

More like $3,000 and a lot of luck needed to find one in stock.

I pass on it.

YSCCC a real space heater inside the case, and extremely expensive with not great raw performance increase... sounds like built for those with more money than brain or logic..