Tom's Hardware Verdict

Nvidia's RTX 5090 marks the debut of the new Blackwell architecture, with new features, higher performance, more memory, and a lot more bandwidth. But the drivers could use a bit more time baking in Jensen's oven.

Pros

- Fastest GPU around (usually)
- 32GB of GDDR7 on a 512-bit bus
- PCIe 5.0 interface
- Potent AI performance

Cons

- Driver issues in some games and apps
- Extreme price and power
- Concerns with availability
- Multi Frame Generation marketing


The Nvidia GeForce RTX 5090 Founders Edition has arrived — or at least, the reviews have arrived. It's the fastest GPU we've ever tested, most of the time, and we expect things will continue to improve as drivers mature in the coming weeks. When it lands on retail shelves, the RTX 5090 will undoubtedly reign as one of the best graphics cards around for the next several years.

The card itself, as well as AIB (add-in board) partner cards using the RTX 5090 GPU, won't go on sale until January 30. Once it does, good luck acquiring one. It's an extreme GPU with a $1,999 price tag, though there will certainly be some well-funded gamers looking to upgrade. It also brings new AI-centric capabilities, including native FP4 support, which will very likely generate a lot of interest outside of the gaming realm. With 32GB of VRAM and 3.4 PetaFLOPS of FP4 compute, it should easily eclipse any other consumer-centric GPU in AI workloads.

We've written a lot of supplemental coverage about Nvidia's new Blackwell RTX 50-series GPUs. If you want a primer, or additional information, check out these articles:

• Blackwell architecture

• Neural rendering and DLSS 4

• RTX 50-series Founders Edition cards

• RTX AI PCs and generative AI for games

• Blackwell for professionals and creators

• Blackwell benchmarking 101

Review in Progress...

It's been an extremely busy month, so this review is currently a work in progress. Our current score is a tentative 4.5 out of 5, subject to adjustment over the next week or so as we fill in more blanks. Some tests that we wanted to run failed, and several of the games in our new test suite also showed anomalous behavior. We've also revamped our test suite and our test PC, wiping the slate clean and requiring new benchmarks for every graphics card in our GPU benchmarks hierarchy. While we have reviewed the Intel Arc B580 and Arc B570 and tested some comparable offerings, there are a lot of GPUs that we still need to retest. It takes a lot of time, even without any driver oddities, and we need to do some additional work.

The Nvidia Blackwell RTX 50-series GPUs also bring some new technologies, which require separate testing. Chief among these (for gamers) is the new DLSS 4 with Multi Frame Generation (MFG). That requires new benchmarking methods, and more importantly, we need to spend time with the various DLSS 4 enabled games to get a better idea of how they look and feel.

We already know from experience that DLSS 3 frame generation isn't a magic bullet that makes everything faster and better. It adds latency, and the experience also depends on the GPU, game, settings, and monitor you're using. MFG makes things even more confusing: DLSS 4 can generate one, two, or three AI frames for every rendered frame, depending on the setting you select, so it can potentially double the output frame rate compared to DLSS 3's single generated frame. For example, with three generated frames per rendered frame, an MFG output of 240 FPS means the game is only rendering, and sampling user input, at 60 FPS. So while MFG could make games look smoother, it might also feel laggy. We'll be testing some of the early DLSS 4 examples and updating our review in the coming days.
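The 240 FPS versus 60 FPS example is simple division, but it helps to see it across every frame generation setting. The Python sketch below is a back-of-the-envelope model, not Nvidia's specification: it assumes a fixed ratio of generated to rendered frames, and it ignores the extra latency that frame generation itself adds. The function names `rendered_fps` and `frame_interval_ms` are hypothetical.

```python
def rendered_fps(displayed_fps: float, generated_per_rendered: int) -> float:
    """Return how many frames per second the game engine actually renders.

    Simplified model (an assumption, not Nvidia's spec): every rendered
    frame is followed by `generated_per_rendered` AI-generated frames, so
    only 1 of every (1 + n) displayed frames reflects fresh game state
    and user input.
    """
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be >= 0")
    return displayed_fps / (1 + generated_per_rendered)


def frame_interval_ms(fps: float) -> float:
    """Milliseconds between consecutive frames at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    displayed = 240.0
    # 0 = no frame generation, 1 = DLSS 3-style, 2 or 3 = MFG settings
    for n in (0, 1, 2, 3):
        base = rendered_fps(displayed, n)
        print(f"{n} generated: {base:.0f} rendered FPS, "
              f"{frame_interval_ms(base):.1f} ms between rendered frames")
```

At a displayed 240 FPS, the rendered rate drops to 120, 80, and 60 FPS as the setting goes from one to three generated frames, so the interval between frames that reflect your input grows from about 8.3 ms to about 16.7 ms even though the screen keeps updating every 4.2 ms.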

Here are the specifications for the RTX 5090 and its predecessors — the top Nvidia GPUs of the past several generations.

| Graphics Card | RTX 5090 | RTX 4090 | RTX 3090 | RTX 2080 Ti |
|---|---|---|---|---|
| GPU | GB202 | AD102 | GA102 | TU102 |
| Process Technology | TSMC 4N | TSMC 4N | Samsung 8N | TSMC 12FFN |
| Transistors (Billion) | 92.2 | 76.3 | 28.3 | 18.6 |
| Die Size (mm²) | 750 | 608.4 | 628.4 | 754 |
| SMs | 170 | 128 | 82 | 68 |
| GPU Shaders (ALUs) | 21760 | 16384 | 10496 | 4352 |
| Tensor / AI Cores | 680 | 512 | 328 | 544 |
| Ray Tracing Cores | 170 | 128 | 82 | 68 |
| Boost Clock (MHz) | 2407 | 2520 | 1695 | 1545 |
| VRAM Speed (Gbps) | 28 | 21 | 19.5 | 14 |
| VRAM (GB) | 32 | 24 | 24 | 11 |
| VRAM Bus Width (bits) | 512 | 384 | 384 | 352 |
| L2 Cache (MB) | 96 | 72 | 6 | 5.5 |
| Render Output Units | 176 | 176 | 112 | 88 |
| Texture Mapping Units | 680 | 512 | 328 | 272 |
| TFLOPS FP32 (Boost) | 104.8 | 82.6 | 35.6 | 13.4 |
| TFLOPS FP16 (FP4/FP8) | 838 (3352) | 661 (1321) | 285 | 108 |
| Bandwidth (GB/s) | 1792 | 1008 | 936 | 616 |
| TBP (watts) | 575 | 450 | 350 | 260 |
| Launch Date | Jan 2025 | Oct 2022 | Sep 2020 | Sep 2018 |
| Launch Price | $1,999 | $1,599 | $1,499 | $1,199 |
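The FP32 row can be reproduced from the shader count and boost clock using the usual peak-throughput convention of two FLOPs (one fused multiply-add) per ALU per clock. Here is a minimal Python sketch. The `GpuSpec` class and `CARDS` list are hypothetical names, and their values are copied from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    shaders: int    # FP32 ALUs ("GPU Shaders" row)
    boost_mhz: int  # rated boost clock ("Boost Clock" row)

    def fp32_tflops(self) -> float:
        # Each ALU can issue one fused multiply-add (counted as 2 FLOPs) per clock.
        flops_per_second = self.shaders * 2 * self.boost_mhz * 1_000_000
        return flops_per_second / 1e12


CARDS = [
    GpuSpec("RTX 5090", 21760, 2407),
    GpuSpec("RTX 4090", 16384, 2520),
    GpuSpec("RTX 3090", 10496, 1695),
    GpuSpec("RTX 2080 Ti", 4352, 1545),
]

if __name__ == "__main__":
    for card in CARDS:
        print(f"{card.name}: {card.fp32_tflops():.1f} TFLOPS FP32")
```

These are theoretical peaks at the rated boost clock. Real-world clocks often differ from the rated figure, so sustained throughput in any given game won't match the table exactly.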

The raw specs alone give a hint at the performance potential of the RTX 5090. It has 33% more Streaming Multiprocessors (SMs) than the previous-generation RTX 4090, just over double the SMs of the 3090, and 2.5 times as many SMs as the RTX 2080 Ti that kicked off the ray tracing and AI GPU brouhaha. Just as important, it has 33% more VRAM than the 4090 on a 33% wider memory bus (512-bit versus 384-bit), and the GDDR7 runs 33% faster than the GDDR6X memory used on the 4090. Those two gains compound (1.33 × 1.33 ≈ 1.78), yielding a 78% increase in memory bandwidth.
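The percentages in this paragraph follow directly from the spec table. The sketch below computes peak bandwidth as per-pin data rate times bus width, divided by eight to convert bits to bytes, and shows why two 33% gains produce a 78% bandwidth increase rather than 66%. The names `RTX_5090`, `RTX_4090`, `bandwidth_gbs`, and `pct_gain` are illustrative, and the values are copied from the table.

```python
# Spec-sheet values from the table (RTX 5090 vs. RTX 4090).
RTX_5090 = {"sms": 170, "vram_gb": 32, "bus_bits": 512, "vram_gbps": 28.0}
RTX_4090 = {"sms": 128, "vram_gb": 24, "bus_bits": 384, "vram_gbps": 21.0}


def bandwidth_gbs(spec: dict) -> float:
    """Peak memory bandwidth in GB/s: per-pin Gbps times bus width, / 8 bits per byte."""
    return spec["vram_gbps"] * spec["bus_bits"] / 8


def pct_gain(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100


if __name__ == "__main__":
    for key in ("sms", "vram_gb", "bus_bits", "vram_gbps"):
        print(f"{key}: +{pct_gain(RTX_5090[key], RTX_4090[key]):.0f}%")
    # The wider bus and faster memory multiply rather than add:
    # (512 / 384) * (28 / 21) = 1.333 * 1.333 = 1.778
    new_bw, old_bw = bandwidth_gbs(RTX_5090), bandwidth_gbs(RTX_4090)
    print(f"bandwidth: {new_bw:.0f} vs {old_bw:.0f} GB/s, +{pct_gain(new_bw, old_bw):.0f}%")
```

The same formula reproduces the table's 936 GB/s for the RTX 3090 (19.5 Gbps on 384 bits) and 616 GB/s for the RTX 2080 Ti (14 Gbps on 352 bits).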

The rated boost clocks on the RTX 5090 have dropped compared to the 4090, but Nvidia's boost clocks have always been rather conservative. Depending on the game and settings used, the real-world clocks were in some cases even higher than on the 4090. However, that's mostly at 1080p and 1440p, where CPU bottlenecks are definitely a factor and the 5090 wasn't drawing anywhere close to its maximum 575W. Typical clocks ranged from 2.5 GHz to 2.85 GHz in our testing (more details on page eight).

But it's not just performance and specs that have increased. The RTX 5090 has an official base MSRP of $1,999 — $400 more than the RTX 4090's base MSRP. That's probably thanks to the demand that Nvidia saw for the 4090, with cards frequently going for over $2,000 during the past two years. We suspect much of that was thanks to businesses buying the cards for AI use and research (not to mention people reportedly trying to smuggle 4090 cards into China). Those same factors will undoubtedly affect the RTX 5090.

Things shouldn't be as bad as the cryptomining shortages of the RTX 30-series era, but we would be shocked if the 5090 isn't difficult to buy in the coming months at anywhere close to $2,000. Nvidia's top GPUs have traditionally been hard to acquire in the first month or two after launch, and that pattern will no doubt continue — and perhaps be even worse than the 4090 launch.

We've already noted that testing is ongoing and that we haven't been able to run all the benchmarks we'd like. But really, what's the competition for the RTX 5090? Most people will want to see how much faster it is than the RTX 4090, plus a few other high-end and extreme offerings. It's not as though someone looking at an RTX 4070, RX 7700 XT, or Arc B580 will instead decide to spend 4–8 times as much on a 5090.

AMD's RX 7900 XTX didn't really compete with the 4090, and it certainly won't beat the 5090, at least not at 4K. And you really should be running a 4K or higher resolution display if you're thinking about using a 5090 for gaming. We're still running 7900 XTX benchmarks on the new test PC, and we'll have complete results in the next day or so. We also want to add the 3090 to show what a two-generation upgrade will deliver for those using the top Ampere RTX 30-series GPUs.

Other than that? We don't really see anything that will keep up with the 5090 arriving any time soon. And that's just for gaming. For AI workloads that can use the new FP4 number format, Nvidia claims the 5090 can be up to three times as fast as the 4090. It's set to dominate the GPU landscape until the inevitable RTX 6090 or whatever arrives in a couple of years — or perhaps Nvidia will do an RTX 5090 Ti or Titan Blackwell this generation.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Crazyy8 Quick look, raster performance seems a bit underwhelming. Weird that the RTX 5090 can be slower than the 4090 (in niche cases). Wasn't going to buy the 5090 anyway, too expensive for a plebe like me. Looking forward to DLSS 4 and how amazing (or not) it'll be.

Gururu Amazing but expected as far as I am concerned. I don't think there is a lot of need to compare against anything, including AMD's new cards. It was brilliant to pair with the 13900, with crazy interesting results. Will read a few more times to glean more details. (y)

valthuer Admin said: "We also tested the RTX 5090 on our old 13900K test bed, with some at times interesting results. Some games still seem to run faster on Raptor Lake, though overall the 9800X3D delivers higher performance. The margins are of course quite small at 4K ultra."

For me, as a 13900K owner, that's a consolation :cool:

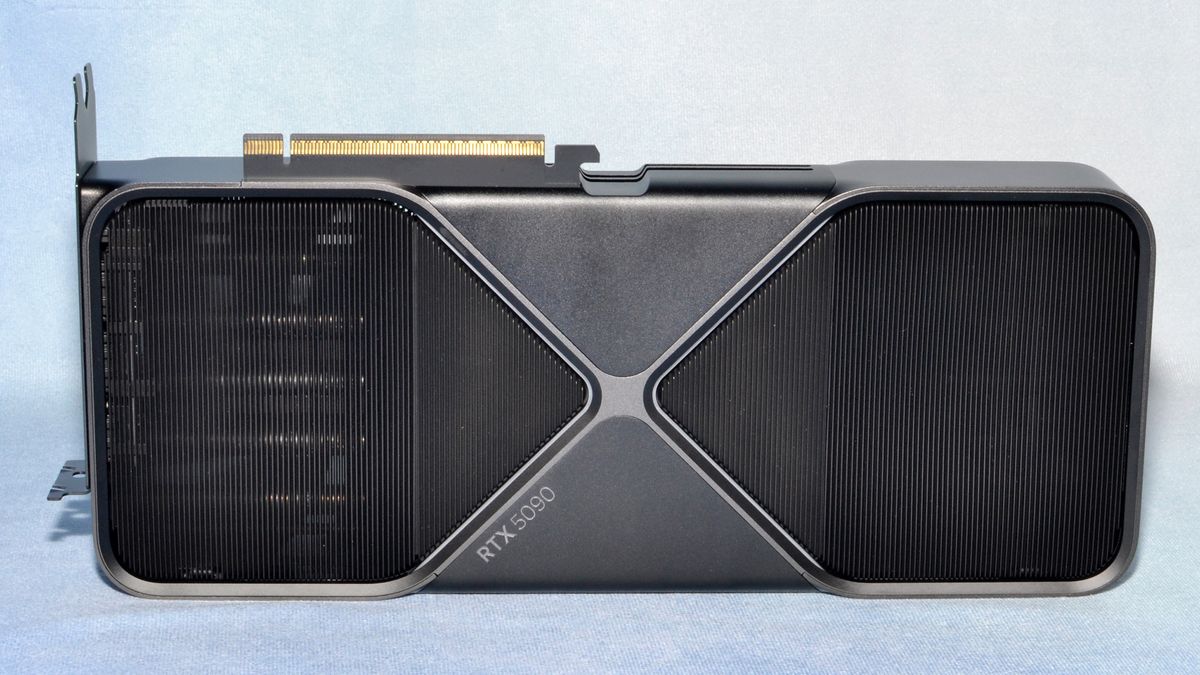

Gaidax Okay, that IS a sick cooler that actually manages to do the job.

I bet aftermarket four-slot monstrosities will do better, but for two slots and 600W, that's insane.

m3city Products like this should receive 3 stars max. Great performance, but at what cost? Is it the right direction that power draw increases with each iteration? Is it worth chasing max perf each time? For me it would be perfect if the 5000 series stayed at the same TDP as previous ones, meaning better design and a better GPU, with an understandably lower performance increase compared to the 4000 series. And then the 6000 series could even reverse direction: higher perf with a drop in TDP.

And secondly, how come a 500W GPU can be air cooled, but nerds on forums will claim you absolutely NEED water cooling for a 125W Ryzen, because it's "high-end"? Yeah, I know 125W means more actual draw.

redgarl Okay Jarred... you are shilling at this point.

4.5 / 5 for a $2,000 GPU that barely gets 27% more performance?

While consuming 100W more than a 4090?

And offering the same cost per frame value as a 4090 from 2 years ago?

Flagship or not, this is horrible.

Not to mention the worst uplift from an Nvidia GPU ever achieved... 27%...

https://i.ibb.co/4fks6Gt/reality.jpg

redgarl Did you benchmark on an open bench or in a PC case? I am asking because there are some major concerns about overheating, since CPU coolers get choked by the 575W of heat dissipated inside a closed PC case. If you have an HSF for your CPU, then you are screwed.

https://uploads.disquscdn.com/images/7a5bf4d586b20ffe0aa6281c57d419012a32cbdabd43b3e8050d2aa9a00d6cc1.png

oofdragon 20% better at 4K and 15% better at 2K, despite having 30% more cores, etc. Got it. Oh boy, the 5080 and 5070 are sure going to disappoint a lot of people.

The good news is the RX 9070 will bring 4070 Ti Super performance to the table around $500, including the VRAM, ray tracing, and DLSS image quality. AMD will prolly counter the multi frame gen nonsense with something like what LSFG 3.0 is doing, and smart buyers will finally have a good GPU to replace their 3080 or 6700 XT.

vanadiel007 They should have given it the code name Sasquatch, because that's the chance you will be seeing these sell for $2,000 in the coming months.

More like $3,000 and a lot of luck needed to find one in stock.

I'll pass on it.

YSCCC A real space heater inside the case, and extremely expensive without a great raw performance increase... sounds like it's built for those with more money than brains or logic.