Nvidia RTX 50 mobile Device IDs have been leaked — Flagship RTX 5090 mobile rumored to sport the GB203 GPU

It appears that Nvidia will reserve the GB202 chip for desktop

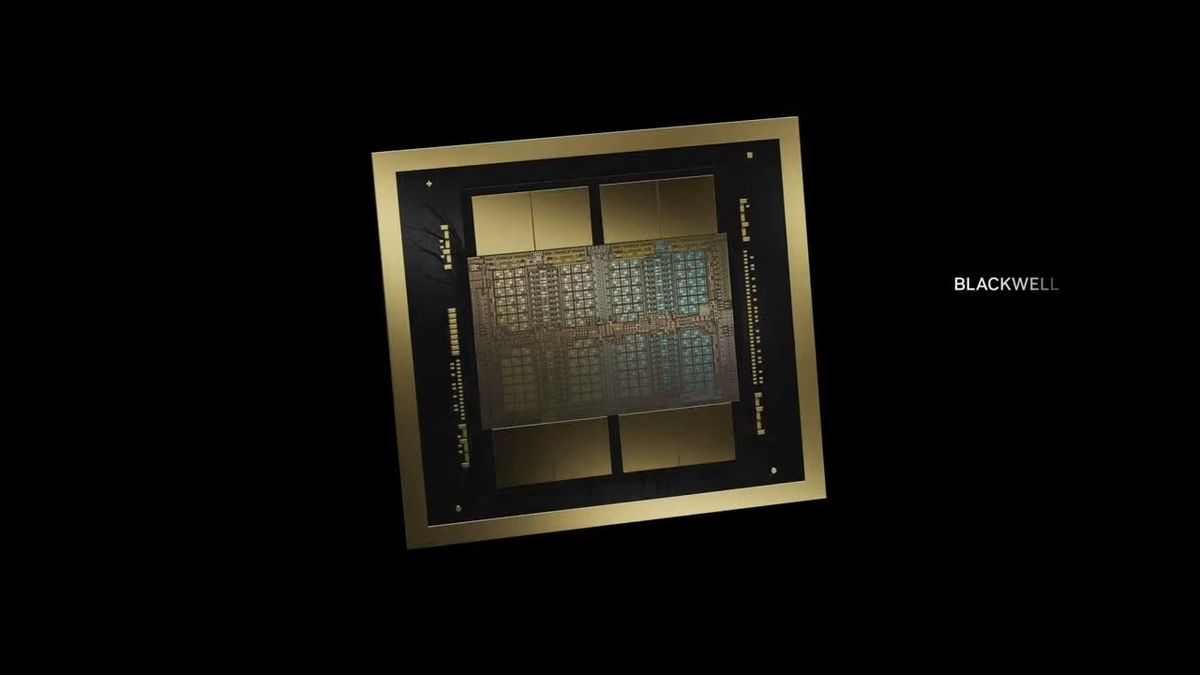

The PCI ID repository has added fresh entries for Nvidia's upcoming Blackwell mobile (RTX 50) GPU lineup. As spotted by Harukaze on X, the RTX 50 mobile family features GPUs from the RTX 5090M down to the RTX 5050M, covering a broad market segment akin to its predecessor. Since this leak only focuses on mobile SKUs, there's a high possibility that Nvidia is sending RTX 50 mobile engineering samples to OEMs as we speak.

The PCI ID database is an archive for hardware devices conforming to the PCI standard. Members of PCI-SIG and other contributors maintain this database, which helps to identify hardware components - GPUs in our case. It is entirely possible that these new PCI IDs were added by an OEM or found through driver patches.

We should remind readers that PCI IDs don't mention the specifications of said products. However, some SKUs have been listed with their respective GPU model. Both RTX 5090M and the RTX 5080M are said to utilize the GB203M GPU, with varying levels of binning. Interestingly, the list also includes an RTX 5070 Ti mobile, which may be a typo since Nvidia typically reveals Ti and SUPER refreshes halfway through any given generation. Echoing its Ada Lovelace lineup, Nvidia is also prepping an RTX 5050 for laptops to serve the budget market.

- 2980 / GB102
- 29c0 / GB102
- 2c18 / GB203M, GN22 / 5090 Mobile
- 2c19 / GB203M, GN22 / 5080 Mobile
- 2c2c / N22W-ES-A1 / @mooreslawisdead leaked the picture
- 5070 Ti Mobile
- 5070 Mobile
- 5060 Mobile
- 5050 Mobile

https://t.co/PGWZsH221X, October 27, 2024
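For readers curious how such entries are consumed, here is a minimal Python sketch of a lookup against text in the `pci.ids` layout, where vendor lines start at column 0 and device lines are indented with one tab. The vendor ID `10de` is Nvidia's. The device names in `SAMPLE` are copied from the leaked list purely for illustration and are not the repository's actual wording, and the helper names (`parse_pci_ids`, `lookup`, `is_hex_id`) are our own.

```python
HEX_DIGITS = set("0123456789abcdefABCDEF")

# Sample text in the pci.ids layout. Device names are illustrative,
# taken from the leaked list, not from the repository itself.
SAMPLE = (
    "# comment lines start with a hash\n"
    "10de  NVIDIA Corporation\n"
    "\t2c18  GB203M, GN22 / 5090 Mobile\n"
    "\t2c19  GB203M, GN22 / 5080 Mobile\n"
)


def is_hex_id(token):
    """True if token looks like a 4-digit hexadecimal ID."""
    return len(token) == 4 and all(c in HEX_DIGITS for c in token)


def parse_pci_ids(text):
    """Return {(vendor_id, device_id): (vendor_name, device_name)}."""
    table = {}
    vendor_id = vendor_name = None
    for line in text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("\t\t"):
            continue  # subsystem lines are ignored in this sketch
        if line.startswith("\t"):
            # Device line: belongs to the most recent vendor line.
            dev_id, _, dev_name = line.strip().partition("  ")
            if vendor_id is not None and is_hex_id(dev_id):
                table[(vendor_id, dev_id.lower())] = (vendor_name, dev_name.strip())
            continue
        # Unindented line: a vendor entry, or something else (e.g. a class
        # entry), in which case following device lines are skipped.
        ven_id, _, ven_name = line.partition("  ")
        if is_hex_id(ven_id):
            vendor_id, vendor_name = ven_id.lower(), ven_name.strip()
        else:
            vendor_id = vendor_name = None
    return table


def lookup(table, vendor, device):
    """Case-insensitive lookup of a vendor:device pair; None if unknown."""
    return table.get((vendor.lower(), device.lower()))


table = parse_pci_ids(SAMPLE)
print(lookup(table, "10DE", "2C18"))
```

As the sketch suggests, an entry only maps an ID to a name, which is why the listing says nothing about core counts, clocks, or memory.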

There are two instances of GB102, which we presume is GB202, since consumer Blackwell GPUs are expected to use the GB2XX moniker. Given its size, GB202 is unlikely to appear in laptops, so it makes sense that the chip is reserved for the desktop RTX 5090 alongside a workstation GPU for professionals.

Based on previous rumors, the RTX 50 mobile family will see no increase in memory capacity compared to the last generation. In fact, per leaks, Blackwell on mobile will offer at most 16GB of VRAM, so enthusiasts will need to consider their choices carefully.

Nvidia CEO Jensen Huang is expected to reveal the RTX 50 series at CES 2025, probably rubbing shoulders with rivals like the AMD Radeon 8000 GPUs and even Intel's Battlemage GPUs. Mid- and entry-level Blackwell on desktop and laptop, as is tradition, will be announced at a later date - possibly by Computex 2025 but that is mere speculation.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


usertests

> the RTX 50 mobile family features GPUs from the RTX 5090M down to the RTX 5050M

It would be great if they actually called them that.

> Based on previous rumors, the RTX 50 mobile family will see no increase in memory capacity as compared to the last-generation. In fact, as per leaks, Blackwell on mobile will only offer up to just 16GB of VRAM - so enthusiasts will need to consider their choices carefully.

Not according to MLID. 24 GB for the RTX 5090(M) using 3GB GDDR7 modules.


enewmen

Can someone explain why all GPU makers are so stingy with offering RAM? Is there a limit to how much RAM is needed for a 1080p screen, for example, no matter how many textures are used?

Heiro78

> enewmen said: Can someone explain why all GPU makers are so stingy with offering RAM? Is there a limit to how much RAM is needed for a 1080p screen for example no matter how many textures are used?

From my limited experience, more VRAM is needed at higher resolutions, such as 1440p and 4K. My speculation is that they limit VRAM partly due to cost, sure, but also to segment the tiers further, so that higher-end cards are not as capped. It's the same with memory bandwidth. A 4060 8GB could have 500 GB/s of bandwidth, and I'm sure that would help it compete with a 4070 12GB, also at 500 GB/s. But then the higher price of the 4070 wouldn't be as easy to justify.

Eximo

The increased cost isn't so much the memory; it is the GPU die area for the memory controller. A 128-bit bus is a lot cheaper than a 192-, 256-, or 384-bit bus. Silicon wafers are a fixed size and cost, so the larger the GPU, the fewer you get per wafer. A larger die also results in lower yields, further increasing cost.

So the answer is to either keep bandwidth the same and increase the DRAM package size, or increase the size of the GPU, which means another GPU design. So they just segment, as Heiro says, to make the minimal number of GPU designs that meets demand.

The move to chiplets should alleviate some of these problems, though it doesn't look like Nvidia has made that move on the consumer side yet. AMD has, and it works pretty well.

P.Amini

> Eximo said: The increased cost isn't so much the memory, it is the GPU die area for the memory controller. 128 bit bus is a lot cheaper than a 192 or 256 or 384 bit bus. Silicon wafers are a fixed size and cost. The larger the GPU, the fewer you get. The larger the die, also results in decreased yields, further increasing cost.
>
> So the answer to keep bandwidth the same and increase the DRAM package size, or increase the size of the GPU, which means another GPU design, so they just segment as Heiro says to make the minimal number of GPU designs to meet demand.
>
> The move to chiplets should alleviate some of these problems, though it doesn't look like Nvidia has made that move on the consumer side yet. AMD has and it works pretty well.

Surely they can make two versions of any GPU, one with less RAM and one with more (1.5x or 2x), which would give us more choice depending on our specific needs and budget. Instead, they pair lower-end GPUs with an amount of RAM that is barely enough and force you to buy higher-end models if you want more. The price of products (graphics cards included) doesn't directly track their cost; supply, demand, marketing plans and policies, and even greed are far more important factors. As we all know, graphics cards are overpriced, especially high-end models.