Nvidia's data center Blackwell GPUs reportedly overheat, requiring rack redesigns and causing delays for customers

Nvidia redesigns NVL72 servers to tackle overheating problems.

Nvidia's next-generation Blackwell processors are facing significant challenges with overheating when installed in high-capacity server racks, reports The Information. These issues have reportedly led to design changes and delays and raised concerns among customers like Google, Meta, and Microsoft about whether they can deploy Blackwell servers on time.

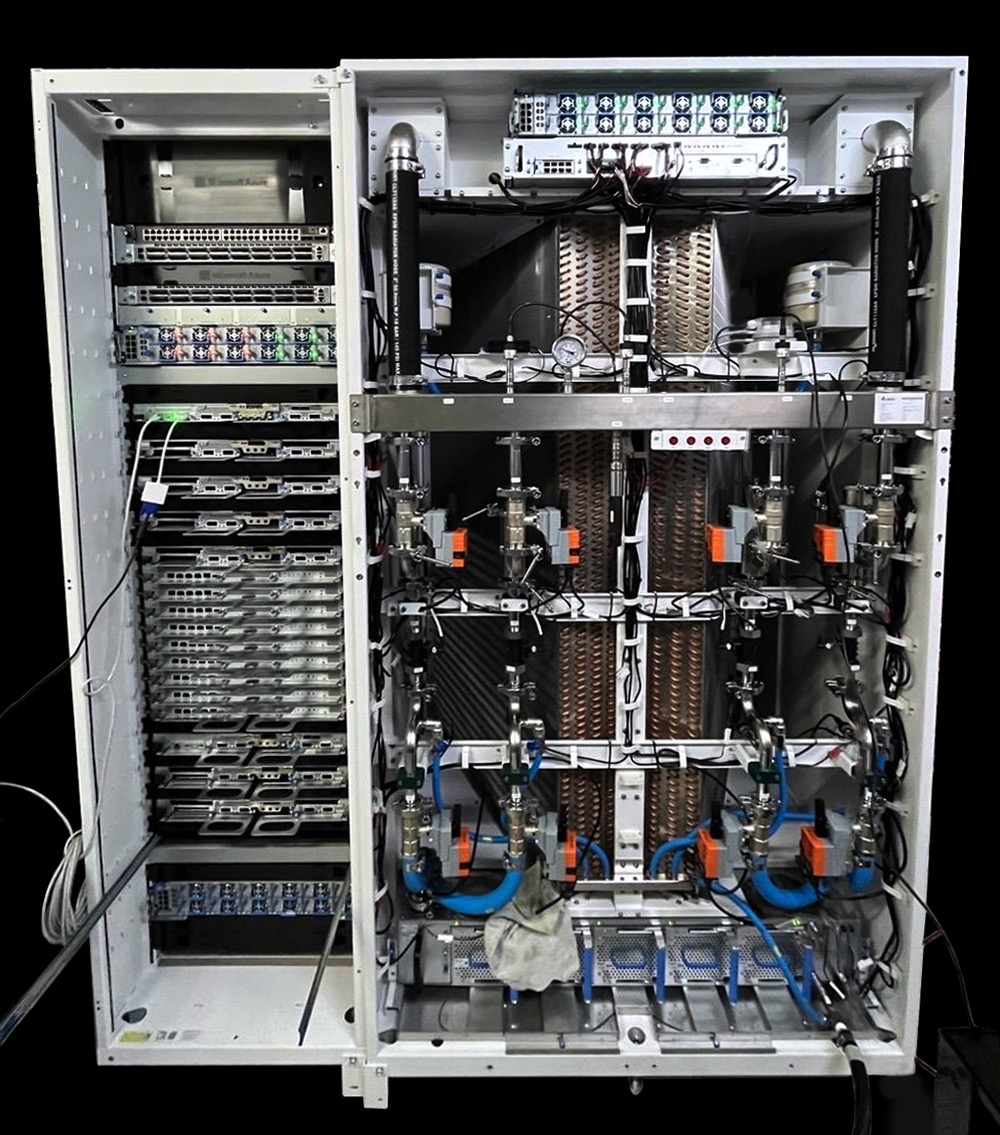

According to insiders familiar with the situation who spoke with The Information, Nvidia's Blackwell GPUs for AI and HPC overheat when used in servers with 72 processors inside. These machines are expected to consume up to 120kW per rack. These problems have caused Nvidia to reevaluate the design of its server racks multiple times, as overheating limits GPU performance and risks damaging components. Customers reportedly worry that these setbacks may hinder their timeline for deploying new processors in their data centers.
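For a rough sense of scale, the reported figures imply an average power budget per processor. The sketch below simply divides the reported 120kW rack figure by the 72 processors. The function name is illustrative, and the result is an average over everything in the rack, including components other than the GPUs, so it is not a per-GPU TDP.

```python
def average_power_per_processor(rack_power_kw: float, processors: int) -> float:
    """Return the average rack power per processor, in watts.

    This is a plain division of the rack budget; it does not model how
    power is actually split between GPUs and other rack components.
    """
    if processors <= 0:
        raise ValueError("processors must be positive")
    return rack_power_kw * 1000 / processors


# Figures reported in the article: up to 120kW per rack, 72 processors.
per_processor_w = average_power_per_processor(120, 72)
print(f"{per_processor_w:.0f} W per processor on average")
```

Even as a crude average, the number shows why heat density in these racks is hard to manage.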

Nvidia has reportedly instructed its suppliers to make several design changes to the racks to counteract overheating issues. The company has worked closely with its suppliers and partners to develop engineering revisions to improve server cooling. While these adjustments are standard for such large-scale tech releases, they have nonetheless added to the delay, further pushing back expected shipping dates.

In response to the delays and overheating issues, an Nvidia spokesperson pointed Reuters to the company's collaborative work with cloud providers and described the design changes as part of the normal development process. This partnership with cloud providers and suppliers aims to ensure the final product meets performance and reliability expectations as Nvidia continues to work on resolving these technical challenges.

Previously, Nvidia had to delay the Blackwell production ramp due to the processor's yield-killing design flaw. Nvidia's Blackwell B100 and B200 GPUs use TSMC's CoWoS-L packaging technology to connect their two chiplets. This design includes an RDL interposer with local silicon interconnect (LSI) bridges, which supports data transfer speeds of up to 10 TB/s. The precise positioning of these LSI bridges is essential for the technology to function as intended. However, a mismatch in the thermal expansion characteristics of the GPU chiplets, LSI bridges, RDL interposer, and the motherboard substrate led to warping and system failures. To address this, Nvidia reportedly modified the GPU silicon's top metal layers and bump structures to improve production reliability. Although Nvidia never revealed specific details about these changes, it noted that new masks were necessary as part of the fix.

As a result, the final revision of Blackwell GPUs only entered mass production in late October, which means that Nvidia will be able to ship these processors starting in late January.

Nvidia's clients, including tech giants like Google, Meta, and Microsoft, use Nvidia's GPUs to train their most powerful large language models. Delays in Blackwell AI GPUs naturally affect Nvidia customers' plans and products.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

It seems like Nvidia tried to launch with clock speeds that were too high.

Perhaps they felt compelled to do that, in order to justify the exorbitant pricing. This would also explain why they can't simply tell customers to reduce clock speeds a little, since that would significantly affect the perf/$ and might result in customers asking for a rebate to offset the lost performance.

While Nvidia provides industry leading AI performance, I'm sure they're probably less competitive on the perf/$ front. Intel basically said as much, during recent announcements regarding their repositioning of Gaudi. This is something Nvidia must be keeping an eye on.

TBH, I honestly expected Nvidia to have transitioned their AI-centric products away from a GPU-style architecture, by now, and towards a more purpose-built, dataflow-oriented architecture like most of their competitors have adopted. I probably just underestimated how much effort it would take them to overhaul their software infrastructure, which I guess supports Jim Keller's claim that CUDA is more of a swamp than a moat.

raminpro

These days performance is not very important (all hardware performs close together); temperature is more important.

I can't understand why they don't use AMD.

AMD may be a little weak, but it has very low heat and low wattage.

High wattage, high temperature = degradation.

wingfinger

Lack of comprehensive design/engineering when it is required more than ever, and when facilities are/should be available to do that?

watzupken

Well, even if Nvidia made a subpar product, companies and individuals will still throw money at them. So with little consequences, they can basically do what they want isn’t it? All you need is some fluffy and creative marketing on some edge cases from internal testing.

usertests

bit_user said: "It seems like Nvidia tried to launch with clock speeds that were too high."

I thought the heat was from top Blackwell products essentially gluing two giant dies together, increasing the thermal density.

https://www.tomshardware.com/pc-components/gpus/nvidias-next-gen-ai-gpu-revealed-blackwell-b200-gpu-delivers-up-to-20-petaflops-of-compute-and-massive-improvements-over-hopper-h100

Clocks are going to play a role but I think the B200's design is a big factor. If I'm reading it correctly it can use 1200W instead of 700W for the H100.

thestryker

bit_user said: "It seems like Nvidia tried to launch with clock speeds that were too high.

"Perhaps they felt compelled to do that, in order to justify the exorbitant pricing. This would also explain why they can't simply tell customers to reduce clock speeds a little, since that would significantly affect the perf/$ and might result in customers asking for a rebate to offset the lost performance.

"While Nvidia provides industry leading AI performance, I'm sure they're probably less competitive on the perf/$ front. Intel basically said as much, during recent announcements regarding their repositioning of Gaudi. This is something Nvidia must be keeping an eye on."

Just to add onto this their biggest customers are diversifying hardware sources already so cutting that performance lead could be especially dangerous there.

bit_user

usertests said: "I thought the heat was from top Blackwell products essentially gluing two giant dies together, increasing the thermal density."

I'm not saying otherwise, just that I'm sure it's clocked well beyond the point of diminishing returns. In which case, they could reduce power consumption (and thereby heat), if they'd dial back the clocks a bit. It's such a simple and obvious solution that I thought it was worth exploring the implications of doing it.

HansSchulze

How did we get from October mass production to January shipping?

Heat density is a thing. Even with liquid cooling it is difficult to continuously move thousands of watts from A to B. Temporary fix is to maybe install 2 of 3 boards? Not earth shattering. Problem is that if they change the case itself, there is a crazy amount of networking connectivity inside as well as heavy power cabling. Swapping cases will cost time and money, but I doubt customers will have to wait to start testing their compute modules, like we run Furmark on new systems. Weed out infant mortality. Lowering clocks is good after that, since the boards work OK at higher clocks, while they wait for mechanical issues. People still get work done, time doesn't stop for anyone.

bit_user

HansSchulze said: "we run Furmark on new systems. Weed out infant mortality."

Ooo! +1 for furmark. That's one of my favorite stress tests!

It's even available for Linux, now!

https://geeks3d.com/20240224/furmark-2-1-0-released/

spongiemaster

bit_user said: "I'm not saying otherwise, just that I'm sure it's clocked well beyond the point of diminishing returns."

Enterprise hardware is never clocked like that. Efficiency and reliability are significantly more important to enterprise customers than all out performance. Hopper's boost clock is only 1980MHz, compared to a 4090 that boosts up to 2850MHz. Adding a 2nd chip, Blackwell increases transistor count compared to Hopper from 80 billion to 208 billion, plus an additional 50GB of HBM. That's where the power increase is coming from. TDP increased from 700W to 1000W in the same form factor. If data centers did nothing to improve cooling with that increase in power/heat density, it's no surprise the systems are overheating.