Nvidia's next-gen Blackwell AI Superchips could cost up to $70,000, and fully equipped server racks reportedly run $3,000,000 or more

Let the billions in revenue commence.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Nvidia's Blackwell GPUs for AI applications will be more expensive than the company's Hopper-based processors, according to analysts from HSBC cited by @firstadopter, a senior writer at Barron's. The analysts claim that one GB200 Superchip (a CPU plus two GPUs) could cost up to $70,000. However, Nvidia may be more inclined to sell servers based on the Blackwell GPUs rather than selling chips separately, especially given that the GB200 NVL72 servers are projected to cost up to $3 million apiece.

HSBC estimates that Nvidia's 'entry' B100 GPU will have an average selling price (ASP) between $30,000 and $35,000, which is roughly in the same range as Nvidia's H100. The more powerful GB200, which combines a single Grace CPU with two B200 GPUs, will reportedly cost between $60,000 and $70,000. And let's be real: it might end up costing quite a bit more than that, as these are merely analyst estimates.

HSBC estimates pricing for NVIDIA GB200 NVL36 server rack system is $1.8 million and NVL72 is $3 million. Also estimates GB200 ASP is $60,000 to $70,000 and B100 ASP is $30,000 to $35,000.

May 13, 2024

Servers based on Nvidia's designs are going to be much more expensive. The Nvidia GB200 NVL36, with 18 GB200 Superchips (18 Grace CPUs and 36 enhanced B200 GPUs), may sell for $1.8 million on average, whereas the Nvidia GB200 NVL72, with 36 GB200 Superchips (36 Grace CPUs and 72 GPUs), could cost around $3 million, according to the reported HSBC estimates.
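To put HSBC's rack estimates in perspective, the short Python sketch below derives each rack's Superchip count from its GPU count (one Grace CPU plus two B200 GPUs per GB200) and compares the estimated rack price with the cost of its Superchips at HSBC's $60,000 to $70,000 ASP. This is back-of-the-envelope arithmetic on analyst estimates, not a bill of materials; the remainder of the rack price presumably covers everything else in the rack plus margins, which this calculation does not break down.

```python
from dataclasses import dataclass

# HSBC's estimated GB200 Superchip ASP range, in USD (as reported).
GB200_ASP_RANGE = (60_000, 70_000)


@dataclass(frozen=True)
class Rack:
    name: str
    gpus: int       # B200 GPUs in the rack (the number in "NVL36"/"NVL72")
    price_usd: int  # HSBC's estimated average rack price

    @property
    def superchips(self) -> int:
        # Each GB200 pairs one Grace CPU with two B200 GPUs.
        return self.gpus // 2

    @property
    def grace_cpus(self) -> int:
        return self.superchips  # one Grace CPU per Superchip

    def price_per_superchip(self) -> float:
        """Rack price spread evenly over its Superchips."""
        return self.price_usd / self.superchips

    def superchip_share(self, asp: int) -> float:
        """Fraction of the rack price covered by its Superchips at a given ASP."""
        return self.superchips * asp / self.price_usd


RACKS = [
    Rack("GB200 NVL36", gpus=36, price_usd=1_800_000),
    Rack("GB200 NVL72", gpus=72, price_usd=3_000_000),
]

if __name__ == "__main__":
    for rack in RACKS:
        low, high = (rack.superchip_share(asp) for asp in GB200_ASP_RANGE)
        print(f"{rack.name}: {rack.superchips} Superchips, {rack.grace_cpus} Grace CPUs, "
              f"${rack.price_per_superchip():,.0f} per Superchip; "
              f"Superchips are {low:.0%}-{high:.0%} of the rack price")
```

At the top of HSBC's ASP range, the Superchips alone would account for about 70% of an NVL36's estimated price and about 84% of an NVL72's.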

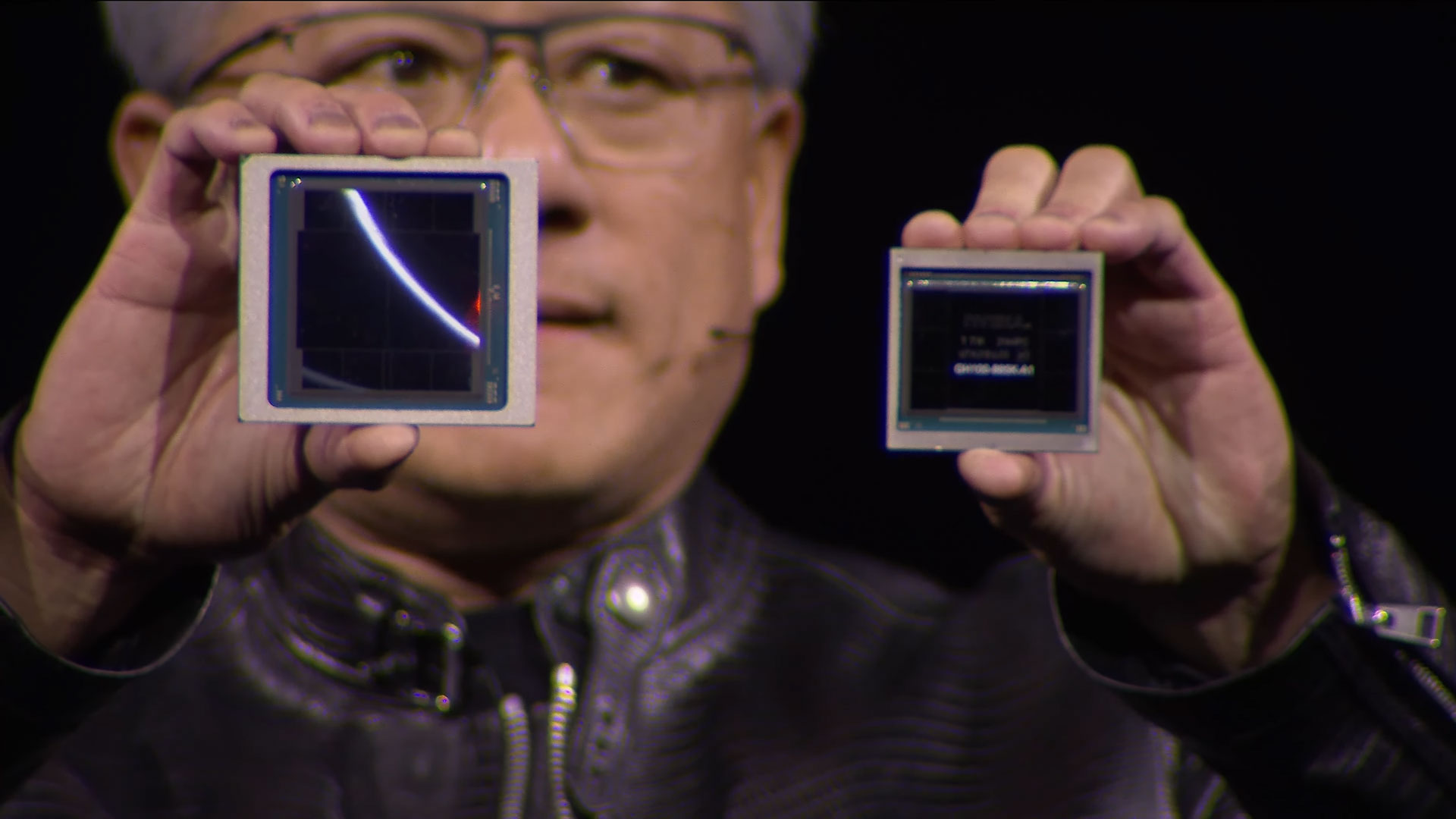

When Nvidia CEO Jensen Huang revealed the Blackwell data center chips at GTC 2024, it was clear that the intent was to sell whole racks of servers. Huang repeatedly said that when he thinks of a GPU, he now pictures the NVL72 rack. The whole rack is linked via high-bandwidth connections so that it functions as one massive GPU, providing 13,824 GB of total VRAM, a critical factor in training ever-larger LLMs.
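The 13,824 GB figure follows from simple multiplication. The sketch below assumes 192 GB of HBM3e per B200 GPU, which is Nvidia's announced launch capacity but is not stated in this article; shipping configurations could differ.

```python
# Assumption (not from the article): 192 GB of HBM3e per B200 GPU.
HBM_PER_B200_GB = 192
# From the article: each GB200 Superchip carries two B200 GPUs.
GPUS_PER_SUPERCHIP = 2


def rack_vram_gb(superchips: int, hbm_per_gpu_gb: int = HBM_PER_B200_GB) -> int:
    """Total GPU memory across all B200s in a rack, in GB."""
    return superchips * GPUS_PER_SUPERCHIP * hbm_per_gpu_gb


if __name__ == "__main__":
    nvl72 = rack_vram_gb(36)  # NVL72: 36 Superchips, 72 GPUs
    print(f"NVL72: {nvl72:,} GB (about {nvl72 / 1024:.1f} TB)")
```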

Selling whole systems instead of standalone GPUs/Superchips enables Nvidia to capture some of the premium otherwise earned by system integrators, which will increase its revenues and profitability. Considering that Nvidia's rivals AMD and Intel are gaining traction only slowly with their AI processors (e.g., Instinct MI300-series, Gaudi 3), Nvidia can sell its AI processors at a large premium. As such, the prices reportedly estimated by HSBC are not particularly surprising.

It's also important to highlight the differences between H200 and GB200. H200 already commands prices of up to $40,000 for an individual GPU. GB200 effectively quadruples the number of GPU dies (four silicon dies, two per B200) and adds a Grace CPU and a large PCB to form the so-called Superchip. Raw compute for a single GB200 Superchip is 5 petaflops of FP16 (10 petaflops with sparsity), compared to 1 petaflop (2 petaflops with sparsity) on H200. That's roughly five times the compute, not even factoring in other architectural upgrades.
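The price-for-performance comparison implied here can be made explicit. The sketch uses the article's dense FP16 figures and the upper price points it quotes (up to $40,000 for an H200, up to $70,000 for a GB200). It is a crude comparison that ignores sparsity, memory capacity, interconnect, and contract discounts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    fp16_dense_pflops: float  # dense FP16 compute, per the article
    price_usd: float          # upper-end price quoted in the article

    def usd_per_pflop(self) -> float:
        """Price divided by dense FP16 petaflops."""
        return self.price_usd / self.fp16_dense_pflops


H200 = Accelerator("H200", fp16_dense_pflops=1.0, price_usd=40_000)
GB200 = Accelerator("GB200 Superchip", fp16_dense_pflops=5.0, price_usd=70_000)

compute_ratio = GB200.fp16_dense_pflops / H200.fp16_dense_pflops

if __name__ == "__main__":
    print(f"GB200 vs H200 dense FP16: {compute_ratio:.0f}x")
    for chip in (H200, GB200):
        print(f"{chip.name}: ${chip.usd_per_pflop():,.0f} per dense FP16 petaflop")
```

On those numbers, a GB200 delivers five times the dense FP16 compute for 1.75 times the price, or about $14,000 per petaflop versus $40,000.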

It should be kept in mind that the actual prices of data center-grade hardware always depend on the individual contracts, based on the volume of hardware ordered and other negotiations. As such, take these estimated numbers with a helping of salt. Large buyers like Amazon and Microsoft will likely get huge discounts, while smaller clients may have to pay an even higher price than what HSBC reports.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Giroro: Has anybody, other than Nvidia, figured out how to make money with one of these things?

Lucky_SLS: ^ They will do that when they figure out how to be competitive with Nvidia's offerings...

spongiemaster (replying to Lucky_SLS):

I don't think he meant the competition; I think he meant the customers. How are companies using these systems for AI tasks to generate revenue?

Lucky_SLS (replying to spongiemaster):

When they learn how to be competitive with OpenAI, I guess XD

Those GB200 chips are definitely not marketed towards budget startup AI companies.

palladin9479 (replying to Giroro):

Yes and no; it depends what market you are in. While everyone is busy drooling over a better chatbot, the real money is in data analytics and forecasting. I've said it before, but all these "AI" algorithms are doing is predicting "yes and". The "training" is just analyzing ridiculous amounts of data and building a model that correlates every data point with every other data point to form a giant probability model. Afterward, you can feed it a string of data elements and it'll predict what the next elements should be. You say and it responds as the most likely following elements. The entire thing is just a pattern prediction system. In the case of natural language processing (NLP), which is what ChatGPT does, the patterns are in our English language lexicon.
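The "predict the next element" idea can be illustrated with a toy bigram model that simply counts which token follows which and picks the most frequent follower. This is a hypothetical sketch for illustration only; models like ChatGPT learn neural-network weights over subword tokens and sample from a probability distribution rather than tallying word pairs.

```python
from collections import Counter, defaultdict


def train_bigrams(tokens):
    """Count how often each token is followed by each other token."""
    model = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        model[prev][nxt] += 1
    return model


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if it was never followed."""
    followers = model.get(token)  # .get avoids creating empty entries in the defaultdict
    if not followers:
        return None
    # Ties go to the follower seen first (Counter.most_common preserves insertion order).
    return followers.most_common(1)[0][0]


def generate(model, start, length):
    """Greedily extend `start` by up to `length` predicted tokens."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat ran".split()
    model = train_bigrams(corpus)
    print(generate(model, "the", 3))
```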

Now imagine feeding it the daily/hourly percentage price change of the S&P 500 for every day going back thirty or more years as "training" data. Then put in this week's daily/hourly prices and let it "guess" next week's price values. See how accurate it was, adjust the model, feed in more data, then do another estimation run. Keep doing this until it starts to accurately predict the patterns. Congrats, you can now almost print money.
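The predict, score, adjust loop described here is usually run as walk-forward (rolling) evaluation. As a hedged sketch, with a hypothetical moving-average baseline standing in for a trained model, the scoring step could look like this. The key property is that each guess only sees data from before the point it predicts, so future prices never leak into the test. A low error on past data would not by itself mean a strategy makes money.

```python
from statistics import mean
from typing import Callable, Sequence

# A predictor maps the history seen so far to a guess for the next value.
Predictor = Callable[[Sequence[float]], float]


def moving_average_predictor(window: int) -> Predictor:
    """Hypothetical baseline: guess the mean of the last `window` values."""
    def predict(history: Sequence[float]) -> float:
        return mean(history[-window:])
    return predict


def walk_forward_mae(series: Sequence[float], predictor: Predictor, warmup: int) -> float:
    """Predict each point from strictly earlier points only, then return the
    mean absolute error of those one-step-ahead guesses."""
    if not 1 <= warmup < len(series):
        raise ValueError("warmup must leave at least one point to predict")
    errors = [abs(predictor(series[:t]) - series[t]) for t in range(warmup, len(series))]
    return mean(errors)


if __name__ == "__main__":
    # Made-up percentage changes for illustration only; not market data.
    toy_changes = [0.5, -0.2, 0.1, 0.3, -0.4, 0.2]
    print(walk_forward_mae(toy_changes, moving_average_predictor(window=2), warmup=2))
```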

umeng2002_2 (replying to Giroro):

Take grant money from governments for research and sell half-working AI technology to other companies.

neojack: it's just the same as the internet bubble back in the '90s.

Remember AOL? No, me neither.

Major players will have crashed to the ground by the time the dust settles.

It's a gold rush, and Nvidia sells the shovels...

slightnitpick (replying to palladin9479):

Really bad idea. You'd also want to feed it the real-time news feed that traders were trading on back in the day, as well as any other particulars that affect buying and selling on the market that you can think of and that you can get real-time or near-real-time data for today: random events such as obituaries of company owners, cyclical events such as benefits open enrollment, et cetera.

You'll still miss some black swan events, but at least you won't be trading algorithmically based solely on prior trades. That sort of trading is already locked up, and isn't going to be realistically improved with a modern AI engine on modern hardware.

Dntknwitall: To me this is all just a cash grab. It is marketed as the future technology that has value, just like all past technology, and it makes companies like Nvidia filthy rich. Then they just keep pumping out new ideas that sell, to keep generating flow in a technology that they have mastered and everyone wants. The world is just a game of Monopoly: everyone is trying to get rich, but only a few really get there. This is what the meaning of life has become, and honestly the future looks really boring. I think that is why major powers are trying to reset the balance, but some (the evil ones) just want to tip it toward themselves. Great future the next generations have to look forward to.