SuperComputing 2017 Slideshow

D-Wave's Mindbending Quantum Computing

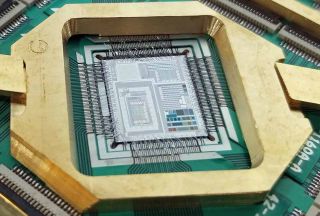

Quantum computing may be the next wave in supercomputing, and a number of companies, including industry behemoths like Intel, Google, and IBM, are drawing the battle lines. Those three industry icons are focusing on using Quantum logic gates, which are more accessible to programmers and mathematicians, but are unfortunately harder to manufacture.

D-Wave's latest quantum processor can search a solution space that is larger than the number of particles in the observable universe--and it accomplishes the feat in 1 microsecond. The company has embraced annealing, which has more robust error correction capabilities. D-Wave announced at the show that its 2000Q system now supports reverse annealing, which can solve some problems up to 150X faster than the standard annealing approach.

D-Wave's quantum chips are built on a standard CMOS process with an overlay of superconducting materials. The company then cools the processor to 400X colder than interstellar space to enable processing. D-Wave's 2,000-Qubit 2000Q supercomputer comes with a hefty $15 million price tag, though the company also offers a cloud-based subscription service.

PEZY's 16,384-thread SC2 Processor

It's hard not to be enamored with PEZY's ultra-dense immersion-cooled supercomputers, but the PEZY-SC2 Many-Core processor is truly a standout achievement.

The 2,048-core chip (MIMD) operates at 1.0 GHz within a 130W TDP envelope. The chip features 56MB of cache memory and supports SMT8, so each core executes eight threads in parallel. That means a single PEZY-SC2 processor brings 16,384 threads to bear. It also features the PCIe Gen 4.0 interface.

Among other highlights, the chip sports a quad-channel memory interface that supports up to 128GB of memory with 153GB/s of bandwidth. That may seem comparatively small, but PEZY's approach relies upon deploying these processors en masse on a spectacular scale.

The 620mm2 die, built on TSMC's 16nm process, offers 8.192 TeraFLOPS of single-precision and 4.1 TeraFLOPS of double-precision compute.

Stay On the Cutting Edge: Get the Tom's Hardware Newsletter

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Let's see how PEZY integrates these into a larger supercomputer.

The Pezy-SC2 Brick

PEZY uses its custom -SC2 processors, which serve as accelerators, to create modules which are then assembled into bricks, much like we saw with the company's V100 blades. The PEXY-SC2 brick consists of 32 modules that all have their own allotment of DRAM, and one 16-core Xeon-D processor is deployed per eight PEZY-SC2 processors. The brick also includes slots for four 100Gb/s network interface cards.

Now the completed brick is off to the immersion tank.

PEZY-SC2 Brick Immersion Cooling

A single ZettaScaler immersion tank can house 16 bricks, which works out to 512 PEZY-SC2 processors. That means each tank can bring in 1,048,576 cores and 8,388,608 threads. That's an incredibly mind-bending number, sure, but perhaps the size of the relatively small immersion tank is the most impressive. That's performance density at its finest.

The Gyoukou supercomputer employs 10,000 PEZY-SC2 chips spread over 20 immersion tanks, but the company "only" enabled 1,984 cores per chip, which equates to 19,840,000 cores. Each core wields eight threads, which works out to more than 158 million threads. That system also includes 1,250 sixteen-core Xeon-D processors, adding over 20,000 Intel cores. In aggregate, that's the most cores ever packed into a single supercomputer.

The Gyoukou system is ranked fifth on the Green500 list for power efficiency and fourth on the Top500 list for performance. It delivers up to 19,135 TFLOPS and 14.173 GFLOPS-per-watt.

Incredible numbers aside, the company also has its PEZY-SC3 chips in development, with 8,192 cores and 65,536 threads per chip. That means the core and thread counts of its next-generation ZettaScaler systems will be, well, beyond astounding. We can't wait.

The Vector Processor

NEC is the last company in the world to manufacture Vector processors for supercomputers. NEC's Vector Engine full-height full-length PCIe boards will serve as the key components of the SX-Aurora TSUBASA supercomputer.

NEC claimed that its 16nm FinFET (TSMC) Vector core is the most powerful single core in HPC. It provides up to 307 GigaFLOPS per 1.6 GHz core. Eight cores come together inside the processor, and each core is fed by 150GB/s of memory throughput. That bleeding-edge throughput comes from six HBM2 packages (48GB) that flank the processor. That's a record number of HBM2 packages paired with a single processor. In aggregate, they provide a record of 1.2 TB/s of throughput to the Vector processor.

All told, the Vector Engine delivers 2.45 TeraFLOPS of peak performance and works with the easy-to-use x86/Linux ecosystem. Of course, the larger supercomputer is just as interesting.

The Vector Engine A300-8 Chassis

NEC's SX-Aurora TSUBASA supercomputer employs eight A300-8 chassis (pictured above) that host eight Vector Engines each. Each rack in the system provides up to 157 TeraFLOPS, 76 TB/s of memory bandwidth, and consumes 30KW. NEC's previous-generation SX-ACE model required 10 racks and 170KW of power to achieve the same feat.

What's It Take To Immersion Cool 20 Nvidia Teslas?

We love to watch the pretty bubbles generated by immersion cooling, but there are hidden infrastructure requirements. Allied Control's 8U 2-Phase Immersion Tank, which we'll see in action on the next slide, offers 48kW of cooling capacity, but it requires a sophisticated set of pumps, radiators, and piping to accomplish the feat.

In a data center environment, the large coolers are placed on the outside of the building. In fact, Allied Control powers the world's largest immersion cooling system. The BitFury data center in the Republic of Georgia, Eastern Europe, processes more than five times the workload of the Hong Kong Stock Exchange (HKSE), but accomplishes the feat in 10% of the floor space and uses a mere 7.4% of the exchange's electricity budget for cooling (48MW for HKSE, 1.2MW for BitFury).

So saving money is what it's all about. Let's see the system in action with 20 of Nvidia's finest.

Allied Control Immersion Cooling 20 Tesla GPUs

The Allied Control demo consisted of 20 immersion-cooled Nvidia Tesla GPUs and a dual-Xeon E5 server. The demo system draws 48kW, but the cooling system supports up to 60kW.

The company uses custom ASIC hardware and a special motherboard. The company replaced the standard GPU coolers with a 1mm copper plate that allows it to place the GPUs a mere 2.5mm apart. That's much slimmer than the normal dual-slot coolers we see on this class of GPU.

Allied Control Immersion Cooling Five Nvidia GeForce 1070s

So, we've seen the big version, but what if we could fit it on a desk? The Allied Control demo in Gigabyte's booth consisted of a single-socket Xeon-W system paired with six Nvidia GeForce 1070s. The GeForce GPUs were running under full load, yet stayed below 66C.

Although the immersion cooling tank could fit on the top of the platform, there was some additional equipment underneath. The cabinet was sealed off, though. In either case, we're sure most enthusiasts wouldn't have a problem sacrificing some space for this much bling on their desktop.

Anything You Can Do, OSS Can Do Bigger

One Stop Systems (OSS) holds a special place in our hearts. We put 32 3.2TB Fusion ioMemory SSDs head-to-head against 30 of Intel's NVMe DC P3700 SSDs in the FAS 200 chassis last year. That same core design, albeit with some enhancements in power delivery, can house up to 16 Nvidia Volta V100s in a single chassis. These robust systems are used for mobile data centers, such as for the military, along with supercomputing applications.

Altogether, 16 Volta GPUs wield 336 billion transistors and 13,440 CUDA cores, making this one densely packed solution for heavy parallel compute tasks. If your interests are more focused on heavy storage requirements, the company also offers a wide range of densely-packed PCIe storage enclosures.

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

germz1986 A few of these slides displayed ads instead of the content, or displayed a blank slide. Your website is becoming hard to read on due to all of the ads....Reply -

berezini the whole thing is advertisement, soon toms hardware will be giving out recommended products based on commission they get from sales.Reply -

FritzEiv Due to some technical issues, our normal company video player has some implementation issues when we use videos (instead of still images) in slideshows. We went in and replaced all of those with YouTube versions of the videos on the slides in question, and it looks to be working correctly now. I apologize for the technical difficulty.Reply

(I'd rather not turn the comments on this slideshow into an ad referendum. Please know that I make sure concerns about ads/ad behavior/ad density (including concerns I see mentioned in article comments) reach the right ears. You can feel free to message me privately at any time.) -

COLGeek Some amazing tech showcased here. Just imagine the possibilities. It will be interesting to ponder on the influences to the prosumer/consumer markets.Reply -

bit_user ReplyThe single-socket ThunderX2 system proved to be faster in OpenFOAM and NEMO tests, among several others.

Qualcomm's CPU has also posted some impressive numbers and, while I've no doubt these are on benchmarks that scale well to many cores, this achievement shouldn't be underestimated. I think 2017 will go down in the history of computing as an important milestone in ARM's potential domination of the cloud.

-

bit_user Reply

"bleeding edge" at least used to have the connotation of something that wasn't quite ready for prime-time - like beta quality - or even alpha. However, I suspect that's not what you mean to say, here.20403668 said:IBM's Power Systems AC922 nodes serve as the backbone of Summit's bleeding-edge architecture.

-

bit_user ReplyNEC is the last company in the world to manufacture Vector processors.

This is a rather funny claim.

I think the point might be that it's the last proprietary SIMD CPU to be used exclusively in HPC? Because SIMD is practically everywhere you look. It graced x86 under names like MMX, SSE, and now the AVX family of extensions. Even ARM has had it for more than a decade, and it's a mandatory part of ARMv8-A. Modern GPUs are deeply SIMD, hybridized with massive SMT (according to https://devblogs.nvidia.com/parallelforall/inside-volta/ a single V100 can concurrently execute up to 164k threads).

Anyway, there are some more details on it, here:

http://www.nec.com/en/global/solutions/hpc/sx/architecture.html

I don't see anything remarkable about it, other than how primitive it is by comparison with modern GPUs and CPUs. Rather than award it a badge of honor, I think it's more of a sign of the degree of government subsidies in this sector.

Both this thing and the PEZY-SC2 are eyebrow-raising in the degree to which they differ from GPUs. Whenever you find yourself building something for HPC that doesn't look like a modern GPU, you have to ask why. There are few cases I can see that could possibly justify using either of these instead of a V100. And for those, Xeon Phi stands as a pretty good answer for HPC workloads that demand something more closely resembling a conventional CPU. -

bit_user ReplyThe network infrastructure required to run a massive tradeshow may not seem that exciting, but Supercomputing 17's SCinet network is an exception.

Thanks for this - I didn't expect it to be so extensive, but then I didn't expect they'd have access to "3.63 Tb/s of WAN bandwidth" (still, OMG).

What I was actually wondering about was the size of friggin' power bill for this conference! Did they have to make any special arrangements to power all the dozens-kW display models mentioned? I'd imagine the time of year should've helped with the air conditioning bill.

-

cpsalias For more details on picture 27 - MicroDataCenter visit http://www.zurich.ibm.com/microserver/Reply -

Saga Lout Reply20420018 said:For more details on picture 27 - MicroDataCenter visit http://www.zurich.ibm.com/microserver/

Welcome to Tom's cpsalias. Your IP address points to you being an IBM employee and also got your Spammish looking post undeleted. :D

If you'd like to show up as an Official IBM rep., drop an e-mail to community@tomshardware.com.

Most Popular