Supercomputing 2018: EPYC, Immersion and Quantum Computing

Supercomputing 2018

This year the annual Supercomputing conference celebrated its 30th anniversary. The conference finds the brightest minds in HPC (High Performance Computing) descending from all around the globe to share the latest in bleeding-edge supercomputing technology. The six-day show is packed with technical breakout sessions, research paper presentations, tutorials, panels, workshops, and the annual student cluster competition.

The conference also features an expansive show floor brimming with the latest in high-performance computing technology. We traveled to Dallas, Texas to take in the sights and sounds of the show. Some of the demonstrations are simply mind-blowing. Let's take a look.

Immerse Your Desktop

3M is known for its Fluorinert fluid that's used for two-phase immersion-cooling systems in both data centers and HPC systems, but the company also had a compact demo system that contains a Core i7-7700K overclocked to 4.5 GHz on all cores and an Nvidia GTX 1080. The fluid is cooled by a surprisingly small unit that's connected via two tubes. The system was consuming ~250W during the demo, but the components inside were still well within safe operating temperatures. The compact system would look great on a desktop, but 3M has no plans to bring it to market.

Traveling To Milan With Volta-Next

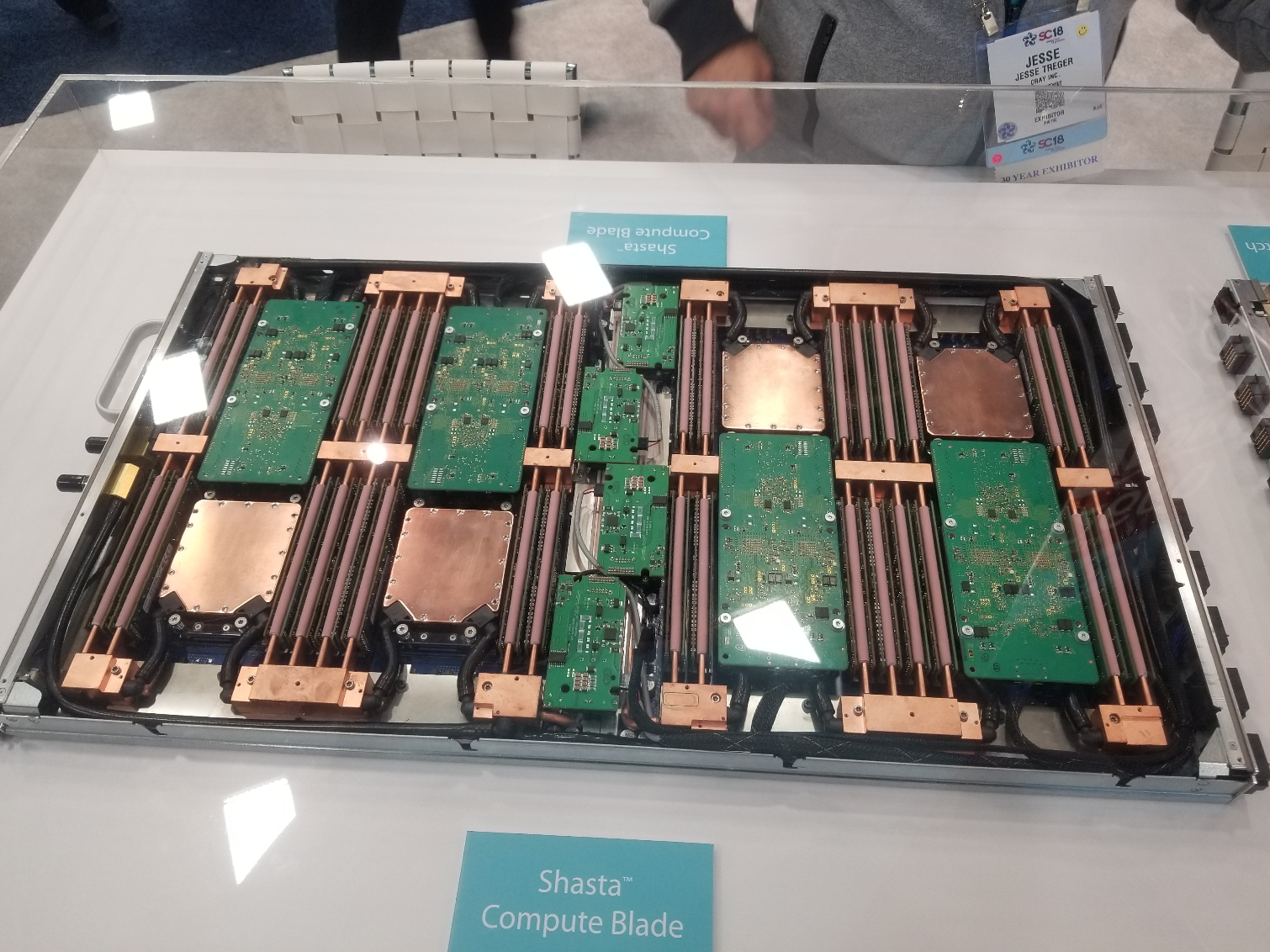

Cray's Shasta compute blade comes with multiple options for different types of compute, with the blade above wielding the power of eight of AMD's next-next-gen Milan processors crammed into one slim 1U chassis. This compute node is part of a larger system that includes GPU nodes and networking blades that all combine into one of the most powerful supercomputers on the planet.

The Department of Energy announced at Supercomputing 2018 that it had selected Cray's Shasta platform paired with Nvidia's "Volta-Next" GPUs and AMD's Milan processors to power its Perlmutter supercomputer. Those components will combine to create a pre-exascale machine that will be one of the fastest supercomputers in the world. The Department of Energy plans to deploy the Perlmutter supercomputer in 2020.

China Finds Zen

Sugon has a full-scale Nebula server rack that immersion cools individual compute trays that come bristling with four Nvidia Teslas and four CPUs apiece. This system is particularly interesting because it features the Chinese-manufactured Hygon Dhyana chips, which are licensed from AMD and are nearly a carbon copy of its EPYC processors.

Immersion cooling provides unparalleled performance density, but it requires specialized infrastructure to handle phase-change cooling equipment. Sugon's solution hosts 42 compute blades and comes with a secondary cabinet that includes the phase-change cooling equipment. As you can see in the video above, the sleds come with a quick-release connection that allows for near-hot-pluggable nodes without dealing with complicated tubing connections. Sugon's various HPC systems occupy 57 of the 500 listings on the 2018 TOP500 list of supercomputers.

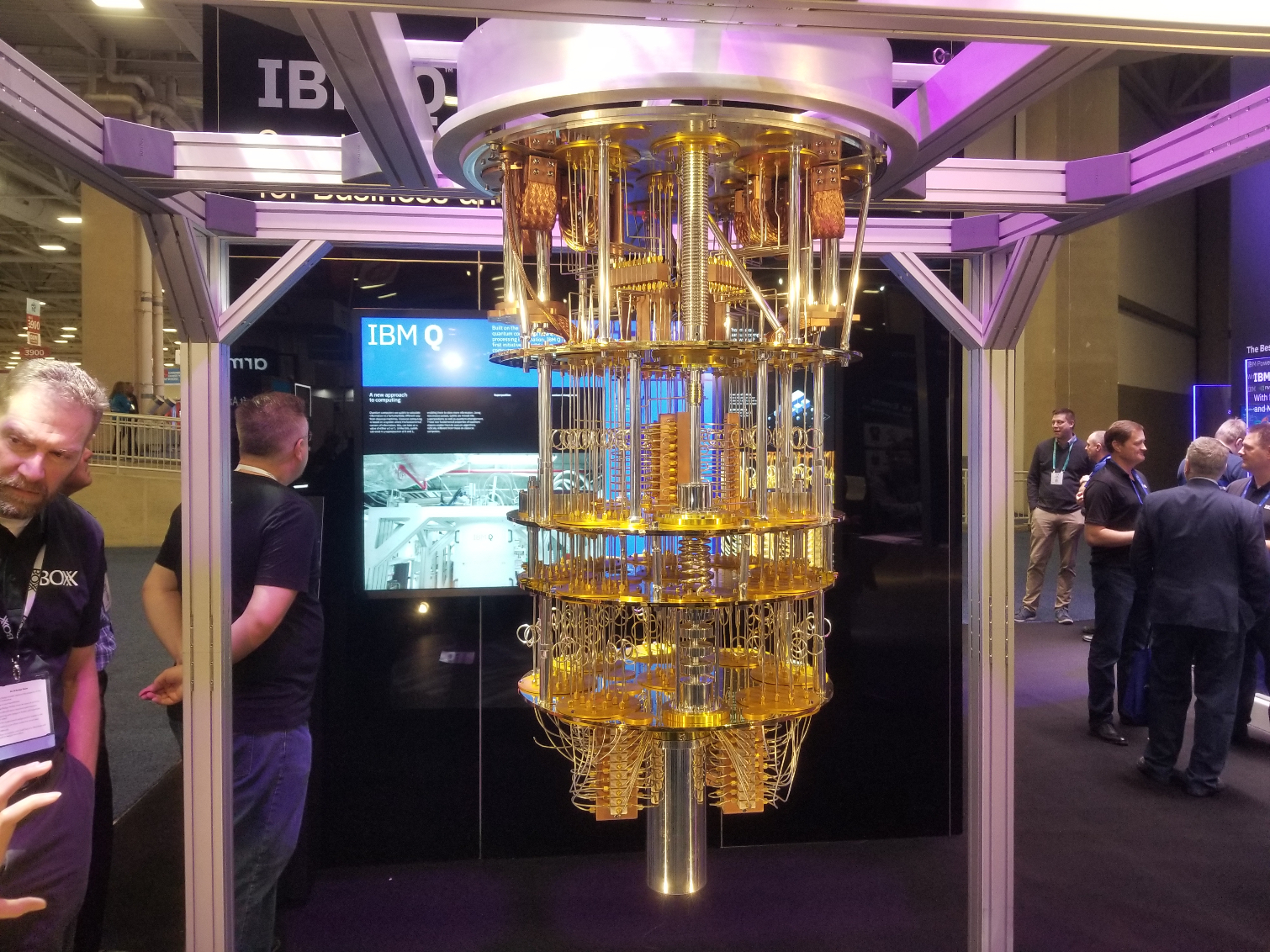

IBM's Quantum Computer

IBM's 50-qubit quantum computer made an appearance. This chandelier-looking device cools the qubits, which are held on a wafer in the canister at the bottom of the device. The company uses helium-3 and helium-4 to cool the uppermost layer of the assembly to 3 Kelvin, and each successive stage is progressively colder toward the bottom of the device.

The silver canister at the bottom of the device falls to a mere 0.15 millikelvins, making it the coldest entity in the universe while the machine is operational. That extreme cold enables the device to churn out enough computational power to rank within the top ten supercomputers in the world, but the power is short-lived: the device only computes for 100 milliseconds. As IBM improves the device, each additional qubit doubles the size of the state space the machine can work with, so capability grows exponentially with qubit count. By that reasoning, adding one more qubit would make the device as powerful as two of the top ten supercomputers.
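The doubling behind that "exponential boost" can be illustrated with the size of the state vector a classical machine would need to simulate the qubits: n qubits require 2^n complex amplitudes. The sketch below is only an illustration of that scaling, not a measure of real-world performance. The function names are hypothetical, and the 16 bytes per amplitude assumes double-precision complex numbers.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per amplitude


def state_vector_amplitudes(num_qubits: int) -> int:
    """Return the number of complex amplitudes in an n-qubit state vector (2**n)."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return 2 ** num_qubits


def state_vector_bytes(num_qubits: int) -> int:
    """Return the memory needed to store the full state vector classically."""
    return state_vector_amplitudes(num_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (49, 50, 51):
        pebibytes = state_vector_bytes(n) / 2 ** 50
        print(f"{n} qubits: {state_vector_amplitudes(n):,} amplitudes, "
              f"{pebibytes:.0f} PiB to store")
```

Going from 50 to 51 qubits doubles both numbers, which is the sense in which one extra qubit is said to double the machine.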

Epyc Everywhere

AMD took tentative steps into last year's Supercomputing conference with its EPYC Naples processors, but this year the company broke out into a run. EPYC-powered servers made an appearance at nearly every motherboard vendor, but more importantly, they were also present at several major OEMs. Both HPE and Dell had full servers on display, reminding us that the EPYC invasion into the data center is real. Other wins, such as the Perlmutter supercomputer we covered earlier, are for future supercomputers. That tells us that EPYC is here to stay for the long run.

Four TPUs in a Pod

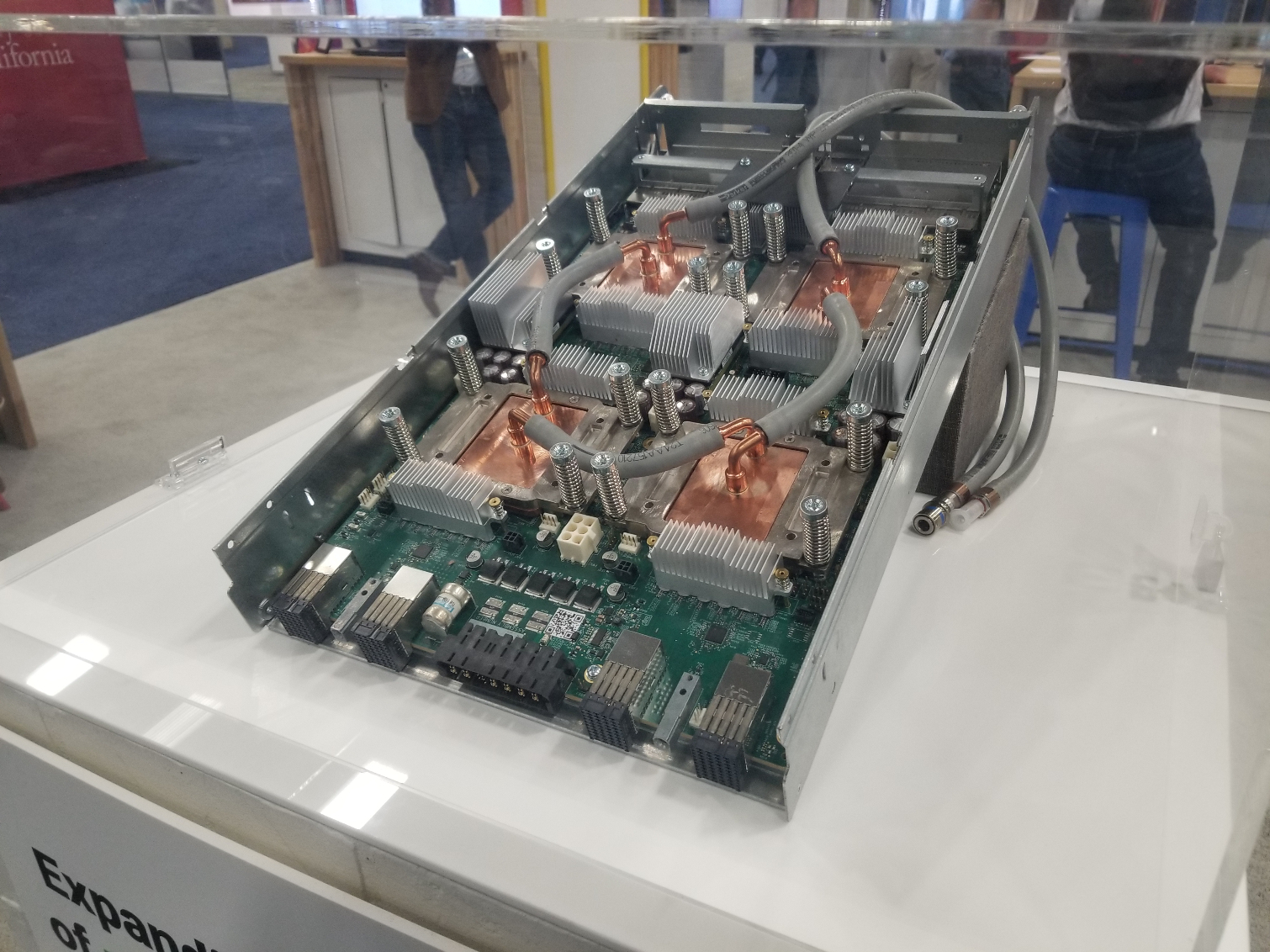

Google announced its latest TPU (Tensor Processing Unit) v3 earlier this year. Google specifically designed these custom ASICs to handle training and inference workloads. The newest version comes water-cooled and slides into massive installations called pods. It is thought that over 1,024 TPU v3 processors will come together to form a single pod. Google claims a full pod can deliver well over one hundred petaflops of performance.

Google isn't sharing many specifics of the v3 models, though we do know they can deliver 420 teraflops of performance and come with 128GB of HBM.
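The pod-level claim can be sanity-checked against the per-device figures with a little arithmetic. This is a rough sketch under one loudly stated assumption: that the 420-teraflop and 128GB figures describe a four-chip TPU v3 board rather than a single chip. The constant and function names are hypothetical.

```python
TFLOPS_PER_BOARD = 420   # figure quoted in the article
HBM_GB_PER_BOARD = 128   # figure quoted in the article
CHIPS_PER_BOARD = 4      # assumption: the quoted figures are for a four-chip board
CHIPS_PER_POD = 1024     # pod size suggested in the article


def boards_per_pod(chips_per_pod: int = CHIPS_PER_POD,
                   chips_per_board: int = CHIPS_PER_BOARD) -> int:
    """Number of boards needed to house the pod's chips."""
    boards, remainder = divmod(chips_per_pod, chips_per_board)
    if remainder:
        raise ValueError("chips_per_pod must be a multiple of chips_per_board")
    return boards


def pod_petaflops() -> float:
    """Aggregate peak throughput of the pod in petaflops."""
    return boards_per_pod() * TFLOPS_PER_BOARD / 1000


def pod_hbm_gb() -> int:
    """Aggregate HBM capacity of the pod in gigabytes."""
    return boards_per_pod() * HBM_GB_PER_BOARD


if __name__ == "__main__":
    print(f"{boards_per_pod()} boards, {pod_petaflops():.2f} petaflops, "
          f"{pod_hbm_gb():,} GB of HBM")
```

Under that assumption, 256 boards work out to roughly 107.5 petaflops, which is consistent with Google's "well over one hundred petaflops" claim.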

Nvidia T4

Nvidia's T4 makes an appearance in several of Supermicro's newest servers, but we expect these speedy AI inference GPUs to experience broad uptake in the data center. The T4 comes bearing the same Turing architecture as Nvidia's GeForce RTX 20-series gaming graphics cards, but is designed for neural networks that process video, speech, search engines, and images.

The Tesla T4 GPU comes equipped with 16GB of GDDR6 that provides up to 320GB/s of bandwidth, 320 Turing Tensor cores, and 2,560 CUDA cores. The T4 features 40 SMs enabled on the TU104 die to optimize for the 75W power profile.
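Dividing the quoted totals by the SM count gives the per-SM layout. The snippet below is a minimal sketch that uses only the figures from the paragraph above; the helper name is hypothetical.

```python
CUDA_CORES = 2560    # from the article
TENSOR_CORES = 320   # from the article
ENABLED_SMS = 40     # from the article


def units_per_sm(total_units: int, sm_count: int) -> int:
    """Divide a GPU-wide unit count evenly across its streaming multiprocessors."""
    per_sm, remainder = divmod(total_units, sm_count)
    if remainder:
        raise ValueError("unit count does not divide evenly across SMs")
    return per_sm


if __name__ == "__main__":
    print("CUDA cores per SM:", units_per_sm(CUDA_CORES, ENABLED_SMS))
    print("Tensor cores per SM:", units_per_sm(TENSOR_CORES, ENABLED_SMS))
```

That works out to 64 CUDA cores and eight Tensor cores per SM.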

The GPU supports mixed-precision computation across FP32, FP16, and INT8. The Tesla T4 also features INT4 and (experimental) INT1 precision modes, which is a notable advancement over its predecessor, the P4.

Allied Control demo

The Allied Control demo consisted of 20 immersion-cooled Nvidia RTX 2080s and a dual-Xeon E5 server. The company uses custom ASIC hardware and a special motherboard. The company replaced the standard GPU coolers with a 1mm copper plate that allows it to place the GPUs a mere 2.5mm apart. That's much slimmer than the normal dual-slot coolers we see on this class of GPU.

PCIe 4 Can Go Anywhere

One Stop Systems (OSS) designs external GPU and flash storage arrays for a variety of markets that span from military applications to data centers and HPC deployments. We've even tested one of the company's products when we put 32 3.2TB Fusion ioMemory SSDs head-to-head against 30 of Intel's NVMe DC P3700 SSDs.

But the industry moves on, and faster PCIe 4.0 connections between hefty enclosures with multiple GPUs and storage devices will double throughput to the host. OSS designed what it claims is the world's first PCIe 4.0 cable adapter. The HIB616-x16 Host Bus Adapter supports an optical cable that attaches to a standard Mini-SAS HD connector repurposed to carry a PCIe signal. The connector then slides into the four-port host bus adapter, with each port providing an x4 connection between the two devices. The OSS adapter also works with standard copper cable, but that limits cable length to six meters.
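The doubling is easy to see from the raw link rates. The sketch below estimates per-direction bandwidth from the per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding that both generations use. It ignores packet and protocol overhead, so real-world throughput will be lower. The function name is hypothetical, and treating the four x4 ports as one aggregate x16 link is an assumption based on the adapter's name.

```python
# (transfer rate per lane in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def link_bandwidth_gb_per_s(generation: str, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    rate_gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # One transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_per_s * efficiency * lanes / 8


if __name__ == "__main__":
    per_port = link_bandwidth_gb_per_s("4.0", 4)
    print(f"PCIe 4.0 x4 port:  {per_port:.2f} GB/s")
    print(f"Four x4 ports:     {4 * per_port:.2f} GB/s")
    print(f"PCIe 3.0 x16 link: {link_bandwidth_gb_per_s('3.0', 16):.2f} GB/s")
```

Four PCIe 4.0 x4 ports together come to about 31.5 GB/s in each direction, twice what a PCIe 3.0 x16 link provides.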

Intel Does MCM, Too.

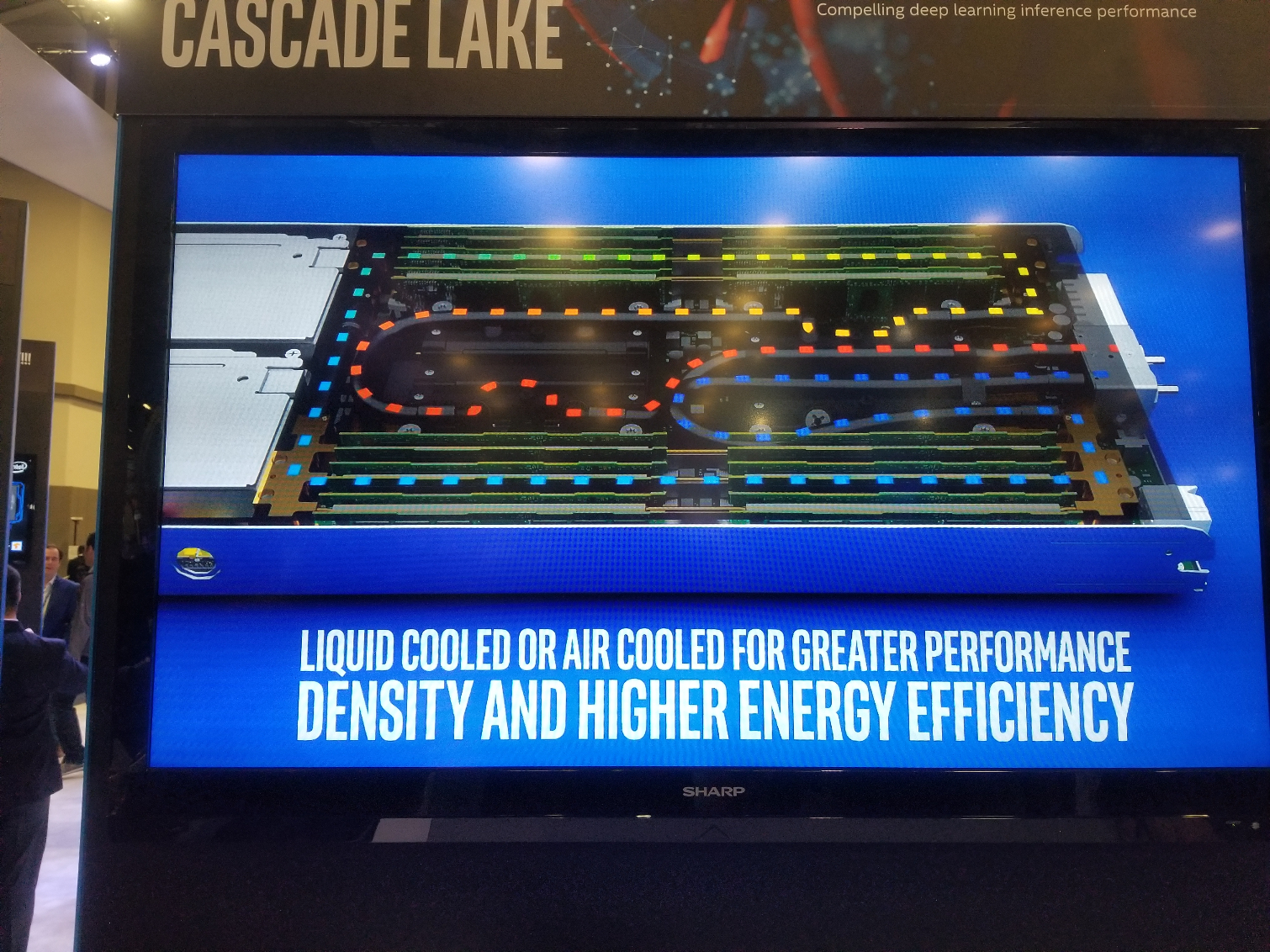

Intel's Cascade Lake-AP (Advanced Performance) processors made waves when the company announced them a few days before the Supercomputing conference, but details were scarce. Intel did disclose that the new chips will come with a Multi-Chip Module (MCM) design, meaning they will have more than one die inside a single processor package, similar to AMD's chips.

During the show, we learned from several sources that Intel's top-end Platinum -AP models, which will reportedly stretch up to 350W, will require liquid cooling for stock operation. Meanwhile, lower-end models with more relaxed TDP ratings will still only require air cooling, though water is an option.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.