Modder crams LLM onto Raspberry Pi Zero-powered USB stick, but it isn't fast enough to be practical

Local LLM on a budget.

Local LLM usage is on the rise, and with many people setting up PCs or other systems to run models themselves, the idea of relying on an LLM hosted on a server somewhere in the cloud is starting to look outmoded.

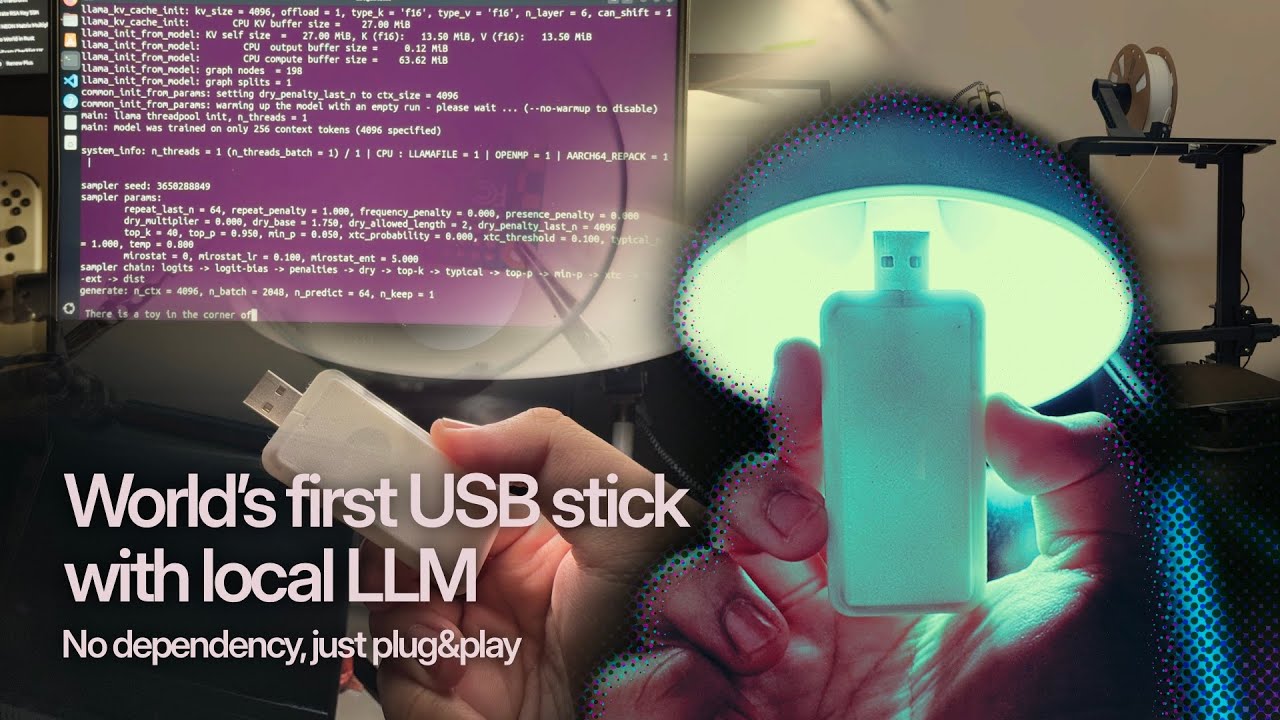

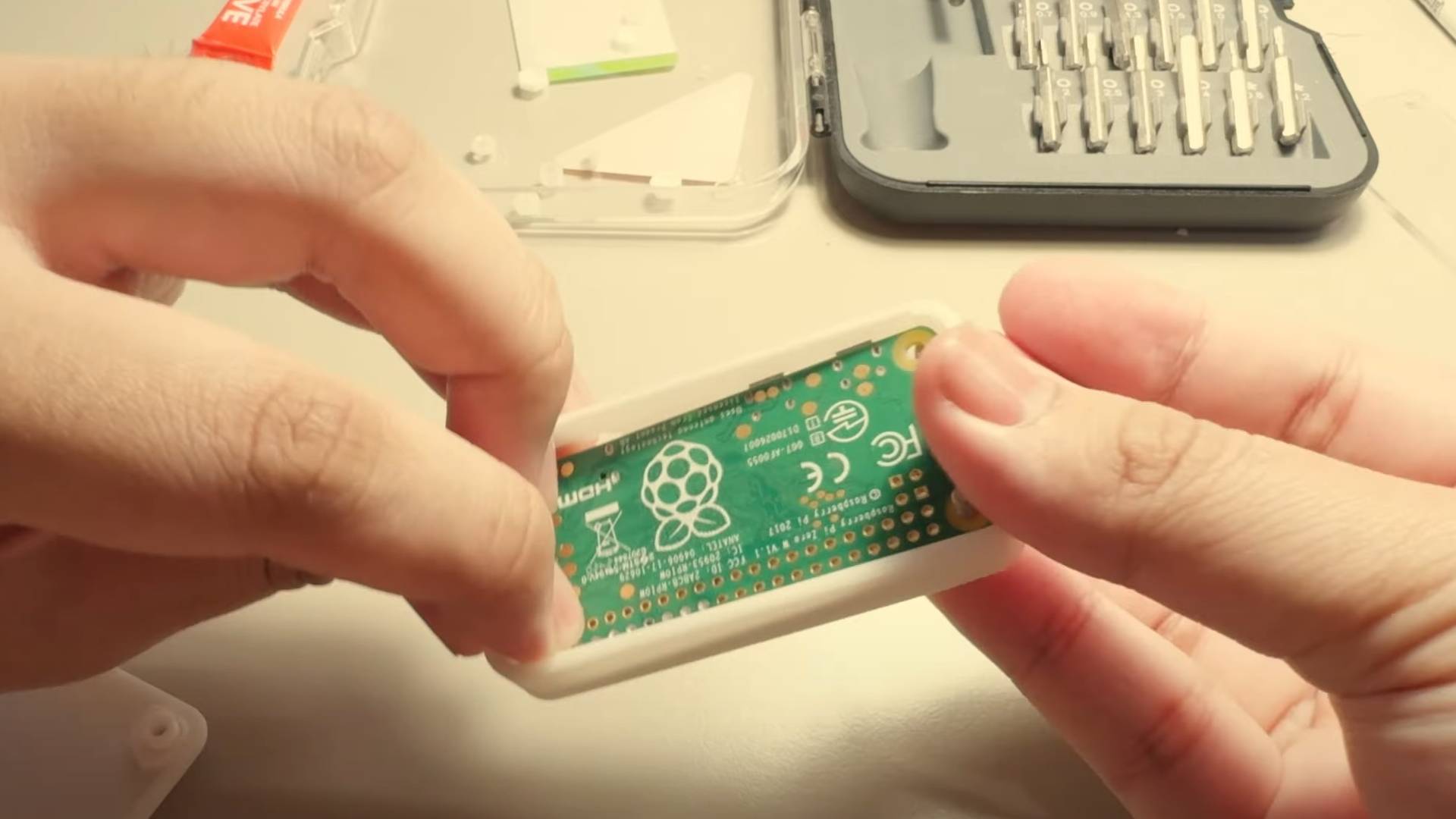

Binh Pham experimented with a Raspberry Pi Zero, effectively turning the device into a small USB drive that can run an LLM locally with no extras needed. The project was made possible largely by llama.cpp and llamafile: an open-source inference engine for running LLMs and a format that packages a model and its runtime into a single executable file, which together offer a lightweight chatbot experience offline.

But because the Pi Zero is eight years old, it wasn't as simple as packing llama.cpp onto the board and getting it to run. First, Pham mounted the device onto a USB interface and 3D printed a shell for it.

With the hardware sorted, the project grew more complex because of the Pi Zero W's 512MB of RAM. When Pham tried to build llama.cpp on the device, it failed to compile, and no one else appeared to have attempted a llama.cpp build on a Pi Zero or Pi 1.

The root of the problem was the Pi Zero's CPU, which uses the older ARMv6 architecture. To get around this, he had to knuckle down and strip out llama.cpp's ARMv8-specific instructions, along with any annotations or optimizations that assume modern hardware.

With the llama.cpp source code edited to run on the Pi Zero, Pham turned to the software side of the stick, aiming to make the experience as seamless as possible.

The stick is built around submitting text files, each of which serves as the main prompt for the LLM. Pham's implementation generates a story based on the text prompt and writes it back out as a file populated with the generated output.
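The file-in, file-out flow described above can be sketched in a few lines of Python. This is only an illustration, not Pham's code: the function names `fill_prompt_file` and `placeholder_generate` are hypothetical, the model call is replaced by a stand-in, and keeping the prompt at the top of the finished file is an assumption.

```python
import tempfile
from pathlib import Path
from typing import Callable


def fill_prompt_file(path: Path, generate: Callable[[str], str]) -> str:
    """Read a prompt from a text file and write the generated story back to it.

    `generate` is any function mapping a prompt string to generated text.
    On the real stick this role is played by the model; here it is injected
    so the sketch runs without one.
    """
    prompt = path.read_text(encoding="utf-8").strip()
    story = generate(prompt)
    # Assumption: the finished file keeps the prompt, followed by the output.
    path.write_text(f"{prompt}\n\n{story}\n", encoding="utf-8")
    return story


def placeholder_generate(prompt: str) -> str:
    """Hypothetical stand-in for the LLM; it only echoes the prompt."""
    return f"[generated story for: {prompt}]"


if __name__ == "__main__":
    # Demonstrate the round trip in a temporary directory.
    with tempfile.TemporaryDirectory() as tmp:
        prompt_file = Path(tmp) / "dragon.txt"
        prompt_file.write_text("A dragon who is afraid of fire", encoding="utf-8")
        fill_prompt_file(prompt_file, placeholder_generate)
        print(prompt_file.read_text(encoding="utf-8"))
```

Passing the generator in as a parameter keeps the file handling separate from whatever actually produces the text, so the same sketch would work with any backend.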

With the token limit set to 64, he benchmarked several models ranging from 15M to 136M parameters. The Tiny15M model achieved 223ms per token, while the two larger models were far slower: Lamini-T5-Flan-77M took 2.5s per token, and SmolLM2-136M took 2.2s per token.
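To put those figures in perspective, the short Python sketch below multiplies each reported per-token time by the 64-token limit. It assumes every token costs the same and ignores any time spent reading the prompt, so the results are rough estimates rather than measurements. The function name `full_response_seconds` is made up for this example.

```python
# Per-token latencies reported in the article, in seconds.
SECONDS_PER_TOKEN = {
    "Tiny15M": 0.223,
    "Lamini-T5-Flan-77M": 2.5,
    "SmolLM2-136M": 2.2,
}
TOKEN_LIMIT = 64  # token limit used for the benchmarks


def full_response_seconds(seconds_per_token: float, tokens: int = TOKEN_LIMIT) -> float:
    """Estimate the time to generate `tokens` tokens at a constant per-token cost."""
    return seconds_per_token * tokens


if __name__ == "__main__":
    for model, latency in SECONDS_PER_TOKEN.items():
        total = full_response_seconds(latency)
        rate = 1 / latency  # tokens generated per second
        print(f"{model}: about {total:.1f} s for {TOKEN_LIMIT} tokens ({rate:.2f} tokens/s)")
```

Under these assumptions, a full 64-token response takes roughly 14 seconds on Tiny15M and well over two minutes on either of the larger models.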

At these token speeds, the stick is too slow for many practical applications. While it's an interesting project, running a local LLM on older, lightweight hardware might not offer much practical use. Instead, you might want to run a much more capable model on newer hardware, such as DeepSeek on a Raspberry Pi 5.

Sayem Ahmed is the Subscription Editor at Tom's Hardware. He covers a broad range of deep dives into hardware both new and old, including the CPUs, GPUs, and everything else that uses a semiconductor.