Full Review of NVIDIA's GeForce2 MX

Introduction

With much of NVIDIA's competition still scrambling to its feet after the GeForce2 GTS release, it seems that another knockout blow is about to land. The flurry of product releases from NVIDIA has kept most of the competing graphics companies rolling with the punches while it was brewing up yet another surgical strike, the GeForce2 MX. This product may seem harmless at first glance, as it's only a low-cost consumer solution, but don't let that fool you. NVIDIA isn't just using this GeForce2 derivative as an inexpensive chip to fill in for the soon-to-be-extinct TNT2 family. It also wants this piece of hardware to take over that segment of the market by setting new performance standards for low-cost solutions and, on top of all this, to inject the mainstream with a GPU that is T&L ready. The big question is: does it truly perform as well as NVIDIA claims? Armed with my reference GeForce2 MX and the 5.30 Detonator drivers, I'm ready to find out.

Before we move into further detail about the GeForce2 MX, let's take a peek at NVIDIA's new product line-up for a better understanding of the big picture. NVIDIA has already established a strong lead with the GeForce2 GTS line of products since its release 2 months ago (see Tom's Take on NVIDIA's New GeForce2 GTS). The GeForce2 GTS holds the high-end position and will replace the GeForce line of products. Although NVIDIA had this segment covered, that left a big question mark over the rest of the market, which covers the majority of consumers. The TNT2 was assigned to cover this area, but it didn't dominate the way its big brother the GeForce2 did, and it left a huge untapped opportunity open. This is where the GeForce2 MX comes into play. It replaces the TNT2 family of products as the mainstream solution. The chipset has various configuration options that let manufacturers target several key areas, ranging from an extremely low-cost board to a mid-range multimedia offering and possibly mobile products. Let's begin our detailed analysis of this promising chipset.

The Chip

The GeForce2 MX (MX standing for Multi-transmitter) chip is based on the GeForce2 GTS but tweaked for versatility, price and mainstream consumer needs. It is built on the same 0.18 micron process as the GeForce2 GTS but is clocked slower and has some architectural differences. These differences help cut power consumption and cost, but at the same time they take away from the performance of the GPU. If you take a look at both rendering engines, you'll immediately see a big difference between the two. First, let's look at the GeForce2 GTS.

As you already know, the GF2 GTS has four rendering pipelines with two texturing units each, which combine into a very powerful rendering engine. These chips are clocked at 200 MHz and in theory are able to achieve 800 Mpixels/sec or 1.6 Gtexels/sec.

Now for the GeForce2 MX. First and foremost, this GPU does indeed have fully functional hardware T&L and will be the first mainstream chip to have it. The T&L unit is the same unit found in the GeForce2 GTS but running at a slower speed. Taking a closer look at the GeForce2 MX rendering engine, you'll notice that it has only two pipes. Both are still capable of applying two texels per clock, but per clock the chip already delivers only half the fill rate of the GeForce2 GTS. To top that off, the GeForce2 MX chips will also be clocked at 175 MHz compared to the 200 MHz of the GeForce2 GTS. The chip does, however, retain several of the valuable features of the GeForce2 GTS, like 4X AGP, the NVIDIA Shading Rasterizer (NSR) and the High Definition Video Processor (HDVP). It was noted that the GeForce2 MX HDVP is slightly inferior to that of the GeForce2 GTS, as it doesn't cover some of the higher-end formats.
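To put numbers on that gap, here is a quick back-of-the-envelope sketch of the theoretical fill rates, using only the pipeline counts, texture units and core clocks quoted above. The function name `fill_rates` is just an illustrative helper of mine, and these are peak figures that ignore memory bandwidth entirely.

```python
def fill_rates(pipelines, texture_units_per_pipe, core_clock_mhz):
    """Return the theoretical peak (Mpixels/sec, Mtexels/sec).

    Each pipeline outputs one pixel per clock, and each texture unit
    applies one texel per clock, so both figures scale with the clock.
    """
    mpixels = pipelines * core_clock_mhz
    mtexels = mpixels * texture_units_per_pipe
    return mpixels, mtexels


# Figures quoted in the text: 4 pipes at 200 MHz vs. 2 pipes at 175 MHz,
# two texture units per pipe on both chips.
gts_pixels, gts_texels = fill_rates(4, 2, 200)
mx_pixels, mx_texels = fill_rates(2, 2, 175)

print(f"GeForce2 GTS: {gts_pixels} Mpixels/sec, {gts_texels} Mtexels/sec")
print(f"GeForce2 MX:  {mx_pixels} Mpixels/sec, {mx_texels} Mtexels/sec")
print(f"MX relative to GTS: {mx_pixels / gts_pixels:.1%}")
```

On paper, then, the MX comes out at 350 Mpixels/sec and 700 Mtexels/sec, a bit under half of what the GTS can theoretically push.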

From here things can vary, as the GeForce2 MX will be available in a couple of memory configurations. The memory interface can range from 64 bit to 128 bit depending on the manufacturer's needs, and either SDR or DDR memory can be used. Frame buffer sizes can vary between 8 and 64 MB, but 32 MB configurations will probably be most common.

Costs will obviously drop if the narrow 64 bit SDR memory is used, but that also greatly hurts performance. However, NVIDIA told us that 64 bit configurations should automatically be equipped with DDR SDRAM, which makes up for the narrower data path by transferring data twice per memory clock. Our reference board is based on 128 bit SDRAM clocked at 166 MHz (giving us a theoretical limit of 2.7 GB/sec), so we can only imagine how bad the performance can get if a 64 bit SDR configuration should be used.
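The bandwidth arithmetic behind those statements is simple enough to sketch. The helper `memory_bandwidth_gb_s` below is my own illustration, and note the assumption: only the reference board's 166 MHz clock is known, so the 64 bit examples simply reuse that clock for comparison. Actual boards may well ship with different memory speeds.

```python
def memory_bandwidth_gb_s(bus_width_bits, clock_mhz, transfers_per_clock=1):
    """Theoretical peak memory bandwidth in GB/sec (1 GB = 1000 MB).

    transfers_per_clock is 1 for SDR and 2 for DDR memory.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_mhz * transfers_per_clock / 1000


# Reference board from the text: 128 bit SDR at 166 MHz.
reference = memory_bandwidth_gb_s(128, 166)

# ASSUMPTION: the same 166 MHz clock on 64 bit boards, for comparison only.
narrow_sdr = memory_bandwidth_gb_s(64, 166)
narrow_ddr = memory_bandwidth_gb_s(64, 166, transfers_per_clock=2)

print(f"128 bit SDR: {reference:.2f} GB/sec")
print(f" 64 bit SDR: {narrow_sdr:.2f} GB/sec")
print(f" 64 bit DDR: {narrow_ddr:.2f} GB/sec")
```

At equal clocks, a 64 bit DDR setup matches the 128 bit SDR reference board on paper, while 64 bit SDR is left with half the bandwidth.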

What's New?

A couple of features were added to target the needs of mainstream users and give additional value on top of the high performance graphics engine. I'm referring to TwinView and Digital Vibrance Control.

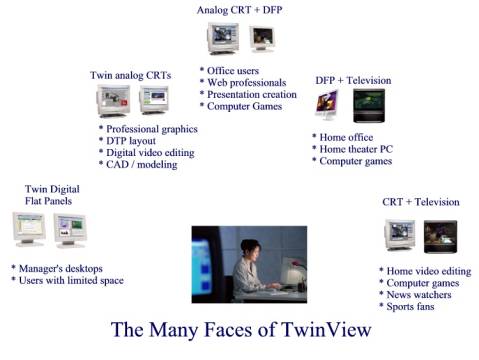

TwinView is similar to the DualHead concept from Matrox in that it allows users to drive multiple output devices at once. You can have various combinations of a CRT, a Digital Flat Panel (DFP) and TV-out. The only limitation that I noticed was that you cannot do dual TV output. NVIDIA's main claim to fame over Matrox is the ability to drive two digital displays simultaneously, thanks to a Dual Link Transition Minimized Differential Signaling (TMDS) transmitter. Keep in mind that this feature is still limited by the type of outputs that the given board has. If you don't have a DFP connector or a TV-out on your board, you can't possibly use them. This is probably welcome news to those who actually need a dual output graphics card, as the G400 series from Matrox are nice cards but don't necessarily meet everyone's 3D performance standards.

Above are some examples of the TwinView configurations.
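As a rough illustration of how the board's connectors limit your options, the sketch below lists the display pairs a given board could drive. It is based purely on the rules described above (any two outputs the board physically has, except two TVs). The function `twinview_pairs` and the connector labels are hypothetical names of mine, not anything from NVIDIA's drivers.

```python
from itertools import combinations


def twinview_pairs(board_connectors):
    """List the distinct display pairs a board could drive at once.

    board_connectors is a list of the physical outputs on the board,
    e.g. ["CRT", "DFP", "TV"]. A pair needs two separate connectors,
    and dual TV output is excluded, as noted in the text.
    """
    pairs = set()
    for a, b in combinations(board_connectors, 2):
        pair = tuple(sorted((a, b)))
        if pair != ("TV", "TV"):
            pairs.add(pair)
    return sorted(pairs)


# A board with one connector of each type:
print(twinview_pairs(["CRT", "DFP", "TV"]))

# A board with two digital outputs:
print(twinview_pairs(["DFP", "DFP"]))

# A board with nothing but a single CRT connector has no dual-display options:
print(twinview_pairs(["CRT"]))
```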

Digital Vibrance Control gives the user the ability to digitally control the saturation of images. This feature was added so that users can adjust color saturation much like the color control on a television set. I'm not really convinced that this feature adds much over the standard gamma controls that we're used to, but it may come in handy for fine-tuning visuals on flat panels.