G-Sync Technology Preview: Quite Literally A Game Changer

You've always faced this dilemma: disable V-sync and live with image tearing, or turn V-sync on and tolerate the annoying stutter and lag. Nvidia promises to make that question obsolete with a variable refresh rate technology we're previewing today.

Testing G-Sync Against V-Sync Disabled

I'm basing this on an unofficial poll of Tom's Hardware writers and friends I can easily reach over Skype (in other words, the sample size is small), but almost everyone who understands what V-sync is and what it compromises appears to turn it off. They only turn it back on when running with V-sync disabled becomes unbearable because of the tearing you see when the frames coming from your GPU don't line up with the panel's refresh cycle.
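For readers who want to see why a tear lands where it does, here's a minimal Python sketch. It assumes an idealized panel that scans out top to bottom at a constant rate with no blanking interval, and a GPU that flips buffers the instant each frame finishes (V-sync off). The function name `tear_lines` and the example numbers are our own hypothetical choices, not part of FCAT or any Nvidia tool.

```python
from fractions import Fraction


def tear_lines(frame_times_ms, refresh_hz, panel_rows):
    """Return (refresh_index, row) for every buffer flip that lands mid-scan.

    Idealized model (our assumption): scanout starts at t = 0, sweeps from
    row 0 to the bottom over exactly one refresh interval, and has no
    blanking period. refresh_hz must be an integer so the interval stays exact.
    """
    interval_ms = Fraction(1000, refresh_hz)  # e.g. 50/3 ms at 60 Hz
    tears = []
    for t in map(Fraction, frame_times_ms):
        refresh_index = t // interval_ms          # which scan is in progress
        phase = (t % interval_ms) / interval_ms   # fraction of the scan already drawn
        row = int(phase * panel_rows)             # row where the new frame takes over
        if row > 0:  # a flip exactly on a refresh boundary produces no tear
            tears.append((refresh_index, row))
    return tears


# Hypothetical case: a GPU finishing a frame every 20 ms (50 FPS) on a 60 Hz, 1080-row panel.
print(tear_lines([20, 40, 60, 80, 100], 60, 1080))
# prints [(1, 216), (2, 432), (3, 648), (4, 864)]
```

Because 50 FPS and 60 Hz drift relative to each other, the tear steps down the screen by a fifth of the panel's height every refresh. The flip at 100 ms happens to coincide with a refresh, so in this model it produces no tear at all.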

As you might imagine, then, the visual impact of running with V-sync disabled is unmistakable, though it depends heavily on the game you're playing and the detail settings you use.

Take Crysis 3, for example. It's easy to hammer your graphics subsystem with the taxing Very High preset. And because Crysis is a first-person shooter involving plenty of fast motion, the tears you see can be quite substantial. In the example above, FCAT output captured between two frames shows the branches of the tree completely disjointed.

On the other hand, when we force V-sync off in Skyrim, the tearing isn't nearly as bad. Our frame rate is insanely high, so multiple frames show up on-screen during each display scan, and the amount of motion per frame is relatively low. There are still issues with playing Skyrim like this, so it's probably not the optimal configuration. But it goes to show that even running with V-sync turned off yields a varying experience.
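The Skyrim observation comes down to simple arithmetic. The rough Python sketch below assumes a steady frame rate, uniform on-screen motion, and an idealized panel; `tear_profile` and the 2,400 px/s pan speed are hypothetical values for illustration, not measurements from our test runs.

```python
def tear_profile(fps, refresh_hz, pan_speed_px_per_s):
    """Idealized V-sync-off estimate (assumes a steady frame rate and
    uniform on-screen motion).

    Returns:
      flips_per_scan: average number of buffer flips landing in one scanout;
                      each flip is a potential tear line (below 1.0, some
                      scans have no tear at all)
      offset_px:      how far a moving edge jumps across each tear line
    """
    flips_per_scan = fps / refresh_hz
    offset_px = pan_speed_px_per_s / fps
    return flips_per_scan, offset_px


# Hypothetical numbers: a 2,400 px/s camera pan on a 60 Hz panel.
for fps in (40, 300):
    flips, offset = tear_profile(fps, 60, 2400)
    print(f"{fps:>3} FPS: {flips:.2f} tears per scan, {offset:.1f} px jump at each")
```

With these made-up numbers, 40 FPS on a 60 Hz panel produces a tear in roughly two of every three scans, and each one shears a moving edge by 60 pixels. At 300 FPS there are five tears per scan, but each is only 8 pixels, which is why a very high frame rate makes tearing far less objectionable.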

Tomb Raider provides a third example, with Lara's shoulder severely misaligned (also look at her hair and tank top strap). Incidentally, Tomb Raider is one of the only games in our suite that lets you choose between double- and triple-buffering when you use V-sync.

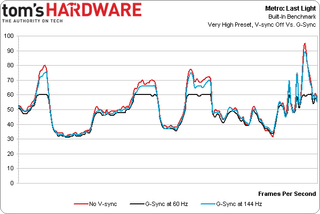

A final chart shows that running Metro: Last Light with G-Sync enabled at 144 Hz gives you essentially the same performance as running the game with V-sync turned off. What you can't see is that there is no tearing. On a 60 Hz screen, the technology caps you at 60 FPS, though there is still no stuttering or input lag.
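The difference between a fixed refresh with V-sync and a variable refresh can be sketched in a few lines of Python. This is a simplified model under our own assumptions, not Nvidia's implementation: with V-sync, a finished frame waits for the next fixed refresh boundary; with variable refresh, the panel scans out as soon as the frame is ready, but never sooner than its minimum interval (1000 divided by the maximum refresh rate, in ms). Both function names are hypothetical.

```python
import math
from fractions import Fraction


def vsync_wait_ms(ready_ms, refresh_hz):
    """Fixed refresh with V-sync: each finished frame is held until the next
    refresh boundary. Returns how long each frame waits, in ms.

    Assumes frames arrive slower than the refresh rate, so no two frames
    compete for the same refresh.
    """
    interval = Fraction(1000, refresh_hz)
    waits = []
    for t in map(Fraction, ready_ms):
        next_refresh = math.ceil(t / interval) * interval
        waits.append(next_refresh - t)
    return waits


def variable_refresh_wait_ms(ready_ms, max_refresh_hz):
    """Idealized variable refresh: the panel scans out as soon as a frame is
    ready, but no sooner than one minimum interval after the previous scan."""
    min_interval = Fraction(1000, max_refresh_hz)
    waits, last_scan = [], None
    for t in map(Fraction, ready_ms):
        scan = t if last_scan is None else max(t, last_scan + min_interval)
        waits.append(scan - t)
        last_scan = scan
    return waits


ready = [20, 40, 60, 80, 100]  # hypothetical completion times (50 FPS)
print([round(float(w), 1) for w in vsync_wait_ms(ready, 60)])
# prints [13.3, 10.0, 6.7, 3.3, 0.0]
print([round(float(w), 1) for w in variable_refresh_wait_ms(ready, 144)])
# prints [0.0, 0.0, 0.0, 0.0, 0.0]
```

At 50 FPS, the fixed 60 Hz V-sync model holds frames for an uneven 13.3, 10, 6.7, 3.3 and 0 ms, which shows up as stutter, while the variable-refresh model (144 Hz maximum) displays each frame immediately. Feed the variable-refresh function frames every 10 ms with a 60 Hz maximum, though, and the waits grow (0, 6.7, 13.3, 20 ms) because the panel can't scan out more than once every 16.7 ms; that ceiling is what caps a 60 Hz G-Sync screen at 60 FPS.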

At any rate, for those of you (and us) who've spent countless hours watching the same benchmark sequences over and over, this is what we're used to. This is how we measure the absolute performance of graphics cards. So it can be a little jarring to watch the same passages with G-Sync turned on, yielding the fluidity of V-sync enabled without the tearing that accompanies V-sync turned off. Again, I wish it were something I could show you with a video clip, but I'm working on a way to host another event in Bakersfield so readers can try G-Sync for themselves, blindly, and we can gather more dynamic reactions.


gamerk316 I consider Gsync to be the most important gaming innovation since DX7. It's going to be one of those "How the HELL did we live without this before?" technologies.

monsta Totally agree, G Sync is really impressive and the technology we have been waiting for.

What the hell is Mantle?

wurkfur I personally have a setup that handles 60+ fps in most games and just leave V-Sync on. For me 60 fps is perfectly acceptable, and even when I went to my friend's house, where he had a 120hz monitor with SLI, I could hardly see much difference.

I applaud the advancement, but I have a perfectly functional 26 inch monitor and don't want to have to buy another one AND a compatible GPU just to stop tearing.

At that point I'm looking at $400 to $600 for a relatively paltry gain. If it comes standard on every monitor, I'll reconsider.

expl0itfinder Competition, competition. Anybody who is flaming over who is better: AMD or nVidia, is clearly missing the point. With nVidia's G-Sync, and AMD's Mantle, we have, for the first time in a while, real market competition in the GPU space. What does that mean for consumers? Lower prices, better products.

This needs to be not so proprietary for it to become a game changer. As it is, requiring a specific GPU and specific monitor with an additional price premium just isn't compelling and won't reach a wide demographic.

Is it great for those who already happen to fall within the requirements? Sure, but unless Nvidia opens this up or competitors make similar solutions, I feel like this is doomed to be as niche as LightBoost, PhysX, and, I suspect, Mantle.

ubercake I'm on page 4, and I can't even contain myself.

Tearing and input lag at 60Hz on a 2560x1440 or 2560x1600 has been the only reason I won't game on one. G-sync will get me there.

This is awesome, outside-of-the-box thinking tech.

I do think Nvidia is making a huge mistake by keeping this to themselves though. This should be a technology implemented with every panel sold and become part of an industry standard for HDTVs, monitors or other viewing solutions! Why not get a licensing payment for all monitors sold with this tech? Or all video cards implementing this tech? It just makes sense.


rickard Could the Skyrim stuttering at 60hz w/ Gsync be because the engine operates internally at 64hz? All those Bethesda tech games drop 4 frames every second when vsync'd to 60hz, which causes that severe microstutter you see on nearby floors and walls when moving and strafing. Same thing happened in Oblivion, Fallout 3, and New Vegas on PC. You had to use stutter removal mods in conjunction with the script extenders to actually force the game to operate at 60hz and smooth it out with vsync on.

You mention it being smooth when set to 144hz with Gsync; is there any way you could cap the display at 64hz and try it with Gsync alone (iPresentinterval=0) and see what happens then? Just wondering if the game is at fault here and if that specific issue is still there in their latest version of the engine.

Alternatively I suppose you could load up Fallout 3 or NV instead and see if the Gsync results match Skyrim.

Old_Fogie_Late_Bloomer I would be excited for this if it weren't for Oculus Rift. I don't mean to be dismissive, this looks awesome...but it isn't Oculus Rift.

hysteria357 Am I the only one who has never experienced screen tearing? Most of my games run past my refresh rate too....