Early Verdict

This is a perfect performance solution for gaming at 1080p or below. The card gives yet another reason to question why the GTX 960 still exists. Its performance per dollar will be hard to match, and all gamers on tight budgets should seriously consider the GTX 950 Xtreme Gaming.

Pros

- Very low idle power draw
- Near-silent operation
- 7 GT/s memory
- Aggressive factory clock speed

Cons

- Limited to 2GB

Introduction And Product 360

Gigabyte's GeForce GTX 950 Xtreme Gaming is the company's top offering equipped with GM206-250. It employs a custom PCB design, the familiar Windforce cooling solution and a highly overclocked processor that was cherry-picked by the company's GPU Gauntlet sorting process.

Nvidia launched its GeForce GTX 950 back in August, targeting gamers with FHD monitors (particularly the ones competing in multiplayer online battle arena titles like League of Legends). The 950 uses the same GPU found in Nvidia's GeForce GTX 960, but the GM206 processor is trimmed down somewhat. Instead of 1024 CUDA cores, the 950 wields 768. Cutting two SMMs also reduces the texture unit count from 64 to 48, while the back-end retains 32 ROPs. Nvidia's reference specification defines a base GPU clock rate of 1024MHz, with a typical GPU Boost setting closer to 1188MHz. The GeForce GTX 950 is only offered with 2GB of memory; there is no 4GB option. On-board GDDR5 operates at 6.6 Gb/s across an aggregate 128-bit bus.
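The core and texture-unit counts above follow directly from the number of active SMMs. As a rough sketch, assuming Maxwell's layout of 128 CUDA cores and 8 texture units per SMM (figures not stated in this review, but consistent with its numbers), and with a helper name that is purely illustrative:

```python
# Assumed per-SMM resources for Maxwell (GM206); not taken from the review text.
CORES_PER_SMM = 128
TEXTURE_UNITS_PER_SMM = 8

def smm_resources(smm_count):
    """Return (CUDA cores, texture units) for a given number of active SMMs."""
    return smm_count * CORES_PER_SMM, smm_count * TEXTURE_UNITS_PER_SMM

gtx_960 = smm_resources(8)  # full GM206
gtx_950 = smm_resources(6)  # two SMMs disabled

print("GTX 960:", gtx_960)
print("GTX 950:", gtx_950)
```

The ROP count is not tied to the SMMs in this sketch, which matches the review's note that the back-end keeps all 32 ROPs.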

The 950 shares its feature list with other Maxwell-based GPUs. It supports G-Sync adaptive refresh rate technology and the entire suite of VisualFX tools, including HBAO+ and TXAA. ShadowPlay is enabled as well, utilizing Nvidia's NVEnc hardware-based encoding engine. In fact, the GM206 processor offers Nvidia's most advanced implementation of PureVideo available. Its VDPAU feature set F adds full acceleration for HEVC/H.265 decoding.

When paired with Nvidia's GeForce Experience software, the GTX 950 can optimize MOBA games for responsiveness. This feature will expand to other cards in Nvidia's line-up. But in August, when the GTX 950 launched, it was the first card with this capability. Input response is critical in those titles and Nvidia found that multi-frame buffering was causing delays. To combat this issue, multi-frame buffering can be disabled completely.

We previously looked at a GTX 950 Strix card from Asus that was also overclocked out of the box. Gigabyte's GeForce GTX 950 Xtreme Gaming boasts even higher frequencies than the Strix. Let's take a closer look.

Product 360

As mentioned, Gigabyte selects this GPU from among the most efficient chips identified by its GPU Gauntlet sorting process. The company claims this guarantees higher overclocks than random selection would, which makes sense.

The base clock of a reference-class GTX 950 is 1024MHz, with a typical GPU Boost frequency of 1188MHz. Gigabyte's Xtreme Gaming card sports a 1203MHz base clock and is typically able to hit 1405MHz under GPU Boost.
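The size of the factory overclock can be worked out from those four clock figures. A minimal sketch, using only the frequencies quoted in the review (the function name is illustrative):

```python
def percent_increase(reference_mhz, factory_mhz):
    """Percentage by which the factory clock exceeds the reference clock."""
    return (factory_mhz - reference_mhz) / reference_mhz * 100.0

base_gain = percent_increase(1024, 1203)   # base clock: reference vs. Xtreme Gaming
boost_gain = percent_increase(1188, 1405)  # typical GPU Boost: reference vs. Xtreme Gaming

print(f"Base clock gain:  {base_gain:.1f}%")
print(f"Boost clock gain: {boost_gain:.1f}%")
```

Both figures land in the high teens, so the overclock is roughly the same in relative terms at base and boost.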

The GTX 950 is similar to the more expensive GeForce GTX 960, though one of its key differences is the memory subsystem. Nvidia's spec calls for 6.6 GT/s memory, whereas the 960 employs a 7 GT/s transfer rate. Gigabyte goes that extra step with its GTX 950 Xtreme Gaming and pairs it with 2GB of 7 GT/s memory. Looking at the specs on paper, it appears that Gigabyte is pulling the rug out from under its own 960s.
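To put the two memory speeds in perspective, theoretical peak bandwidth can be estimated as transfer rate times bus width. The sketch below assumes the 128-bit bus quoted earlier for the GTX 950 applies to the Gigabyte card as well; the function name is illustrative, and real-world throughput will be lower than this theoretical peak.

```python
def peak_bandwidth_gbs(transfer_rate_gtps, bus_width_bits=128):
    """Theoretical peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    return transfer_rate_gtps * bus_width_bits / 8

reference_950 = peak_bandwidth_gbs(6.6)  # Nvidia's reference GTX 950 memory speed
xtreme_950 = peak_bandwidth_gbs(7.0)     # Gigabyte's GTX 950 Xtreme Gaming
uplift_pct = (xtreme_950 / reference_950 - 1) * 100

print(f"Reference GTX 950: {reference_950:.1f} GB/s")
print(f"Xtreme Gaming:     {xtreme_950:.1f} GB/s")
print(f"Uplift:            {uplift_pct:.1f}%")
```

On these assumptions the faster memory is worth roughly 6 percent more peak bandwidth, which is the on-paper gap the Xtreme Gaming card closes against the 960.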

The GTX 950 Xtreme is equipped with a dual-fan Windforce cooler to keep the on-board components running cool. Gigabyte has been using the same fan design for some time now, and it's proven effective. Each blade has a triangular protrusion in front of a row of five indented strips. Gigabyte says this design greatly reduces air turbulence and increases cooling capacity. Air traveling through the fans passes over the sink's horizontal slats, which helps cool the two 6mm copper heat pipes that directly contact the GPU and pass through the sink in opposing directions. The voltage circuitry doesn't touch the main sink; however, Gigabyte installs a separate heat sink on the VRM to cool it as well.

The word that comes to mind when I look at the GTX 950 Xtreme Gaming's shroud is "exoskeleton." The plastic surrounding the fans and covering the heat sink leaves a lot of room to peer inside. This is undoubtedly intended to maximize heat transfer. Rather than wrapping around and attaching to the PCB, enveloping the whole heat sink, Gigabyte attaches its shroud directly to the heat sink fins. As a result, the plastic looks like it's suspended just above the card. But even the sides that wrap around make no contact with the heat sink or PCB.

This card's cooling solution extends an inch and a half beyond the 7.25-inch printed circuit board (the back plate and plastic shroud make up the last half-inch of length). It appears as though the longer cover was only included to make room for the second fan. The blades extend just beyond the end of the heat sink fins, so it's a mystery why Gigabyte didn't just extend the fins as well.

A robust thermal solution is necessary for enabling aggressive overclocks. But to ensure reliability, you need more than just a good cooler. Gigabyte engineered the GTX 950 Xtreme Gaming to last by including the same high-quality chokes and capacitors found on the Titan X. The company also coats its PCB to protect it from moisture, dust and corrosion.

The card does feature nods to the gaming aesthetic as well. On its top side, Gigabyte embeds an illuminated Windforce logo. When the card is off, the logo is plain white. But when it's on, Gigabyte's OC Guru III software lets you configure a backlight. Choose between seven different colors, and set the light to always-on, breathing or responsive to GPU activity. In addition to the logo, Gigabyte adds a pair of lights that indicate when the card is in silent mode and its fans are stopped. These can be disabled in the software, if you want.

The top edge of the card includes one SLI connector, allowing two cards to operate cooperatively. Gigabyte chooses to add a single eight-pin auxiliary power connector, which is also found along the top edge, facing upward. There is a cutout in the PCB that lets the plug be installed with its lock-tab facing outward, simplifying removal. It'd be nice to see other add-in card manufacturers add little touches like this.

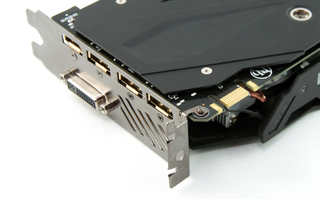

At first glance, it looks like Gigabyte went with Nvidia's reference suite of display outputs. You get three DisplayPort interfaces and one HDMI port in a row, along with a DVI-D connector right below. But upon closer inspection, the layout is different. Nvidia puts one DisplayPort output up top, followed by the HDMI port and then two more DP connectors underneath. Gigabyte has the three DisplayPort interfaces next to each other, with HDMI at the bottom of the row.

Gigabyte doesn't include a lot of extras with its GeForce GTX 950 Xtreme Gaming. There's a DVI-to-VGA adapter and a nifty metal case badge bearing the Xtreme Gaming logo.

The packaging itself is very much over-engineered, though. Considering the size of the box, I was expecting a much larger card. However, the box was stuffed with thick foam that seems intended to absorb rough handling.


Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

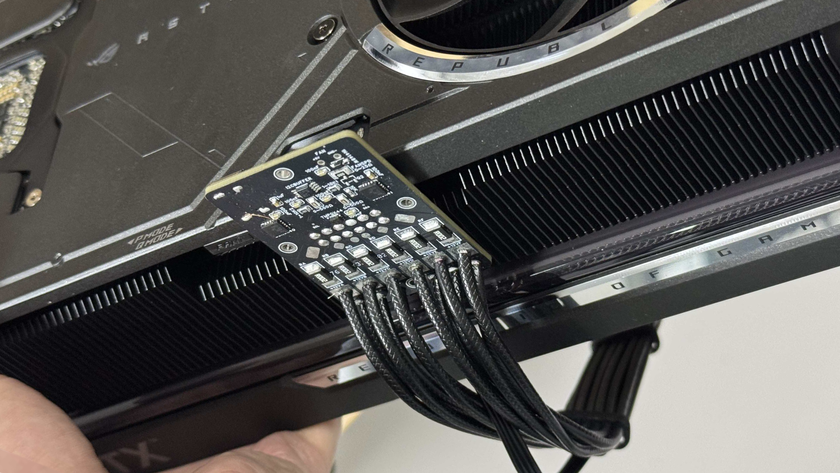


-

chaosmassive for future benchmark, please set to 1366x768 instead of 720p as bare minimum

because 720p panel pretty rare nowadays, game with resolution 720p scaled up for bigger screen, its really blur or small (no scaled up) -

kcarbotte

chaosmassive said: for future benchmark, please set to 1366x768 instead of 720p as bare minimum

because 720p panel pretty rare nowadays, game with resolution 720p scaled up for bigger screen, its really blur or small (no scaled up)

All of the tests were done at 1366x768.

Where do you see 720p?

-

rush21hit I have been comparing test result for 950 from many sites now and that leaves me to a solid decision; GTX 750Ti. I'm having the aging HD6670 right now.

Even the bare bone version still needed 6pin power and still rated 90Watt, let alone the overbuilt. As someone who uses a mere Seasonic's 350Watt PSU, I find the 950 a hard sell for me. Add in CPU OC factor and my 3 HDD, I believe my PSU is constrained enough and only have a little bit more headroom to give for GPU.

If only it doesn't require any additional power pin and a bit lower TDP.

Welp, that's it. Ordering the 750Ti now...whoa! it's $100 now? yaayyy -

ozicom I decided to buy a 750Ti past but my needs have changed. I'm not a gamer but i want to buy a 40" UHD TV and use it as screen but when i dig about this i saw that i have to use a graphics card with HDMI 2.0 or i have to buy a TV with DP port which is very rare. So this need took me to search for a budget GTX 950 - actually i'm not an Nvidia fan but AMD think to add HDMI 2.0 to it's products in 2016. When we move from CRT to LCD TV's most of the new gen LCD TV had DVI port but now they create different ports which can't be converted and it makes us think again and again to decide what to buy. -

InvalidError

17165047 said: now they create different ports which can't be converted

There are adapters between HDMI, DP and DVI. HDMI to/from DVI is just a passive dongle either way.

-

Larry Litmanen Obviously these companies know their buying base far better than i do, but to me the appeal of 750TI was that you did not need to upgrade your PSU. So if you have a regular HP or Dell you can upgrade and game better.

I guess these companies feel like most people who buy a dedicated GPU probably have a good PSU. -

TechyInAZ Looks great! Right off the bat it was my favorite GTX 950 card since Gigabyte put some excellent aesthetics into the card, but I will still go with EVGA. -

matthoward85 Anyone know what the SLI equivalent would be comparable to? greater or less than a gtx 980?