MSI Optix MAG341CQ Curved Ultra-Wide Gaming Monitor Review: A Price Breakthrough

Grayscale, Gamma and Color

The MAG341CQ is an sRGB+ monitor, meaning its color gamut falls between the sRGB and DCI-P3 specs. Unfortunately, it doesn’t have an option for sRGB, but you might prefer the extra color saturation if you’re willing to accept lower accuracy.

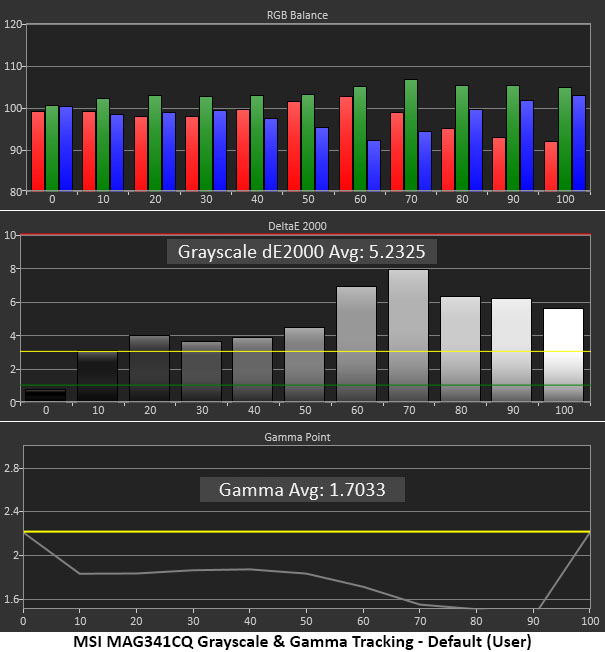

Grayscale & Gamma Tracking

We describe our grayscale and gamma tests in detail here.

The MAG341CQ’s grayscale errors aren’t grievous, but the green tint at most brightness levels was visible to the naked eye. Green errors are the easiest to see because human eyesight naturally favors that primary color.

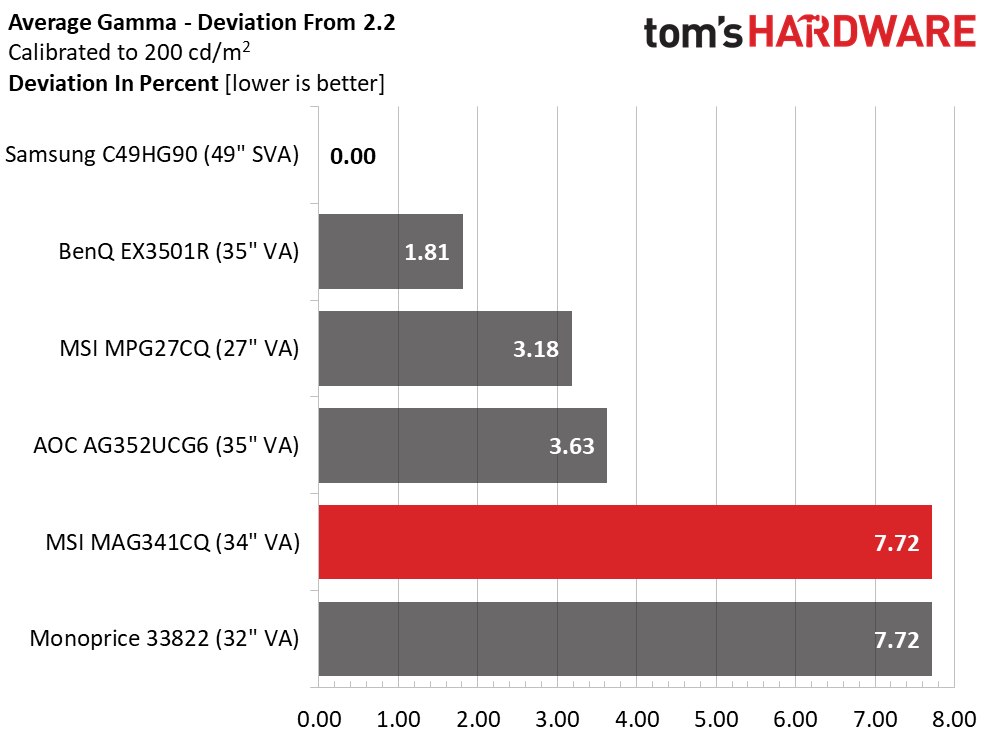

Another issue is gamma tracking, which is very light. The default setting is 1.8, which makes no sense for any content we’re aware of. The first chart above reflects the Warm color temp preset.
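To put numbers on why a 1.8 gamma looks washed out next to 2.2, here is a minimal sketch. It assumes an ideal power-law display, where relative luminance is simply the input signal raised to the gamma value. The function name `relative_luminance` is our own invention, and a real panel will not follow a pure power curve this cleanly.

```python
def relative_luminance(signal: float, gamma: float) -> float:
    """Relative luminance (0-1) of an idealized power-law display."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0 and 1")
    return signal ** gamma


# Compare the monitor's default preset (1.8) with the common 2.2 target.
for pct in (20, 50, 80):
    v = pct / 100
    light = relative_luminance(v, 1.8)
    target = relative_luminance(v, 2.2)
    print(f"{pct}% signal: gamma 1.8 -> {light:.3f}, gamma 2.2 -> {target:.3f}")
```

Under this assumption, a 50 percent signal comes out at roughly 0.29 of peak luminance with gamma 1.8, versus about 0.22 with gamma 2.2. Midtones render brighter than intended, which is what flattens image depth.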

Adjusting the RGB sliders in the Custom color temp mode (second graph) offered a visible improvement. We couldn’t eliminate the red errors at 60 and 70 percent, but they were difficult to see in actual content. Changing the gamma preset to 2.2 and lowering contrast two clicks restored most of the lost image depth as well. We’re not happy with the dip at 90 percent, but this chart is a vast improvement over the default one.

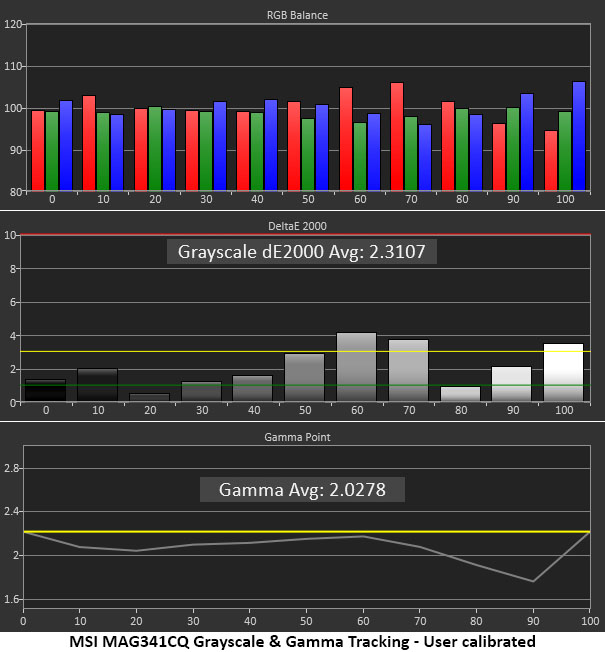

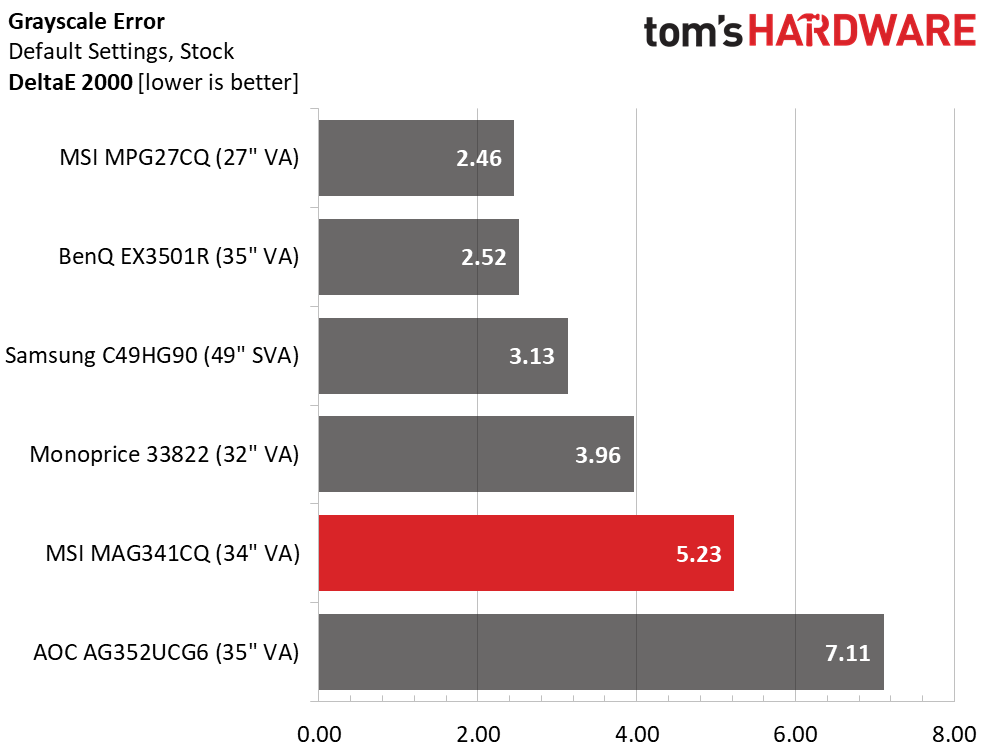

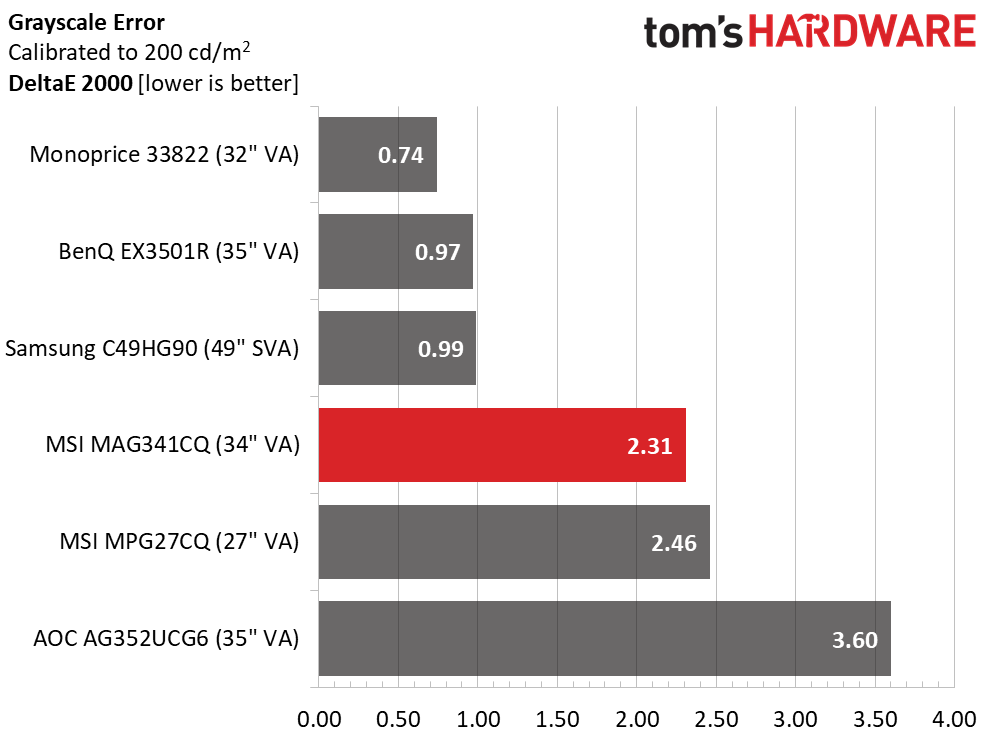

Comparisons

Calibrating to 200 nits dropped the grayscale error from 5.23 to 2.31dE, which not only improved perceived contrast but also helped color gamut accuracy. Adjusting the white point made secondary colors fall into line.

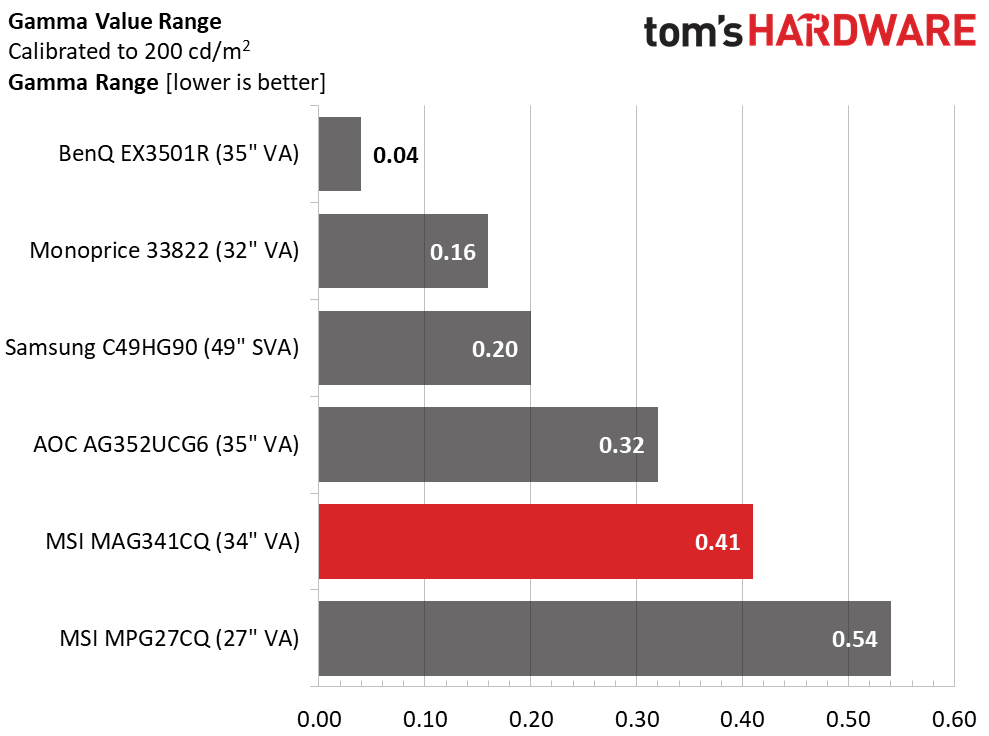

Our gamma tweak also had a positive impact on color and contrast, but there was still room for improvement. Our MAG341CQ sample could only muster an average gamma value of 2.03, mainly due to the dip at 90 percent shown in the calibrated grayscale and gamma tracking chart. And its range of values is a bit wider than all but the MPG27CQ.
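As a rough illustration of how one light step can drag down an average gamma figure and widen the range, the sketch below backs gamma out of luminance readings, again assuming a simple power-law model. The helper names and the readings are hypothetical. The data is synthetic, not taken from our measurements, and this is not necessarily how our test software computes its averages.

```python
import math


def point_gamma(signal: float, luminance: float) -> float:
    """Gamma implied by one reading, assuming luminance = signal ** gamma.

    Both values are normalized to 0-1; signal must not be 0 or 1.
    """
    return math.log(luminance) / math.log(signal)


def gamma_summary(readings: dict[float, float]) -> tuple[float, float]:
    """Return (average gamma, max - min range) across all readings."""
    gammas = [point_gamma(v, y) for v, y in readings.items()]
    return sum(gammas) / len(gammas), max(gammas) - min(gammas)


# Synthetic readings, NOT measurements from this review: a perfect 2.2 curve
# from 10 to 80 percent, with the 90 percent step behaving like gamma 1.9.
readings = {s / 10: (s / 10) ** 2.2 for s in range(1, 9)}
readings[0.9] = 0.9 ** 1.9

average, spread = gamma_summary(readings)
print(f"average gamma {average:.2f}, range {spread:.2f}")
```

Even with eight of nine steps tracking perfectly in this made-up data set, the single dip pulls the average below 2.2 and accounts for the entire range of values.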

Color Gamut Accuracy

For details on our color gamut testing and volume calculations, click here.

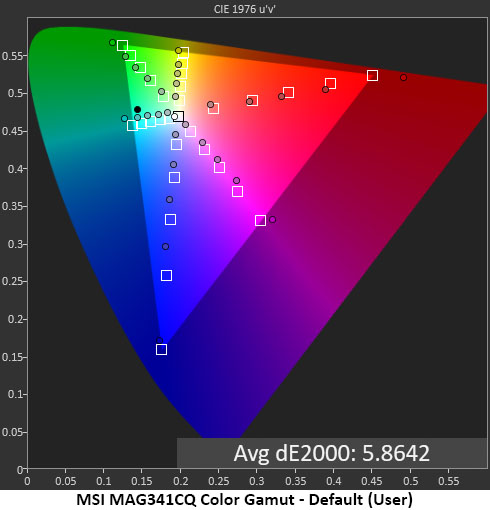

MSI has taken an interesting approach to color reproduction with the MAG341CQ. While it’s nearly a DCI-P3 monitor, the inner saturation points (20-80 percent) come closer to the sRGB spec. That’s a good thing because most content will look natural and reasonably accurate. Only the outermost points (100 percent) are significantly oversaturated. Bright images will show vivid color, which many will prefer. Our issue with the default result is that the targets are inconsistent—some points are undersaturated while others are over.

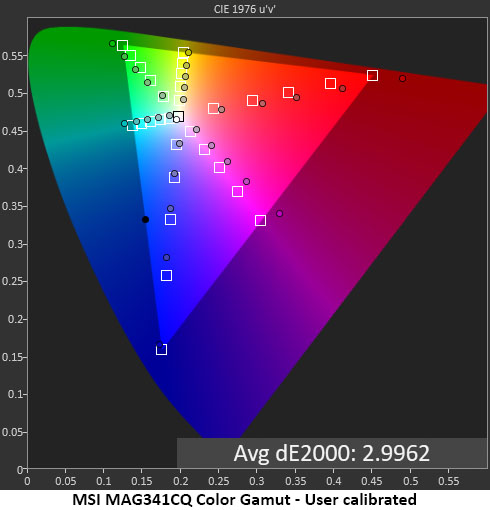

Calibration tightened up the tracking quite a bit, with all points becoming a bit oversaturated. While this isn’t ideal, it is more consistent and therefore makes all content look better. This is the main reason to make the adjustments recommended on page 1. Grayscale calibration is the key to good color tracking. We’ve made some positive improvements here.

Comparisons

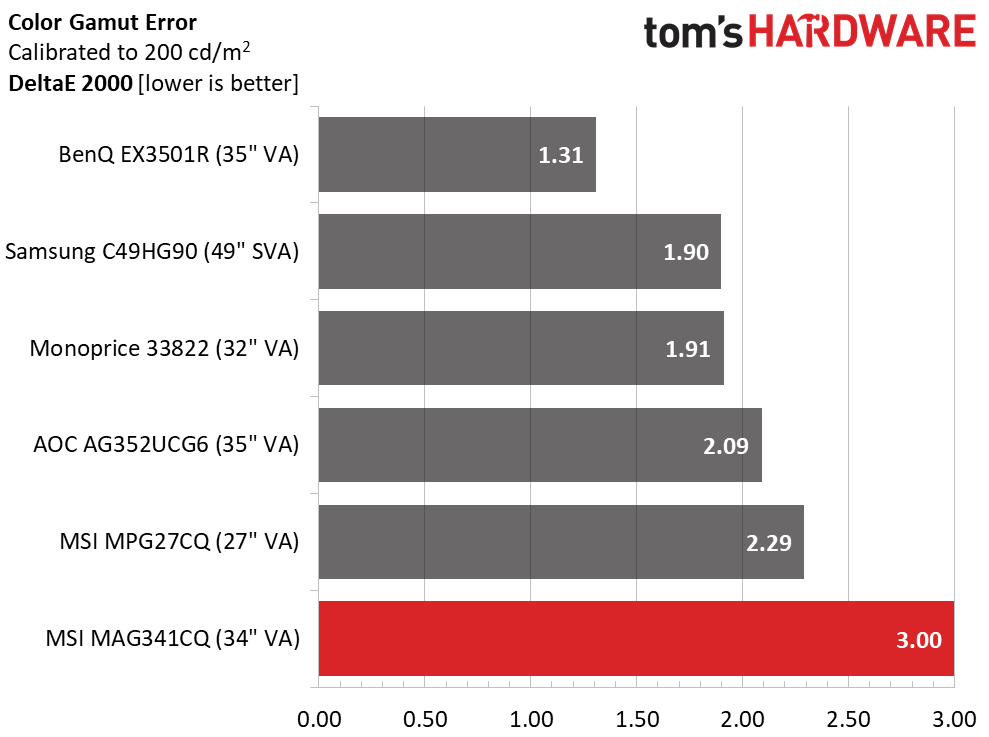

Even with calibration, the MAG341CQ lagged behind all the other monitors in color accuracy. While an average error of 3dE isn’t bad, the other screens did better. An sRGB option would have been a step in the right direction. In the past, we might have said these were good results for a gaming monitor. But in today’s market, few screens stray far from the proper specs. MSI might consider a firmware update to fix these issues.

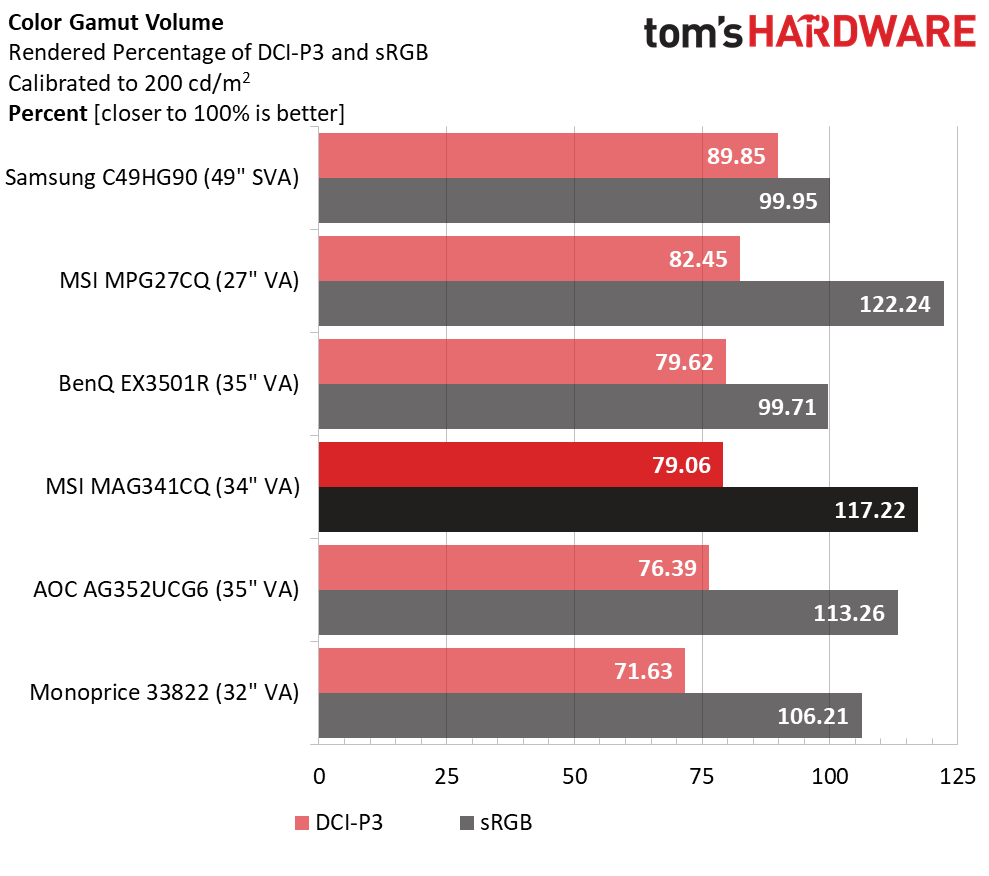

As an extended color display, the MAG341CQ performed well. Those looking for the largest possible gamut volume will find it among the more colorful at this price point. Out of the comparison group, only the MPG27CQ showed greater sRGB volume. And rendering nearly 80 percent of DCI-P3 is a plus. Note that if you need to use it for color-critical applications, a custom monitor profile is a must.
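For a sense of scale between the two reference gamuts, the sketch below compares the areas of the sRGB and DCI-P3 triangles on the CIE 1931 xy chromaticity diagram, using the published primaries for each. This is a simplified 2D area comparison with names of our own choosing, not the volume calculation used for the chart above.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
SRGB = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def triangle_area(points):
    """Area of a triangle from three (x, y) vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


# Ratio of the two triangle areas; this is an area ratio, not an overlap test.
ratio = triangle_area(SRGB) / triangle_area(DCI_P3)
print(f"sRGB triangle is {ratio:.1%} of the DCI-P3 triangle")
```

By this measure the sRGB triangle is a little under three-quarters the size of the DCI-P3 triangle, so a panel that renders nearly 80 percent of DCI-P3 sits somewhat beyond sRGB. That is consistent with the sRGB+ label, though the two methods are not directly comparable.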

Christian Eberle is a Contributing Editor for Tom's Hardware US. He's a veteran reviewer of A/V equipment, specializing in monitors. Christian began his obsession with tech when he built his first PC in 1991, a 286 running DOS 3.0 at a blazing 12MHz. In 2006, he undertook training from the Imaging Science Foundation in video calibration and testing and thus started a passion for precise imaging that persists to this day. He is also a professional musician with a degree from the New England Conservatory as a classical bassoonist which he used to good effect as a performer with the West Point Army Band from 1987 to 2013. He enjoys watching movies and listening to high-end audio in his custom-built home theater and can be seen riding trails near his home on a race-ready ICE VTX recumbent trike. Christian enjoys the endless summer in Florida where he lives with his wife and Chihuahua and plays with orchestras around the state.

Energy96: Why do these “high end” gaming monitors always seem to come with free sync instead of the Nvidia G-sync. Most people willing to shell out $450 and up on a monitor are going to be running Nvidia cards which makes the feature useless. With an Nvidia card it isn’t even worth considering a monitor that doesn’t support G-sync.

mitch074: @energy96: because including G-sync requires a proprietary Nvidia scaler, which is very expensive, while Freesync is based off a standard and thus much cheaper. So, someone owning an Nvidia card would have to pay 600 bucks for a similarly featured screen.

Energy96: This is completely false. Free sync does not work with Nvidia cards, only Radeon. There is a sort of hack work around but it’s worse than just buying a g-sync monitor.

Energy96 (quoting mitch074): @energy96: because including G-sync requires a proprietary Nvidia scaler, which is very expensive, while Freesync is based off a standard and thus much cheaper. So, someone owning an Nvidia card would have to pay 600 bucks for a similarly featured screen.

I know this. My point was most people who buy a Radeon card are doing it because they are on a budget. It’s unlikely they will have the funds for a high end gaming monitor that is $450+. That’s more than they likely would have spent on the Radeon card.

Majority of people dropping that much or more on a gaming monitor will be running Nvidia cards. I know it adds cost but if you are running Nvidia a free sync monitor is out of the question. Free sync seems pointless in any monitor that is much over $300.

cryoburner

21646897 said: Why do these “high end” gaming monitors always seem to come with free sync instead of the Nvidia G-sync. Most people willing to shell out $450 and up on a monitor are going to be running Nvidia cards which makes the feature useless. With an Nvidia card it isn’t even worth considering a monitor that doesn’t support G-sync.

The real question you should be asking is why a supposedly "high end" graphics card doesn't support a standard like VESA Adaptive-Sync, otherwise branded as FreeSync. You should be complaining on Nvidia's graphics card reviews that they still don't support the open standard for adaptive sync, not that a monitor doesn't support Nvidia's proprietary version of the technology that requires special hardware from Nvidia to do pretty much the same thing. It's not just AMD that will be supporting VESA Adaptive-Sync either, as Intel representatives have stated on at least a couple occasions that they intend to include support for it in the future, likely with their upcoming graphics cards. Microsoft's Xbox consoles also support FreeSync, albeit a less-standard implementation over HDMI.

Nvidia doesn't support it because they want to sell you an overpriced chipset as a part of your monitor, and they want you arbitrarily locked into their hardware ecosystem once competition heats up at the high-end. I suspect that even they may support it eventually though. They're just holding out so that they can price-gouge their customers as long as they can.

Energy96: I can agree with this, but currently it’s the only choice we have. I’m not downgrading to a Radeon card. It would be nice if some of these monitors at least offered versions with it. I know a few do but the selection is very limited.

mitch074

21651950 said: I can agree with this, but currently it’s the only choice we have. I’m not downgrading to a Radeon card. It would be nice if some of these monitors at least offered versions with it. I know a few do but the selection is very limited.

A 1440p monitor and a Vega 56 go well together - if you have a 1080/1080ti/2070/2080/2080ti then yes it's a "downgrade", but if you run a triple screen off a Radeon card already, then it's not.

I think my RX480 could run Dirt Rally on that thing quite well, for example - not everybody buys these things for a 144fps+ shooter.

Dosflores

21650670 said: Free sync seems pointless in any monitor that is much over $300.

FreeSync isn't expensive to implement, unlike G-Sync, so why should companies not implement it? You seem to think that the only reason there can ever be to buy a new monitor is adaptive sync, which isn't true. People may want a bigger screen, higher resolution, higher refresh rate, better contrast… If they want G-Sync, they can expend extra on it. If they don't think it is worth it, they can save money. FreeSync doesn't harm anyone.

Buying a $450 monitor seems pointless to me after having spent $1200+ on a graphics card. The kind of monitor that Nvidia thinks is a good match for it is something like this:

https://www.tomshardware.com/reviews/asus-rog-swift-pg27u,5804.html