Intel SSD 910 Review: PCI Express-Based Enterprise Storage

PCIe-based SSDs have evolved rapidly, with companies like Fusion-io, LSI, and OCZ leading the way. Intel is betting that its reputation for quality and reliability in the enterprise sector is enough to overcome that head start as it launches the SSD 910.


Default Versus Maximum Performance Mode

The Intel SSD 910 offers two performance modes for the 800 GB SKU: Default and Maximum Performance.

| Performance Mode | Default | Maximum |
|---|---|---|
| User Capacity | 800 GB | 800 GB |
| PCIe Compliant | Yes | Maybe |
| Interface | PCIe 2.0 x8, Half-Height, Half-Length | PCIe 2.0 x8, Half-Height, Half-Length |
| Sequential Read | 2 GB/s | 2 GB/s |
| Sequential Write | 1 GB/s | 1.5 GB/s |
| 4K Random Read | 180 000 IOPS | 180 000 IOPS |
| 4K Random Write | 75 000 IOPS | 75 000 IOPS |
| Power Consumption (Active) | <25 W | 28 W (38 W Max) |
| Power Consumption (Idle) | 12 W | 12 W |
| Required Airflow | 200 LFM | 300 LFM |
| Write Endurance | 14 PB | 14 PB |
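To put the table's random I/O and endurance figures in more familiar terms, here is a rough Python sketch that converts 4K IOPS into throughput and the 14 PB endurance rating into full-drive writes. It assumes that "4K" means 4096 bytes and that GB and PB are decimal units; neither is stated in the table, and the function names are ours.

```python
# Back-of-the-envelope conversions for the 800 GB SSD 910 spec table.
# Assumptions (not stated in the table): "4K" is 4096 bytes, and GB/PB
# are decimal units (10**9 and 10**15 bytes).

BLOCK_BYTES = 4096
GB = 10**9
PB = 10**15


def iops_to_mb_per_s(iops: int, block_bytes: int = BLOCK_BYTES) -> float:
    """Convert an IOPS figure into decimal MB/s for a fixed block size."""
    return iops * block_bytes / 10**6


def full_drive_writes(endurance_bytes: int, capacity_bytes: int) -> float:
    """Number of times the full user capacity fits into the endurance rating."""
    return endurance_bytes / capacity_bytes


random_read_mb_s = iops_to_mb_per_s(180_000)
random_write_mb_s = iops_to_mb_per_s(75_000)
drive_writes = full_drive_writes(14 * PB, 800 * GB)

print(f"4K random read:  {random_read_mb_s:.1f} MB/s")
print(f"4K random write: {random_write_mb_s:.1f} MB/s")
print(f"Full drive writes within rated endurance: {drive_writes:,.0f}")
```

Under those assumptions, the 4K random figures work out to roughly 737 MB/s for reads and 307 MB/s for writes, and the endurance rating corresponds to 17,500 complete passes over the 800 GB of user capacity.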

Intel refers to each storage controller and its corresponding 200 GB of user-accessible flash memory as a module. In Default mode, each of the two or four NAND modules is throttled to make sure that the total device power falls within the PCIe power specification's envelope. In Maximum Performance mode, any two NAND modules can be accessed at full speed and still be considered PCIe-compliant. Stressing all four NAND modules in Maximum Performance mode violates the PCIe specification, and may cause trouble in some systems.
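The compliance rule described above can be sketched as a small Python function. This is only an illustration of the paragraph's wording, not Intel's firmware logic: the function name and the mode strings are hypothetical, and because the text does not say what happens with exactly three modules under full load, that case is returned as `None` (unknown).

```python
from typing import Optional


def is_pcie_compliant(mode: str, stressed_modules: int) -> Optional[bool]:
    """Return True/False for PCIe power compliance, or None if unspecified.

    mode: "default" or "maximum" (hypothetical labels for the two modes).
    stressed_modules: how many NAND modules are driven at full speed (0-4).
    """
    if not 0 <= stressed_modules <= 4:
        raise ValueError("the 800 GB SSD 910 has four NAND modules")
    if mode == "default":
        # Every module is throttled so total power stays inside the envelope.
        return True
    if mode == "maximum":
        if stressed_modules <= 2:
            return True   # any two modules at full speed remain compliant
        if stressed_modules == 4:
            return False  # all four at full speed exceed the specification
        return None       # three modules: not covered by the description
    raise ValueError(f"unknown mode: {mode!r}")


for n in range(5):
    print(n, is_pcie_compliant("maximum", n))
```

This is why the table lists PCIe compliance in Maximum Performance mode as "Maybe": the answer depends on how many modules the workload actually stresses.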

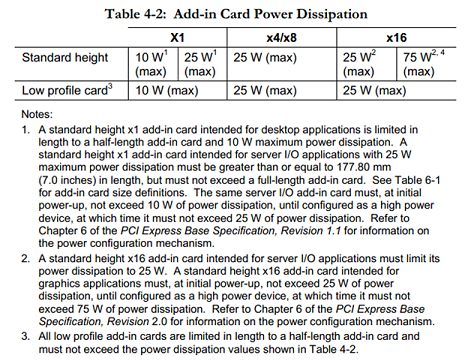

As you can see in the image above, the power dissipation limit for a PCI Express x4 or x8 card is 25 W. Maximum Performance mode is specified by Intel to top out at 38 W, though. Now, will your specific server drive this device correctly without a compatibility problem? It should. But you'll certainly want to check with your server vendor to be sure. We dropped the SSD 910 into a half-dozen systems and didn't run into trouble with any of them.
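As a quick sanity check on the numbers above, the following Python sketch compares each mode's active power against the 25 W slot limit. The figures come from the spec table; treating Default mode's "<25 W" as an upper bound of exactly 25 W is our simplification, and the dictionary layout and function name are illustrative.

```python
PCIE_SLOT_LIMIT_W = 25.0  # limit quoted in the review for x4/x8 cards

# Active power from the spec table. Default is listed as "<25 W", so 25.0
# is used as an upper bound here (a simplification for illustration).
MODES = {
    "Default": {"typical_w": 25.0, "max_w": 25.0},
    "Maximum": {"typical_w": 28.0, "max_w": 38.0},
}


def over_budget_w(power_w: float, limit_w: float = PCIE_SLOT_LIMIT_W) -> float:
    """Watts by which a power draw exceeds the slot limit (0 if within it)."""
    return max(0.0, power_w - limit_w)


for name, power in MODES.items():
    typical_excess = over_budget_w(power["typical_w"])
    worst_excess = over_budget_w(power["max_w"])
    print(f"{name}: typical +{typical_excess:.0f} W, worst case +{worst_excess:.0f} W over limit")
```

In Maximum Performance mode the card sits 3 W over the limit at its typical active draw and 13 W over at its specified peak, which is the margin your server's slot and power delivery would need to tolerate.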

More concerning is the amount of airflow required in Maximum Performance mode. The base requirement of 200 Linear Feet per Minute (LFM) is fairly common for server-oriented add-in cards. Bumping up to 300 LFM might be a challenge in servers with adjacent cards installed, especially if those cards are also PCIe-based SSDs or high-powered RAID cards.

We do appreciate the fact that Intel allows for this option, and that the company is very clear about the implications of enabling it. If you are concerned about power and cooling (enterprise customers should be), check out the performance results in Default mode first.


razor7104: That is one fast sequential read speed. It's too bad that they will be in the $1000+ market and out of reach of all but the server/workstation crowd.

jimbob rubae: The OCZ is tested with compressible data? Talk about a best-case scenario. What were the incompressible results like?

s3anister: PCI-E solid-state storage is great, but I can't help but wonder: where is the memristor? The true performance gains to be had are with massive RAM disks that aren't volatile.

apache_lives: The most important and incomparable factor here is that five years later those Intel SSDs will still be functional. With any other brand, I'm surprised if they last five months in normal machines, given the failure rates I have seen firsthand. OCZ, G.Skill, etc. are all horrible. I bought an Intel SSD for this reason: THEY WORK.

Review sites never cover real-world use, that is, living with a drive day in, day out (reliability). It's not all about raw speed and performance.

ZakTheEvil: Yeah, consumer SSD reliability is a bit of a disappointment. At best they seem to be as reliable as hard drives.

georgeisdead: This is a note to address several articles I have come across lately that state Intel's reputation for quality and reliability in the SSD market as if it is a given. These comments are from my personal experience with Intel's drives. I have owned three Intel solid-state drives: one X25-M G1 and two X25-M G2s. The X25-M G1 failed after 2 years, while one of the G2 drives failed after 2.5 years. Now, I am not an expert on MTBF and reliability, but in my opinion this is a pretty poor track record. It is entirely possible that this is a coincidence; however, both drives failed in the same manner, from the same problem (determined by a third-party data recovery specialist): bad NAND flash.

As best I understand it, as it was described by the company that analyzed these failed drives, a block of NAND flash either went bad or became inaccessible to the controller, rendering the drives useless and unable to be accessed by the normal means of hooking them up to a SATA or USB port. Two drives, different NAND (50 nm for the G1 and 34 nm for the G2), same failure mode.

Once again, this is not definitive, just my observations. But I think review sites need to be a little more cautious about how they qualify Intel's reputation for quality and reliability, because from my perspective Intel has neither, and I have since begun using Crucial SSDs. Hopefully, I will see much longer life from these new drives.

jdamon113: I would like to see something like this stacked in our EMC. Could this drive, with a rack of others just like it, run 24/7 for 3+ years? Sure, we replace a drive here and there in our EMC, but the unit as a whole has never gone down in its five-year life.

Intel, you should test these drives in that real-world application: EMC, VMware, and several databases. Carve out some LUNs and push the envelope. In this situation, should the device prove worthy, the 4000 price tag will come down very fast, and the data center will put its trust in the product. So for those reading this for your personal home workstation and gaming rig, you need not apply in this arena.

Intel is just about 18 months to 2 years away from owning the data center. Even EMC is powered by Intel.

jaquith: For enterprise use, e.g. SQL, you need SLC; otherwise you'd be making a career of replacing drives. The cost is downtime and replacement. I can write more 'stuff', but it's that simple. For our IDX and similar read data, it's about reliability and capacity.

willard:

> razor7104: that is one fast Sequential read speed. It to bad that they will be $1000+ market and out of reach of all but the server/ workstation crowd

That's because this was not designed for consumers. It's not like they're marking the price up 1000% for shits and giggles. Enterprise hardware costs more to make because it must be much faster and much more reliable.

This drive, and every other piece of enterprise hardware out there, was never meant to be used by consumers.

drewriley:

> jimbob rubae: The OCZ is tested with compressible data? talk about best case scenario. what were the incompressible results like?

Check out the Sequential Performance page, which lists both compressible and incompressible results. For all the other tests, random (incompressible) data was used.