Amazon Web Services hints at 1000 Watt next-gen Trainium AI chip — AWS lays the groundwork for liquid-cooled data centers to house new AI chips

Tell us your AI chips will use 1000W or more without telling us your AI chips will use 1000W or more.

Amazon Web Services (AWS) is trying to earn a place at the head table of AI computing. In a field mostly dominated by Nvidia, AWS recently suggested its own AI chips might soon cross a critical power threshold that could make them competitive with Nvidia’s Blackwell chips. The company’s vice president of infrastructure hinted that the Trainium3 AI chips might draw more than 1,000 watts of power.

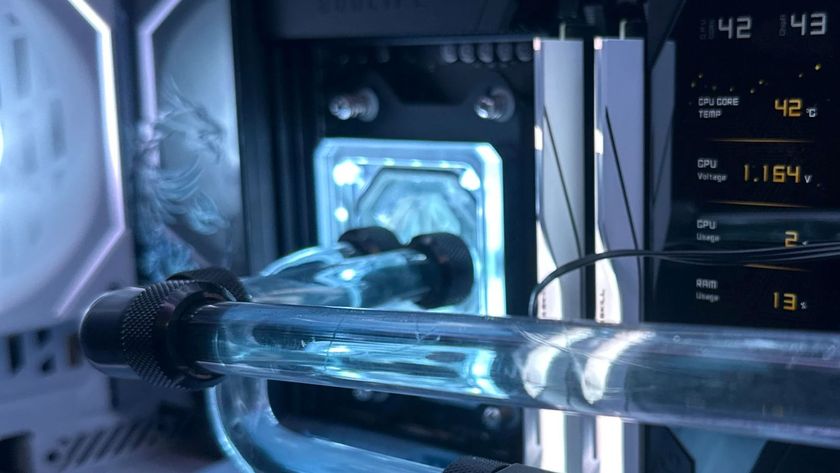

It’s important to add a caveat here: AWS VP of Infrastructure Prasad Kalyanaraman has yet to specifically give wattage requirements for the upcoming Trainium3 chip, or even its predecessor Trainium2. What he said was that liquid cooling would be required for AI chips that use more than 1,000 watts of power.

The executive went on to tell Fierce Network that Trainium2 doesn’t require liquid cooling, but Trainium3 would. "The current generation of chips don't require liquid cooling, but the next generation will require liquid cooling. When a chip goes above 1,000 watts, that's when they require liquid cooling," he said.

Currently, Nvidia’s beefiest AI chip requires 700W of power, but its forthcoming B200 is estimated to need 1,000W. Should AWS design the Trainium3 to use 1,000W of power or more, that could bring it closer to being competitive with the current leader in AI. Of course, if it fails to breach the 1,500W mark, it may not remain competitive for long.

Although Nvidia has yet to release the new Blackwell AI chips, it has already begun planning the next-generation Rubin chip. The Rubin AI chip and Intel’s rumored AI chip are expected to consume 1,500W of power.

Kalyanaraman said most of AWS’s data centers currently rely on air cooling. The company is preparing for the eventual need for liquid cooling, which requires advance planning. The current lead times for the coolant distribution units necessary for liquid cooling can be a year or longer.

Again, AWS hasn't said for certain that its Trainium3 AI chips will need 1,000W of power or more. The VP is merely implying that this will be the case. If AWS is beginning to plan to convert its data centers to liquid cooling, and Kalyanaraman says chips drawing more than 1,000W of power need liquid cooling, that would seem like a strong indicator of what AWS has planned for its next-generation AI chips.

In the same interview, Kalyanaraman outlined other changes AWS is planning for its data centers. For example, he said the company plans to install next-generation network switches that support throughput of up to 51.2 Tbps. Its existing switches mostly top out at 12.8 Tbps.

The Amazon division is also planning rack layouts to avoid stranding power along any of its aisles. To accomplish this, AWS needs to map out where to place AI, memory, storage, and general-purpose compute servers to ensure every aisle of the data centers makes the most of the available power.

So, will AWS roll out AI chips that require over 1,000 watts of power? It certainly sounds that way if you take the statements in context with one another and consider current industry trends for next-gen AI accelerators, but this cannot be confirmed until someone at AWS explicitly says so.

Jeff Butts has been covering tech news for more than a decade, and his IT experience predates the internet. Yes, he remembers when 9600 baud was “fast.” He especially enjoys covering DIY and Maker topics, along with anything on the bleeding edge of technology.