Foxconn confirms limited availability of Nvidia's Blackwell GPUs this year

But Nvidia Blackwell's availability will improve next year.

Foxconn has confirmed that its first servers based on Nvidia's Blackwell GPUs will be available this year. However, they will only be available in low quantities. The company stressed that even if it cannot ship GB200-based machines in sizeable quantities this year, demand for AI servers is so high that it will meet its sales targets by selling existing Nvidia Hopper-powered machines.

"We are on track to develop and prepare the manufacturing of the new [Nvidia] AI server to start shipping in small volumes in the last quarter of 2024 and increase the production volume in the first quarter of next year," said James Wu, a spokesperson for Foxconn, in an earnings call with analysts and investors, reports Nikkei.

Foxconn is heavily invested in its AI server business, which now represents 40% of its overall server operations. The company holds a 40% share of the global AI server market and anticipates that this segment will soon become a "trillion-dollar" enterprise. Demand for servers using Nvidia's current-generation H100 and H200 GPUs remains very strong, giving Foxconn confidence that it will achieve its revenue targets for the year's second half.

Foxconn is said to be the main assembler of Nvidia's NVL72 machines based on GB200 nodes that use one 72-core Grace processor and two B200 GPUs. The problem is that Nvidia will not be able to ship large volumes of Blackwell GPUs this year.

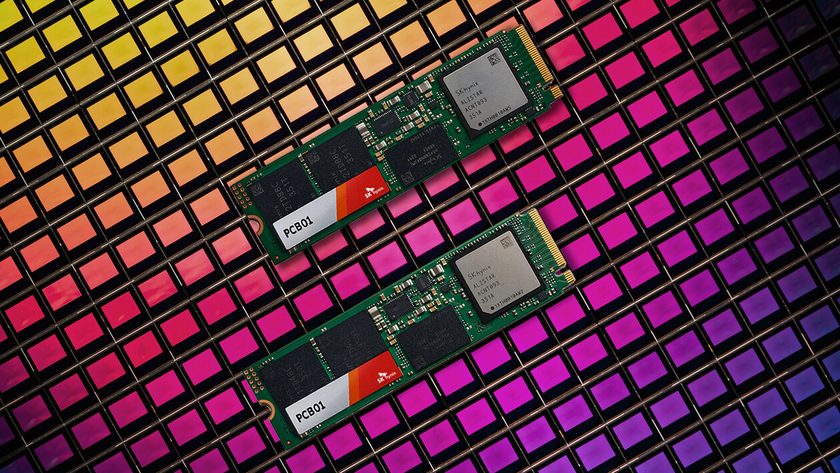

Unlike H100 and H200, which rely on TSMC's CoWoS-S packaging, Nvidia's B100 and B200 GPUs are the first to use TSMC's CoWoS-L packaging, which uses an RDL interposer with local silicon interconnect (LSI) bridges to connect chiplets. Precise placement of these bridge dies is crucial, especially for maintaining the 10 TB/s interconnect between compute dies. Rumors suggest a design issue involving a mismatch in the coefficient of thermal expansion (CTE) among the GPU chiplets, LSI bridges, RDL interposer, and motherboard substrate, potentially causing warping and failure of the system-in-package. Analysts also suggest that the top metal layers and bumps of the Blackwell GPU silicon may need to be redesigned, leading to delays.

Consequently, Nvidia is expected to produce low volumes of the 1000W B200 GPUs in Q4 2024 for HGX servers, with limited availability of GB200-based NVL36 and NVL72 servers, which Foxconn is reportedly discussing.

To meet the demand for lower and mid-range AI systems, Nvidia is developing the B200A GPU, which features monolithic B102 silicon and uses the established CoWoS-S packaging. This model, expected in Q2 2025, will offer up to 144 GB of HBM3E memory and 4 TB/s of memory bandwidth.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JRStern: Hey, anyone remember "nanotechnology"? About twenty years ago. What ever happened to that? Theranos was just the most famous. Gets tricky down there. Similarly "monolithic" attempts at chip packaging have come and gone in Silicon Valley every five years since what, 1970? Not saying it's impossible or anything but TANSTAAFL.