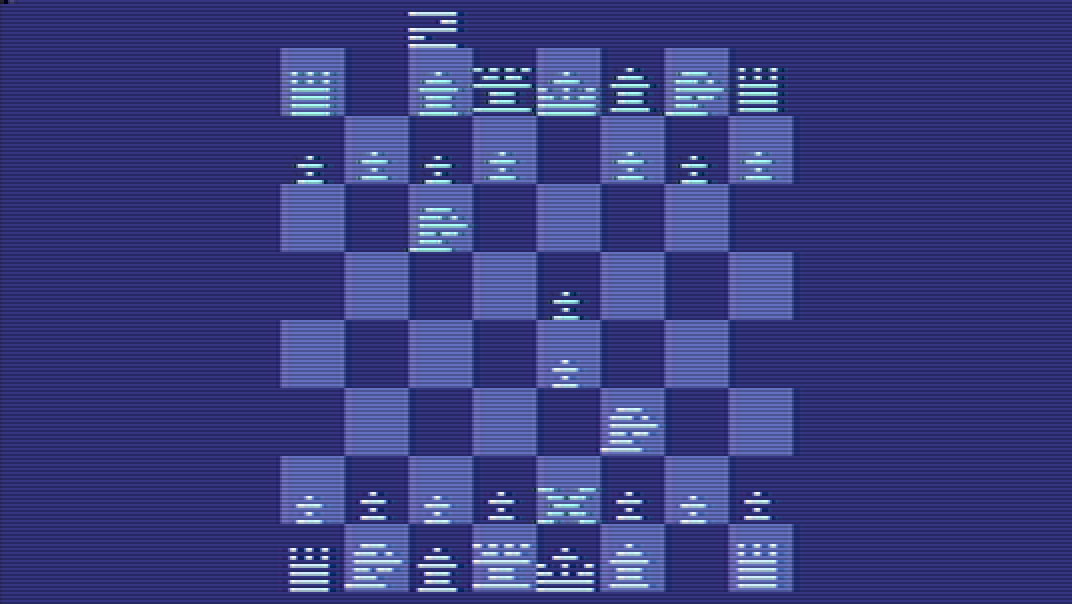

Google Gemini crumbles in the face of Atari Chess challenge — admits it would 'struggle immensely' against 1.19 MHz machine, says canceling the match most sensible course of action

After a pre-game chat, Gemini swung from being confident to admitting it would ‘struggle immensely’ against the ancient console.

Google Gemini decided to call off a chess match against the ancient 1.19 MHz Atari 2600 console after a friendly pre-game reminder about what happened to ChatGPT and Microsoft’s Copilot. Citrix Architecture and Delivery specialist Robert Jr. Caruso, now well known for his AI vs Atari Chess challenges, told The Register that Gemini chickened out.

As with the ChatGPT and Microsoft Copilot chess challenges, Caruso reveals that Gemini was initially brimming with confidence about its chess prowess. It was comfortable, if not eager, to throw down the gauntlet against the Atari 2600. At the beginning of Caruso’s chat with Gemini, the chatbot boasted of being able to “think millions of moves ahead and evaluate endless positions.” That sounds familiar in a proverbial ‘pride goeth before destruction’ kind of way.

Caruso then kindly reminded Gemini that he had previously organized Atari Chess bouts with ChatGPT and Microsoft’s Copilot. The Citrix expert went on to explicitly explain to Gemini that other LLMs had displayed outstanding levels of “misplaced confidence,” ahead of their chess matches against the ancient console.

Gemini must have then thought a bit deeper about what exactly would be involved in the chess challenge, and admitted to Caruso that it had been hallucinating regarding the magnitude of its abilities. It added that it now felt that it would “struggle immensely” in a match against the Atari 2600. “Canceling the match is likely the most time-efficient and sensible decision,” concluded Gemini.

LLMs aren't CPMs (Chess Playing Models)

So, now we have more confirmation, if needed, that today’s LLMs aren’t designed to be chess champs, and a little machine introspection is all that is required for them to think better of participating in such a challenge. Backing out is advisable even when the challenger is the incredibly constrained Atari 2600, with its puny 8-bit MOS Technology 6507 processor and just 128 bytes of RAM.

Because of the way these AIs, or LLMs, are built from linguistic theory and machine learning models, they are much more adept at talking about the game of kings than at playing it.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


George³: So much money for the hardware the model runs on, so much money spent on coding, and so much money on electricity and other supplies to train it, and it follows that investing in "AI" is nothing but a waste of tremendous amount of money.

Alvar "Miles" Udell: Now that TH has reported on these things with questionable setups they need to repeat the tests themselves or admit they could all have been artificially skewed to fail just for headlines.

Like the Copilot one, use think deeper and algebraic chess notation to play.

George³:

Alvar Miles Udell said: Now that TH has reported on these things with questionable setups they need to repeat the tests themselves or admit they could all have been artificially skewed to fail just for headlines. Like the Copilot one, use think deeper and algebraic chess notation to play.

What do the settings have to do with it? If you make a deliberate optimization to win a specific race, it will be your victory, not Gemini's.

monotreme: It is very interesting that each time that these posts have been made about the Atari beating the LLM, there isn't video evidence.

Seems really easy to provide.

Anyway, I've been skeptical since the ChatGPT claim.

JRStern: But LLMs don't even know the rules.

So what can they do, search for chess notation and take the most common next move?

But I doubt that they were trained with many complete games, maybe none at all.

What next, see if it can hit big league pitching?

seagalfan:

George³ said: So much money for the hardware the model runs on, so much money spent on coding, and so much money on electricity and other supplies to train it, and it follows that investing in "AI" is nothing but a waste of tremendous amount of money.

What on earth are you talking about? First: chess is not at all a barometer for intelligence or success of artificial intelligence, and second, success at a pointless game is not the purpose of developing LLMs. Maybe we should put you up against the Atari chess machine, and if you lose I guess all that food and resources that go into sustaining you are a waste.

Alex/AT: So basically LLMs talk BS without any practical merit.

And indeed, this is well known. Trying to hype 'em otherwise is just merely hyping 'em.

Alvar "Miles" Udell:

George³ said: What do the settings have to do with it? If you make a deliberate optimization to win a specific race, it will be your victory, not Gemini's.

If you limit sources and make deliberately vague prompts to LLMs you get junk in return.

razor512: There needs to be an AI with more limited LLM capabilities but with a range of basic functions useful in a range of games.

One area where a small AI model could be truly revolutionary is NPCs in games, with both LLM and gameplay capabilities.

For example, imagine NPCs reacting more realistically if your character does something really weird in the game world, or allowing for dynamic interactions outside of any specific story line.

Or imagine adding fun Easter eggs into a game where an NPC can have options for custom text inputs. For example, imagine a version of Elden Ring where instead of the 2nd phase of the battle, you could convince Maliketh to allow you to pet him instead.

https://i.imgur.com/PUj1fgl.jpeg