Huawei to open-source its UB-Mesh data center-scale interconnect soon, details technical aspects — one interconnect to rule them all is designed to replace everything from PCIe to TCP/IP

1.25 TB/s per device, open source, but will it gain traction?

Huawei used its Hot Chips 2025 slot to introduce UB-Mesh, a technology designed to unify all interconnects in AI data centers, both inside and between nodes, under a single protocol. The company also said that at an event next month it will announce that the protocol specification is being opened up to all users under a free license. UB-Mesh is meant to replace PCIe, CXL, NVLink, and TCP/IP with one protocol to cut latency, control costs, and improve reliability in gigawatt-class data centers. But will it gain traction?

"Next month we have a conference, where we are going to announce that the UB-Mesh protocol will be published and disclosed to anybody like a free license," said Heng Liao, chief scientist of HiSilicon, Huawei's processor arm. "This is a very new technology; we are seeing competing standardization efforts from different camps. […] Depending on how successful we are in deploying actual systems and demand from partners and customers, we can talk about turning it into some kind of standard."

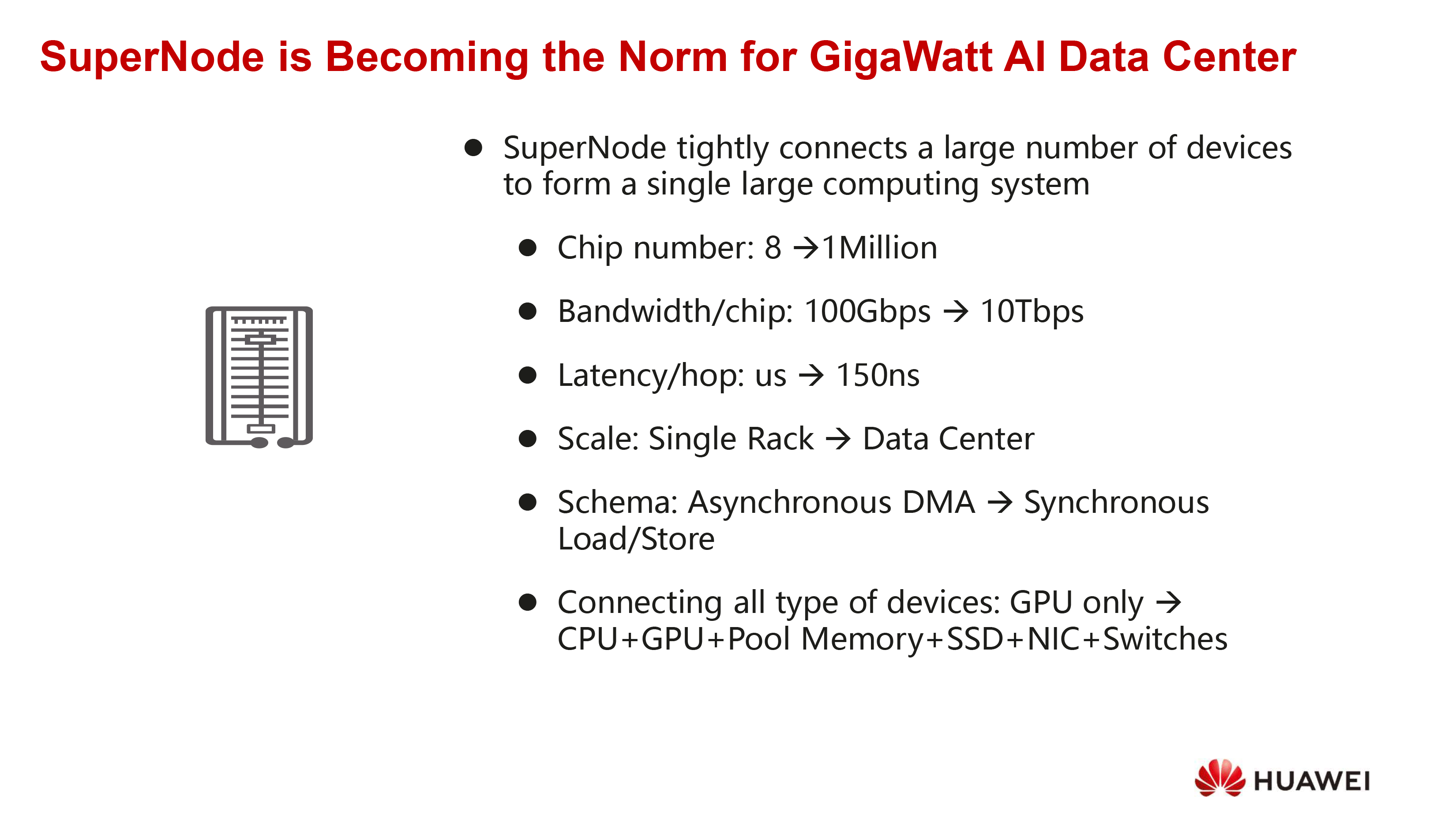

From a cluster to SuperNode

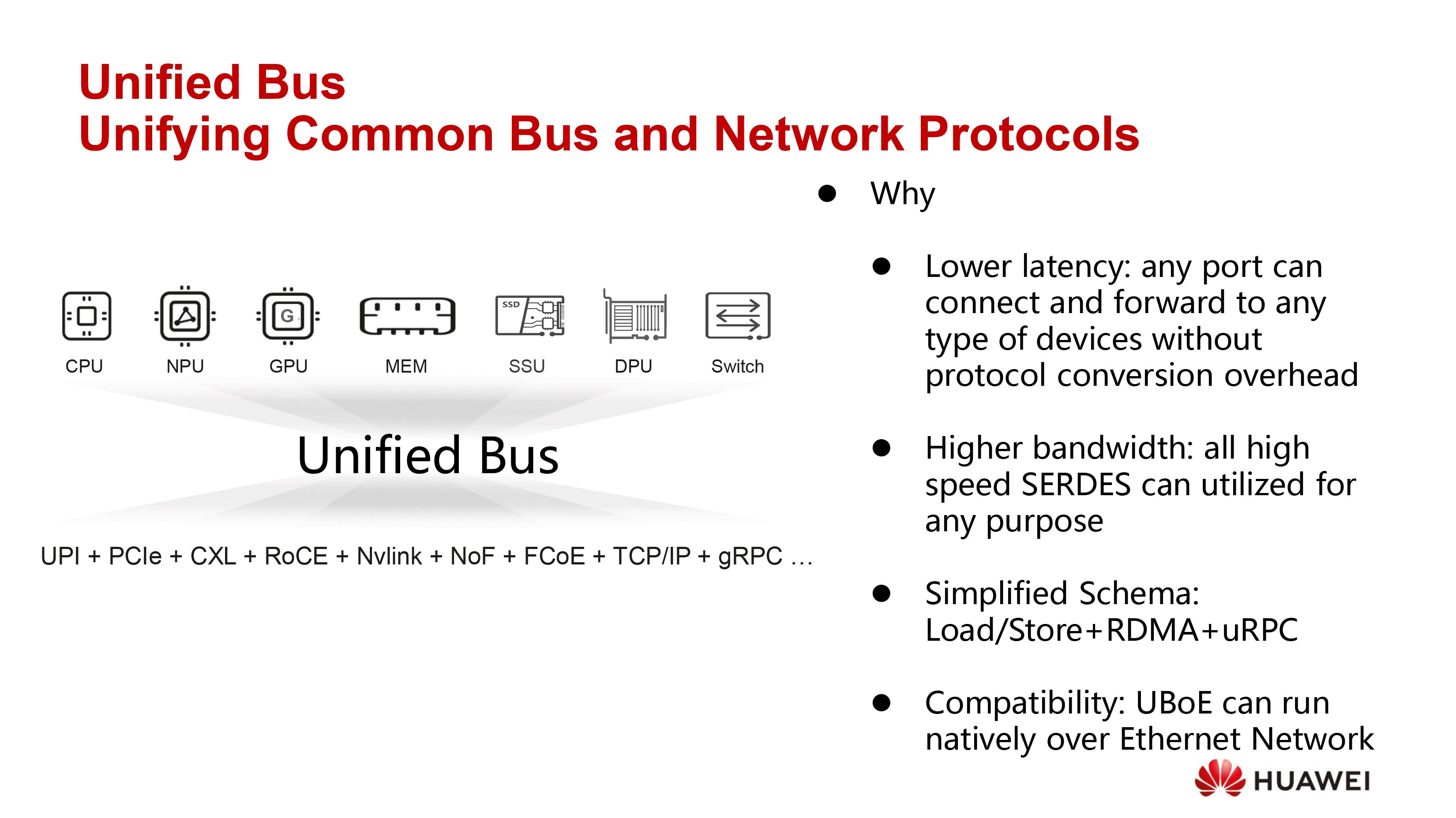

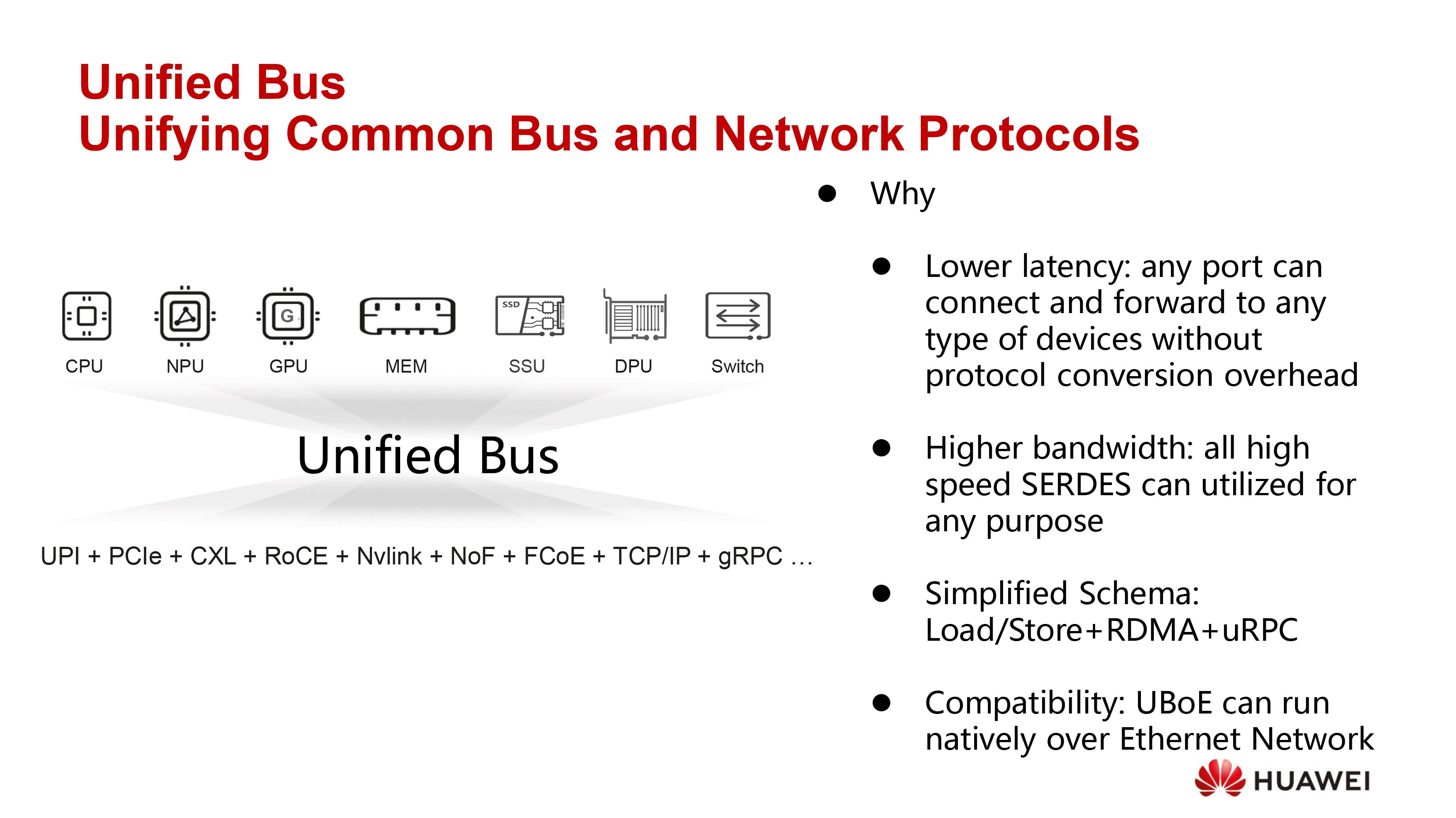

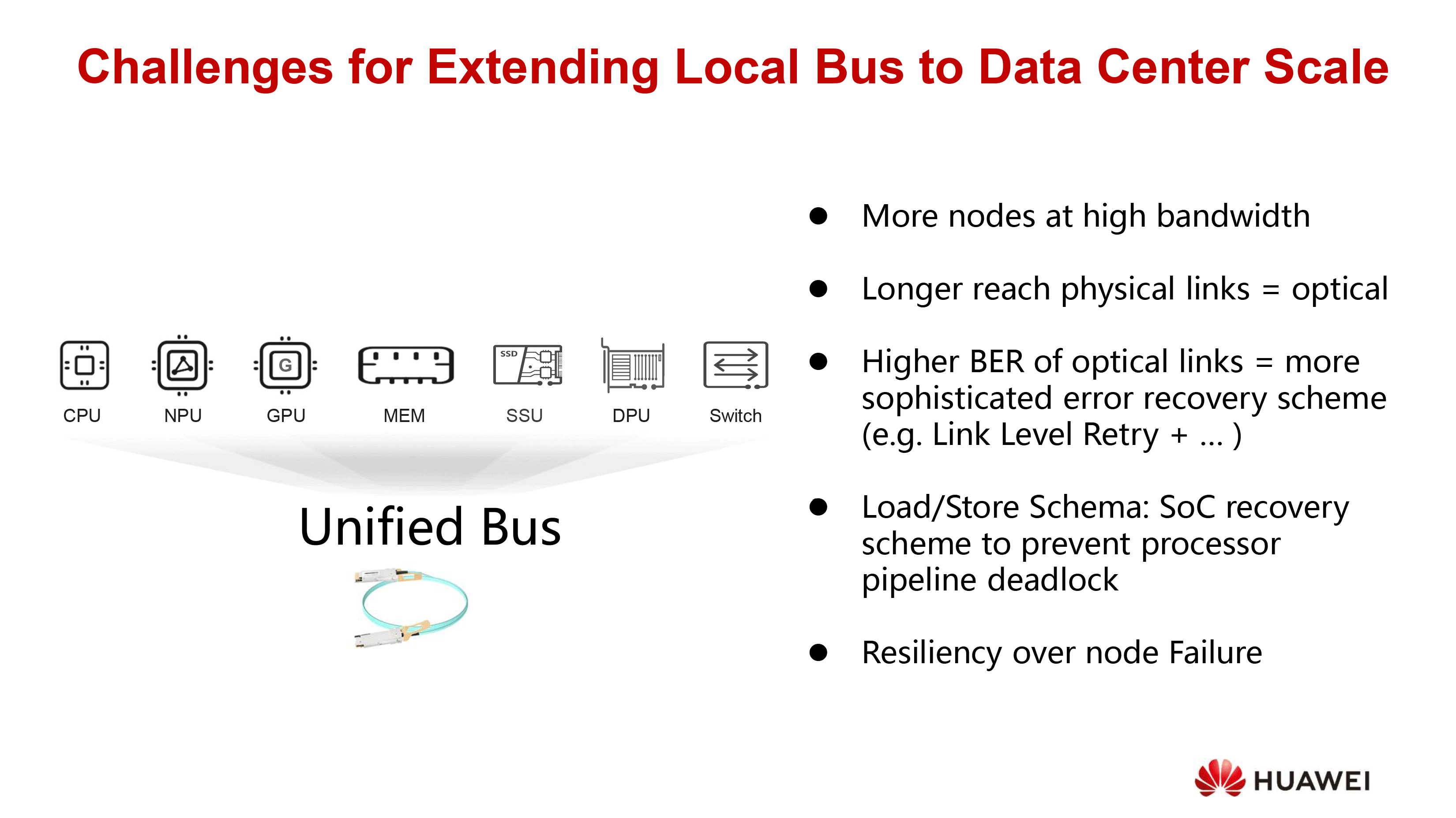

While AI data centers for training and inference should perform like one big inherently parallel processor, they consist of individual racks, servers, CPUs, GPUs, memory, SSDs, NICs, switches, and other components that connect to each other using different buses and protocols, such as UPI, PCIe, CXL, RoCE, NVLink, UALink, TCP/IP, and upcoming Ultra Ethernet, just to name a few. Protocol conversions require power, increase latency and cost, and introduce potential points of failure, all factors that can scale catastrophically in gigawatt-class data centers with millions of processors.

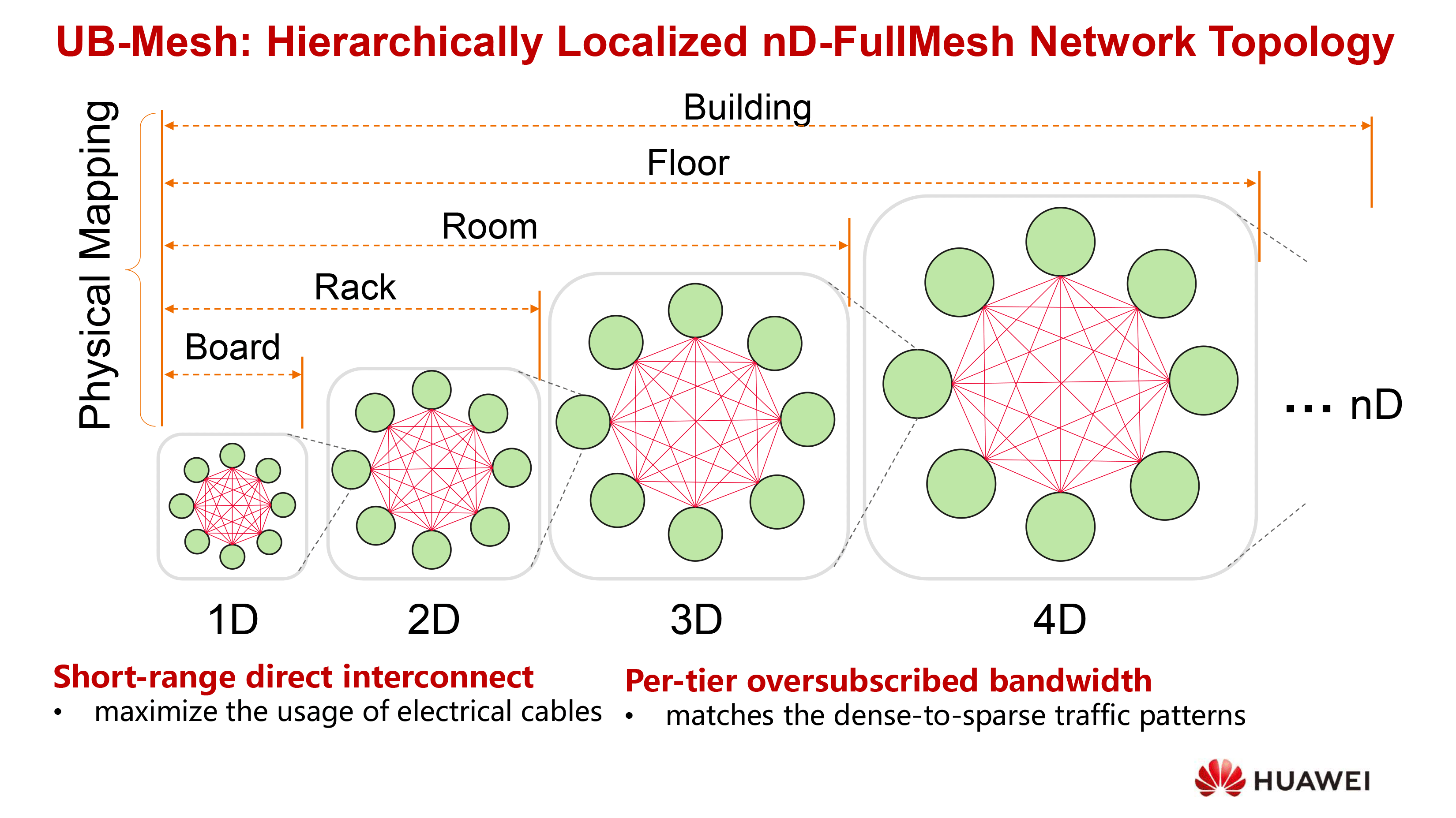

Instead of juggling a plethora of links and protocols, Huawei proposes one unified framework called UB-Mesh that enables any port to talk to any other without translation. That simplicity cuts out conversion delays, streamlines design, and still leaves room to operate over Ethernet when needed, essentially converting the whole data center into a UB-Mesh-connected coherent SuperNode.

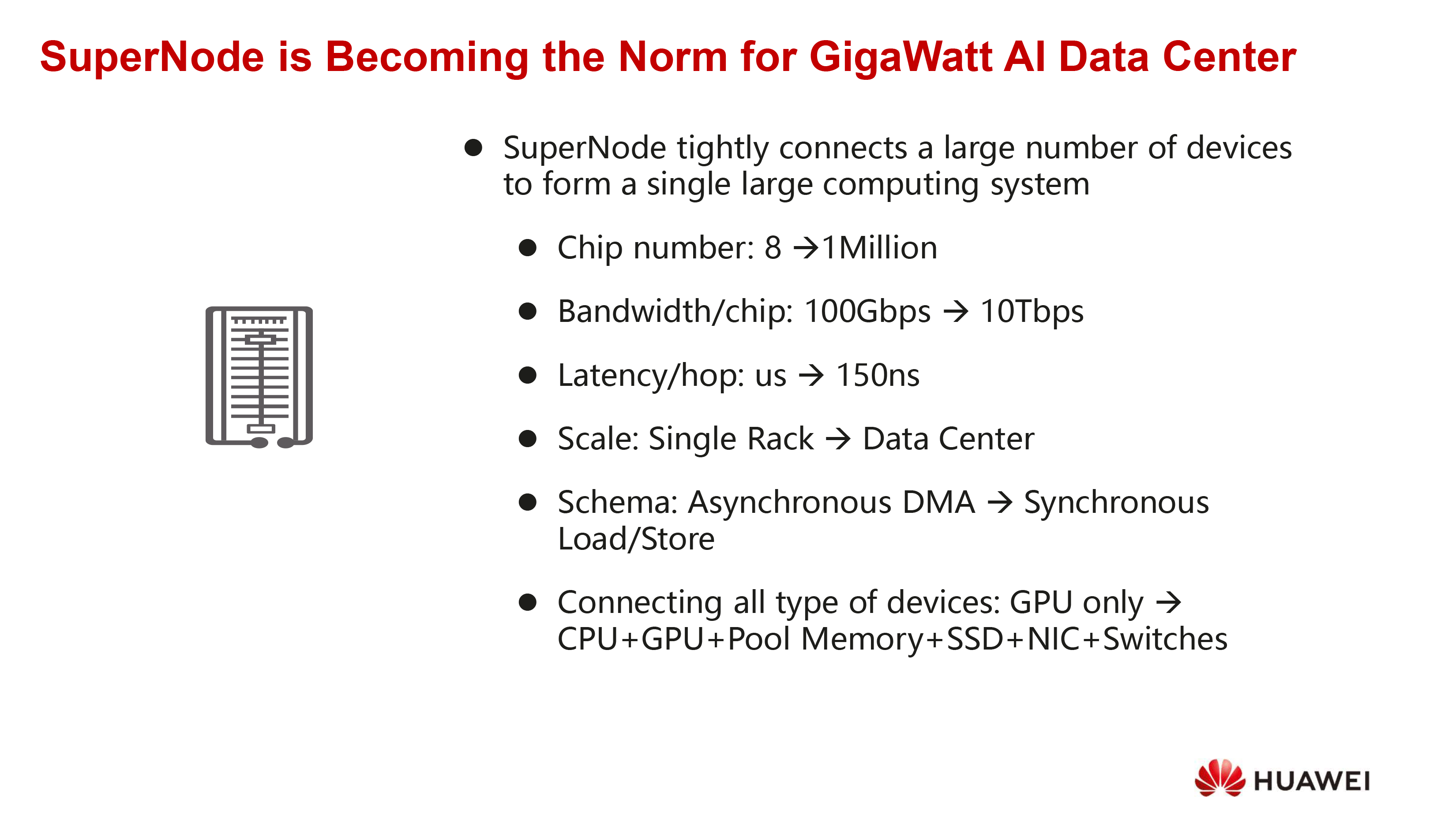

Huawei defines SuperNode as a data center-scale AI architecture that unifies up to 1,000,000 processors (CPUs, GPUs, or NPUs), pooled memory, SSDs, NICs, and switches into one system. In this design, per-chip bandwidth rises from 100 Gbps to 10 Tbps (1.25 TB/s, beyond what even PCIe 8.0 is set to provide), hop latency is reduced from microseconds to ~150 ns, and the overall design shifts from asynchronous DMA toward synchronous load/store semantics.

This structure is designed to lower latency, allow all high-speed SERDES connections to be reused flexibly, and even support operation over Ethernet for backward compatibility.
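The per-chip bandwidth figures quoted above can be checked with a quick unit conversion. The following is only a minimal sketch that assumes decimal (SI) prefixes and 8 bits per byte; the function name is illustrative and does not come from Huawei's materials.

```python
def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0


# Figures quoted in the article; decimal prefixes are an assumption.
legacy_gbps = 100.0        # starting per-chip bandwidth
supernode_gbps = 10_000.0  # 10 Tbps target per chip

print(gbps_to_gigabytes_per_s(legacy_gbps))     # 12.5 (GB/s)
print(gbps_to_gigabytes_per_s(supernode_gbps))  # 1250.0 (GB/s), i.e. 1.25 TB/s
print(supernode_gbps / legacy_gbps)             # 100.0 (times higher)
```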

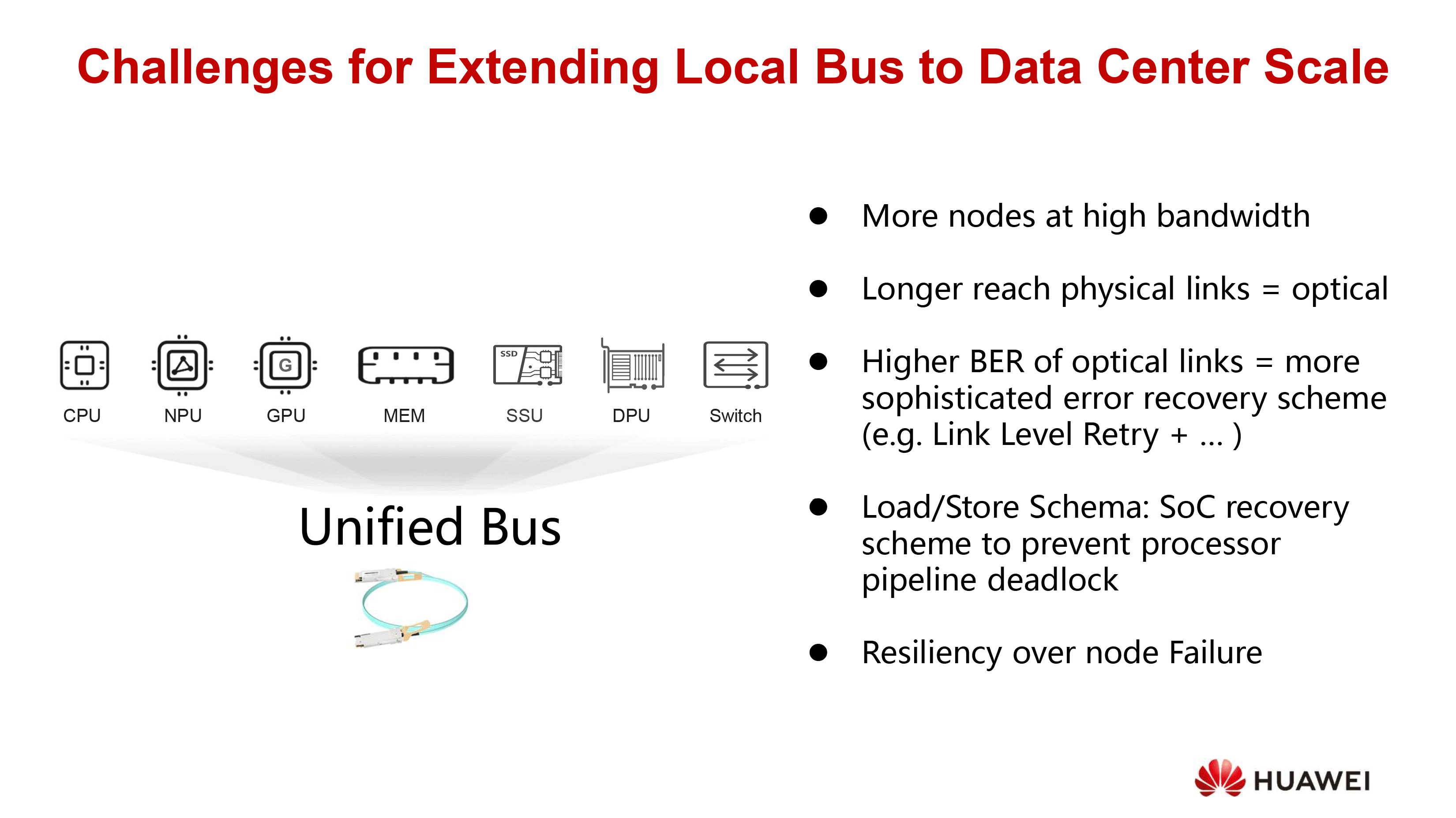

New technical challenges

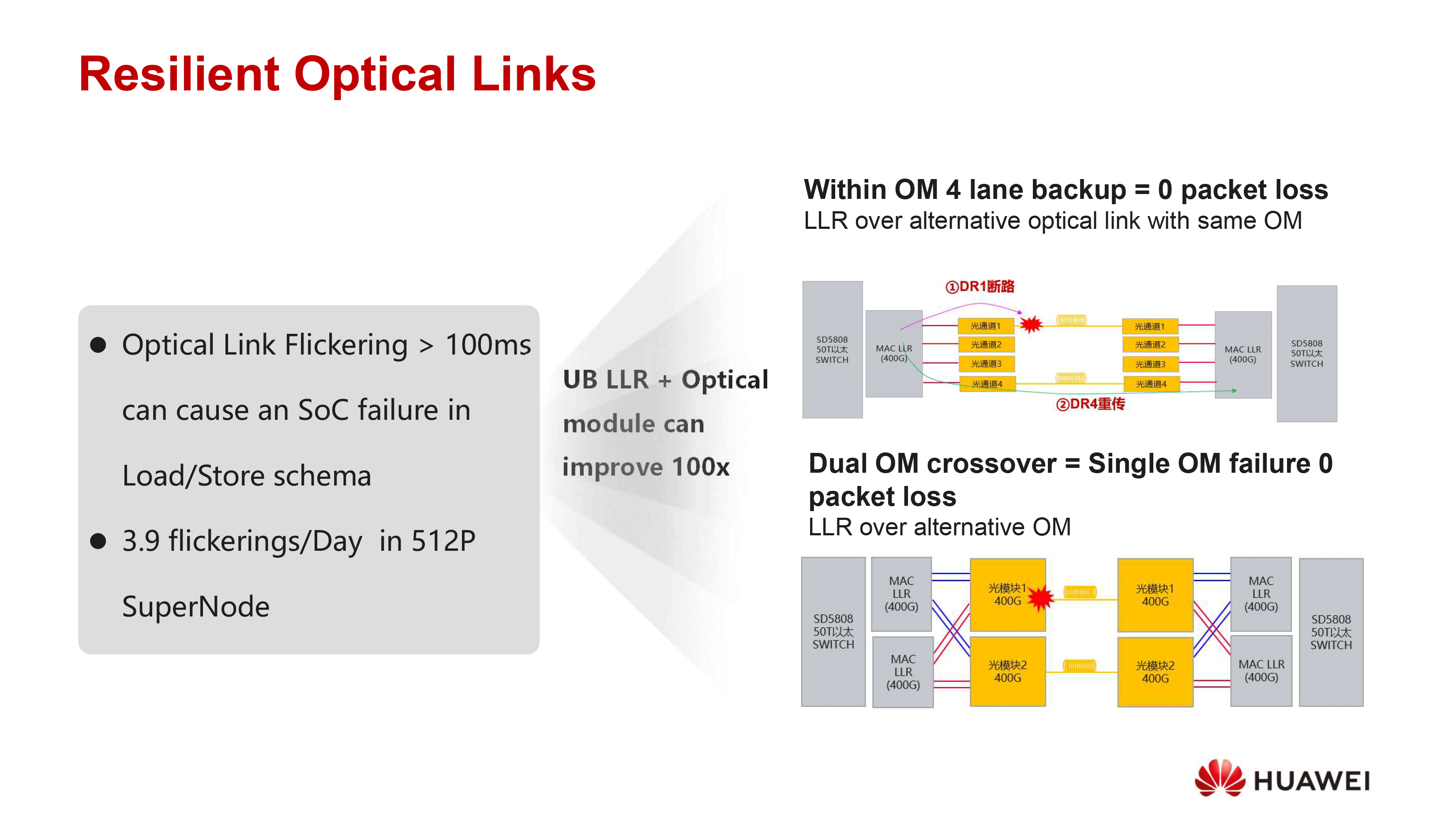

However, Huawei admits that scaling this concept across a data center introduces new challenges, particularly the move from copper (which still connects devices inside the rack) to pluggable optical links. Fiber optics are unavoidable for long distances but come with error rates that are far higher than those of electrical connections. To manage this, Huawei proposes link-level retry mechanisms, backup lanes within optical modules, and crossover designs that connect controllers to multiple modules. These measures are designed to ensure continuous operation even when individual links or modules fail, though they obviously increase costs.
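To illustrate why link-level retry helps on an error-prone optical link, here is a minimal sketch of a generic retry model. It is not Huawei's mechanism, which the presentation does not detail: it assumes each transmission of a frame fails independently with a fixed probability, and the function names and error rates are hypothetical.

```python
import random


def send_with_retry(frame_error_rate, max_attempts, rng):
    """Retransmit a frame until it gets through or attempts run out.

    Returns the number of attempts used, or None if every attempt failed.
    Assumes independent errors per attempt (a simplification).
    """
    for attempt in range(1, max_attempts + 1):
        if rng.random() >= frame_error_rate:
            return attempt  # frame delivered on this attempt
    return None  # link gave up; a higher layer would have to recover


def residual_failure_probability(frame_error_rate, max_attempts):
    """Probability that all attempts fail under the independence assumption."""
    return frame_error_rate ** max_attempts


# Hypothetical numbers purely for illustration.
rng = random.Random(42)
print(send_with_retry(0.01, 3, rng))
print(residual_failure_probability(0.01, 3))
```

Under this model each extra retry multiplies the residual failure probability by the frame error rate, at the cost of added latency on the frames that need retransmission.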


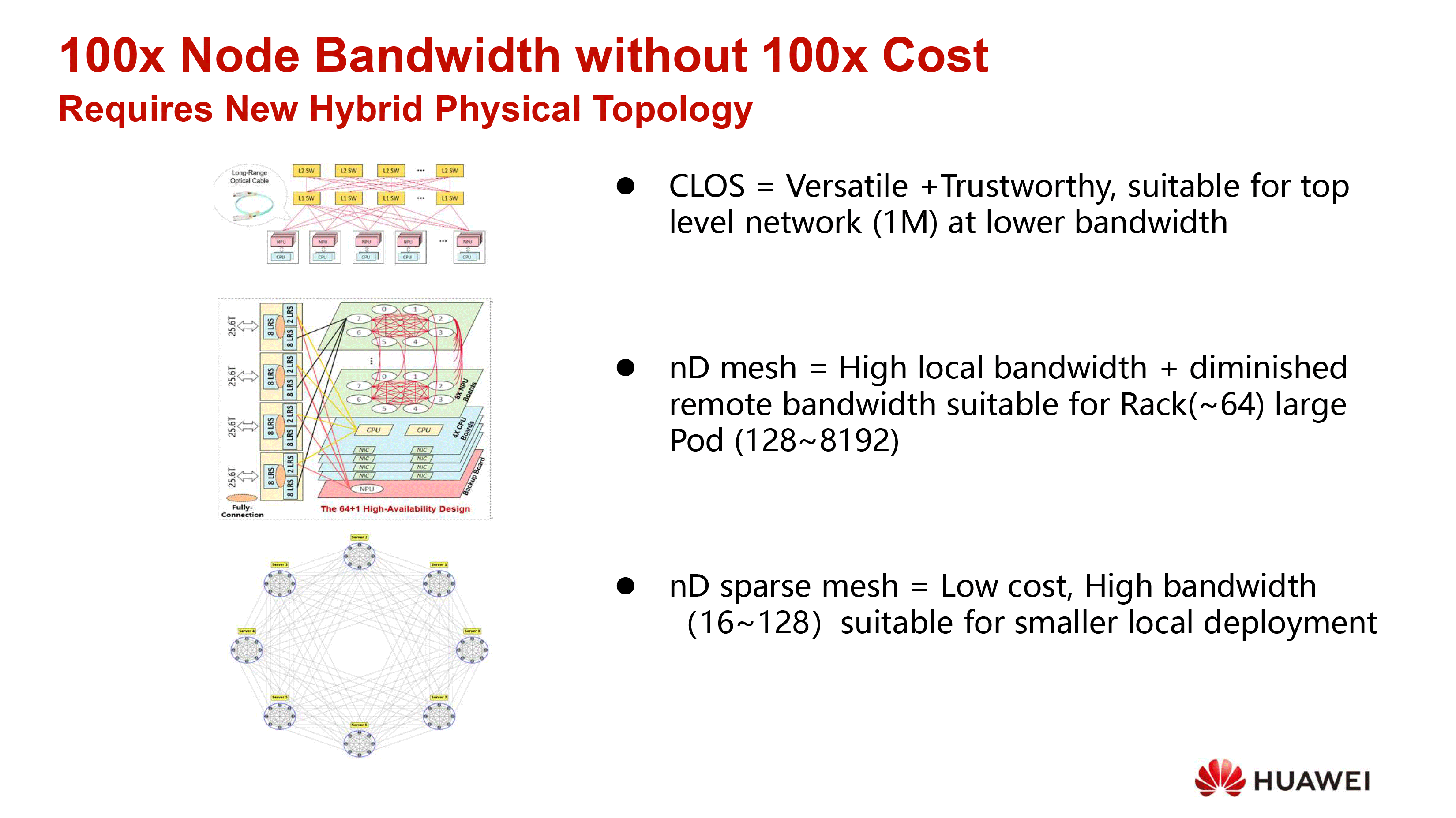

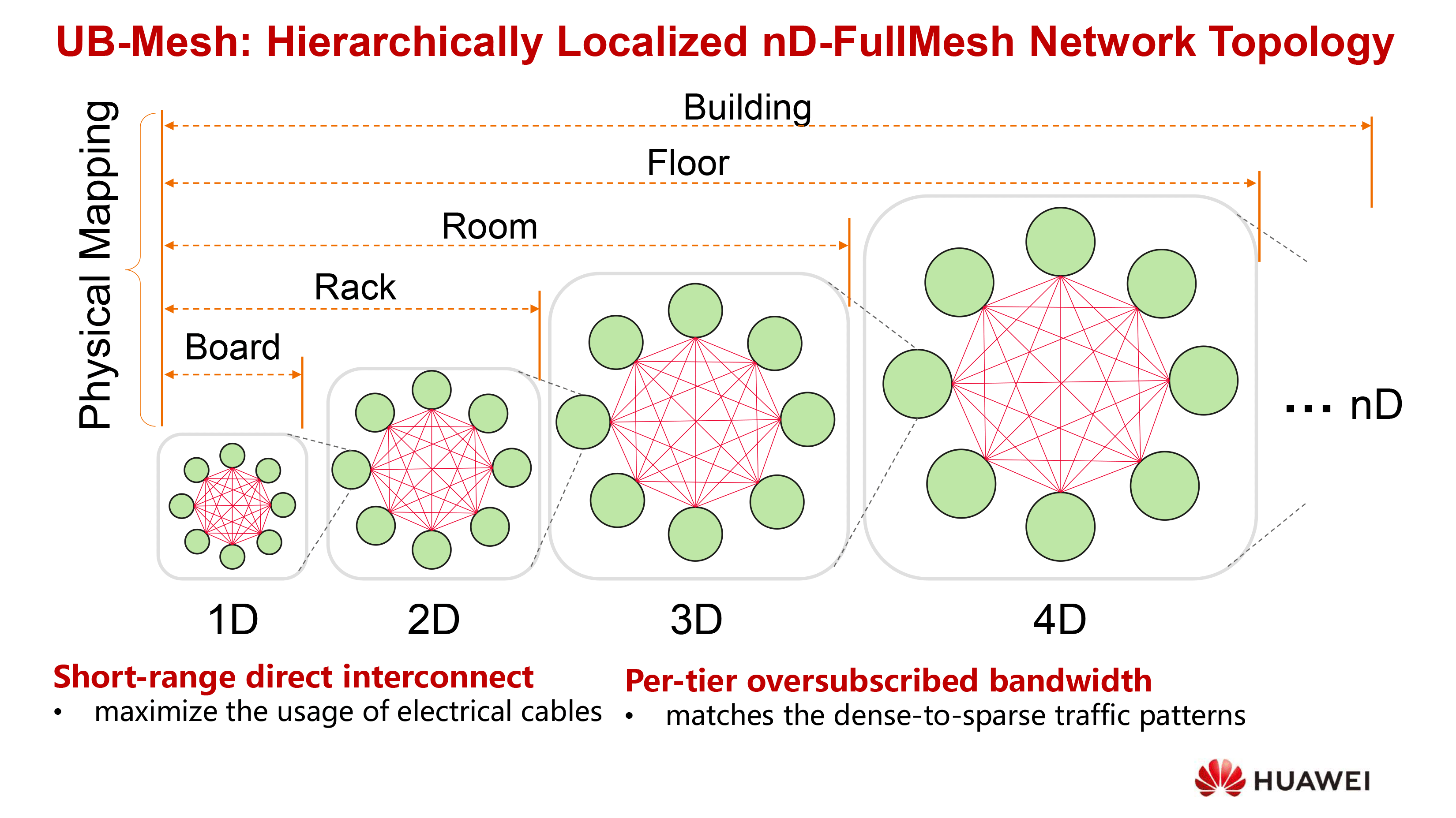

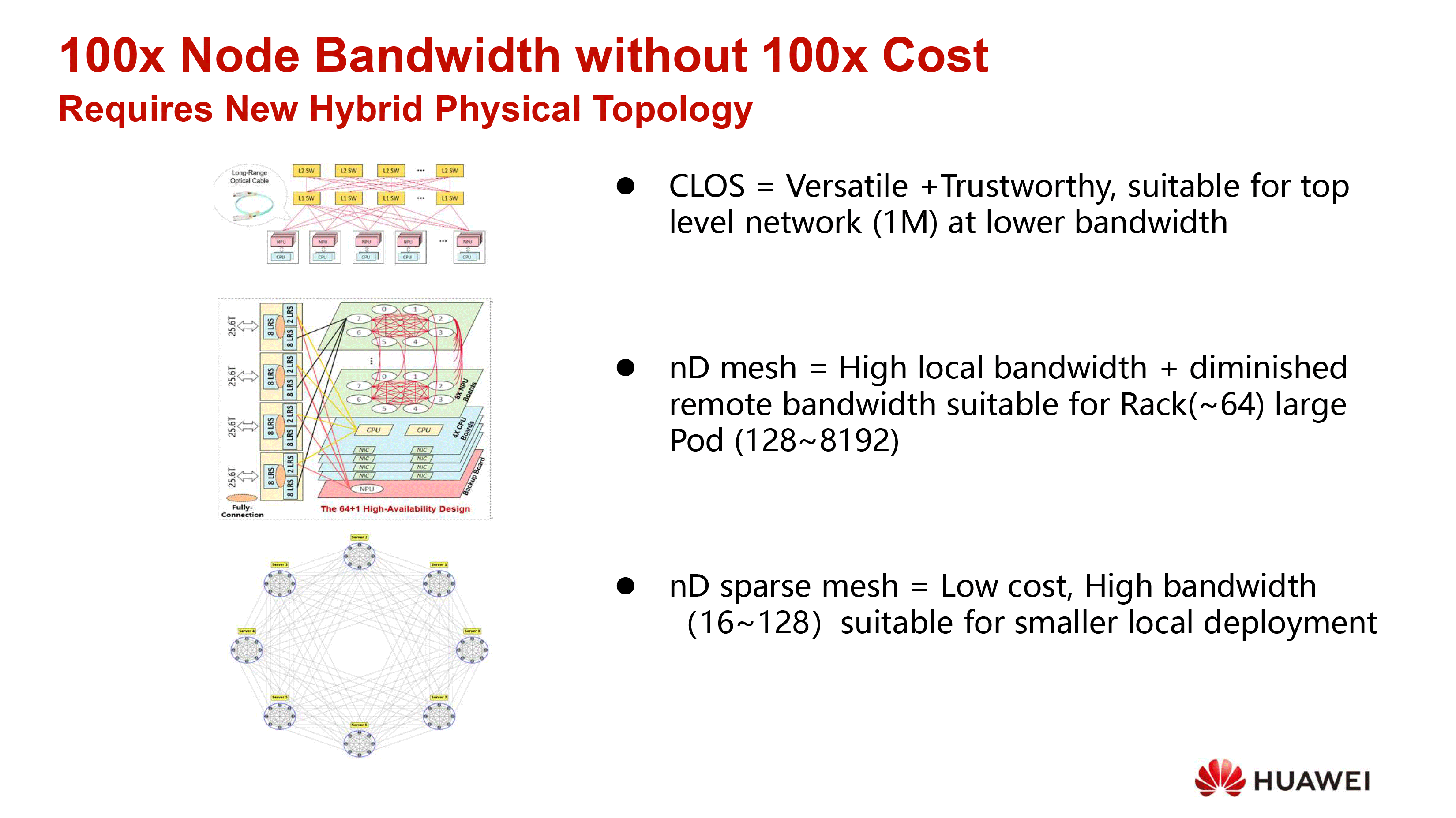

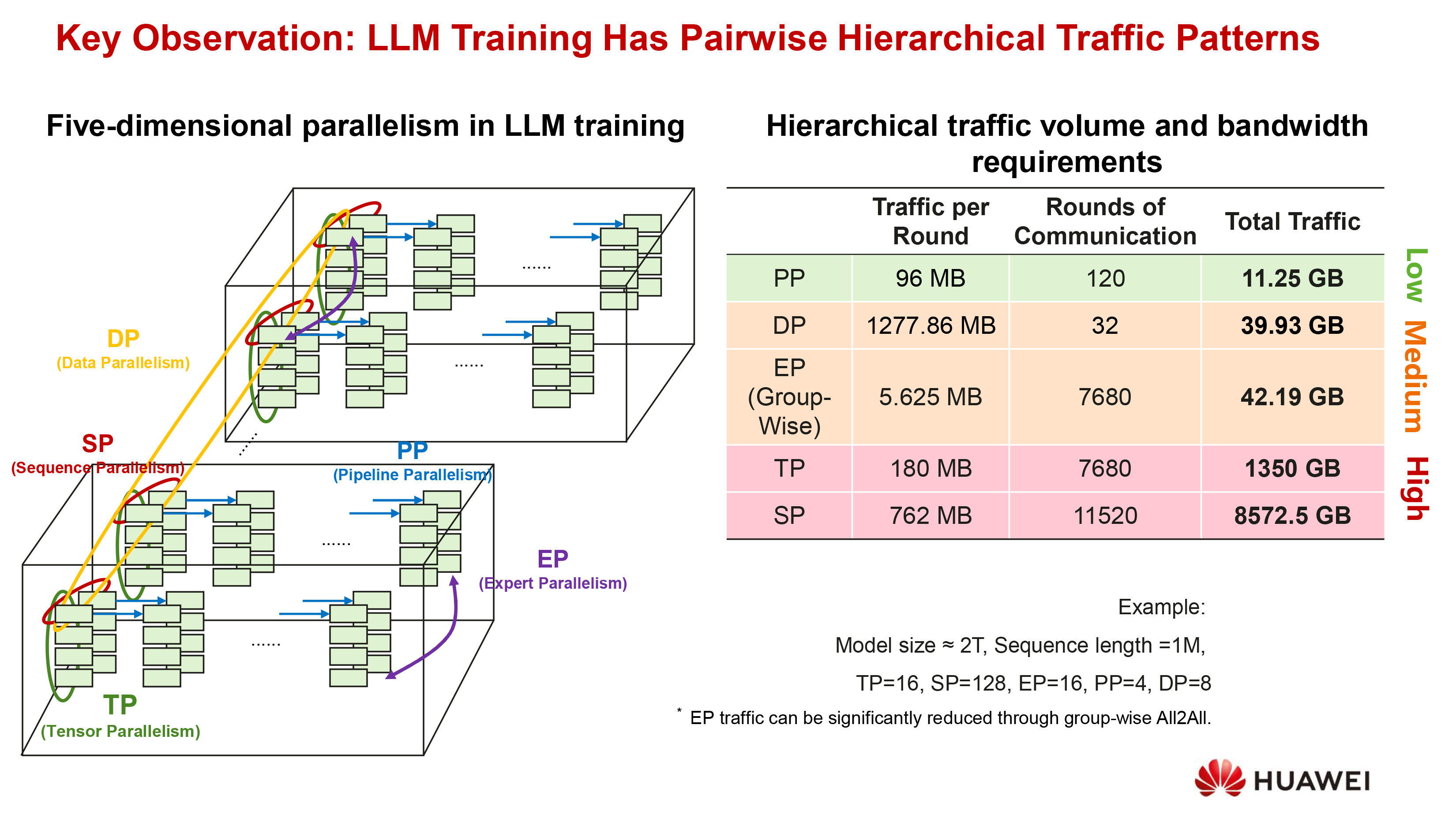

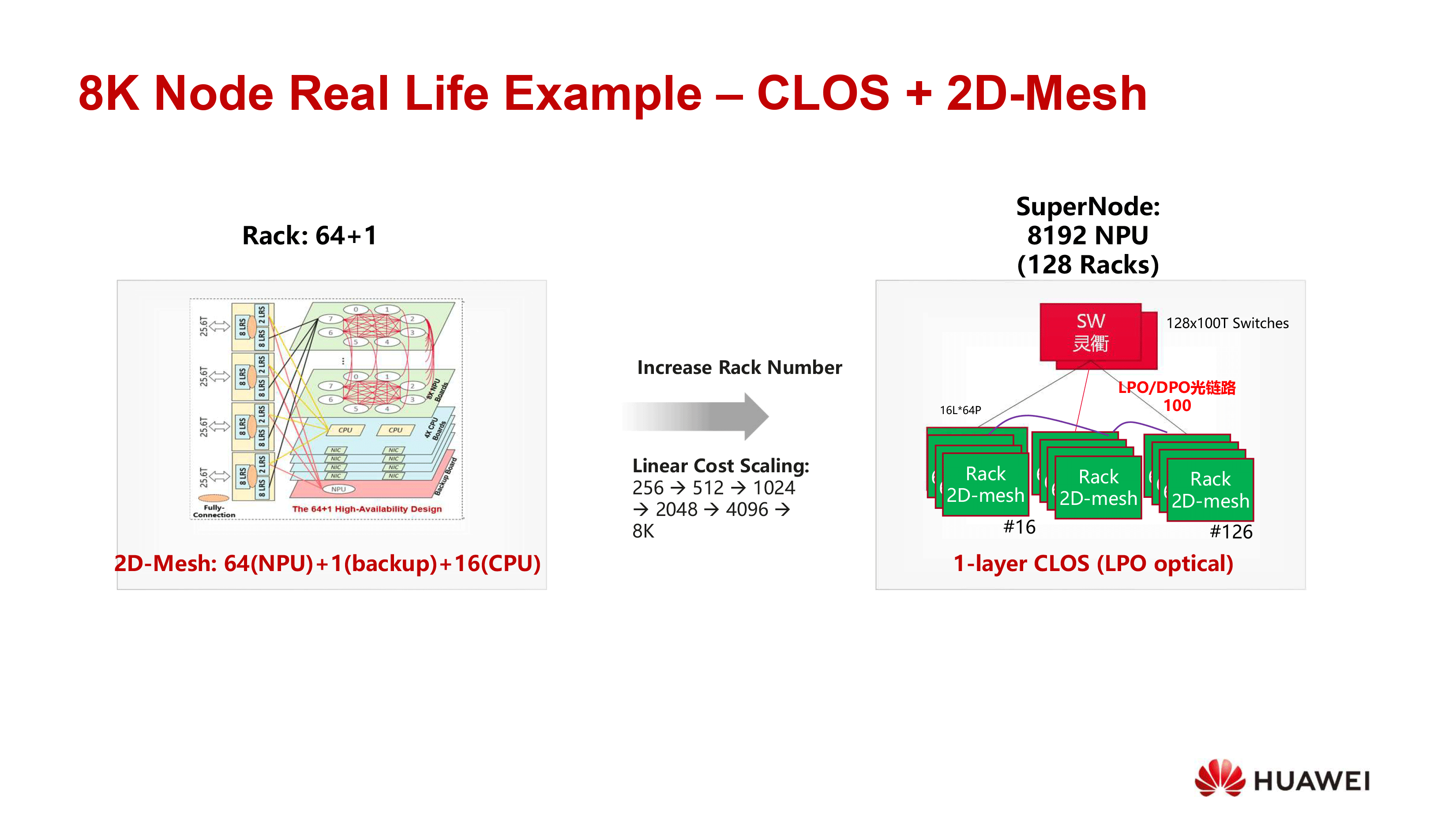

The network topology in UB-Mesh is hybrid. At the top level, a CLOS structure would tie together racks across a hall. Beneath that, multi-dimensional meshes would link tens of nodes inside each rack. This hybrid model is meant to avoid the runaway expense of conventional designs as systems grow to tens or hundreds of thousands of nodes.

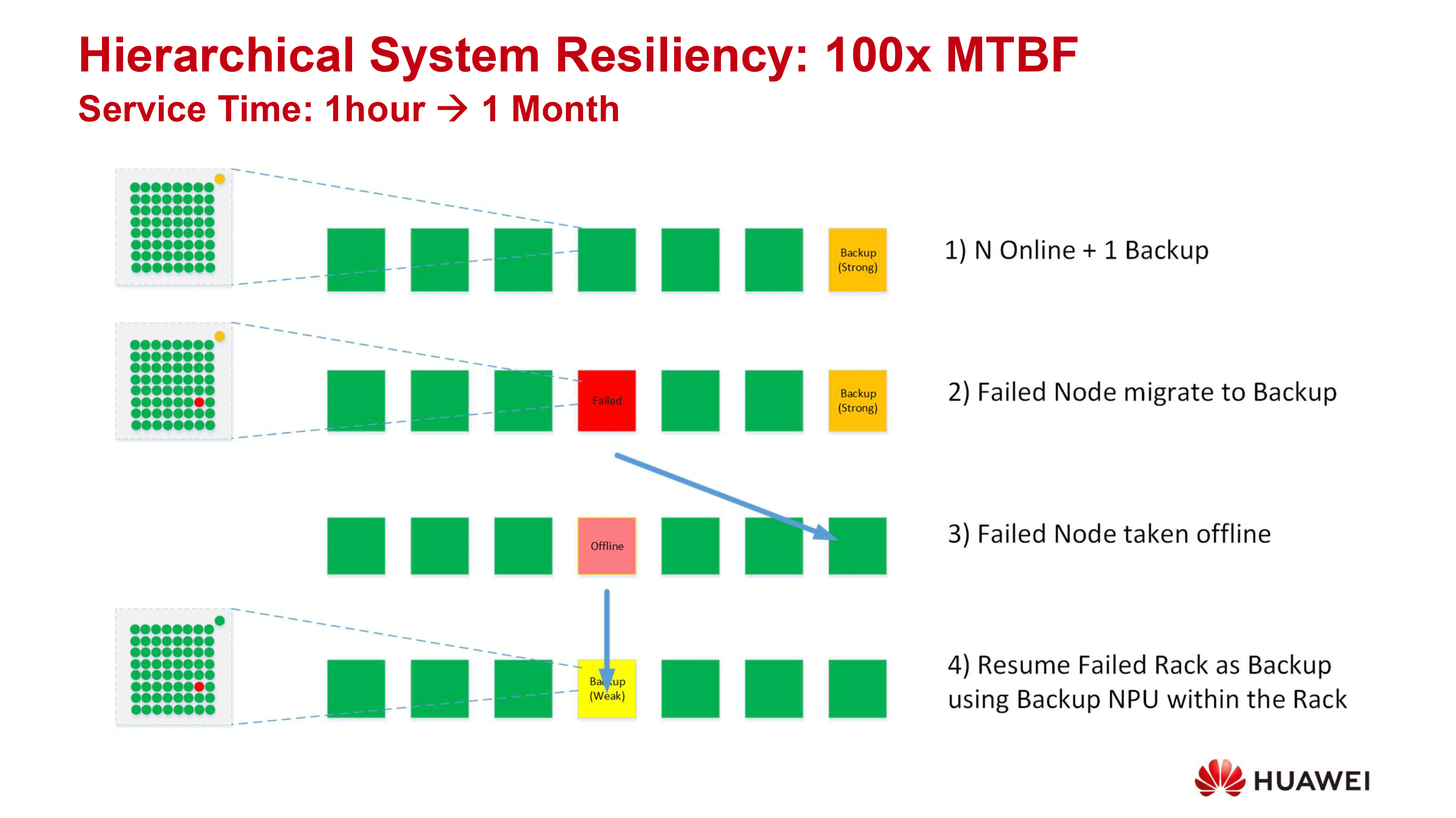

Also, reliability has to be implemented beyond individual links. Huawei outlined a system model where hot-spare racks automatically take over when another rack fails. Then faulty racks are repaired and rotated back in to maintain availability. This design extends mean time between failures by orders of magnitude, a scale of improvement necessary for million-chip systems, according to Huawei.
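The effect of hot-spare racks can be sketched with a simple static probability model. This is an assumption-laden illustration rather than Huawei's system model: it treats each rack as independently unavailable with a fixed probability, ignores repair and failover times, and uses made-up numbers.

```python
from math import comb


def outage_probability(active_racks, spare_racks, rack_down_prob):
    """Probability that fewer than `active_racks` racks are healthy.

    The system stays up as long as at most `spare_racks` of the
    (active + spare) racks are down at the same time.
    """
    total = active_racks + spare_racks
    p_up = sum(
        comb(total, k) * rack_down_prob**k * (1 - rack_down_prob) ** (total - k)
        for k in range(spare_racks + 1)
    )
    return 1.0 - p_up


# Hypothetical figures: 64 active racks, each down 0.1% of the time.
for spares in (0, 1, 2):
    print(spares, outage_probability(64, spares, 0.001))
```

In this toy model every added spare cuts the outage probability sharply, which matches the direction of Huawei's claim, though the real improvement depends on failure correlation and repair speed.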

Lower costs

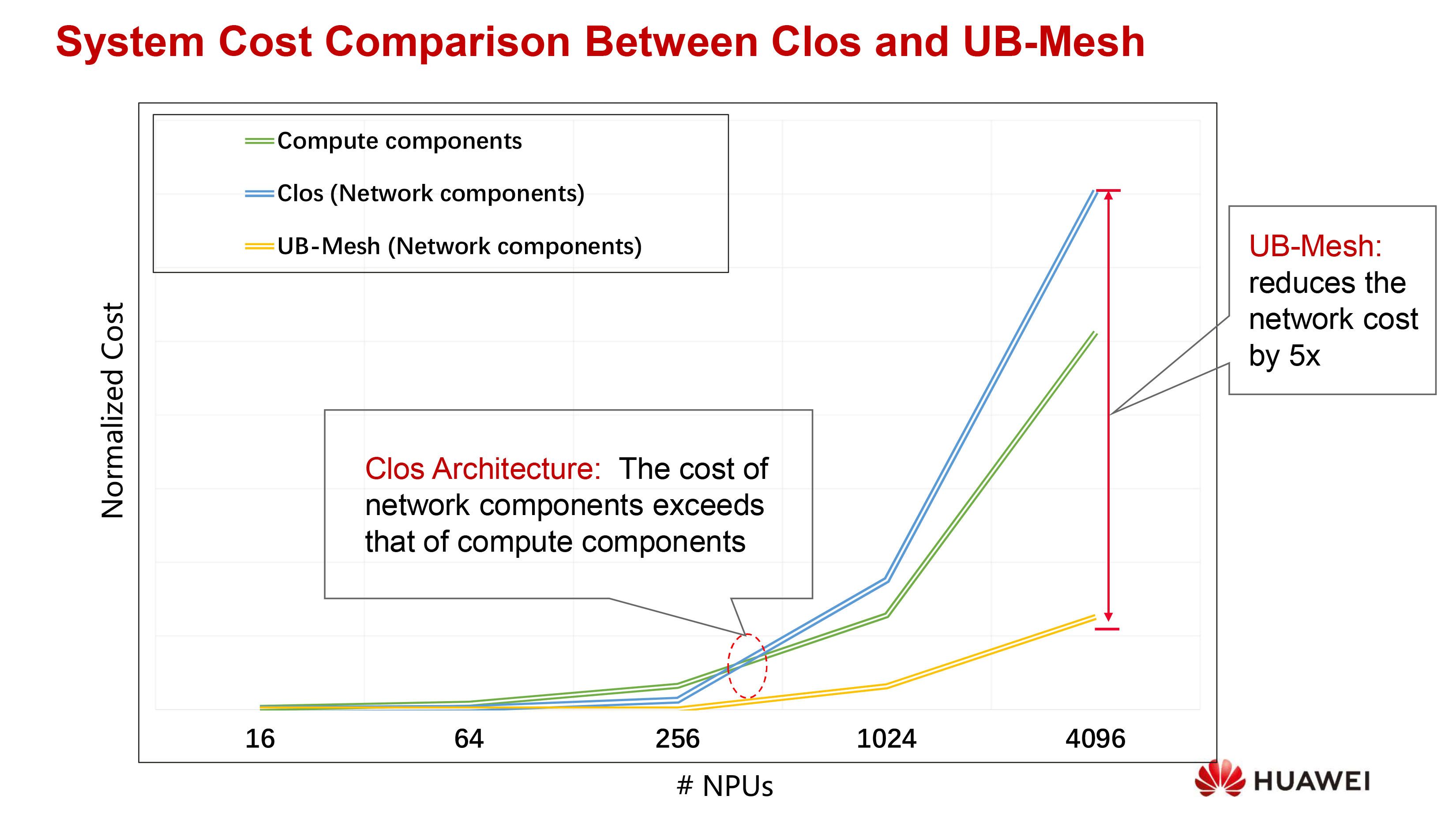

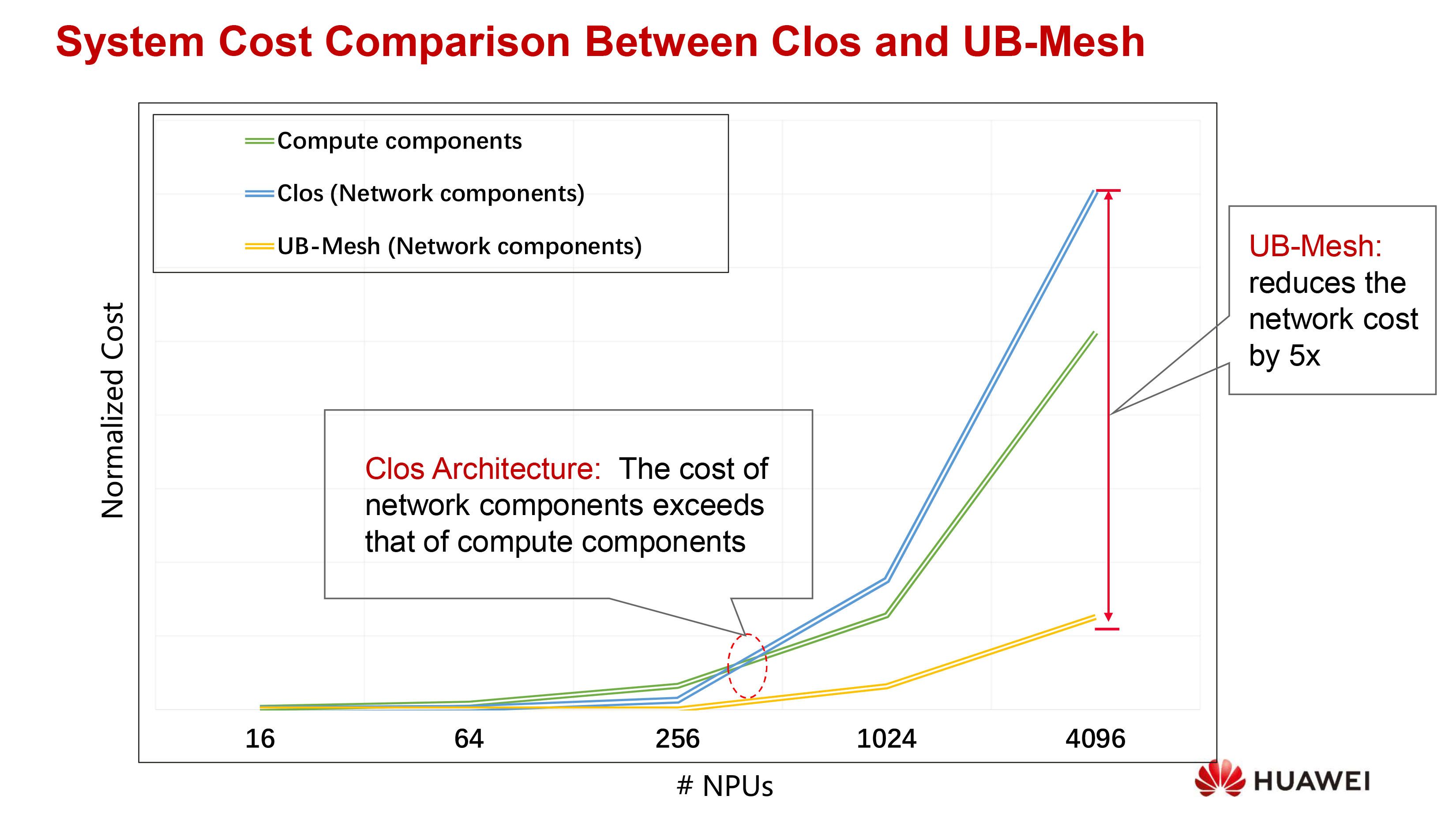

From a cost perspective, the difference is stark, based on data from Huawei. Traditional interconnects tend to see linear growth in costs as the number of nodes increases, which means that they can eventually eclipse the price of AI accelerators (such as Nvidia's H100 or B200) themselves. UB-Mesh, by contrast, scales in a sub-linear fashion, adding capacity without proportionally increasing cost. Huawei even pointed to a practical 8,192-node system combining CLOS and 2D mesh elements as proof of feasibility.

Strategic implications

With UB-Mesh and the SuperNode, Huawei is offering a systems-level architecture designed to support massive AI clusters in China and abroad. If the technologies take off, Huawei will reduce, or even end, its dependence on Western standards like PCIe, NVLink, UALink, and even TCP/IP inside its next-generation data centers. Rather than competing with AMD, Intel, and Nvidia on CPUs, GPUs, or even rack-scale solutions, Huawei is trying to build a data center-scale offering.

The question is whether anyone beyond Huawei will adopt the initiative, since it is unclear whether the company's customers will want to source their data center infrastructure from a single supplier. To that end, Huawei is opening up the UB-Mesh link protocol for the world to evaluate. If Huawei is successful with its own deployments and there is enough interest from third parties, then it can turn UB-Mesh into a standard and perhaps even standardize the SuperNode architecture itself.

However, it remains to be seen whether the industry is interested. Nvidia relies on its own NVLink connections inside the rack and Ethernet or InfiniBand across the data center. Other companies like AMD, Broadcom, and Intel are pushing UALink for intra-pod communications and Ultra Ethernet for data center-wide connections. Both technologies are standardized and supported by a wide range of companies, enabling flexibility and reducing costs.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Jony1234

Anton Shilov has a systematic ill will toward technology and advances made by Chinese companies, constantly using language laden with prejudice or underestimation:

"Chinese vendor claims..." (dubious tone)

"Allegedly achieves..."

"Without independent verification..."

"Geopolitical motivations may be driving this narrative."

But when it comes to NVIDIA:

"NVIDIA unveiled a breakthrough..."

"AMD takes the fight to NVIDIA..."

❌ Applies this rigor selectively

❌ Ignores real adoption outside the West

❌ Underestimates systemic innovation (software + architecture)

❌ Repeats geopolitical narratives without question

In fact, Tom's Hardware seems unprofessional in many "guesses".

bit_user

The title said: "Huawei to open-source its UB-Mesh"

Open standard. I've been harping about this for years. I'm losing hope that the writers/editors here will ever get it.

In general, "open source" only applies when it's the design of a specific software package or chip that's being made public. An "open standard" is when you publish the specification, so people can create their own implementations of it.

I don't see why that's so hard to understand or remember. One is literally the source code, while another is some sort of interface standard.

Tagging @Paul Alcorn on this.

The article said: "hop latency reduced from microseconds to ~150 ns"

CXL already does this.

The article said: "Fiber optics are unavoidable for long distances but comes with error rates that are way higher than electrical connections"

Do you mean error or failure rates? It sounds like the latter - there's a difference!

The article said: "Traditional interconnects tend to see linear growth in costs as the number of nodes increases, which means that they can eventually eclipse the price of AI accelerators (such as Nvidia's H100 or B200) themselves. UB-Mesh, by contrast, scales in a sub-linear fashion, adding capacity without proportionally increasing cost."

They don't seem to show sub-linear scaling. The graph you cite is "normalized", presumably showing the per-node cost, as the number of nodes increases.

In general, the only way I can see how connectivity cost could ever scale sub-linearly is if the relative amount of redundancy decreases as you scale. This could front-load the cost of the redundancy in a way that can offset the additional switching overhead from adding more nodes, at a certain point. Even then, I think the overall trend would still be super-linear, once you scale up large enough.

The article said: "it remains to be seen whether the industry is interested. Nvidia relies on its own NVLink connections inside the rack and Ethernet or InfiniBand across the data center. Other companies like AMD, Broadcom, and Intel are pushing UALink for inter-pod communications and Ultra Ethernet for data center-wide connections."

The old saying goes: "never bet against Ethernet!"

I think the wildcard would be if there's government support behind UB-Mesh. That could make it ubiquitous in Chinese datacenters (and any markets they expand into), eventually forcing anyone who wants into that market to adopt the standard.

bit_user

Jony1234 said: language laden with prejudice or underestimation. "Chinese vendor claims..." (dubious tone) "Allegedly achieves..." "Without independent verification..." "Geopolitical motivations may be driving this narrative."

It's not bias, it's factual. When reporting on a press release, everything that hasn't been independently verified needs to be caveated. This is what good journalistic practice looks like.

Now, if you can actually point to independent verification of these claims, that would be helpful and most welcome. But, I don't think you can, because essentially what they're announcing is a plan, and not actual products or implementations.

I'm sure that you can find similar caveats in his reporting of announcements from non-Chinese companies. For instance, here's an article about Tachyum's Prodigy CPU:

"However, despite these ambitious claims, no prototypes have been publicly demonstrated to confirm that the processor's architecture is both functional and capable of delivering these results."

https://www.tomshardware.com/pc-components/cpus/tachyum-builds-the-final-prodigy-fpga-prototype-delays-prodigy-processor-to-2025