Meta to build 2GW data center with over 1.3 million Nvidia AI GPUs — invest $65B in AI in 2025

Meta plans to invest between $60 billion and $65 billion in 2025 to expand its artificial intelligence (AI) and other infrastructure, Reuters reports, citing Mark Zuckerberg's post on Facebook. This marks a significant leap from its estimated $38 billion to $40 billion in spending in 2024. The company aims to solidify its competitive position in the AI industry against rivals like Google, Microsoft, and OpenAI. However, even $65 billion falls short of Microsoft's planned $80 billion.

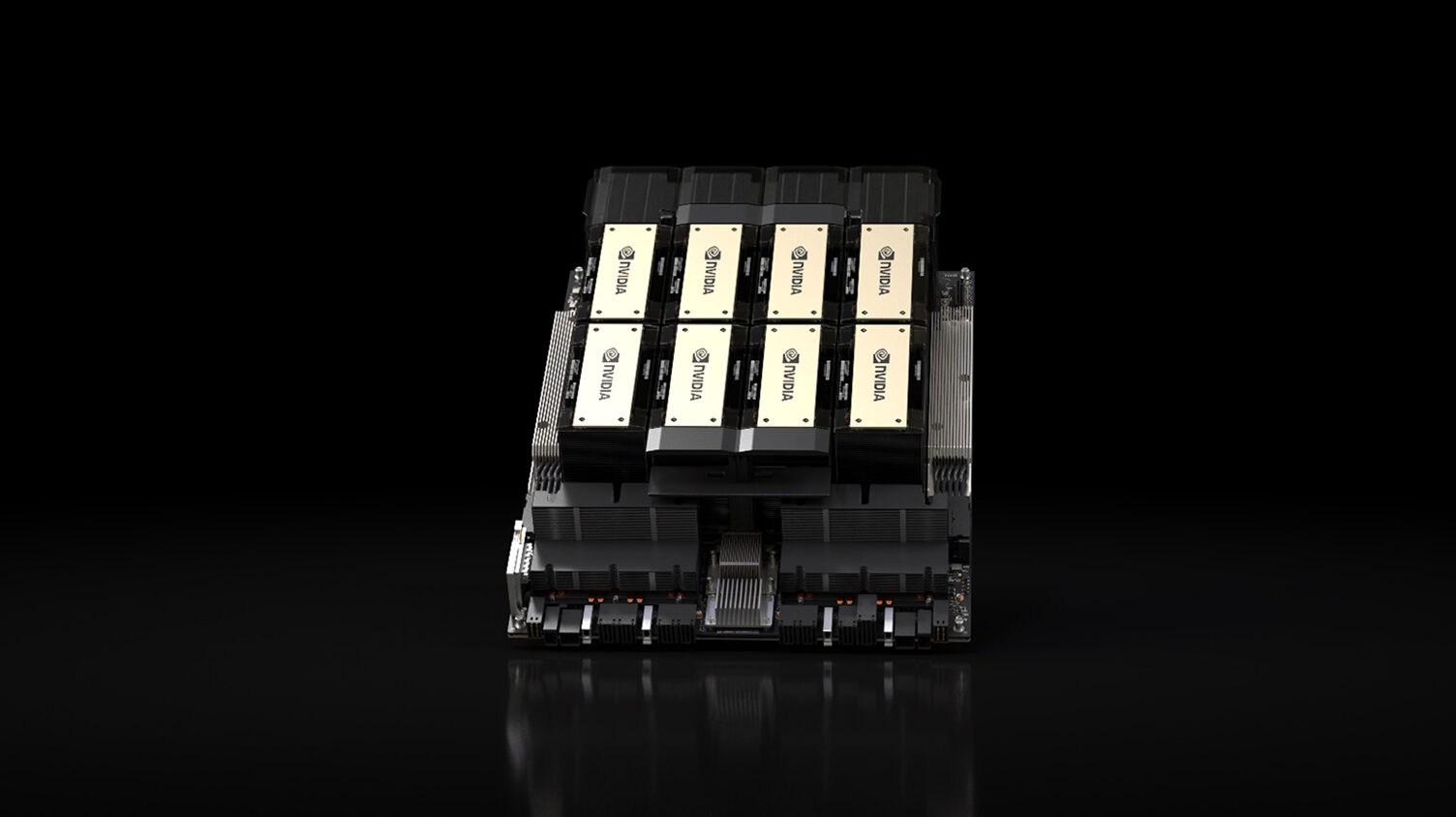

The report says that Meta will construct a 2GW data center using some 1.3 million Nvidia [presumably H100] GPUs as part of the plan. The spending probably also covers the company's custom data center-grade processors, though we are speculating here.
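As a rough back-of-the-envelope check on those figures, the sketch below divides the reported 2GW of facility power by the reported 1.3 million GPUs. It assumes, purely for illustration, that the full 2GW is spread evenly across the GPUs; in practice, part of that power goes to cooling, networking, and host CPUs. The function name `power_per_gpu_watts` is hypothetical.

```python
def power_per_gpu_watts(facility_watts: float, gpu_count: int) -> float:
    """Return the average facility power available per GPU, in watts."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return facility_watts / gpu_count


# Figures as reported in the article; the even split is an assumption.
FACILITY_WATTS = 2e9      # 2GW data center
GPU_COUNT = 1_300_000     # about 1.3 million GPUs

budget = power_per_gpu_watts(FACILITY_WATTS, GPU_COUNT)
print(f"Roughly {budget:.0f} W of facility power per GPU")
```

Under that assumption, the budget works out to about 1.5kW per GPU, a figure that would have to cover everything in the facility and not just the accelerator itself.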

"We are planning to invest $60 billion — $65 billion in CapEx this year while also growing our AI teams significantly, and we have the capital to continue investing in the years ahead," Zuckerberg said in a Facebook post. "This is a massive effort, and over the coming years it will drive our core products and business, unlock historic innovation, and extend American technology leadership."

The announcement comes amid escalating competition in AI investments. Microsoft is allocating $80 billion for data centers in fiscal 2025, Amazon expects to exceed $75 billion in spending for the same year, and OpenAI, SoftBank, and Oracle have committed $500 billion to their Stargate initiative (though it remains to be seen where exactly that Stargate money will come from). According to Reuters, Meta's planned spending far exceeds analyst estimates of $50.25 billion for the company's 2025 capital expenditures.

"This will be a defining year for AI. In 2025, I expect Meta AI will be the leading assistant serving more than 1 billion people, Llama 4 will become the leading state of the art model, and we'll build an AI engineer that will start contributing increasing amounts of code to our R&D efforts," Zuckerberg wrote.

Unlike its rivals, Meta offers open access to its Llama AI models to end users in the U.S. The company reportedly monetizes this through its primary business, Facebook. Meta has also advanced its AI offerings with products like Ray-Ban smart glasses. In 2025, Meta expects its AI assistant to reach over 1 billion users, up from 600 million monthly active users last year.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


phead128

DeepSeek V3 and R1 trained on $5 million budget and 2043 H800s, with performance exceeding GPT 4o and o1, already rendered the massive NPU farm clusters obsolete. Same with Sky-T1 model out of Berkeley trained an o1 peer for $450.

bit_user

phead128 said: DeepSeek V3 and R1 trained on $5 million budget and 2043 H800s, with performance exceeding GPT 4o and o1, already rendered the massive NPU farm clusters obsolete. Same with Sky-T1 model out of Berkeley trained an o1 peer for $450.

Performance according to what metric? I don't see any of those on the OpenLLM Leaderboard:

https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard#/

phead128

bit_user said: Performance according to what metric? I don't see any of those on the OpenLLM Leaderboard:

https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard#/

Huggingface only has open sourced models on their leaderboard, so no GPT or Claude.

Here is an ELO-style leaderboard with OpenAI, Anthropic, Google, DeepSeek models included, where users are blind tested AI chat responses.

https://lmarena.ai/?leaderboard

bit_user

phead128 said: Huggingface only has open sourced models on their leaderboard, so no GPT or Claude.

It's not just GPT and Claude missing. I didn't see any of the models you listed!

phead128 said: Here is an ELO-style leaderboard with OpenAI, Anthropic, Google, DeepSeek models included, where users are blind tested AI chat responses.

https://lmarena.ai/?leaderboard

That's just based on votes by the public. They're not ranking it on skills tests, like Huggingface's scores do.

What I'm getting at is that I can believe you can train a specialized model with a lot fewer resources and end up with something competitive. Training one that's as versatile as leading contenders on Huggingface's LLM leaderboard is a lot harder and probably takes disproportionately more resources.

jp7189

I never thought I'd be a fan of Meta/Facebook, but I am a HUGE fan of Llama specifically because it's open source/open weight and allows people to add their own fine-tune training on top of it.

phead128

bit_user said: That's just based on votes by the public.

These are ranked by blinded votes on best anonymous AI responses, not based on some popularity contest.

bit_user said: They're not ranking it on skills tests, like Huggingface's scores do.

For skills-based tests, those are available in DeepSeek's publication.

https://github.com/deepseek-ai/DeepSeek-V3/raw/main/figures/benchmark.png

Also, for DeepSeek R-1's publication:

https://github.com/deepseek-ai/DeepSeek-R1/raw/main/figures/benchmark.jpg

The MMLU Pro and MMLU scores for DeepSeek/GPT/Claude/Google really blow everything out of the water compared to any model on HuggingFace (which peaks at 70 points for the top model).

bit_user said: What I'm getting at is that I can believe you can train a specialized model with a lot fewer resources and end up with something competitive. Training one that's as versatile as leading contenders on Huggingface's LLM leaderboard is a lot harder and probably takes disproportionately more resources.

Except as shown by the MMLU Pro scores, DeepSeek/GPT/Claude/Google blow every open source model on HuggingFace's LLM board out of the water, and they aren't even listed on HuggingFace's leaderboard. It's likely because it's voluntary submission.

bit_user

phead128 said: These are ranked by blinded votes on best anonymous AI responses, not based on some popularity contest.

But the questions are just a pool submitted by the public and not sorted by skill. So, you can still have one specialized model that's really good at certain prompts winning out over a more general and versatile model.

phead128 said: For skills based test, those are available on DeepSeek 's publication.

https://github.com/deepseek-ai/DeepSeek-V3/raw/main/figures/benchmark.png

Why didn't they submit it to Hugging Face's LLM leaderboard, at least in addition to their comparison against GPT and Claude?

phead128

bit_user said: Why didn't they submit it to Hugging Face's LLM leaderboard, at least in addition to their comparison against GPT and Claude?

Maybe because HuggingFace's LLM leaderboard is not good?

HuggingFace’s leaderboards show how truly blind they are because they actively hurting the open source movement by tricking it into creating a bunch of models that are useless for real usage.

https://semianalysis.com/2023/08/28/google-gemini-eats-the-world-gemini/

That explains why no respectable LLM is listed on there, because they are useless (except maybe MMLU Pro).

zsydeepsky

bit_user said: Why didn't they submit it to Hugging Face's LLM leaderboard, at least in addition to their comparison against GPT and Claude?

Probably because Hugging Face hasn't completed the test yet? I just checked the leaderboard, and DeepSeek-R1 has 3 models that have finished the test and have scores there:

deepseek-ai/DeepSeek-R1-Distill-Qwen-14B
deepseek-ai/DeepSeek-R1-Distill-Llama-70B
deepseek-ai/DeepSeek-R1-Distill-Qwen-32B

Anyway, there are other tests done by third parties, which aim to provide more challenging tests (since the current tests became too simple/too familiar for new models), like "Humanity's Last Exam", which actually shows DeepSeek can solve hard questions better than GPT-O1 (or any other model).

I personally also compared those 2 models: I uploaded the code framework and asked them to give opinions and analysis. DeepSeek-R1 does provide WAY BETTER insights than GPT-O1.