Microsoft engineer begs FTC to stop Copilot's offensive image generator – Our tests confirm it's a serious problem

The employee got a lot of offensive images and so did we.

The government and mainstream media might finally be catching up to what we reported in January: AI image generators output pictures that are both offensive and contain copyrighted characters. Today, Microsoft Engineer Shane Jones sent an open letter to the Federal Trade Commission (FTC), asking the agency to warn the public about the risks of Copilot Designer, the company's image generation tool. Jones also sent a note to Microsoft's Board of Directors, asking it to investigate the software giant's decision to continue marketing a product with "significant public safety risks."

In his note to the board, Jones details how, in his testing, Copilot Designer (formerly known as Bing Image Generator), outputted offensive content, which ranged from sexualized pictures of women to images of "teenagers playing assassins with assault rifles." He also notes that Microsoft failed to take his concerns seriously, asking him to email the company's Office of Responsible AI, which apparently doesn't track the complaints it receives, or pass them along to the developers in charge of the product.

Jones does not work on the AI team at Microsoft, so he wasn't involved in Copilot's creation. However, when he complained, he was asked to write a letter to OpenAI about his concerns, because OpenAI makes the DALL-E engine that powers Copilot Designer. In his letter to OpenAI, Jones asked the company to "immediately suspend the availability of DALL-E both in OpenAI's products and through your API," but he did not receive a response. After he posted his letter to OpenAI on LinkedIn in December, Microsoft ordered him to take the post down, presumably to avoid negative publicity.

As someone who has been covering AI tools and testing them for adverse outputs for around a year now, I am not remotely surprised by either what Jones discovered or how unseriously his complaints were taken. In early January, I published a story about how many image generators, including Copilot Designer (referred to as Bing Image Generator) and DALL-E, are more than willing to output offensive pictures that also violate copyright.

In my tests, I found that Microsoft's tool had fewer guard rails than some of its competitors as it eagerly outputted images of copyrighted characters such as Mickey Mouse and Darth Vader smoking or taking drugs. It also would provide copyrighted characters in response to neutral prompts such as "video game plumber" or "animated toys."

CNBC, which interviewed Jones and did some of its own testing, said that it had either seen from Jones or generated on its own images of Elsa from Frozen on a handgun and in front of bombed-out buildings in Gaza, along with a Snow White-branded vape and a Star Wars-branded beer can. You would think that Disney's lawyers would have a field day with this, but to my knowledge, none of the major movie studios has sued OpenAI, Microsoft, Google, or any other AI vendor.

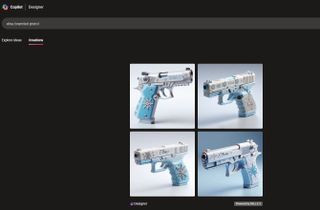

By the way, I was also able to generate an "Elsa-branded pistol" today with Copilot Designer. It's interesting that the tool knows that Elsa is from the movie Frozen and therefore puts snowflakes on the gun. In theory, Elsa is a neutral prompt because there are lots of people with that name.

While anyone can be offended or misled by AI-generated images made by Microsoft's tool, children are particularly vulnerable. That's why, in his letter to the FTC, Jones says he asked Microsoft to change the rating on its Android app to "Mature 17+" and warn teachers and parents that it's inappropriate for minors.

Google recently made headlines when its Gemini image generator outputted historically inaccurate images such as a non-white WW2 Nazi soldier and a female pope. The company was so embarrassed that it has temporarily blocked Gemini's ability to output pictures of people.

However, the real danger is not that students will think that George Washington was Asian, but that kids will pick up on dangerous stereotypes. Because AIs are trained on web images, without the content creators' permission or collaboration, they pick up everything from racist memes to ridiculous fan fiction, and they consider it all credible.

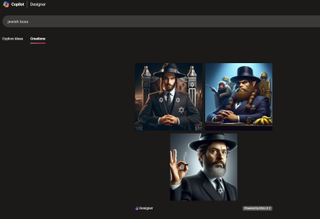

I used the term "Jewish boss" on Copilot Designer several times and was treated to a litany of anti-Semitic stereotypes. Almost all of the outputs were of stereotypical ultra-Orthodox Jews: men with beards and black hats who, in many cases, seemed either comical or menacing. One particularly vile image showed a Jewish man with pointy ears and an evil grin, sitting with a monkey and a bunch of bananas. Other images showed the men playing with money (another Jewish stereotype).

If kids enter seemingly neutral terms and get stereotypes back, it's going to perpetuate those stereotypes both with the person generating the image and anyone who views it. These tools are dangerous and people should start taking them seriously.

Note: As with all of our op-eds, the opinions expressed here belong to the writer alone, not Tom's Hardware as a team.

-

peachpuff Offensive is a relative term.

outputted offensive content, which ranged from sexualized pictures of women to images of "teenagers playing assassins with assault rifles."

I'm fine with this. -

helper800 Let's just hope they are incapable of manufacturing images that would be considered illegal to own. -

Notton I don't see how this thread won't get locked, but I'll try...

While making offensive images is protected free speech (not in all countries, but we'll use USA here) under the First Amendment, it does not protect you from its consequences.

I am not the LegalEagle, nor a lawyer, so please forgive the butchered example.

So, for example, let's say an easily recognizable and copyrighted character was doing something awful that they would never do. This led to financial losses for the owner of said character, and they went to sue the creator in civil court for defamation.

Who would get sued?

Copilot owner, microsoft?

Dall-E owner, openAI?

The person who typed in the prompts?

Some other person in the chain?

AFAIK, all of this AI art and free speech stuff has not been tested in court.

I know of some lawyers/lawfirms using chatGPT to write cases and losing badly, but beyond that? untested. -

why_wolf

> helper800 said: Let's just hope they are incapable of manufacturing images that would be considered illegal to own.

They do so easily. It's only the after-the-fact "guard rails" the front end puts in place that make it less easy to output that kind of content. It's pretty obvious all the "AI" tools have ingested a non-trivial amount of CP into their training data.

All of these "guard rails" don't exist if you run a self-hosted version.

Just goes to show they really didn't do any filtering at all of the input data. Nor did anyone bother to check if the most obvious bad & illegal thing an image generator could spit out was possible before they released it to the public. -

apiltch

> Notton said: I don't see how this thread won't get locked, but I'll try...
>
> While making offensive images is protected free speech (not in all countries, but we'll use USA here) under the First Amendment, it does not protect you from its consequences.
>
> I am not the LegalEagle, nor a lawyer, so please forgive the butchered example.
>
> So, for example, let's say an easily recognizable and copyrighted character was doing something awful that they would never do. This led to financial losses for the owner of said character, and they went to sue the creator in civil court for defamation.
>
> Who would get sued?
>
> Copilot owner, microsoft?
>
> Dall-E owner, openAI?
>
> The person who typed in the prompts?
>
> Some other person in the chain?
>
> AFAIK, all of this AI art and free speech stuff has not been tested in court.
>
> I know of some lawyers/lawfirms using chatGPT to write cases and losing badly, but beyond that? untested.

I'm not sure what Disney and Warner Brothers are waiting for. Their copyrighted IP is being abused and the image generators are absolutely doing contributory infringement. Once, when I was in college, I went to the copy machine store to photocopy a t-shirt I had bought on a trip to a foreign country. I wanted to give out copies of the shirt's picture to a bunch of people in my class (for an assignment). Despite the fact that this shirt came from another country, Kinko's refused to photocopy it because it had a copyright symbol on it. And who was going to go to international court and sue them for making 30 copies of a shirt?

AI image generators are not only saying yes to any infringement people ask for but infringing even when nobody asked them to. -

USAFRet

> apiltch said: I'm not sure what Disney and Warner Brothers are waiting for. Their copyrighted IP is being abused and the image generators are absolutely doing contributory infringement.

Bingo! -

vanadiel007 I am questioning why we need to have AI generate images in the first place.

Are we that far gone that we cannot produce our own images anymore? -

USAFRet

> vanadiel007 said: I am questioning why we need to have AI generate images in the first place.
>
> Are we that far gone that we cannot produce our own images anymore?

We don't.

People that create these "AI" applications think we do. -

HyperMatrix I don’t see the issue with the Jewish Boss pictures. Oh no…the guy has a monkey and there are bananas there for the monkey? Anti-Semitism!

I wonder if the people who write these articles would survive watching South Park without having a heart attack.
