Nvidia criticizes AI PCs, says Microsoft's 45 TOPS requirement is only good enough for 'basic' AI tasks

Nvidia says its GPUs provide substantially better AI performance than today's bleeding-edge NPUs.

Nvidia recently showcased the capabilities of its RTX consumer GPUs at a press event, according to Benchlife.Info. The company pointed out how its GPUs are better than regular NPU-equipped PCs at handling AI tasks. It also presented several benchmarks, which showed its GPUs outperforming competing notebooks with AI-hardware acceleration including the MacBook Pro featuring Apple's top-of-the-line M3 Max chip.

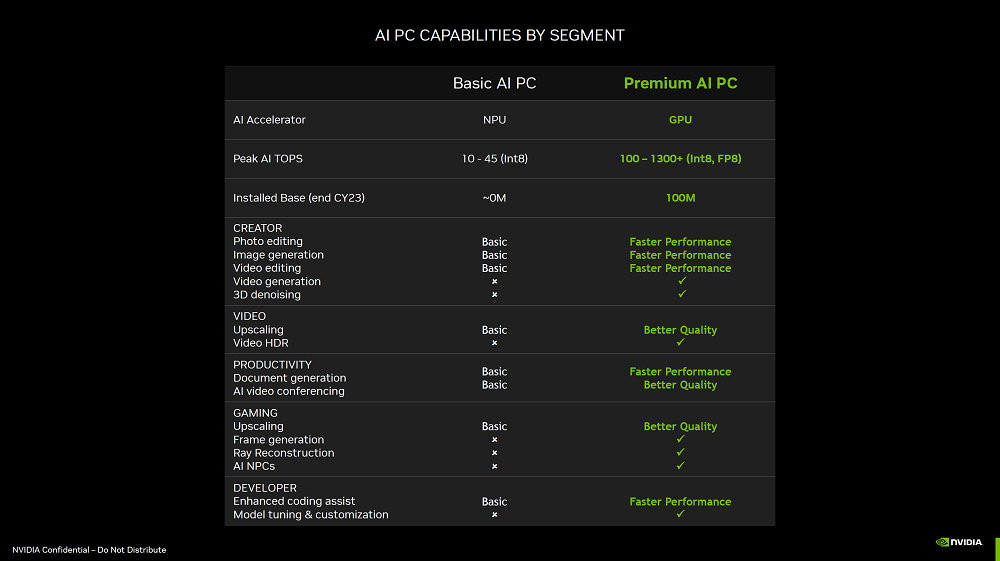

The entire event was centered around how Nvidia's RTX GPUs outperform modern-day "AI PCs" equipped with NPUs. According to Nvidia, the 10–45 TOPS performance rating found in modern Intel, AMD, Apple, and Qualcomm processors is only enough for "basic" AI workloads. The company gave several examples, including photo editing, image generation, image upscaling, and enhanced coding assistance through AI, which it claimed NPU-equipped AI PCs are either incapable of handling or are only capable of executing at a very basic level. Nvidia's GPUs, on the other hand, can handle all AI tasks and execute them with better performance and/or quality, naturally.

Nvidia stated that its RTX GPUs are much more performant than NPUs and can achieve anywhere between 100 and 1300+ TOPS, depending on the GPU. The Nvidia event went so far as to categorize its RTX GPUs as "Premium AI" equipment, while relegating NPUs to a lower "Basic AI" equipment category. (Nvidia also added another category, cloud computing, which it categorized as "Heavy AI" equipment boasting thousands of TOPS of performance, no doubt a nod to its H100 and B200 Blackwell enterprise GPUs.)

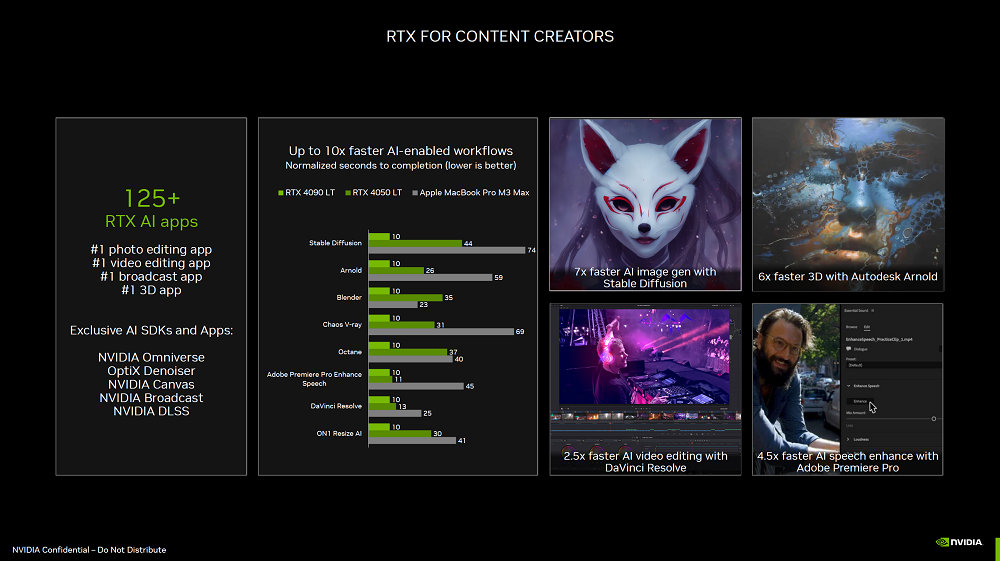

Nvidia shared several AI-focused benchmarks comparing its latest RTX 40-series GPUs against some of its competitors. For content creation, Nvidia showed a benchmark comparing the RTX 4090 Laptop GPU, the RTX 4050 Laptop GPU, and the Apple MacBook Pro M3 Max in several content creation applications that use AI, including Stable Diffusion, Arnold, Blender, Chaos V-Ray, Octane, Adobe Premiere Pro Enhance Speech, DaVinci Resolve, and ON1 Resize AI. The benchmark showed the RTX 4090 Laptop GPU outperforming the M3 Max-equipped MacBook Pro by over 7x in the most extreme cases, and the RTX 4050 Laptop GPU outperforming the same MacBook Pro by over 2x. On average, the mobile RTX 4090 outperformed the M3 Max by 5x while the RTX 4050 Laptop GPU outperformed it by 50–100 percent.

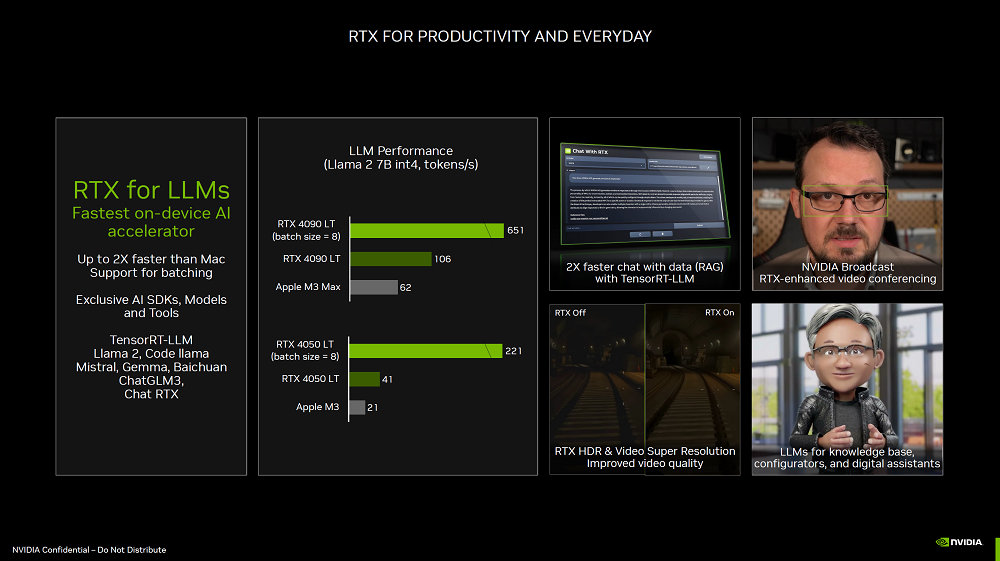

Another benchmark Nvidia showed encompassed large language models (LLMs), utilizing a Llama 2 7B int4 LLM workload. Nvidia pitted the mobile RTX 4090 against the M3 Max and the mobile RTX 4050 against Apple's baseline M3 chip. The RTX 4090 was 42% faster than the M3 Max, but with a batch size of eight, the chip was 90% faster. Similarly, the mobile RTX 4050 was 48% faster than the Apple M3, but with a batch size of eight, the RTX 4050 was 90% quicker. Batch size changes are an optimization that can improve AI performance, depending on the architecture.
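To see why batch size can matter so much, here is a minimal toy model in Python. It is a sketch under stated assumptions, not a description of how Nvidia ran its test: the function name `tokens_per_second` and both timing parameters are hypothetical, and the numbers are made up for illustration. The idea is that each decoding step pays a fixed cost to read the model weights once, shared by every sequence in the batch, plus a small cost per sequence.

```python
def tokens_per_second(batch_size, weight_read_ms, per_sequence_ms):
    """Toy throughput model for LLM decoding (illustrative assumption only).

    Each step reads the weights once for the whole batch, then does a
    little extra work for every sequence, and yields one token per sequence.
    """
    step_ms = weight_read_ms + batch_size * per_sequence_ms
    return batch_size * 1000.0 / step_ms


# Hypothetical timings, NOT measurements from Nvidia's benchmark.
single = tokens_per_second(1, weight_read_ms=20.0, per_sequence_ms=1.0)
batched = tokens_per_second(8, weight_read_ms=20.0, per_sequence_ms=1.0)
speedup = batched / single

# If the fixed weight-read cost is negligible, batching gains nothing.
no_gain = (tokens_per_second(8, 0.0, 1.0) /
           tokens_per_second(1, 0.0, 1.0))

print(f"batch 1: {single:.1f} tok/s, batch 8: {batched:.1f} tok/s")
print(f"speedup with shared weight reads: {speedup:.1f}x")
print(f"speedup with no shared cost: {no_gain:.1f}x")
```

In this toy model the gain from batching depends entirely on how large the shared cost is relative to the per-sequence work, which is one plausible reason the benefit varies from one architecture to another.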

Nvidia showed a third benchmark using UL Procyon Stable Diffusion 1.5 against AMD. For this test, Nvidia pitted its entire RTX 40-series desktop GPU lineup against AMD's Radeon RX 7900 XTX. Every Nvidia GPU, starting from the RTX 4070 Super and higher, outperformed AMD's flagship — and the RTX 4090 outperformed it by 2.8x. The RTX 4060 Ti and RTX 4060 were noticeably slower, however. The takeaway is that Nvidia's flagship GPU is substantially quicker than the equivalent AMD GPU, at least in this specific workload. (Our own Stable Diffusion benchmarks tell a similar story, with the 4090 beating the 7900 XTX by 2.75X at 768x768 generation and 2.86X with 512x512 image generation.)

With the spotlight on NPU-equipped AI laptops and AI PCs this year, Nvidia is reminding everyone that its RTX GPUs are already far more potent alternatives. Not only are they very powerful, there are substantially more RTX GPUs out in the wild today than there are NPU-equipped PCs — meaning many existing laptops and PCs with RTX cards are already AI-ready.

Nvidia does make a good point: Its RTX GPUs do provide more performance compared to the latest NPUs seen on Qualcomm's Snapdragon X Elite and AMD's Ryzen 8040 series counterparts. However, we have yet to see Nvidia RTX GPUs become a crucial part of AI PCs. Microsoft's official definition for AI PCs requires the addition of an NPU alongside the CPU/GPU — meaning most RTX-equipped systems are still "unqualified" as most do not have built-in NPUs.

Microsoft is also clearly looking not just at raw computational power, but also at the efficiency of doing the work. That an RTX 4090 Laptop GPU can pummel integrated NPUs shouldn't surprise anyone. But the mobile 4090 is also rated to draw 80W to 150W of power, roughly 2X to 5X more than what many of these mobile processors require.

It will be interesting to see if Microsoft changes its mind on the definition of an AI PC in the future — or more specifically, when it will change the definition and in what ways. 45 TOPS as a baseline level of performance, via an energy efficient NPU, will bring AI acceleration to mainstream consumers. In fact, Microsoft even insists that its Copilot software run on the NPU instead of the GPU, to improve battery life. But it's inevitable that at some point we'll have AI tools that need far more than this initial 45 TOPS of compute, and dedicated GPUs are already far down that path.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

usertests

The point is energy efficiency. There have been a lot of ARM chips out there with around 1-10 TOPS accelerators (such as the RK3566 or MediaTek Genio 1200). These can be useful, and now similar but even more powerful capabilities are coming to the x86/Windows (Snapdragon) ecosystem.

If laptops or desktops are plugged in all the time, it's probably better to have a discrete GPU with much higher performance.

In the long run, INT4/INT8/BF16/etc. accelerators will become standard in all desktop PCs and consoles, with performance of 100 TOPS or higher, and they will get used instead of the GPU if the GPU's performance isn't needed. That could become relevant to gaming... in the 2030s.

-

Notton

I understand what nvidia is trying to do here.

Crypto went bust, so they want AI to replace that void in "gaming" GPU sales.

Thusly, they are pitching TOPS performance to the formerly-crypto-formerly-blockchain-now-AI crowd.

I hope their arrogance comes to bite them in the butt this time around.

-

leoneo.x64

Why don't they put a CPU / GPU toggle and let users decide? Battery life and power consumption hit less than the cost of acquiring new platform for NPU based CPUs. 45 TOPs certainly looks paltry when you add in the cost of acquiring new CPUs and therefore a new platform for some users. On the contrary, GPU based AI PC will make it truly flourish

and Intel should not talk about power efficiency after releasing power hogs for decades now. A frugal chip on a power guzzling monster CPU is marketing facade. Laughable Gelsinger strategy

-

edzieba

> usertests said: The point is energy efficiency. There have been a lot of ARM chips out there with around 1-10 TOPS accelerators (such as the RK3566 or MediaTek Genio 1200). These can be useful, and now similar but even more powerful capabilities are coming to the x86/ Windows (Snapdragon) ecosystem.

The question is, if accelerators of single/double-digit TOPS are actually of any value. Are there really any tasks of that scale that can be appreciably accelerated with such a minimal performance FFB? With 1000 TOPS of a dedicated GPU you can bring a task of hours or minutes down to seconds, which makes it an attractive user-oriented "press button, get result" feature. If your little on-die accelerator is just taking a task that takes hours and reducing it to a single hour, then that's just going to end up being a feature nobody uses.

> Notton said: I understand what nvidia is trying to do here.
>
> Crypto went bust, so they want AI to replace that void in "gaming" GPU sales.
>
> Thusly, they are pitching TOPS performance to the formerly-crypto-formerly-blockchain-now-AI crowd.
>
> I hope their arrogance comes to bite them in the butt this time around.

"Trying to do"? Nvidia have for the last several years been building said AI accelerator cards, revenue for which dwarfs gaming card (and the entire client card market) revenue and has for quite some time.

There isn't any "trying to do", this is what they have already done, through a decade of investment in CUDA, adding Tensor cores to all their cards, etc.

-

hotaru251

NPU vs Nvidia GPU performance vs energy consumption.

Thats the point of NPU.

> Notton said: Crypto went bust, so they want AI to replace that void in "gaming" GPU sales.

bad take.

Crypto busting doesnt harm them.

they sell more GPU for ai than they ever did for crypto.

i doubt gaming sales are even over 15% of their profit.

When you have companies like tesla buying massive amounts of ur ai focused gpu's that cost like 10 grand a pop....

Jensen already said Nvidia is not a gpu company anymore its an ai company they just havent changed name.

Want to do ai stuff? Nvidia is basically ur best option atm (and they know it hence their attitude) if you dont care about anything but end result.

now if u aren't that type of customer (which most arent)? NPU are cheaper & more effective at a lower energy cost.

Most people who use "ai pcs" arent needing the power from a 4090+ tier of gpu.

They are fine w/ a small npu.

Nvidias benefit has always been others made their stuff work with nvidia features. (mainly cuda)

Some are now trying to stop that and make stuff more open source but if it works long term nobody knows.

and if NPU's show as being the future over ur gpu doing it? You can bet nvidia will be there as they have a bunch of really smart people who can make it.

-

usertests

> Amdlova said: Only thing I want IA do is help with my torrents

Like what? Maybe future versions of the Tribler torrent client?

https://torrentfreak.com/researchers-showcase-decentralized-ai-powered-torrent-search-engine-240425/

> edzieba said: The question is, if accelerators of single/double-digit TOPS are actually of any value. Are there really any tasks of that scale that can be appreciably accelerated with such a minimal performance FFB? With 1000 TOPS of a dedicated GPU you can bring a task of hours or minutes down to seconds, which makes it an attractive user-oriented "press button, get result" feature. If your little on-die accelerator is just taking a task that takes hours and reducing it to a single hour, then that's just going to end up being a feature nobody uses.

Probably some lighter use cases like image recognition/real-time filtering, small LLMs (Copilot), Stable Diffusion, etc. are fine with the upcoming baseline of ~40-50 TOPS.

> QNAP TS-133 1-bay NAS leverages Rockchip RK3566 AI capabilities for object and face recognition
>
> We’ve seen several hardware devices based on Rockchip RK3566 AIoT SoC that do not make use of the key features of the processor. But QNAP TS-133 1-bay NAS is different, since it relies on the native SATA and Gigabit Ethernet interfaces for network storage, and the built-in NPU is leveraged to accelerate object and face recognition by up to 6 times.

That's using the ~0.8 TOPS NPU in the RK3566. "Up to 6 times" the performance is probably in relation to either the relatively slow quad-core Cortex-A55, or the Mali-G52 iGPU.

I'm not too keen on the 9-16 TOPS NPUs found in Meteor Lake, Phoenix, and Hawk Point, since they can be outperformed by the accompanying iGPU. That should change this year once we see around 50 TOPS XDNA2 in Strix Point. Future versions of these NPUs are likely to hit at least low triple digit TOPS.

-

Neilbob

> edzieba said: If your little on-die accelerator is just taking a task that takes hours and reducing it to a single hour, then that's just going to end up being a feature nobody uses.

I know I sound completely ignorant here, and I am well aware I'm almost blindly cynical and skeptical about the Ayy-Eye jiggery-pokery, but please tell me exactly what task/s.

What are the tasks that will be so ubiquitous that such a large number of consumers (I can't stress that word enough) will require Nvidia's rather pricey version of TOPS (whatever the heck they are)? What might I need to do that could take hours?

If I need to 'generate' some kind of image, I can imagine I'd only do it a couple of times out of curiosity and then never bother again. I'm just not seeing what all the fuss is meant to be about for someone like me in everyday-world. Please educate me.

-

ivan_vy

some of us don't need 4090 IA power, we need better videocalling, noise filter for audio and video, light photoediting like color correction and background removal, we live in MS Office world, for everything else we can use the cloud services, power users, researchers and cutting edge content creators they will eventually go to Nvidia, that's until open source options make a better cost benefit ratio.

for gaming would be nice to have AI lighting (RT or PT are too HW demanding) and buttery smooth framerates...it looks like where P5Pro is aiming to go.

-

Eximo

I think the key is that software developers will be encouraged to improve battery life and use the appropriate silicon to maximize that. Generally it would be seamless to the user.

I could also see local processing for things like predictive text, search, or action prediction. For instance, loading up the appropriate code into memory anticipating the user's next actions. Makes the user experience better, and reports up to the big AI on your use habits of course.