Nvidia pumps another $2 billion into CoreWeave and announces standalone availability of Vera CPU — chipmaker increases stake in its customer to 9%

CoreWeave was experiencing funding issues in December.

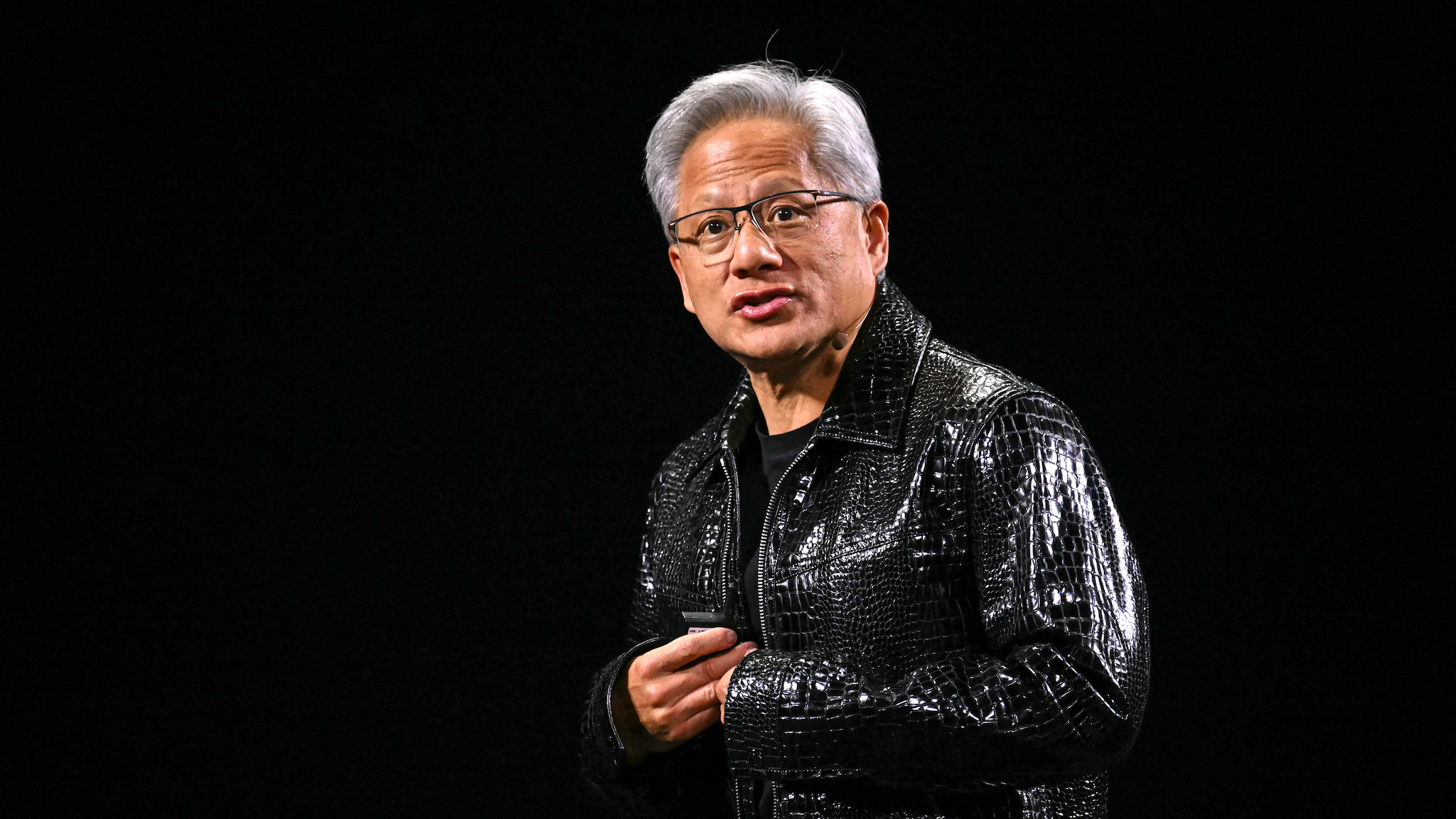

Another day, another deal in which a big corporation pours large amounts of money into one of its customers in the AI world. The latest exchange is between Nvidia and cloud datacenter operator CoreWeave: Jensen Huang's outfit bought $2 billion worth of CoreWeave Class A shares at $87.20 apiece.

Before the deal, Nvidia owned just over 6% of CoreWeave, a stake that should now have risen to around 9%. Although those figures and today's purchase relate to standard Class A shares, some outlets are reporting that the chipmaker also owns some Class B stock, which carries 10x voting rights and isn't publicly traded.
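For a sense of scale, dividing the $2 billion from the headline by the $87.20 share price gives the approximate number of Class A shares involved. This is a back-of-the-envelope sketch that assumes the full amount went into shares at that single price, with no fees or other adjustments.

```python
# Back-of-the-envelope: how many Class A shares does $2 billion buy at $87.20?
# Assumption: the entire $2 billion was spent at the quoted price, with no fees.
investment_usd = 2_000_000_000
price_per_share_usd = 87.20

shares = investment_usd / price_per_share_usd
print(f"~{shares / 1e6:.1f} million shares")  # ~22.9 million shares
```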

For Huang, this investment reflects "confidence in their growth and confidence in CoreWeave’s management and confidence in their business model" [sic]. CoreWeave is one of the many companies rapidly burning through far more money than it brings in as revenue, a fact that had previously made some investors skittish, particularly after December, when the firm revealed a plan to raise $2 billion by issuing debt that can be converted into shares.

After today's news, though, CoreWeave's shares rallied by about 9%, reflecting the sizable cash injection and perhaps also Huang's outlook on the matter. Additionally, CoreWeave is apparently the first Nvidia customer to get access to the Vera CPU as a standalone unit, something that might also have helped the stock rise.

The green team's fresh Arm-based design was previously only offered as part of an entire system board, but it will now be sold as a standalone product, though seemingly to datacenter customers only for the time being.

As a refresher, Vera has 88 cores and 176 threads, implements the Armv9.2-A instruction set, and carries 2 MB of L2 cache per core plus 162 MB of shared L3 cache. Among many other interesting bits of info, Nvidia's Spatial Multithreading should allow each Vera core to effectively run two hardware threads, by way of divvying up resources by partition instead of time slicing them like standard SMT.
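Nvidia hasn't detailed how Vera's cores are actually partitioned, so the following is only a toy Python model of the contrast drawn above. In the "spatial" case each thread owns a fixed half of a hypothetical 8-wide core every cycle, while in the "time-sliced" case the two threads take turns owning the whole width. Real SMT designs mix static partitioning, competitive sharing, and per-cycle alternation across different pipeline stages, so treat the numbers as illustrative only.

```python
# Toy model (NOT Nvidia's actual microarchitecture): two hardware threads share
# a core that can issue WIDTH instructions per cycle. Each thread can issue at
# most its own instruction-level parallelism (ILP) in any given cycle.

WIDTH = 8  # hypothetical issue width of the core


def spatial(ilp_a: int, ilp_b: int, cycles: int) -> tuple[int, int]:
    """Each thread owns a fixed half of the issue slots on every cycle."""
    half = WIDTH // 2
    return (min(ilp_a, half) * cycles, min(ilp_b, half) * cycles)


def time_sliced(ilp_a: int, ilp_b: int, cycles: int) -> tuple[int, int]:
    """Threads alternate cycles; whoever's turn it is gets every slot."""
    done_a = done_b = 0
    for cycle in range(cycles):
        if cycle % 2 == 0:
            done_a += min(ilp_a, WIDTH)
        else:
            done_b += min(ilp_b, WIDTH)
    return done_a, done_b


if __name__ == "__main__":
    # Two threads that can each issue 3 instructions per cycle, over 100 cycles.
    print("spatial:    ", spatial(3, 3, 100))      # (300, 300)
    print("time-sliced:", time_sliced(3, 3, 100))  # (150, 150)
```

With two low-ILP threads, the static split lets both make progress every cycle, while strict alternation leaves most of the width idle on each thread's turn. The trade-off of the static split is that, in this model, a thread can never use more than half the width, even when its sibling has nothing to do.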

Other interesting figures include up to 1.5 TB of precious RAM per CPU, with up to 1.2 TB/s of memory bandwidth. The onboard NVLink interconnect can also move up to 1.8 TB/s. Unsurprisingly, the chip's super-wide design makes it well suited to AI workloads.
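The spec figures above lend themselves to some quick arithmetic. This sketch assumes decimal units (1 TB = 1,000 GB) and treats every number as a peak value, so the derived figures are upper bounds rather than measured performance.

```python
# Back-of-the-envelope figures derived from Vera's quoted specs.
# Assumptions: decimal units (1 TB = 1000 GB) and all figures are peak values.
CORES = 88
THREADS_PER_CORE = 2        # via Spatial Multithreading
L2_PER_CORE_MB = 2
L3_SHARED_MB = 162
MAX_MEMORY_TB = 1.5
MEM_BANDWIDTH_TBPS = 1.2

threads = CORES * THREADS_PER_CORE                   # 176 threads
total_l2_mb = CORES * L2_PER_CORE_MB                 # 176 MB of L2 in aggregate
total_l2_l3_mb = total_l2_mb + L3_SHARED_MB          # 338 MB of L2 + L3
# Average share of memory bandwidth if all cores stream at once (~13.6 GB/s).
bandwidth_per_core_gbps = MEM_BANDWIDTH_TBPS * 1000 / CORES
# Time to read the maximum memory capacity once at peak bandwidth (1.25 s).
full_sweep_s = MAX_MEMORY_TB / MEM_BANDWIDTH_TBPS

print(threads, total_l2_mb, total_l2_l3_mb)
print(f"{bandwidth_per_core_gbps:.1f} GB/s per core, {full_sweep_s:.2f} s per full-memory pass")
```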



Bruno Ferreira is a contributing writer for Tom's Hardware. He has decades of experience with PC hardware and assorted sundries, alongside a career as a developer. He's obsessed with detail and has a tendency to ramble on the topics he loves. When not doing that, he's usually playing games, or at live music shows and festivals.

-

watzupken

Sounds like the same tactic to me: I inject cash into you, you buy GPUs from me. Basically artificially creating sales and boosting CEOs' pockets.

alan.campbell99

Curious, I read yesterday that CoreWeave is facing a class-action lawsuit alleging securities fraud.

das_stig

> alan.campbell99 said: Curious, I had read yesterday that CoreWeave is facing a class action lawsuit alleging securities fraud.

The complaint filed alleges that, between March 28, 2025 and December 15, 2025, Defendants failed to disclose to investors that: (1) Defendants had overstated CoreWeave's ability to meet customer demand for its service; (2) Defendants materially understated the scope and severity of the risk that CoreWeave's reliance on a single third-party data center supplier presented for CoreWeave's ability to meet customer demand for its services; (3) the foregoing was reasonably likely to have a material negative impact on the Company's revenue; and (4) as a result, Defendants' positive statements about the Company's business, operations, and prospects were materially misleading and/or lacked a reasonable basis at all relevant times.

bit_user

> The article said: Among many other interesting bits of info, Nvidia's Spatial Multithreading should allow each Vera core to effectively run two hardware threads, by way of divvying up resources by partition instead of time slicing them like standard SMT.

Yeah, this "spatial multi-threading" part caught my attention. Nvidia describes it in almost those terms, but they point out that the core is physically partitioned between threads.

That's almost enough to make me wonder what the point is. There must be some shared resources, or else what you have isn't two threads sharing a core but rather two cores! "Time-slicing" (by which they presumably mean any sort of round-robin arbitration) is done in typical SMT implementations to ensure that each thread gets fair access to shared resources.

Here's the (tiny bit of) additional information I'm drawing from:

https://www.nvidia.com/en-us/data-center/vera-cpu/

TerryLaze

> bit_user said: That's almost enough to make me wonder what's the point? There must be some shared resources, or else what you have isn't two threads sharing a core but rather two cores!

My understanding is that they are normal cores that can then be split in half by spatial multithreading, with all resources divided in half between the two threads.

> bit_user said: "Time-slicing" (by which they presumably mean any sort of round-robin type arbitration) is done in typical SMT implementations to ensure that each thread gets fair access to shared resources.

Time-slicing was around long before any type of SMT and is just a normal part of many OSes. It is used on SMT threads as well, but it's not an integral part of SMT.

Also, there is no "fair" access to resources in SMT. Whatever thread manager there is just tries to cram as many instructions into as few cycles as it can; it doesn't care if one of the threads starves for resources.

The priority of the thread determines whether it gets enough cycles/resources, or not.
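The disagreement here is about how an SMT core arbitrates a contended resource. As a purely illustrative sketch (not a description of any real Intel, AMD, or Nvidia core), the following Python compares a round-robin policy with a greedy one that always serves whichever thread has the most ready work, for one hypothetical shared issue port.

```python
# Toy comparison of two ways an SMT core *could* arbitrate one shared resource
# (a hypothetical single issue port) between threads each cycle. Neither policy
# is claimed to be what any real core does.
from typing import Callable

Policy = Callable[[int, list[int]], int]


def round_robin(cycle: int, ready: list[int]) -> int:
    """Alternate between threads, skipping a thread that has nothing ready."""
    preferred = cycle % len(ready)
    if ready[preferred] > 0:
        return preferred
    others = [t for t in range(len(ready)) if ready[t] > 0]
    return others[0] if others else -1


def greedy(cycle: int, ready: list[int]) -> int:
    """Always pick the thread with the most ready work (throughput first, no fairness)."""
    best = max(range(len(ready)), key=lambda t: ready[t])
    return best if ready[best] > 0 else -1


def simulate(policy: Policy, arrivals: list[int], cycles: int) -> list[int]:
    """Each cycle, thread t gains arrivals[t] ready ops; the winner retires one op."""
    ready = [0] * len(arrivals)
    retired = [0] * len(arrivals)
    for cycle in range(cycles):
        for t, n in enumerate(arrivals):
            ready[t] += n
        winner = policy(cycle, ready)
        if winner >= 0:
            ready[winner] -= 1
            retired[winner] += 1
    return retired


if __name__ == "__main__":
    # Thread 0 produces 2 ready ops per cycle, thread 1 produces 1.
    print("round robin:", simulate(round_robin, [2, 1], 100))  # [50, 50]
    print("greedy:     ", simulate(greedy, [2, 1], 100))       # [100, 0]
```

In this setup, round robin splits the port evenly, while the greedy policy hands every cycle to thread 0 and starves thread 1 entirely, which is exactly the failure mode a fairness mechanism is meant to bound.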

bit_user

> TerryLaze said: My understanding is that they are normal cores and then they can be split in half for/by spatial, sharing/splitting in half all resources.

Perhaps.

POWER10 (and 11, I think) has cores that can be configured as either 2x SMT-4 or a single SMT-8. However, the choice between them is made at boot time and remains fixed during operation.

https://en.wikipedia.org/wiki/Power10#Variants

> TerryLaze said: Time-slicing was around far before any type of SMT

Yes, I'm aware of that. It makes no sense to mention it in the context of SMT, unless what they actually mean is just some round-robin mechanism to ensure fair access to oversubscribed resources.

> TerryLaze said: Also there is no "fair" access to resources in SMT,

That's not very accurate.

I'll quote this part of an interview with Mike Clark, chief architect of AMD's Zen cores:

> Mike Clark said: we’ve continued to enhance SMT. ... more from our watermarking in those though we competitively share them, there is some watermarking reserve some of the resources.
>
> If one thread has been stuck on a long delay, we don’t want the other thread to consume all the resources, then it wakes up and wants to go and it can’t go. So, we want to keep a burstiness to it, but we want to competitively share it too, because the two threads can be at quite different performance levels, and we don’t want to be the one that can use all the resources.

Source: https://chipsandcheese.com/p/a-video-interview-with-mike-clark-chief-architect-of-zen-at-amd

So, Zen doesn't use round robin, but rather "watermarking" to prevent one thread from starving out its SMT sibling too badly.
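Here is a toy Python model of the watermarking idea from the quote: both threads compete for one pool, but a minimum number of entries (the "watermark") is held back for the sibling thread. The pool size, the watermark value, and the allocation rule are all assumptions made for illustration; the interview doesn't give AMD's actual numbers or logic.

```python
# Toy model of watermark-based competitive sharing of an SMT resource, loosely
# following the idea in the quote: threads compete for one pool, but a minimum
# ("watermark") is held back for the sibling. All sizes are hypothetical.
from dataclasses import dataclass, field


@dataclass
class SharedQueue:
    capacity: int   # total entries shared by both threads
    watermark: int  # entries guaranteed to each thread
    used: list[int] = field(default_factory=lambda: [0, 0])

    def _reserved_for(self, thread: int) -> int:
        """Entries still held back for `thread` until it reaches its watermark."""
        return max(0, self.watermark - self.used[thread])

    def try_allocate(self, thread: int) -> bool:
        other = 1 - thread
        free = self.capacity - sum(self.used)
        # Only allocate if doing so leaves the sibling's reservation intact.
        if free - self._reserved_for(other) > 0:
            self.used[thread] += 1
            return True
        return False


if __name__ == "__main__":
    q = SharedQueue(capacity=64, watermark=8)
    # Thread 0 is stalled on a long delay while its instructions keep filling the queue.
    while q.try_allocate(0):
        pass
    print("thread 0 holds", q.used[0])  # 56: it stops at 64 - 8
    # Thread 1 wakes up and can still get its reserved entries.
    got = sum(q.try_allocate(1) for _ in range(8))
    print("thread 1 got", got)  # 8
```

Thread 0 can fill the queue only up to 56 of 64 entries, so when thread 1 wakes up it still finds its 8 reserved entries. Without the reservation, thread 0 could have taken all 64.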

I'm sure Intel does similar things, but I'll let you dig up those details (if you care to).

TerryLaze

> bit_user said: That's not very accurate.
>
> I'll quote this part of an interview with Mike Clark, chief architect of AMD's Zen cores:

So what exactly about what Mike said is fair sharing of resources?!?

You should at least have quoted what I said about starving.

> bit_user said: I'm sure Intel does similar things, but I'll let you dig up those details (if you care to).

I hope not. Why should you decrease the performance of every thread that can run on that core on the off chance that a stalled thread might take a bit longer to spin up?

bit_user

> TerryLaze said: So what exactly about what Mike said is fair sharing of resources?!?

It's not exactly fair, as he did talk about "competitive sharing", but it tries not to be too unfair.

> TerryLaze said: I hope not, why should you decrease the performance of every thread that can run on that core for the off chance that a stalled thread might take a bit longer to spin up.

I don't know about earlier Zen cores, but Zen 5 has a specific SMT mode that the core switches into when the OS schedules a second thread to run on it. When it's in single-threaded mode, he said, the lone thread gets all of the resources that aren't statically partitioned.

So, the only time one thread is held back is when there's another thread that actually stands to benefit. See the full interview for details.

TerryLaze

> bit_user said: When it's in single-threaded mode, he said the lone thread gets all of the resources that aren't statically-partitioned.

When there is only one thread, there is no partitioning... the one thread gets all of the resources, not just the ones that aren't statically partitioned.

> bit_user said: So, the only time one thread is being held back is when there's another thread that actually stands to benefit.

This sentence doesn't make any sense at all. Any and every thread stands to benefit just from being run, so the first thread will always be held back. The only other scenario would be if there is only one thread to run, but then why would that be mentioned in a conversation about SMT?

bit_user

> TerryLaze said: When there is only one thread then there is no partitioning....the one thread gets all all the resources, not just all of them (except for) .

Again, you're "going off the reservation" here. In the actual interview that I linked, he does say there are some statically partitioned resources that remain per-thread, even when the core is running in single-thread mode.

I didn't quote it because it's not a very long interview, and anyone who's interested in the subject really should just read or watch the whole thing. I shouldn't have to keep spoon-feeding excerpts from it here.

> TerryLaze said: This sentence doesn't make any sense at all,

I was talking about the watermarks. As I understand it, they only come into play when the core has 2 threads running on it. In single-threaded mode, my read is that they go away and the single thread is allowed to consume all of the competitively shared resources.

> TerryLaze said: The only other scenario would be if there is only one thread to run but then why would that be mentioned in a conversation about SMT.

It's relevant to talk about both scenarios, because it's not very common for a workload to keep all SMT threads active all the time. So, even on an SMT-capable CPU that has SMT enabled, you still care what happens when a core is executing just one thread. That's why it's a subject the interviewer wanted to delve into.
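To make the two readings in this exchange concrete, here is a toy Python sketch of bit_user's interpretation: statically partitioned structures stay split per thread in both modes, while the watermark on competitively shared structures only applies once a second thread is active. The structure sizes and the watermark are hypothetical, and TerryLaze's reading would instead give a lone thread the full static structure as well.

```python
# Toy model of one reading of the interview: statically partitioned structures
# stay split per thread in both modes, while the watermark on competitively
# shared structures only applies when a second thread is running.
# All sizes are hypothetical.

STATIC_TOTAL = 32  # a structure split evenly between the two threads
SHARED_TOTAL = 64  # a competitively shared structure
WATERMARK = 8      # entries reserved for the sibling thread in SMT mode


def max_entries_for_one_thread(active_threads: int) -> dict[str, int]:
    """Upper bound on what a single thread can occupy in the given mode."""
    static = STATIC_TOTAL // 2  # the static split applies in both modes
    if active_threads == 1:
        shared = SHARED_TOTAL  # no sibling, so nothing is held back
    else:
        shared = SHARED_TOTAL - WATERMARK  # must leave the sibling its reserve
    return {"static": static, "shared": shared}


if __name__ == "__main__":
    print("single-thread mode:", max_entries_for_one_thread(1))  # {'static': 16, 'shared': 64}
    print("SMT mode:          ", max_entries_for_one_thread(2))  # {'static': 16, 'shared': 56}
```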