Nvidia earned nearly as much as its next 9 fabless rivals combined last year

Demand for AI set to continue.

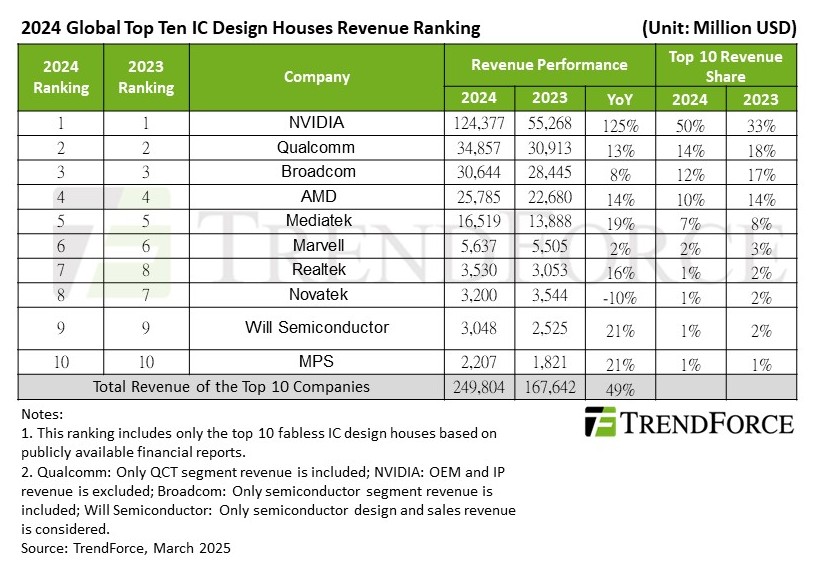

The global semiconductor industry saw explosive growth in 2024, driven mainly by sales of processors for AI applications, according to TrendForce. The 10 largest fabless chip developers earned nearly a quarter of a trillion dollars last year, and roughly half of that sum went to Nvidia.

The 10 largest fabless chip designers generated $249.8 billion in revenue, up 49% from the previous year. Growth was fueled by skyrocketing demand for AI GPUs, ASICs, adjacent chips (e.g., network processors and DPUs), and datacenter CPUs, as well as a recovery in demand for client PCs. Market consolidation also intensified, with the Top 5 firms now accounting for over 90% of the Top 10's combined revenue.

Nvidia stayed at the forefront of the industry and extended its dominance, posting $124.3 billion in revenue (up 125% from 2023) and capturing 50% of the Top 10's combined revenue. Hopper-based H100, H20, and H200 GPUs drove the company's growth, as the Blackwell-based B200, GB200, and B100 only arrived in the fourth quarter. As demand for Blackwell parts, which are believed to be more expensive than Hopper GPUs, increases, they will likely push the green company's revenue even higher this year.

Qualcomm ranked second, earning $34.86 billion, a 13% year-over-year increase. Its growth came from smartphones, the automotive sector, and PCs, which are a new source of revenue for the company. Qualcomm secured a legal victory against Arm, removing the risk that Arm would withdraw its licenses. The company also confirmed its interest in datacenter CPUs, though its entry into this market is likely a few years down the road.

Broadcom held third place, with its semiconductor unit bringing in $30.64 billion, up 8% from the previous year. AI-related products accounted for more than 30% of its semiconductor revenue. Despite a mid-year slump, demand for wireless communication, broadband, and server storage is expected to drive the company's growth in 2025.

AMD followed in fourth, increasing revenue by 14% to $25.79 billion. Its server business surged by 94%, boosting its position in datacenters and the cloud. Strategic partnerships with Dell, Google, and Microsoft are expected to help sustain its momentum, according to TrendForce.

MediaTek secured the fifth spot, with $16.52 billion in revenue, marking a 19% annual increase. The company’s success was driven by mainstream 5G smartphones, power management chips, and AI-related products. Its collaboration with Nvidia on Project Digits positions it for further expansion in 2025 as AI integration in mobile devices increases.


Marvell was the sixth-largest fabless chip designer, with $5.637 billion in revenue, up 2% from the previous year. Realtek moved up to seventh place with $3.53 billion (16% YoY growth), benefiting from a recovery in PC and automotive-related sales. Meanwhile, Novatek dropped to eighth, with revenue declining 10% to $3.2 billion.

Will Semiconductor and MPS (Monolithic Power Systems) rounded out the Top 10, each posting 21% revenue growth to reach $3.05 billion and $2.2 billion, respectively. Will Semiconductor benefited from high-end CMOS image sensors in Android phones and autonomous vehicles, while MPS saw success as its power management chips entered the AI server supply chain.
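As a quick cross-check of the shares quoted above, the short Python sketch below sums the ten revenue figures listed in this article and recomputes Nvidia's share, the Top 5 share, and the "next nine combined" comparison from the headline. It uses only the numbers in the text; the dictionary and function names are illustrative. Because the individual figures are rounded, the total lands at roughly $249.7 billion rather than TrendForce's $249.8 billion.

```python
# 2024 revenue in USD billions, as listed in this article (TrendForce ranking).
# Individual figures are rounded, so the sum differs slightly from the $249.8B total.
REVENUE_2024 = {
    "Nvidia": 124.3,
    "Qualcomm": 34.86,
    "Broadcom": 30.64,
    "AMD": 25.79,
    "MediaTek": 16.52,
    "Marvell": 5.637,
    "Realtek": 3.53,
    "Novatek": 3.2,
    "Will Semiconductor": 3.05,
    "MPS": 2.2,
}


def shares(revenue: dict[str, float]) -> dict[str, float]:
    """Return each company's percentage share of the combined Top 10 revenue."""
    total = sum(revenue.values())
    return {name: 100 * value / total for name, value in revenue.items()}


def top_n_share(revenue: dict[str, float], n: int) -> float:
    """Return the percentage of combined revenue held by the n largest companies."""
    ranked = sorted(revenue.values(), reverse=True)
    return 100 * sum(ranked[:n]) / sum(ranked)


if __name__ == "__main__":
    total = sum(REVENUE_2024.values())
    rest = total - REVENUE_2024["Nvidia"]  # the other nine companies combined
    print(f"Top 10 combined: ${total:.1f}B")
    print(f"Nvidia: ${REVENUE_2024['Nvidia']:.1f}B vs. next nine: ${rest:.1f}B")
    print(f"Nvidia share: {shares(REVENUE_2024)['Nvidia']:.1f}%")
    print(f"Top 5 share: {top_n_share(REVENUE_2024, 5):.1f}%")
```

Running it shows Nvidia just under 50% of the Top 10 total and the Top 5 at roughly 93%, consistent with the "roughly half" and "over 90%" figures above.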

TrendForce expects AI to drive growth in various sectors, from datacenters to personal devices, in 2025.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


emike09

Yet they still gave us the RTX 5000 series. You'd think the last two years of excellent sales would have spurred a bigger push in R&D. Here's hoping the 6000 series takes advantage of this massive capital and we can have a repeat of the 3000 series: a huge jump in performance and a drop in price compared to the 2000 series.

cyrusfox (replying to emike09)

I truly think they will only ever be priced against the competition, and since they have none, the moon is where pricing will continue to be unless either AMD or Intel can begin to vie for the high end.

DS426 (replying to emike09)

R&D??? That's not the problem; it's QA/QC and allocating supplies to markets other than AI. And nVidia wasn't being generous in "giving us the RTX 5000 series." It's possibly the worst gaming dGPU launch nVidia has ever had, and if anything, AMD has closed the gap... even while "only going mid-tier" this generation.

There's nothing to learn: it's always going to follow the money trail. nVidia wouldn't have the highest tech market cap in the world if it were some kind of "righteous" tech or gaming company; they maximized their potential as a core dGPU company, building a foundation with CUDA and the professional dev and enterprise community, and so here we are today.

Sorry bud, and no offense to nVidia fans, but I think a lot of mindsets are far from practical life.

renz496 (replying to emike09)

Process node. The 50 series is still on the same node as the 40 series. Cheaper? TSMC keeps raising the price of its mature nodes. 5nm is not even its most bleeding-edge node, and we still see price increases on it.

TheOtherOne

If any poor little casual gamer like me had any hopes of "decent" priced gaming GPUs, now you know why those good old days are NEVER coming back. 😞

blppt (replying to emike09)

We might be reaching a point where this type of rendering architecture just can't provide any massive gains anymore without ridiculous power consumption; we might need some kind of revolutionary product.

hannibal

The AI market will keep growing, and production capacity won't increase in the next few years...

So no price cuts for at least 5 years!

But we may see bigger increases in speed along with bigger increases in price...

bit_user (replying to blppt)

They are trying to do it via AI and neural rendering technologies. The technology is still getting its legs under it. DLSS is just the start.

It doesn't help that many people are using ridiculously high-res 4K+ displays, which are really more than you need for gaming. So it's no wonder Nvidia and AMD don't want to directly compute all of those pixels: it's not necessary and not an efficient use of silicon.

blppt (replying to bit_user)

Not good enough. I want a QUANTUM COMPUTING based GPU. ;)