Thunderbird packs up to 6,144 CPU cores into a single AI accelerator and scales up to 360,000 cores — InspireSemi's RISC-V 'supercomputer-cluster-on-a-chip' touts higher performance than Nvidia GPUs

InspireSemi preps 4-way Thunderbird card with up to 6,144 RISC-V cores.

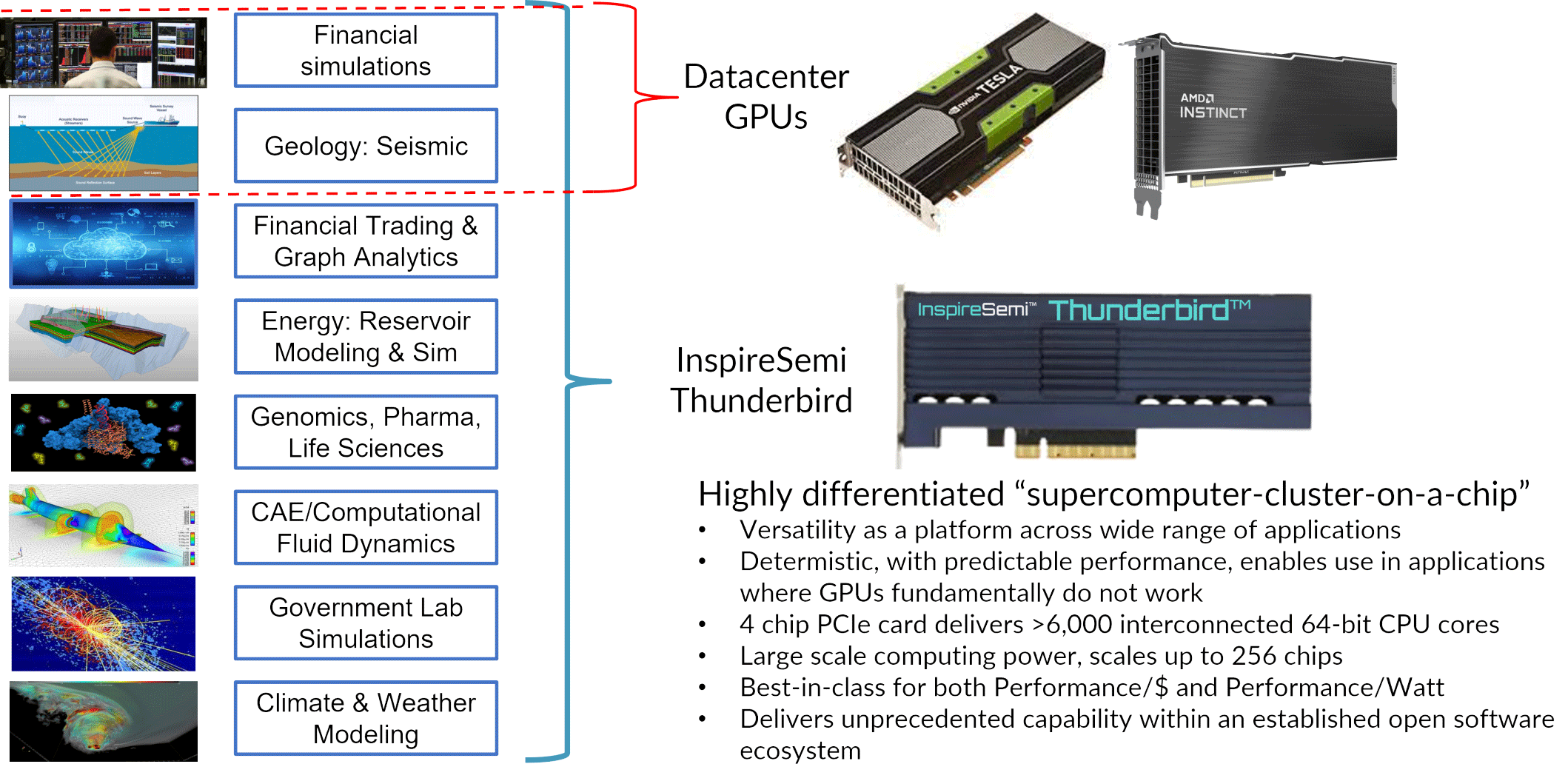

The Holy Grail of supercomputing chip design is an architecture that combines the versatility and programmability of CPUs with the explicit parallelism of GPUs, and InspireSemi is striving to achieve just that. InspireSemi's Thunderbird 'supercomputer-cluster-on-a-chip' packs 1,536 RISC-V cores designed specifically for high-performance computing, yet it supports a general-purpose CPU programming model. It is also highly scalable: four chips can be placed on a single accelerator card in a standard GPU-like add-in-card (AIC) form factor, bringing the total core count per card to 6,144, with clusters scaling to as many as 360,000 cores.

InspireSemi's Thunderbird processor packs 1,536 custom 64-bit superscalar RISC-V cores with plenty of high-performance SRAM, accelerators for several cryptography algorithms, and an on-chip low-latency mesh fabric for inter- and intra-chip connectivity. The chip also supports LPDDR memory, NVMe storage, PCIe, and GbE connectivity. It has been taped out and will be fabbed at TSMC, then packaged at ASE.

InspireSemi aims to install four Thunderbird chips on a single board to offer developers 6,144 RISC-V cores. The current Thunderbird architecture supports scaling out to as many as 256 processors connected via high-speed serial transceivers.
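As a quick sanity check on the core counts quoted here, the arithmetic below uses only the article's own figures (1,536 cores per chip, four chips per card, up to 256 chips, 360,000 cores per cluster). It is a back-of-the-envelope sketch, not InspireSemi's sizing: the four-chip card works out to 6,144 cores as stated, while 256 chips would give 393,216 cores, so the 360,000-core figure may describe a smaller practical configuration. The article does not say which.

```python
# Core-count arithmetic using only the figures quoted in the article.
CORES_PER_CHIP = 1_536        # Thunderbird I
CHIPS_PER_CARD = 4            # four-way add-in card
MAX_CHIPS_SCALE_OUT = 256     # stated scale-out limit
QUOTED_CLUSTER_CORES = 360_000

cores_per_card = CORES_PER_CHIP * CHIPS_PER_CARD
cores_at_max_scale_out = CORES_PER_CHIP * MAX_CHIPS_SCALE_OUT
# How many chips the 360,000-core figure corresponds to (not a whole number).
chips_for_quoted_cluster = QUOTED_CLUSTER_CORES / CORES_PER_CHIP

print(f"cores per card:          {cores_per_card:,}")
print(f"cores at 256 chips:      {cores_at_max_scale_out:,}")
print(f"chips for 360,000 cores: {chips_for_quoted_cluster:.1f}")
```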

When it comes to performance, InspireSemi says that its solution offers up to 24 FP64 TFLOPS at 50 GFLOPS/W (at 480W), which is formidable. To put this into context, Nvidia's A100 delivers 19.5 FP64 TFLOPS, whereas Nvidia's H100 reaches 67 FP64 TFLOPS (both figures are for FP64 on tensor cores). It's unclear whether these numbers describe a single Thunderbird chip or the 4-way card. If each chip delivered 24 FP64 TFLOPS at 480W, a four-chip card would draw 1920W, which is hardly feasible for an add-in card, so the 24 FP64 TFLOPS figure most likely applies to the whole four-chip card, or roughly 6 FP64 TFLOPS and 120W per chip.
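The power argument above comes down to one relation: power equals throughput divided by efficiency. The sketch below assumes the 24 TFLOPS and 50 GFLOPS/W figures describe the same configuration and compares the two possible readings (whole card versus per chip); the variable names are illustrative only.

```python
# Back-of-the-envelope check of the FP64 figures quoted in the article.
TOTAL_FP64_TFLOPS = 24.0        # InspireSemi's quoted peak
EFFICIENCY_GFLOPS_PER_W = 50.0  # InspireSemi's quoted efficiency
CHIPS_PER_CARD = 4

def power_watts(tflops: float, gflops_per_watt: float) -> float:
    """Power implied by a throughput (TFLOPS) and an efficiency (GFLOPS/W)."""
    return tflops * 1_000 / gflops_per_watt  # 1 TFLOPS = 1,000 GFLOPS

implied_power = power_watts(TOTAL_FP64_TFLOPS, EFFICIENCY_GFLOPS_PER_W)

# Reading A: the 24 TFLOPS / 480 W figure covers the whole four-chip card.
per_chip_tflops = TOTAL_FP64_TFLOPS / CHIPS_PER_CARD
per_chip_watts = implied_power / CHIPS_PER_CARD

# Reading B: 24 TFLOPS / 480 W per chip, with four chips on the card.
card_watts_if_per_chip = implied_power * CHIPS_PER_CARD

print(f"implied power:         {implied_power:.0f} W")
print(f"reading A, per chip:   {per_chip_tflops:.1f} TFLOPS at {per_chip_watts:.0f} W")
print(f"reading B, whole card: {card_watts_if_per_chip:.0f} W")
```

Reading B's card-level figure is what makes the per-chip interpretation implausible for a standard add-in card.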

The superscalar cores support vector tensor operations and mixed-precision floating point data formats, though there is no word on whether these cores are Linux-capable, which is why InspireSemi calls Thunderbird an accelerator rather than a general-purpose processor. Still, this processor can be programmed like a regular RISC-V CPU and supports a variety of workloads, such as AI, HPC, graph analytics, blockchain, and other compute-intensive applications. As a result, InspireSemi's customers will not have to use proprietary tools or software stacks like Nvidia's CUDA. The only question is whether industry-standard tools and software stacks will be enough to extract maximum performance from the Thunderbird I in all kinds of workloads.

"We are proud of the accomplishment of our engineering and operations team to finish the Thunderbird I design and submit it to our world-class supply chain partners, TSMC, ASE, and imec for production," said Ron Van Dell, CEO of InspireSemi. "We expect to begin customer deliveries in the fourth quarter."

Speaking of customers and partners, InspireSemi has a long list of companies it works with, including Lenovo, Penguin Computing, 2CRSI, World Wide Computing, GigaIO, Cadence, and GUC, just to name a few.


"This is a major milestone for our company and an exciting time to be bringing this versatile accelerated computing solution to market," said Alex Gray, Founder, CTO, and President of InspireSemi. "Thunderbird accelerates many critical applications in important industries that other approaches do not, including life sciences, genomics, medical devices, climate change research, and applications that require deep simulation and modeling."

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

The article said: InspireSemi's Thunderbird 'supercomputer-cluster-on-a-chip' packs 1,536 RISC-V cores designed specifically for high-performance computing

The downside of this approach is that every one of those cores needs to fetch instructions from memory and then spend energy decoding them. Furthermore, to the extent they're executing the same kernels, all those instruction caches holding essentially the same contents are also wasted area.

That's why GPUs use wide SIMD, with up to 128x 32-bit = 4096-bit for Nvidia's HPC/AI GPUs (though my info could be out of date). In AMD's case, I think MI200 and later are using 64x 64-bit, for what's effectively 4096-bit SIMD. MI100 and GCN were 64x 32-bit.
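The instruction fetch/decode point can be illustrated with a deliberately simplified model: count how many instructions must be fetched and decoded to run the same kernel over many independent elements, when each fetched instruction drives one lane (a scalar core) or a whole SIMD group. The functions and workload numbers below are hypothetical. The model ignores caches, superscalar issue, and branch divergence, and it ignores any vector units the Thunderbird cores may have (the article says they support vector operations), which would raise their lanes per instruction above one.

```python
import math

def simd_width_bits(lanes: int, bits_per_lane: int) -> int:
    """Total SIMD width, e.g. 128 lanes x 32 bits = 4096 bits."""
    return lanes * bits_per_lane

def instruction_fetches(n_elements: int, kernel_len: int, lanes: int) -> int:
    """Fetch/decode events to run a `kernel_len`-instruction kernel over
    `n_elements` independent elements when each instruction drives `lanes`
    lanes at once (toy model: no caching, divergence, or superscalar issue)."""
    groups = math.ceil(n_elements / lanes)
    return groups * kernel_len

# Hypothetical workload: one million elements, 100-instruction kernel.
N, K = 1_000_000, 100
for label, lanes in [("scalar core", 1), ("32-lane group", 32), ("64-lane group", 64)]:
    print(f"{label:>14}: {instruction_fetches(N, K, lanes):,} fetches")
```

Under these assumptions, the fetch/decode count falls in proportion to the lane count, which is the efficiency argument for wide SIMD.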

The article said: InspireSemi says that its solution offers up to 24 FP64 TFLOPS at 50 GFLOPS/W

Okay, but AI doesn't use fp64.

This thing is seemingly hearkening back to the approach taken by Intel's failed Xeon Phi, except that they presumably get some benefit from RISC-V having less baggage than x86. Good luck to them, but I remain skeptical.

Their best-case scenario might be if Nvidia, AMD, and Intel all turn their backs on the HPC market to focus on AI. Then their general-purpose programmability and heavy fp64 horsepower might actually count for something.

KnightShadey

bit_user said: Okay, but AI doesn't use fp64.

Yes, it does, only it's mainly edge cases, not where all the focus is. Nor is it the sole focus of these designs, just an option.

Which is where anyone trying to compete with AMD, Google, & nV should be looking to make their mark/money.... where they aren't. 🤔

bit_user said: This thing is seemingly hearkening back to the approach taken by Intel's failed Xeon Phi, except that they presumably get some benefit from RISC-V having less baggage than x86. Good luck to them, but I remain skeptical.

No, it's almost an exact copy of Tenstorrent's design (just likely similar to what their next generation would be, core/cluster-wise), which makes sense timeframe-wise. They are another group that focuses on the Edge case scenario to succeed, with a bunch of former AMD, Apple, Intel, & Nvidia folks at the helm (sometimes all in the same person😉).

If you're unfamiliar with them, Anand did a great interview with the founder/CEO Ljubisa Bajic & CTO Jim Keller (both former AMD colleagues). Jim's a big name in chip design:

https://www.anandtech.com/show/16709/an-interview-with-tenstorrent-ceo-ljubisa-bajic-and-cto-jim-keller

And THG covered the architecture in an earlier article:

https://www.tomshardware.com/news/tenstorrent-shares-roadmap-of-ultra-high-performance-risc-v-cpus-and-ai-accelerators

SemiAnalysis also has a good breakdown of the benefits and of the fringe applications they are focusing on (yet they already have large orders from LG, etc.):

https://www.semianalysis.com/p/tenstorrent-wormhole-analysis-a-scale

https://www.semianalysis.com/p/tenstorrent-blackhole-grendel-and

-

bit_user

KnightShadey said: Yes, it does, only it's mainly edge cases, not where all the focus is.

Source?

KnightShadey said: Nor is it the sole focus of these designs, just an option.

How is it not a focus, when they literally compared their fp64/W to Nvidia's A100? That sure sounds like they were making it a focus!

Furthermore, fp64 is key to many of their specified use cases:

KnightShadey said: Which is where anyone trying to compete with AMD, Google, & nV should be looking to make their mark/money, where they aren't. 🤔

As mentioned in the article, Nvidia's Hopper is no slouch on fp64! AMD's MI250X briefly took a lead on fp64, before Nvidia's H100 recaptured the crown. It's telling that they compared Thunderbird to the previous generation (A100), at a time when Nvidia is on the verge of launching their next generation (Blackwell). That shows InspireSemi is well aware they're not competitive on fp64.

KnightShadey said: No, it's almost an exact copy of Tenstorrent's design (just likely similar to what their next generation would be core/cluster wise),

LOL, no. Their design has an order of magnitude more RISC-V cores than Tenstorrent has Tensix cores! That can only be true if their cores are much narrower. I'll bet they're also lacking direct-mapped SRAM like Tenstorrent and Cerebras use, which puts them at a substantial efficiency disadvantage, because cache is a lot less energy-efficient than software-managed SRAM.

KnightShadey said: They are another group that focuses on the Edge case scenario to succeed,

Exactly which edge case scenarios is Tenstorrent focusing on?

KnightShadey said: If you're unfamiliar with them, Anand did a great interview with the founder/CEO Ljubisa Bajic & CTO Jim Keller

I read it. The whole thing. Both interviews he did with Jim around that time, actually.

KnightShadey said: yet already have large orders from LG etc.)

I heard about the LG deal, like a year ago. Any newer news?

I've read a lot of nice stuff about Tenstorrent. I hope they are successful, though I also hope they don't backtrack on their promises to open-source their software and tools.

KnightShadey

bit_user said: Source?

See above post.

No one said preferred or exclusive, but again, also NOT 'not used' as you claim.🧐

However, to sum up, like yesterday.... You Disagree.

M'Kay, Thanks! 😎🤙

Like yesterday, you're probably running at maybe about 50% on your assumptions/claims, but that may be generous. 🤨

bit_user

KnightShadey said: See above post. No one said preferred or exclusive, but again, also NOT 'not used' as you claim.🧐

Again, please provide a source to support the assertion that fp64 is used in AI... or drop the point.

KnightShadey said: However, to sum up, like yesterday.... You Disagree. M'Kay, Thanks! 😎🤙

I kinda feel like you're trying to diminish my position by casting me as the dissenter, when mine was the original post.

Also, I asked you some questions and to support some of your claims, which (for the record) you've not done. That's fine, but it should be noted.

KnightShadey said: Like yesterday, you're probably running at maybe about 50% on your assumptions/claims, but that may be generous. 🤨

This is not a constructive statement. You're free to take issue with anything I've said, but to cast aspersions without specifics is a pretty low-brow tactic.

KnightShadey

bit_user said: I kinda feel like you're trying to diminish my position by casting me as the dissenter, when mine was the original post.

Casting you as the dissenter? But darling, YOU ARE the original dissenter...

"Okay, but AI doesn't use fp64." 🧐

Which you never provided exhaustive supporting evidence for. Yet feel you can compel others to do so? 🤨

So to be clear, and to further summarize your position: You Disagree with Tom's, Tenstorrent, InspireSemi, and myself.

Okay, Thanks ! 🤠🤙

bit_user

KnightShadey said: Casting you as the dissenter? But darling, YOU ARE the original dissenter... "Okay, but AI doesn't use fp64." 🧐

Oh, am I? That statement wasn't disagreeing with anything - it was merely stating a relevant fact. There was no actual point in contention, until you contradicted it.

KnightShadey said: Which you never provided exhaustive supporting evidence for.

As you'll know, one can't prove a negative. However, let's look at some examples of AI accelerators lacking fp64.

Tenstorrent - "On Grayskull this is a vector of 64 19 bit values while on Wormhole this is a vector of 32 32 bit values."

Cerebras - "The CE’s instruction set supports FP32, FP16, and INT16 data types"

Habana/Intel's Gaudi - "The TPC core natively supports the following data types: FP32, BF16, INT32, INT16, INT8, UINT32, UINT16 and UINT8."

Movidius/Intel's NPU (featured in Meteor Lake) - "Each NCE contains two VLIW programmable DSPs that supports nearly all data-types ranging between INT4 to FP32."

AMD's XDNA NPU (based on Xilinx Versal cores) - "Enhanced DSP Engines provide support for new operations and data types, including single and half-precision floating point and complex 18x18 operations."

Not to mention that Nvidia's inferencing-oriented L4 and L40 datacenter GPUs implement fp64 at just 1:64 relative to their vector fp32 support (plus no fp64 tensor support). That's almost certainly there as a vestige of their client GPUs' scalar fp64 support, which is needed for the odd graphics task like matrix inversion.

I don't know about you, but I'd expect fp64 support to be a heck of a lot more prevalent in so many purpose-built AI accelerators and AI-optimized GPUs, if it were at all relevant for AI. Instead, what we see is that even training is mostly using just 16-bits or less!
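To make the precision gap concrete, the sketch below measures machine epsilon (the gap between 1.0 and the next representable value) for fp16, bfloat16, fp32, and fp64 by round-tripping values through Python's `struct` module. bfloat16 has no `struct` format code, so it is emulated here, as an assumption, by rounding the fp32 bit pattern to its top 16 bits (round-to-nearest-even, with NaN and overflow handling omitted). It only illustrates how much coarser the 16-bit formats common in AI are than fp64; it says nothing about any particular accelerator's arithmetic.

```python
import struct

def to_fp16(x: float) -> float:
    """Round-trip through IEEE 754 binary16 (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round-trip through IEEE 754 binary32 (struct format 'f')."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_bf16(x: float) -> float:
    """Emulated bfloat16: keep the top 16 bits of the fp32 encoding,
    rounding to nearest even (NaN/overflow handling omitted)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round-to-nearest-even bias
    bits &= 0xFFFF0000                   # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def to_fp64(x: float) -> float:
    """Python floats are already IEEE 754 binary64."""
    return x

def machine_epsilon(round_trip) -> float:
    """Smallest power of two eps for which 1 + eps survives the round trip."""
    eps = 1.0
    while round_trip(1.0 + eps / 2) != 1.0:
        eps /= 2
    return eps

for name, fn in [("fp16", to_fp16), ("bf16", to_bf16),
                 ("fp32", to_fp32), ("fp64", to_fp64)]:
    print(f"{name}: epsilon = {machine_epsilon(fn):.3e}")
```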

KnightShadey said: Yet feel you can compel others to do so? 🤨

Hey, you're the one who volunteered the oddly specific claim:

"Yes, it does, only it's mainly edge cases"

That seems to suggest specific knowledge of these "edge cases" where it's needed. If you don't actually know what some of those "edge cases" are, then how are you so sure it's needed for them?

KnightShadey said: So to be clear, and to further summarize your position: You Disagree with Tom's, Tenstorrent, InspireSemi, and myself. Okay, Thanks ! 🤠🤙

As I said, I'm not in disagreement with them over this. In your zeal to score internet points, it seems you didn't take the time to digest what the article relayed about InspireSemi's strategy:

"This is a major milestone for our company and an exciting time to be bringing this versatile accelerated computing solution to market," said Alex Gray, Founder, CTO, and President of InspireSemi. "Thunderbird accelerates many critical applications in important industries that other approaches do not,

So, they are taking a decidedly generalist approach, much more like Xeon Phi than Tenstorrent's chips. This is underscored by their point that:

"this processor can be programmed like a regular RISC-V CPU and supports a variety of workloads, such as AI, HPC, graph analytics, blockchain, and other compute-intensive applications. As a result, InspireSemi's customers will not have to use proprietary tools or software stacks like Nvidia's CUDA."

Above, I linked to Tenstorrent's TT-Metallium SDK. Writing compute kernels to run on their hardware requires specialized code, APIs, and tools, which is quite contrary to InspireSemi's pitch.

KnightShadey

bit_user said: Oh, am I? That statement wasn't disagreeing with anything - it was merely stating a relevant fact. There was no actual point in contention, until you contradicted it.

No, it's not a fact, and again, you obviously didn't bother reading about where it can be useful, as instructed, even though it has been pointed out to you before. You also didn't bother to at least look at InspireSemi's own material which specifically references it towards the end. 🧐

bit_user said: As you'll know, one can't prove a negative. However, let's look at some examples of AI accelerators lacking fp64.

Let's not, and focus on the fact that pointing to the typical use does not prove that it is the ONLY way.

AGAIN, if you even bothered to follow the provided links, or even go to the end of InspireSemi's own material (instead of lazily cutting & pasting Tom's brief slice), you would know the very specific areas where they think they can leverage their HPC-centric design towards AI with the use of higher precision. This very thing has been brought to your attention before, and you ignored it.

Even in your previous discussion with CmdrShprd the other day, you show that if people provide you evidence you dismiss it for any reason you can think of, including questioning the validity of the people involved or the examples provided.

Which is why I pointed out that it was for those exceptions to the rule, which you flew by in your default mode. You did that 2 days ago as well, with equally arrogant ignorance of the discussion to cut & paste your default assumptions as if they were fact, YET AGAIN, which were then shown to be incorrect, and Once Again, MISSING THE POINT. 🙃

bit_user said: That seems to suggest specific knowledge of these "edge cases" where it's needed. If you don't actually know what some of those "edge cases" are, then how are you so sure it's needed for them?

I have specific knowledge of those Edge cases, and have even mentioned them to you before, because AGAIN I bothered to read and understand the material in their presentation as well as that provided to you.

bit_user said: it seems you didn't take the time to digest what the article relayed about InspireSemi's strategy:

So, they are taking a decidedly generalist approach, much more like Xeon Phi than Tenstorrent's chips.

No, it is clear you didn't even understand the reason they take that generalist approach. Folks like AMD & nV have very specialized designs that squeeze every last ounce of performance out of their much more advanced silicon for very specific, targeted tasks. So InspireSemi specifically mentions how they can take that jack-of-all-trades thinking and apply it to the edge cases where they can make their mark/money, without worrying about AMD & Nvidia beating them by packing more transistors into their 3/5nm processes versus Inspire's use of TSMC 12nm. They are specifically mentioning the very small boundary scenarios they can exploit with their design, even in AI, because they can go where AMD & Nvidia ain't, and where those companies can't simply follow with their money and TSMC relationship advantages.

Again, Exploiting the Edge Cases! 🧐

As before, YOU COMPLETELY MISSED THE POINT. 🙄

sitehostplus

I think we should wait to see how it works in the real world before judging anything.

With all that alleged power, there has to be a downside we can't see until it's out in the wild actually doing stuff.

KnightShadey

bit_user said: As I said, I'm not in disagreement with them over this. In your zeal to score internet points

Dude, you're the one trying to score internet points, to quote: "(for the record) ... it should be noted." I mean, who even talks like that unless they are solely concerned about scoring points? 🤨

So to summarize YOU: Your assumptions are unquestionable facts, everyone else must be wrong and have ulterior motives if you disagree. 🤡 M'kay, Thanks ! 🥸🤙