Unreal Engine 5.6 up to 30% faster than the infamously bad version it succeeds -- better graphics fidelity promised, too

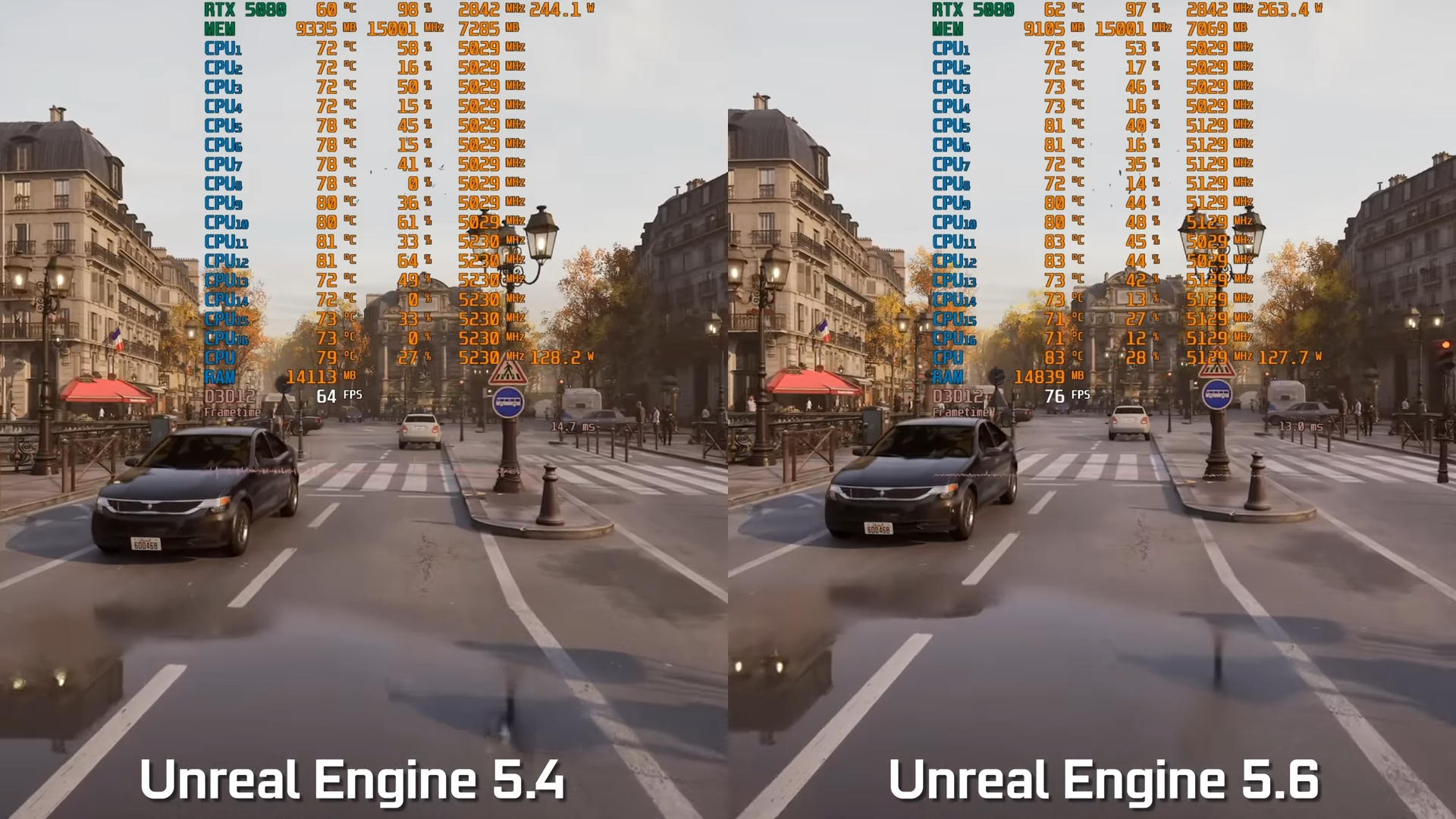

Unreal Engine 5.6 has been benchmarked, revealing up to an impressive 30% performance gain while boosting graphics fidelity over Unreal Engine 5.4, perhaps finally addressing many of the engine's infamous stuttering issues. MxBenchmarkPC on YouTube showcased an Unreal Engine Paris tech demo running on an RTX 5080 and Core i7-14700F, comparing the 5.6 and 5.4 versions of the engine against each other at 1440p and 4K resolutions.

The YouTuber provided five runs featuring direct comparisons between engine versions, with several standalone runs mixed in. The first two runs involved moving benchmarks featuring a walk around the streets of Paris. The first run was benchmarked at 1440p, while the second was run at 720p to demonstrate a CPU-limited scenario.

In the first run, Unreal Engine 5.6 was 22% faster than version 5.4; additionally, CPU usage dropped by around 17% on average across all 16 threads (of the 14700F's 8 P-cores) with version 5.6. The 720p run showed even greater gains for Unreal Engine 5.6, which outperformed version 5.4 by a whopping 30%. The last three runs (with direct comparisons of 5.6 vs. 5.4) involved static shots of different areas of the city. These three runs were anywhere from 15% to 22% faster on Unreal Engine 5.6 than on version 5.4.

The Paris demo also showcased improved environmental and object lighting in most scenes. Interior scenes are noticeably darker, with chairs and tables gaining extra shadowing in 5.6 over 5.4. The improved lighting fidelity gives the demo a more photorealistic look in version 5.6, while version 5.4's lighting looks more "gamified" by contrast.

Version 5.6's large performance improvement can be attributed to several updates the developers made to the engine, including offloading more work from the CPU to the GPU for its Lumen global illumination system and introducing the Fast Geometry Streaming plugin, which improves open-world loading speeds. Unreal Engine 5.6 is primarily a performance-focused release targeting 60 FPS with hardware ray tracing on the latest consoles, high-end PCs, and powerful mobile devices.

We have yet to see any games (beyond Fortnite, allegedly) taking advantage of Unreal Engine 5.6. But this new update provides the best opportunity yet for the engine to rid itself of the infamous stuttering issues plaguing many Unreal Engine 5 titles.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

VizzieTheViz: That's all well and good, but can this be used to fix games that are already out there on older versions of Unreal Engine 5, or is it just for new games?

Even if it can be backported to current games, we're still at the mercy of developers and whether they take the time to do so.

Well, at least future games on this engine might hopefully run better.

abufrejoval, replying to VizzieTheViz: Nothing automatic, I'm afraid; whether existing games will be upgraded depends on their vendors.

My main game, ARK: Survival Ascended, launched late in '23 as one of the first UE5 titles and has just been upgraded to 5.5 in a major effort. If I remember correctly, they are planning to do one engine upgrade a year this time around.

The previous variant, ARK: Survival Evolved, launched in '17 as one of the earliest UE4 titles and never saw an engine update AFAIK. Mostly, I believe, that was because they used a private fork, and there was a dev kit out there built on it for tons of third-party extensions... which could then break.

I've just tried to give UE 5.6 a spin, but so far there are no DLSS plug-ins for 5.6 from Nvidia, and most of the typical demos (e.g. City Sample) haven't been updated to 5.6 either. So doing an A/B comparison isn't trivial for someone like me, who has only ever spent a few hours compiling and running the demos and never dove into real game development.

From the idiot level where I am at, upgrading from 5.4 to 5.6 doesn't seem to involve rewriting the game per se; it seems to center mostly on optimizations, not ground-up new features and technology. But given that even the Unreal demos aren't simply compatible with 5.6 from day one, it has to be more involved than just a few clicks.

hwertz: Great that they're getting UE5 up to speed. It seems like a pig compared to UE4, and I don't buy that every single UE5 game is just unoptimized and uses so many more resources than any UE4 game. Good on them for addressing the speed issues.

Disappointing that it's some major effort to switch, though. It would have been nice if (as long as they didn't customize the engine) one could just pull down 5.6, load up the UE5 game's project files in it, and let it build, done and done.

abufrejoval: Just doing an initial project open/compile on the ElectricDreams demo... probably half an hour so far...

And that's my 24x7 workstation/VM server host, a 5950X 16-core with 128GB of RAM.

I guess I know who's buying 96-core Threadrippers; at least it's using all the cores for compiles...

abufrejoval: Valley of the Ancient with 80-100 FPS at 4K on an RTX 5070 without DLSS?

I don't remember seeing that sort of performance on a midrange card before...

Electric Dreams is only 30 FPS, and 55 FPS at 1080p, so that's much more modest.

I guess I'll want to try the 7950X3D and the RTX 4090 next...

Elf_Boy: It is nice to see new tech performing.

Sadly, as nice as that is, it means nothing to me because I am on an AMD system, and just because NV does well (or not well) doesn't mean the same holds on AMD. I wish reviewers would remember to check all sides, including Intel, with stuff like this.

For all we know, AMD/Intel do better or worse. We know there has traditionally been a bias in the industry to optimize more for NV than for others.

abufrejoval, replying to Elf_Boy: I don't have an AMD card on hand to check, but those demos normally run without any type of DLSS or frame gen at all; you'd have to include and activate accelerator libraries to support them.

I've done that in the past, but last time I checked, DLSS plug-ins weren't ready for 5.6 yet; no idea what the AMD situation is.

Now, would games on AMD benefit in a similar manner?

I'm positive that Unreal would do their utmost to make that happen. Remember, their main market is consoles, and those tend to be AMD.

It's quite literally their business to abstract from the hardware and squeeze the maximum performance out of each. Of course they can't do it all alone, but they'll try their best to make it happen, because otherwise people would use other engines.

abufrejoval, replying to Elf_Boy's wish that reviewers would check all sides, including Intel: Pretty sure that will happen eventually. This is still very early days.

But of course everybody follows the money, and the effort isn't trivial, as these GPU-specific plug-ins can have severe side effects and kill your game.

Most game vendors would prefer not to have to care at all, and Valve would love to make that happen, but GPU vendors want to push their USPs...

It sucks when you have to wait longer or are even left behind. But without that cut-throat competition, we would still be playing Pong.

hwertz, replying to abufrejoval: Dang, that's a hefty build. I suppose the optimizer in the compiler probably goes absolutely nutso looking for optimizations in that code.

I've found CPU emulators drive the compiler the most nuts, but 3D code and anything involving matrix math give the compiler a nice workout too. I imagine it checks whether it can use MMX/SSE/AVX/etc. instructions to speed things up, looks for potential speedups from loop unrolling, sees whether reordering instructions could yield some massive speedup, checks whether some array is being looped through top-to-bottom when left-to-right would be much faster (or vice versa), and so on. I mean, the compiler 'always' checks for those things, but for a lot of code it can probably see right away that they don't apply and skip them; with the kind of work 3D code does, it probably has to carefully run through every single check.

abufrejoval, replying to hwertz: That Unreal "compilation" isn't your ordinary C compiler most of the time. First of all, most "coding" in Unreal isn't code in the traditional sense, but visual assembly via Blueprints. And lots of game data is just that, data. Yet it still needs to be converted from some abstract format that is as machine independent as possible into something that runs devilishly fast on the target platform underneath. Good chance that includes generating abstract shader language that then again needs to be compiled into a binary format for the hardware.

I haven't really gone deep; I was mostly interested to see if I could easily plug in external code, e.g. to make Dinos in Ark send what they saw with their eyes to some machine learning model and then translate its recognitions into behavior and actions.

Turned out that couldn't be done in a weekend or three, so I stopped there, but it was still amazing to see and dabble.

On the point about vector instructions, I completely agree: modern CPUs have single vector instructions that would require pages and pages of normal C code to emulate functionally, or some RTL or similar format to describe. Even if there is a rough functional equivalent, you can't just translate from one to another.

That's why Unreal attempts to start with a very high abstraction, which has a perhaps better defined visual goal than those HPC applications you were trying to emulate.

And then it does the magic that makes it so valuable.

It's all open and free to check out, so if you find the time, have a go! Better than lots of doom-scrolling...